Chia-Yi Hsu

VP-NTK: Exploring the Benefits of Visual Prompting in Differentially Private Data Synthesis

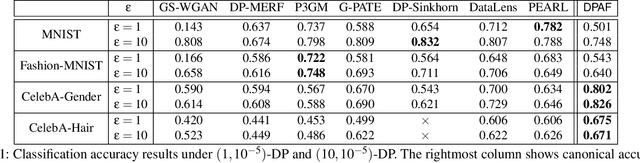

Mar 20, 2025

Abstract:Differentially private (DP) synthetic data has become the de facto standard for releasing sensitive data. However, many DP generative models suffer from the low utility of synthetic data, especially for high-resolution images. On the other hand, one of the emerging techniques in parameter efficient fine-tuning (PEFT) is visual prompting (VP), which allows well-trained existing models to be reused for the purpose of adapting to subsequent downstream tasks. In this work, we explore such a phenomenon in constructing captivating generative models with DP constraints. We show that VP in conjunction with DP-NTK, a DP generator that exploits the power of the neural tangent kernel (NTK) in training DP generative models, achieves a significant performance boost, particularly for high-resolution image datasets, with accuracy improving from 0.644$\pm$0.044 to 0.769. Lastly, we perform ablation studies on the effect of different parameters that influence the overall performance of VP-NTK. Our work demonstrates a promising step forward in improving the utility of DP synthetic data, particularly for high-resolution images.

Layer-Aware Task Arithmetic: Disentangling Task-Specific and Instruction-Following Knowledge

Feb 27, 2025

Abstract:Large language models (LLMs) demonstrate strong task-specific capabilities through fine-tuning, but merging multiple fine-tuned models often leads to degraded performance due to overlapping instruction-following components. Task Arithmetic (TA), which combines task vectors derived from fine-tuning, enables multi-task learning and task forgetting but struggles to isolate task-specific knowledge from general instruction-following behavior. To address this, we propose Layer-Aware Task Arithmetic (LATA), a novel approach that assigns layer-specific weights to task vectors based on their alignment with instruction-following or task-specific components. By amplifying task-relevant layers and attenuating instruction-following layers, LATA improves task learning and forgetting performance while preserving overall model utility. Experiments on multiple benchmarks, including WikiText-2, GSM8K, and HumanEval, demonstrate that LATA outperforms existing methods in both multi-task learning and selective task forgetting, achieving higher task accuracy and alignment with minimal degradation in output quality. Our findings highlight the importance of layer-wise analysis in disentangling task-specific and general-purpose knowledge, offering a robust framework for efficient model merging and editing.
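As a rough illustration of the idea in the abstract above, the sketch below forms a task vector as the difference between fine-tuned and pre-trained weights and then applies a separate scaling coefficient to each layer. The function names, the dict-of-lists weight layout, and the coefficient values are all hypothetical. The abstract does not say how LATA derives its layer weights, so here they are simply passed in by hand.

```python
def task_vector(pretrained, finetuned):
    """Per-layer difference: fine-tuned weights minus pre-trained weights."""
    return {
        layer: [f - p for f, p in zip(finetuned[layer], pretrained[layer])]
        for layer in pretrained
    }


def apply_layerwise(pretrained, vector, layer_weights):
    """Add a task vector to the base weights, scaled separately per layer.

    A positive weight adds (learns) the task, a negative weight subtracts
    (forgets) it, and a weight of 0 leaves that layer untouched.
    Layers missing from layer_weights default to 0.
    """
    return {
        layer: [p + layer_weights.get(layer, 0.0) * v
                for p, v in zip(pretrained[layer], vector[layer])]
        for layer in pretrained
    }


# Toy two-layer "model" with flattened weights (hypothetical values).
base = {"layer0": [1.0, 2.0], "layer1": [0.5, -0.5]}
tuned = {"layer0": [1.5, 2.0], "layer1": [1.5, 0.5]}

tv = task_vector(base, tuned)
# Attenuate layer0 entirely and keep layer1 at full strength
# (an illustrative choice, not a value taken from the paper).
merged = apply_layerwise(base, tv, {"layer0": 0.0, "layer1": 1.0})
```

With uniform weights across layers this reduces to plain task arithmetic; the per-layer dictionary is the only place where a layer-aware scheme would differ.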

BadTV: Unveiling Backdoor Threats in Third-Party Task Vectors

Jan 04, 2025

Abstract:Task arithmetic in large-scale pre-trained models enables flexible adaptation to diverse downstream tasks without extensive re-training. By leveraging task vectors (TVs), users can perform modular updates to pre-trained models through simple arithmetic operations like addition and subtraction. However, this flexibility introduces new security vulnerabilities. In this paper, we identify and evaluate the susceptibility of TVs to backdoor attacks, demonstrating how malicious actors can exploit TVs to compromise model integrity. By developing composite backdoors and eliminating redundant clean tasks, we introduce BadTV, a novel backdoor attack specifically designed to remain effective under task learning, forgetting, and analogy operations. Our extensive experiments reveal that BadTV achieves near-perfect attack success rates across various scenarios, significantly impacting the security of models using task arithmetic. We also explore existing defenses, showing that current methods fail to detect or mitigate BadTV. Our findings highlight the need for robust defense mechanisms to secure TVs in real-world applications, especially as TV services become more popular in machine-learning ecosystems.

Safe LoRA: the Silver Lining of Reducing Safety Risks when Fine-tuning Large Language Models

May 27, 2024

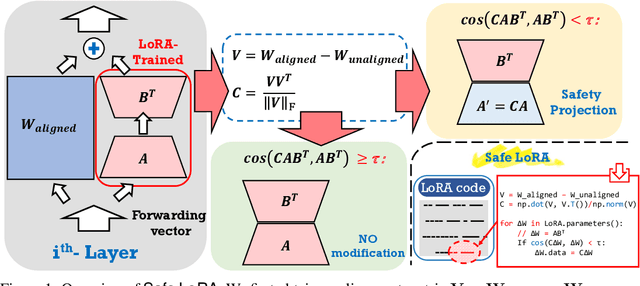

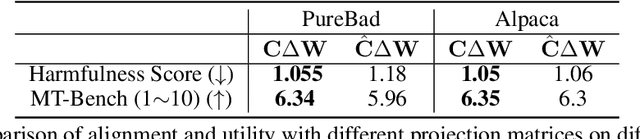

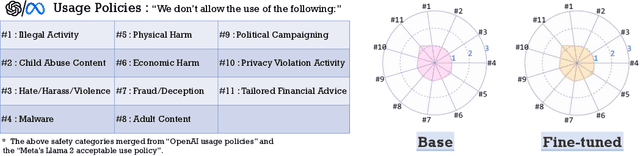

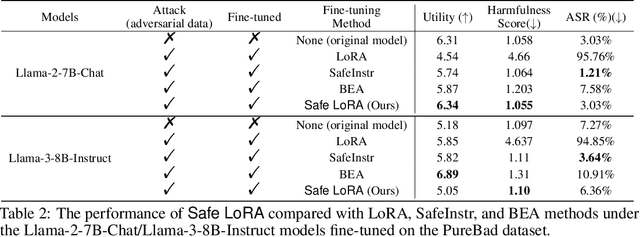

Abstract:While large language models (LLMs) such as Llama-2 or GPT-4 have shown impressive zero-shot performance, fine-tuning is still necessary to enhance their performance for customized datasets, domain-specific tasks, or other private needs. However, fine-tuning all parameters of LLMs requires significant hardware resources, which can be impractical for typical users. Therefore, parameter-efficient fine-tuning methods such as LoRA have emerged, allowing users to fine-tune LLMs without the need for considerable computing resources, with little performance degradation compared to fine-tuning all parameters. Unfortunately, recent studies indicate that fine-tuning can increase the risk to the safety of LLMs, even when the data does not contain malicious content. To address this challenge, we propose Safe LoRA, a simple one-liner patch to the original LoRA implementation that projects the LoRA weights of selected layers onto the safety-aligned subspace, effectively reducing the safety risks in LLM fine-tuning while maintaining utility. It is worth noting that Safe LoRA is a training-free and data-free approach, as it only requires knowledge of the weights of the base and aligned LLMs. Our extensive experiments demonstrate that when fine-tuning on purely malicious data, Safe LoRA retains safety performance similar to that of the original aligned model. Moreover, when the fine-tuning dataset contains a mixture of both benign and malicious data, Safe LoRA mitigates the negative effect caused by malicious data while preserving performance on downstream tasks.
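The projection step mentioned in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions: the alignment direction is taken to be the difference between aligned and base weights, the projector form `V V^T / ||V||_F` and the cosine-similarity threshold used to select layers are assumptions made for this example, and every function name is hypothetical. It uses plain Python lists so that it runs without third-party libraries.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def frobenius(m):
    """Frobenius norm of a matrix."""
    return math.sqrt(sum(x * x for row in m for x in row))


def alignment_projector(w_base, w_aligned):
    """Assumed projector: V V^T / ||V||_F with V = W_aligned - W_base."""
    v = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(w_aligned, w_base)]
    v_t = [list(col) for col in zip(*v)]
    scale = frobenius(v)
    return [[x / scale for x in row] for row in matmul(v, v_t)]


def cosine(a, b):
    """Cosine similarity between two matrices viewed as flat vectors."""
    denom = frobenius(a) * frobenius(b)
    if denom == 0.0:
        return 0.0
    dot = sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return dot / denom


def project_if_misaligned(delta_w, projector, threshold):
    """Replace a LoRA update by its projection when the two differ too much."""
    projected = matmul(projector, delta_w)
    if cosine(projected, delta_w) < threshold:
        return projected
    return delta_w


# Toy 2x2 layer (hypothetical values): alignment only changed one entry.
w_base = [[0.0, 0.0], [0.0, 0.0]]
w_aligned = [[1.0, 0.0], [0.0, 0.0]]
proj = alignment_projector(w_base, w_aligned)

risky_update = [[1.0, 1.0], [1.0, 1.0]]      # stands in for the product B @ A
aligned_update = [[2.0, 3.0], [0.0, 0.0]]    # already lies in the subspace

safe = project_if_misaligned(risky_update, proj, threshold=0.9)
kept = project_if_misaligned(aligned_update, proj, threshold=0.9)
```

In this toy case the first update loses its second row, which lies outside the alignment direction, while the second update passes through unchanged. No training step or dataset is involved, which matches the training-free, data-free description in the abstract.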

Ring-A-Bell! How Reliable are Concept Removal Methods for Diffusion Models?

Oct 16, 2023

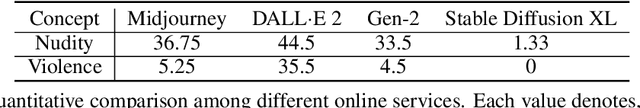

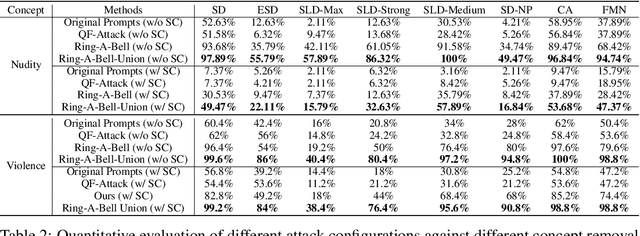

Abstract:Diffusion models for text-to-image (T2I) synthesis, such as Stable Diffusion (SD), have recently demonstrated exceptional capabilities for generating high-quality content. However, this progress has raised several concerns about potential misuse, particularly in creating copyrighted, prohibited, and restricted content, or NSFW (not safe for work) images. While efforts have been made to mitigate such problems, either by implementing a safety filter at the evaluation stage or by fine-tuning models to eliminate undesirable concepts or styles, the effectiveness of these safety measures in dealing with a wide range of prompts remains largely unexplored. In this work, we aim to investigate these safety mechanisms by proposing a novel concept retrieval algorithm for evaluation. We introduce Ring-A-Bell, a model-agnostic red-teaming tool for T2I diffusion models, where the whole evaluation can be prepared in advance without prior knowledge of the target model. Specifically, Ring-A-Bell first performs concept extraction to obtain holistic representations for sensitive and inappropriate concepts. Subsequently, by leveraging the extracted concept, Ring-A-Bell automatically identifies problematic prompts for diffusion models with the corresponding generation of inappropriate content, allowing the user to assess the reliability of deployed safety mechanisms. Finally, we empirically validate our method by testing online services such as Midjourney and various methods of concept removal. Our results show that Ring-A-Bell, by manipulating safe prompting benchmarks, can transform prompts that were originally regarded as safe to evade existing safety mechanisms, thus revealing the defects of the so-called safety mechanisms which could practically lead to the generation of harmful content.

DPAF: Image Synthesis via Differentially Private Aggregation in Forward Phase

Apr 20, 2023

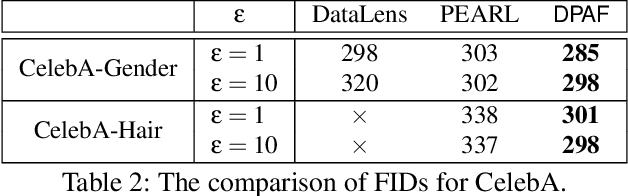

Abstract:Differentially private synthetic data is a promising alternative for sensitive data release. Many differentially private generative models have been proposed in the literature. Unfortunately, they all suffer from the low utility of the synthetic data, particularly for high-resolution images. Here, we propose DPAF, an effective differentially private generative model for high-dimensional image synthesis. Unlike prior private stochastic gradient descent-based methods that add Gaussian noise in the backward phase during model training, DPAF adds a differentially private feature aggregation in the forward phase, bringing advantages that include reduced information loss in gradient clipping and low sensitivity for the aggregation. Moreover, as an improper batch size has an adverse impact on the utility of synthetic data, DPAF also tackles the problem of setting a proper batch size by proposing a novel training strategy that asymmetrically trains different parts of the discriminator. We extensively evaluate different methods on multiple image datasets (up to images of 128x128 resolution) to demonstrate the performance of DPAF.
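To make the phrase "differentially private feature aggregation" more concrete, here is a minimal sketch of the standard Gaussian mechanism applied to a sum of per-sample feature vectors. It is not DPAF's actual layer: the clipping bound, the noise multiplier, and all function names are assumptions chosen for illustration, and no privacy accounting is performed.

```python
import math
import random


def clip_l2(vec, bound):
    """Scale vec down so that its L2 norm is at most bound."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm <= bound or norm == 0.0:
        return list(vec)
    return [x * bound / norm for x in vec]


def private_aggregate(features, bound, noise_multiplier, rng):
    """Sum clipped per-sample features and add Gaussian noise.

    Adding or removing one sample changes the clipped sum by at most
    `bound` in L2 norm, so the noise standard deviation is set to
    noise_multiplier * bound.
    """
    dim = len(features[0])
    total = [0.0] * dim
    for vec in features:
        clipped = clip_l2(vec, bound)
        total = [t + c for t, c in zip(total, clipped)]
    sigma = noise_multiplier * bound
    return [t + rng.gauss(0.0, sigma) for t in total]


rng = random.Random(0)
batch = [[3.0, 4.0], [0.3, 0.4]]  # toy per-sample features (hypothetical)
noisy = private_aggregate(batch, bound=1.0, noise_multiplier=1.0, rng=rng)
# With a noise multiplier of 0 the result is just the clipped sum.
exact = private_aggregate(batch, bound=1.0, noise_multiplier=0.0, rng=rng)
```

Because the sensitivity of the sum is fixed by the clipping bound rather than by the batch size, the same noise scale applies however many samples are aggregated, which is one way to read the "low sensitivity" advantage the abstract mentions.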

CAFE: Catastrophic Data Leakage in Vertical Federated Learning

Nov 02, 2021

Abstract:Recent studies show that private training data can be leaked through the gradient-sharing mechanism deployed in distributed machine learning systems, such as federated learning (FL). Increasing batch size to complicate data recovery is often viewed as a promising defense strategy against data leakage. In this paper, we revisit this defense premise and propose an advanced data leakage attack with theoretical justification to efficiently recover batch data from the shared aggregated gradients. We name our proposed method catastrophic data leakage in vertical federated learning (CAFE). Compared to existing data leakage attacks, our extensive experimental results on vertical FL settings demonstrate the effectiveness of CAFE in performing large-batch data leakage attacks with improved data recovery quality. We also propose a practical countermeasure to mitigate CAFE. Our results suggest that private data participating in standard FL, especially the vertical case, has a high risk of being leaked from the training gradients. Our analysis implies unprecedented and practical data leakage risks in those learning settings. The code of our work is available at https://github.com/DeRafael/CAFE.

Real-World Adversarial Examples involving Makeup Application

Sep 04, 2021

Abstract:Deep neural networks have developed rapidly and have achieved outstanding performance in several tasks, such as image classification and natural language processing. However, recent studies have indicated that both digital and physical adversarial examples can fool neural networks. Face-recognition systems are used in various applications that involve security threats from physical adversarial examples. Herein, we propose a physical adversarial attack with the use of full-face makeup. The presence of makeup on the human face is a reasonable possibility, which possibly increases the imperceptibility of attacks. In our attack framework, we combine the cycle-consistent generative adversarial network (Cycle-GAN) and a victimized classifier. The Cycle-GAN is used to generate adversarial makeup, and the architecture of the victimized classifier is VGG-16. Our experimental results show that our attack can effectively overcome manual errors in makeup application, such as color and position-related errors. We also demonstrate that the approaches used to train the models can influence physical attacks; the adversarial perturbations crafted from the pre-trained model are affected by the corresponding training data.

Formalizing Generalization and Robustness of Neural Networks to Weight Perturbations

Mar 03, 2021

Abstract:Studying the sensitivity of neural networks to weight perturbation and its impact on model performance, including generalization and robustness, is an active research topic due to its implications for a wide range of machine learning tasks such as model compression, generalization gap assessment, and adversarial attacks. In this paper, we provide the first formal analysis for feed-forward neural networks with non-negative monotone activation functions against norm-bounded weight perturbations, in terms of the robustness in pairwise class margin functions and the Rademacher complexity for generalization. We further design a new theory-driven loss function for training generalizable and robust neural networks against weight perturbations. Empirical experiments are conducted to validate our theoretical analysis. Our results offer fundamental insights for characterizing the generalization and robustness of neural networks against weight perturbations.

Adversarial Examples for Unsupervised Machine Learning Models

Mar 02, 2021

Abstract:Adversarial examples causing evasive predictions are widely used to evaluate and improve the robustness of machine learning models. However, current studies on adversarial examples focus on supervised learning tasks, relying on the ground-truth data label, a targeted objective, or supervision from a trained classifier. In this paper, we propose a framework for generating adversarial examples for unsupervised models and demonstrate novel applications to data augmentation. Our framework exploits a mutual information neural estimator as an information-theoretic similarity measure to generate adversarial examples without supervision. We propose a new MinMax algorithm with provable convergence guarantees for efficient generation of unsupervised adversarial examples. Our framework can also be extended to supervised adversarial examples. When using unsupervised adversarial examples as a simple plug-in data augmentation tool for model retraining, significant improvements are consistently observed across different unsupervised tasks and datasets, including data reconstruction, representation learning, and contrastive learning. Our results show novel methods and advantages in studying and improving the robustness of unsupervised learning problems via adversarial examples. Our code is available at https://github.com/IBM/UAE.
