Chau

Effective Guidance for Model Attention with Simple Yes-no Annotations

Oct 29, 2024

Abstract: Modern deep learning models often make predictions by focusing on irrelevant areas, leading to biased performance and limited generalization. Existing methods aimed at rectifying model attention require explicit labels for irrelevant areas or complex pixel-wise ground truth attention maps. We present CRAYON (Correcting Reasoning with Annotations of Yes Or No), offering effective, scalable, and practical solutions to rectify model attention using simple yes-no annotations. CRAYON empowers classical and modern model interpretation techniques to identify and guide model reasoning: CRAYON-ATTENTION directs classic interpretations based on saliency maps to focus on relevant image regions, while CRAYON-PRUNING removes irrelevant neurons identified by modern concept-based methods to mitigate their influence. Through extensive experiments with both quantitative and human evaluation, we showcase CRAYON's effectiveness, scalability, and practicality in refining model attention. CRAYON achieves state-of-the-art performance, outperforming 12 methods across 3 benchmark datasets, surpassing approaches that require more complex annotations.
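As a rough illustration of the pruning idea described above, the following minimal sketch zeroes out the activations of neurons that a yes-no annotation marks as irrelevant. The function name `prune_neurons`, the boolean encoding of the annotations, and the choice to mask activations at inference time are all assumptions made for this example; the abstract does not specify how CRAYON-PRUNING is implemented.

```python
from typing import List, Sequence


def prune_neurons(activations: Sequence[float], is_relevant: Sequence[bool]) -> List[float]:
    """Zero out activations of neurons annotated as irrelevant.

    `is_relevant[i]` is a hypothetical yes-no annotation for neuron i:
    True ("yes") keeps the neuron, False ("no") removes its influence.
    """
    if len(activations) != len(is_relevant):
        raise ValueError("one yes-no annotation is required per neuron")
    # Keep the activation where the answer is "yes", replace it with 0.0 otherwise.
    return [a if keep else 0.0 for a, keep in zip(activations, is_relevant)]


# Three neurons; the second one was annotated "no" (irrelevant).
pruned = prune_neurons([0.5, 1.2, -0.3], [True, False, True])
```

Masking keeps the layer shape unchanged, so downstream layers need no modification; whether the actual method masks, removes, or retrains around the pruned neurons is not stated in the abstract.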

ManimML: Communicating Machine Learning Architectures with Animation

Jun 29, 2023

Abstract: There has been an explosion in interest in machine learning (ML) in recent years due to its applications to science and engineering. However, as ML techniques have advanced, tools for explaining and visualizing novel ML algorithms have lagged behind. Animation has been shown to be a powerful tool for making engaging visualizations of systems that dynamically change over time, which makes it well suited to the task of communicating ML algorithms. However, the current approach to animating ML algorithms is to handcraft applications that highlight specific algorithms or use complex generalized animation software. We developed ManimML, an open-source Python library for easily generating animations of ML algorithms directly from code. We sought to leverage ML practitioners' preexisting knowledge of programming rather than requiring them to learn complex animation software. ManimML has a familiar syntax for specifying neural networks that mimics popular deep learning frameworks like PyTorch. A user can take a preexisting neural network architecture and easily write a specification for an animation in ManimML, which will then automatically compose animations for different components of the system into a final animation of the entire neural network. ManimML is open source and available at https://github.com/helblazer811/ManimML.

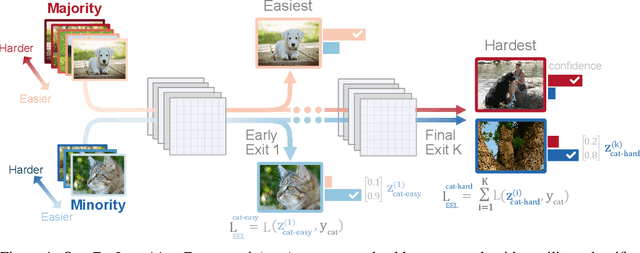

ELF: An Early-Exiting Framework for Long-Tailed Classification

Jun 22, 2020

Abstract: The natural world often follows a long-tailed data distribution where only a few classes account for most of the examples. This long tail causes classifiers to overfit to the majority class. To mitigate this, prior solutions commonly adopt class rebalancing strategies such as data resampling and loss reshaping. However, by treating each example within a class equally, these methods fail to account for the important notion of example hardness, i.e., within each class some examples are easier to classify than others. To incorporate this notion of hardness into the learning process, we propose the EarLy-exiting Framework (ELF). During training, ELF learns to early-exit easy examples through auxiliary branches attached to a backbone network. This offers a dual benefit: (1) the neural network increasingly focuses on hard examples, since they contribute more to the overall network loss; and (2) it frees up additional model capacity to distinguish difficult examples. Experimental results on two large-scale datasets, ImageNet-LT and iNaturalist'18, demonstrate that ELF can improve state-of-the-art accuracy by more than 3 percent. This comes with the additional benefit of reducing inference-time FLOPs by up to 20 percent. ELF is complementary to prior work and can naturally integrate with a variety of existing methods to tackle the challenge of long-tailed distributions.
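The early-exit mechanism can be illustrated with a minimal inference-time sketch: each auxiliary branch produces class logits, and an example leaves the network at the first branch whose prediction is confident enough. The softmax-confidence criterion, the `threshold` value of 0.9, and the function names below are assumptions chosen for this example; the abstract does not state the exact exit rule ELF uses.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    branch_logits: Sequence[Sequence[float]], threshold: float = 0.9
) -> Tuple[int, int]:
    """Return (predicted_class, exit_index) for one example.

    `branch_logits` holds the logits of each exit, ordered from the
    shallowest auxiliary branch to the final classifier. The example
    exits at the first branch whose top probability reaches `threshold`
    (an assumed criterion); the last exit always produces a prediction.
    """
    if not branch_logits:
        raise ValueError("at least one exit branch is required")
    last = len(branch_logits) - 1
    for i, logits in enumerate(branch_logits):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold or i == last:
            return probs.index(confidence), i
    raise AssertionError("unreachable: the last exit always returns")


# An "easy" example is confidently classified by the first branch.
easy = early_exit_predict([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]])
# A "hard" example is ambiguous at the first branch and continues deeper.
hard = early_exit_predict([[0.2, 0.1, 0.0], [0.0, 4.0, 0.0]])
```

In a real network the deeper branches would only be computed when the earlier ones fail to exit, which is where the inference-time savings come from; this sketch takes precomputed logits purely to keep the example self-contained.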

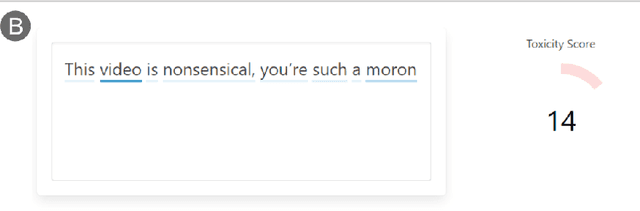

RECAST: Interactive Auditing of Automatic Toxicity Detection Models

Jan 07, 2020

Abstract: As toxic language becomes nearly pervasive online, there has been increasing interest in leveraging advances in natural language processing (NLP), such as very large transformer models, to automatically detect and remove toxic comments. Despite fairness concerns, a lack of adversarial robustness, and limited prediction explainability in deep learning systems, there is currently little work on auditing these systems and on helping both developers and users understand how they work. We present our ongoing work, RECAST, an interactive tool for examining toxicity detection models by visualizing explanations for predictions and providing alternative wordings for detected toxic speech.

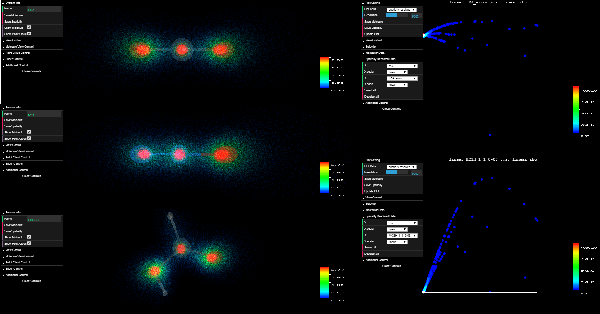

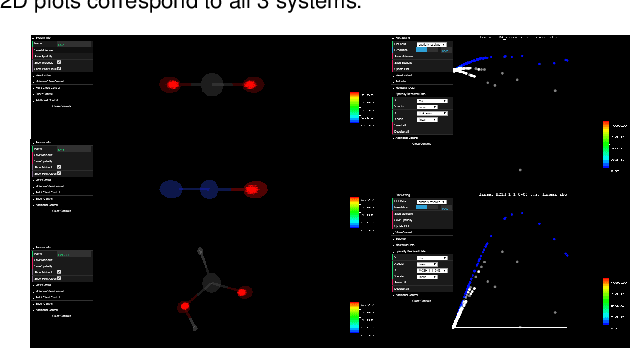

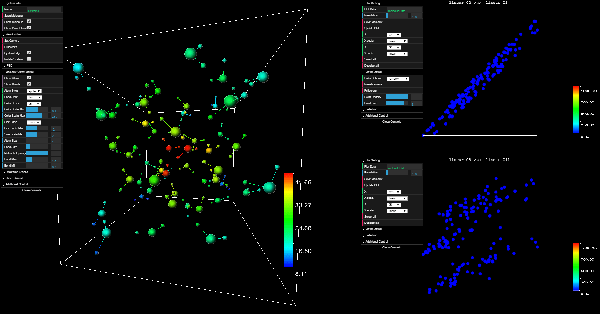

ElectroLens: Understanding Atomistic Simulations Through Spatially-resolved Visualization of High-dimensional Features

Aug 20, 2019

Abstract: In recent years, machine learning (ML) has gained significant popularity in the field of chemical informatics and electronic structure theory. These techniques often require researchers to engineer abstract "features" that encode chemical concepts into a mathematical form compatible with the input to machine-learning models. However, there is no existing tool to connect these abstract features back to the actual chemical system, making it difficult to diagnose failures and to build intuition about the meaning of the features. We present ElectroLens, a new visualization tool for high-dimensional spatially-resolved features to tackle this problem. The tool visualizes high-dimensional data sets for atomistic and electron environment features through a series of linked 3D views and 2D plots. It connects different derived features to their corresponding regions in 3D via interactive selection. It is built to be scalable and to integrate with existing infrastructure.
