Changchun Liu

Chinese Spelling Correction: A Comprehensive Survey of Progress, Challenges, and Opportunities

Feb 17, 2025

Abstract: Chinese Spelling Correction (CSC) is a critical task in natural language processing, aimed at detecting and correcting spelling errors in Chinese text. This survey provides a comprehensive overview of CSC, tracing its evolution from pre-trained language models to large language models (LLMs) and critically analyzing their respective strengths and weaknesses in this domain. We also present a detailed examination of existing benchmark datasets, highlighting their inherent challenges and limitations. Finally, we propose promising future research directions, particularly focusing on leveraging the potential of LLMs and their reasoning capabilities for improved CSC performance. To the best of our knowledge, this is the first comprehensive survey dedicated to the field of CSC. We believe this work will serve as a valuable resource for researchers, fostering a deeper understanding of the field and inspiring future advancements.

A Large Language Model-based multi-agent manufacturing system for intelligent shopfloor

May 27, 2024

Abstract: As productivity advances, customer demand for multi-variety and small-batch production is increasing, placing higher requirements on manufacturing systems. When production tasks change frequently because of this demand, traditional manufacturing systems often cannot respond promptly. The multi-agent manufacturing system was proposed to address this problem. However, because of technical limitations, the negotiation among agents in this kind of system is realized through predefined heuristic rules, which are not intelligent enough to deal with multi-variety and small-batch production. To this end, a Large Language Model-based (LLM-based) multi-agent manufacturing system for the intelligent shopfloor is proposed in the present study. This system delineates the diverse agents and defines their collaborative methods. The agent roles are Machine Server Agent (MSA), Bid Inviter Agent (BIA), Bidder Agent (BA), Thinking Agent (TA), and Decision Agent (DA). With the support of LLMs, the TA and DA can analyze the shopfloor condition and choose the most suitable machine, rather than executing a predefined program. The negotiation between the BAs and the BIA is the most crucial step in connecting manufacturing resources. With the support of the TA and DA, the BIA finalizes the distribution of orders, relying on the information about each machine returned by the BAs. MSAs are responsible for connecting the agents with the physical shopfloor. This system aims to distribute and transmit workpieces through the collaboration of agents with these distinct roles, which distinguishes it from other scheduling approaches. Comparative experiments were also conducted to validate the performance of this system.
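The bid-and-award flow described in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the `Bid` fields, the machine dictionaries, and the function names are hypothetical, and the LLM-supported Thinking and Decision Agents are replaced by a plain deterministic rule (earliest completion time) so that the example runs on its own. It is not the system proposed in the paper.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    machine_id: str
    queue_time: float       # hypothetical: time until the machine is free
    processing_time: float  # hypothetical: time to process the workpiece


def collect_bids(machines, operation):
    """Bidder Agent role: every machine that can perform the operation bids."""
    return [
        Bid(m["id"], m["queue_time"], m["processing_times"][operation])
        for m in machines
        if operation in m["processing_times"]
    ]


def decide(bids):
    """Stand-in for the TA and DA: pick the earliest completion time.

    In the abstract this choice is made with LLM support; a fixed rule is
    used here only to keep the sketch self-contained.
    """
    if not bids:
        return None
    return min(bids, key=lambda b: b.queue_time + b.processing_time)


def award(machines, operation):
    """Bid Inviter Agent role: invite bids, then award the order."""
    winner = decide(collect_bids(machines, operation))
    return winner.machine_id if winner else None


machines = [
    {"id": "M1", "queue_time": 5.0, "processing_times": {"milling": 3.0}},
    {"id": "M2", "queue_time": 1.0,
     "processing_times": {"milling": 4.0, "drilling": 2.0}},
    {"id": "M3", "queue_time": 0.0, "processing_times": {"drilling": 1.0}},
]
print(award(machines, "milling"))   # M2 (finishes at 5.0, M1 at 8.0)
print(award(machines, "drilling"))  # M3 (finishes at 1.0, M2 at 3.0)
```

Swapping `decide` for a call to a language model would be the point at which such a sketch departs from predefined heuristic rules.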

An Auto-tuning Framework for Autonomous Vehicles

Aug 14, 2018

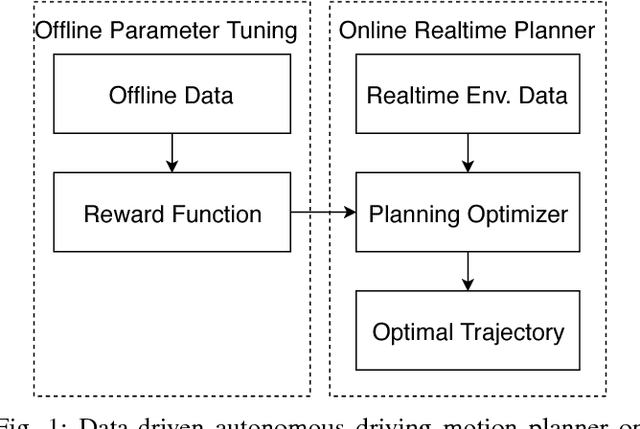

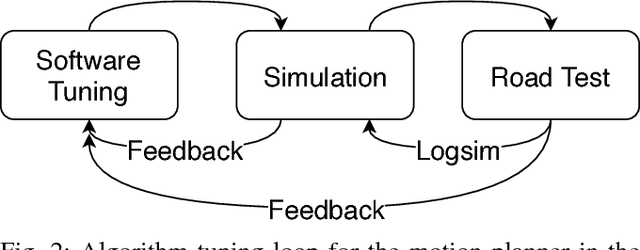

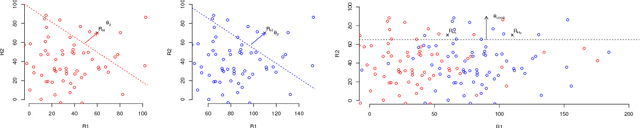

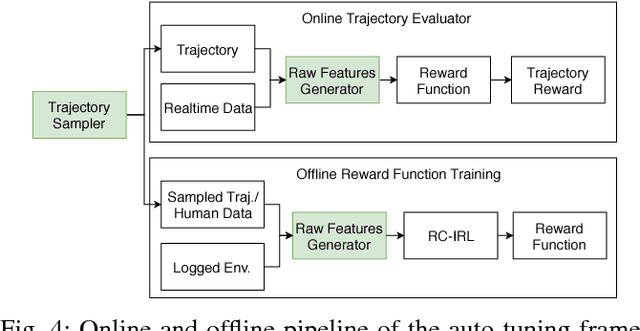

Abstract: Many autonomous driving motion planners generate trajectories by optimizing a reward/cost functional. Designing and tuning a high-performance reward/cost functional for Level-4 autonomous driving vehicles with exposure to different driving conditions is challenging. Traditionally, reward/cost functional tuning involves substantial human effort and time spent on both simulations and road tests. As the scenario becomes more complicated, tuning to improve the motion planner performance becomes increasingly difficult. To systematically solve this issue, we develop a data-driven auto-tuning framework based on the Apollo autonomous driving framework. The framework includes a novel rank-based conditional inverse reinforcement learning algorithm, an offline training strategy and an automatic method of collecting and labeling data. Our auto-tuning framework has the following advantages that make it suitable for tuning an autonomous driving motion planner. First, compared to that of most inverse reinforcement learning algorithms, our algorithm training is efficient and capable of being applied to different scenarios. Second, the offline training strategy offers a safe way to adjust the parameters before public road testing. Third, the expert driving data and information about the surrounding environment are collected and automatically labeled, which considerably reduces the manual effort. Finally, the motion planner tuned by the framework is examined via both simulation and public road testing and is shown to achieve good performance.
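The abstract does not spell out the rank-based algorithm, so the following is only a generic sketch of the underlying idea: learn cost weights such that the expert trajectory is ranked below (cheaper than) sampled alternatives. The linear cost, the pairwise hinge loss, the margin, and all names are assumptions made for illustration, not the paper's method.

```python
def cost(weights, features):
    """Linear trajectory cost: weighted sum of hand-picked features."""
    return sum(w * f for w, f in zip(weights, features))


def ranking_loss(weights, expert, samples, margin=1.0):
    """Pairwise hinge loss: the expert should beat each sample by `margin`."""
    c_exp = cost(weights, expert)
    return sum(max(0.0, margin + c_exp - cost(weights, s)) for s in samples)


def sgd_step(weights, expert, samples, lr=0.1, margin=1.0):
    """One subgradient step on the ranking loss."""
    grad = [0.0] * len(weights)
    c_exp = cost(weights, expert)
    for s in samples:
        # Only pairs that violate the margin contribute to the subgradient.
        if margin + c_exp - cost(weights, s) > 0:
            for i in range(len(weights)):
                grad[i] += expert[i] - s[i]
    return [w - lr * g for w, g in zip(weights, grad)]


# Toy feature vectors (hypothetical, e.g. jerk and lateral deviation).
expert = [0.1, 0.2]
samples = [[1.0, 0.5], [0.8, 1.5]]

weights = [0.0, 0.0]
initial_loss = ranking_loss(weights, expert, samples)
for _ in range(20):
    weights = sgd_step(weights, expert, samples)
final_loss = ranking_loss(weights, expert, samples)
print(initial_loss, final_loss)
```

Because training only needs recorded expert trajectories and sampled alternatives, a loop like this can run entirely offline, which matches the offline training strategy the abstract emphasizes.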

Baidu Apollo EM Motion Planner

Jul 20, 2018

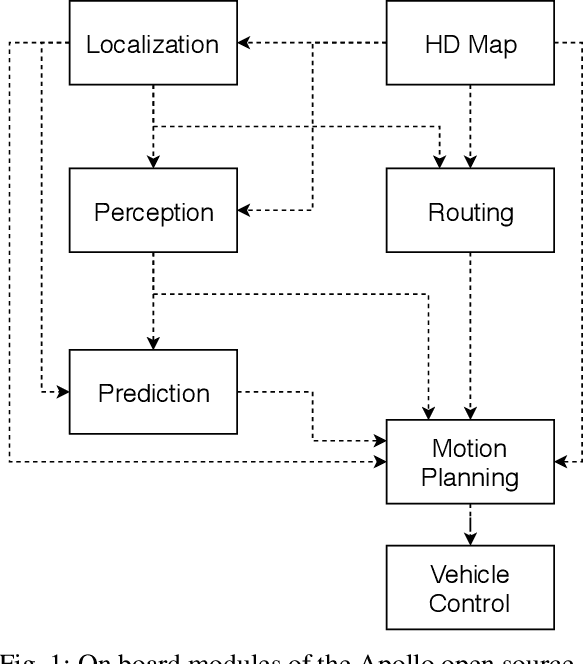

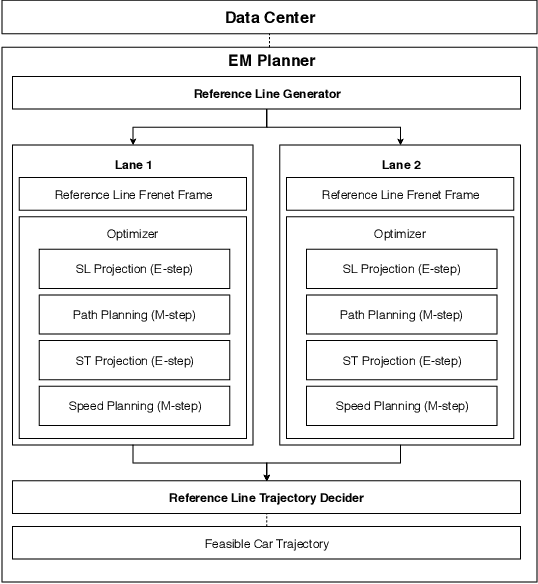

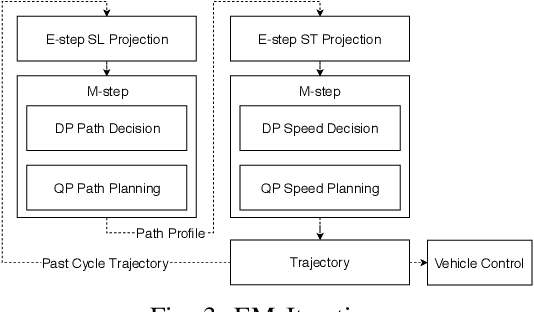

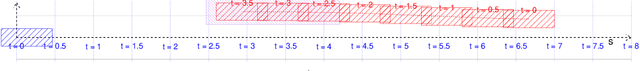

Abstract: In this manuscript, we introduce a real-time motion planning system based on the Baidu Apollo (open source) autonomous driving platform. The developed system aims to address the industrial level-4 motion planning problem while considering safety, comfort and scalability. The system covers multilane and single-lane autonomous driving in a hierarchical manner: (1) The top layer of the system is a multilane strategy that handles lane-change scenarios by comparing lane-level trajectories computed in parallel. (2) Inside the lane-level trajectory generator, it iteratively solves path and speed optimization based on a Frenet frame. (3) For path and speed optimization, a combination of dynamic programming and spline-based quadratic programming is proposed to construct a scalable and easy-to-tune framework to handle traffic rules, obstacle decisions and smoothness simultaneously. The planner is scalable to both highway and lower-speed city driving scenarios. We also demonstrate the algorithm through scenario illustrations and on-road test results. The system described in this manuscript has been deployed to dozens of Baidu Apollo autonomous driving vehicles since Apollo v1.5 was announced in September 2017. As of May 16th, 2018, the system has been tested for 3,380 hours and approximately 68,000 kilometers (42,253 miles) of closed-loop autonomous driving under various urban scenarios. The algorithm described in this manuscript is available at https://github.com/ApolloAuto/apollo/tree/master/modules/planning.
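As a rough illustration of the dynamic programming step mentioned in item (3), the sketch below searches a coarse grid of lateral offsets along the road (a simplified Frenet-style station/lateral grid) for the cheapest path. The grid, the cost values, the quadratic lateral-change penalty, and the function name are all assumptions made for this example. It is not the Apollo implementation, and the spline-based quadratic programming refinement is omitted.

```python
def dp_path(lateral_offsets, node_cost, smooth_weight=1.0):
    """Cheapest sequence of lateral offsets, one per station.

    node_cost[k][j] is the cost of being at lateral_offsets[j] at station k
    (e.g. obstacle proximity plus distance from the lane center). Moving
    between consecutive stations adds a quadratic lateral-change penalty.
    """
    n_l = len(lateral_offsets)
    best = [list(node_cost[0])]  # best[k][j]: cheapest cost to reach (k, j)
    back = []                    # back[k-1][j]: predecessor index of (k, j)
    for k in range(1, len(node_cost)):
        row, prev_row = [], []
        for j in range(n_l):
            cands = [
                best[k - 1][i]
                + smooth_weight * (lateral_offsets[j] - lateral_offsets[i]) ** 2
                for i in range(n_l)
            ]
            i_best = min(range(n_l), key=cands.__getitem__)
            row.append(cands[i_best] + node_cost[k][j])
            prev_row.append(i_best)
        best.append(row)
        back.append(prev_row)

    # Backtrack from the cheapest node at the final station.
    j = min(range(n_l), key=best[-1].__getitem__)
    path = [j]
    for prev_row in reversed(back):
        j = prev_row[j]
        path.append(j)
    path.reverse()
    return [lateral_offsets[j] for j in path]


offsets = [-1.0, 0.0, 1.0]  # meters left/right of the lane center
# Off-center nodes cost 2; at station 2 an obstacle blocks center and right.
node_cost = [
    [2, 0, 2],
    [2, 0, 2],
    [2, 100, 100],
    [2, 0, 2],
]
print(dp_path(offsets, node_cost))  # [0.0, 0.0, -1.0, 0.0]
```

The resulting path nudges left around the obstacle and returns to the center. In a planner of the kind the abstract describes, a coarse result like this would then be smoothed by the quadratic programming stage.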
