Cédric Richard

Université de Nice Sophia-Antipolis, CNRS, Nice, France

Riemannian Change Point Detection on Manifolds with Robust Centroid Estimation

Aug 25, 2025

Abstract:Non-parametric change-point detection in streaming time series data is a long-standing challenge in signal processing. Recent advancements in statistics and machine learning have increasingly addressed this problem for data residing on Riemannian manifolds. One prominent strategy involves monitoring abrupt changes in the center of mass of the time series. Implemented in a streaming fashion, this strategy, however, requires careful step size tuning when computing the updates of the center of mass. In this paper, we propose to leverage robust centroids on manifolds from M-estimation theory to address this issue. Our proposal consists of comparing two centroid estimates: the classical Karcher mean (sensitive to change) versus one defined from Huber's function (robust to change). This comparison leads to the definition of a test statistic whose performance is less sensitive to the underlying estimation method. We propose a stochastic Riemannian optimization algorithm to estimate both robust centroids efficiently. Experiments conducted on both simulated and real-world data across two representative manifolds demonstrate the superior performance of our proposed method.
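The comparison of a change-sensitive and a change-robust centroid can be illustrated with a minimal sketch on the unit sphere. Everything below is an assumption made for illustration, not the paper's algorithm: the choice of manifold, the function names (`sphere_log`, `sphere_exp`, `centroid_step`, `geodesic_dist`), the threshold `huber_c`, and the use of the geodesic distance between the two estimates as a discrepancy measure. Each sample triggers one stochastic Riemannian gradient step; the Huber variant shrinks the step whenever the sample lies farther than `huber_c` from the current estimate.

```python
import math

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def sphere_log(x, y):
    """Log map on the unit sphere: tangent vector at x pointing toward y."""
    c = max(-1.0, min(1.0, dot(x, y)))
    theta = math.acos(c)                      # geodesic distance from x to y
    u = [yi - c * xi for xi, yi in zip(x, y)]  # component of y orthogonal to x
    norm_u = math.sqrt(dot(u, u))
    if norm_u < 1e-12:                        # y equals x (or is antipodal)
        return [0.0] * len(x)
    return [theta * ui / norm_u for ui in u]

def sphere_exp(x, v):
    """Exp map on the unit sphere: follow the geodesic from x along v."""
    n = math.sqrt(dot(v, v))
    if n < 1e-12:
        return list(x)
    return [math.cos(n) * xi + math.sin(n) * vi / n for xi, vi in zip(x, v)]

def geodesic_dist(x, y):
    return math.acos(max(-1.0, min(1.0, dot(x, y))))

def centroid_step(x, sample, step, huber_c=None):
    """One stochastic Riemannian gradient step toward `sample`.

    huber_c=None gives the squared-distance (Karcher mean) update;
    otherwise the step is scaled by huber_c / d when the distance d
    exceeds huber_c, which is the gradient of Huber's function.
    """
    v = sphere_log(x, sample)
    d = math.sqrt(dot(v, v))
    w = 1.0
    if huber_c is not None and d > huber_c:
        w = huber_c / d
    return sphere_exp(x, [step * w * vi for vi in v])

# Both estimates start at the north pole and see one distant sample.
start = [0.0, 0.0, 1.0]
outlier = [1.0, 0.0, 0.0]
karcher = centroid_step(start, outlier, step=0.5)
huber = centroid_step(start, outlier, step=0.5, huber_c=0.1)
gap = geodesic_dist(karcher, huber)  # one possible discrepancy measure
```

In this toy setting the Karcher update travels half of the way to the distant sample while the Huber update moves by at most `step * huber_c`, so the gap between the two estimates grows when the data distribution shifts.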

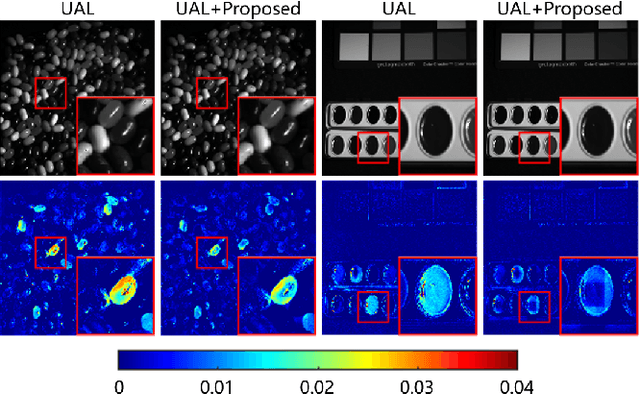

DTU-Net: A Multi-Scale Dilated Transformer Network for Nonlinear Hyperspectral Unmixing

Mar 06, 2025

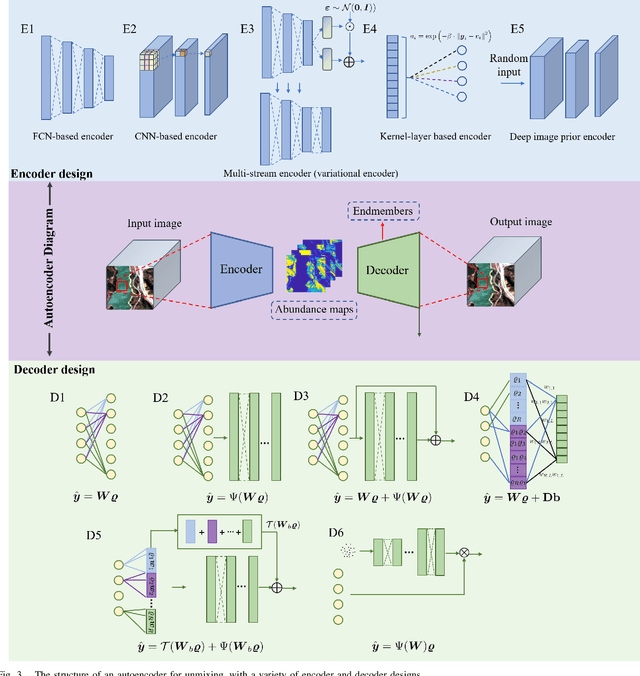

Abstract:Transformers have shown significant success in hyperspectral unmixing (HU). However, challenges remain. While multi-scale and long-range spatial correlations are essential in unmixing tasks, current Transformer-based unmixing networks, built on Vision Transformer (ViT) or Swin-Transformer, struggle to capture them effectively. Additionally, current Transformer-based unmixing networks rely on the linear mixing model, which lacks the flexibility to accommodate scenarios where nonlinear effects are significant. To address these limitations, we propose a multi-scale Dilated Transformer-based unmixing network for nonlinear HU (DTU-Net). The encoder employs two branches. The first one performs multi-scale spatial feature extraction using Multi-Scale Dilated Attention (MSDA) in the Dilated Transformer, which varies dilation rates across attention heads to capture long-range and multi-scale spatial correlations. The second one performs spectral feature extraction utilizing 3D-CNNs with channel attention. The outputs from both branches are then fused to integrate multi-scale spatial and spectral information, which is subsequently transformed to estimate the abundances. The decoder is designed to accommodate both linear and nonlinear mixing scenarios. Its interpretability is enhanced by explicitly modeling the relationships between endmembers, abundances, and nonlinear coefficients in accordance with the polynomial post-nonlinear mixing model (PPNMM). Experiments on synthetic and real datasets validate the effectiveness of the proposed DTU-Net compared to PPNMM-derived methods and several advanced unmixing networks.
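For reference, the PPNMM named in the abstract is commonly written as below. The notation is assumed here rather than taken from the abstract: $\mathbf{y}$ is the observed pixel spectrum, $\mathbf{M}$ the endmember matrix with $R$ columns, $\mathbf{a}$ the abundance vector, $b$ a scalar nonlinearity coefficient, $\odot$ the element-wise product, and $\mathbf{n}$ additive noise.

$$
\mathbf{y} = \mathbf{M}\mathbf{a} + b\,(\mathbf{M}\mathbf{a}) \odot (\mathbf{M}\mathbf{a}) + \mathbf{n},
\qquad a_r \ge 0, \quad \sum_{r=1}^{R} a_r = 1 .
$$

Setting $b = 0$ recovers the linear mixing model, which is consistent with a decoder meant to cover both linear and nonlinear scenarios.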

Distributed pressure matching strategy using diffusion adaptation

Nov 13, 2023

Abstract:Personal sound zone (PSZ) systems, which aim to create listening (bright) and silent (dark) zones in neighboring regions of space, are often based on time-varying acoustics. Conventional adaptive methods for handling PSZ tasks suffer from the collection and processing of acoustic transfer functions (ATFs) between all the matching microphones and all the loudspeakers in a centralized manner, resulting in high computational complexity and costly accuracy requirements. This paper presents a distributed pressure-matching (PM) method relying on diffusion adaptation (DPM-D) to spread the computational load amongst nodes in order to overcome these issues. The global PM problem is defined as a sum of local costs, and the diffusion adaptation approach is then used to create a distributed solution that only requires local information exchanges. Simulations over multi-frequency bins and a computational complexity analysis are conducted to evaluate the properties of the algorithm and to compare it with centralized counterparts.
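The idea of splitting a global least-squares cost into local costs and solving it with diffusion adaptation can be sketched as follows. This is a simplified adapt-then-combine (ATC) diffusion LMS, not the DPM-D algorithm itself: the data are real-valued and noise-free, each node is assumed to hold a single fixed regressor (standing in for one microphone's transfer functions), and the function name `diffusion_atc`, the three-node line topology, and the Metropolis combination weights are all illustrative choices.

```python
def diffusion_atc(regressors, targets, weights, mu, n_iter):
    """Adapt-then-combine diffusion LMS with one fixed regressor per node.

    regressors[k] : local regressor g_k at node k
    targets[k]    : local desired response d_k
    weights[k][l] : weight node k gives to node l (0 if not neighbors)
    """
    n_nodes, dim = len(regressors), len(regressors[0])
    w = [[0.0] * dim for _ in range(n_nodes)]
    for _ in range(n_iter):
        # Adapt: each node takes an LMS step using only its own data.
        psi = []
        for k in range(n_nodes):
            g = regressors[k]
            err = targets[k] - sum(gi * wi for gi, wi in zip(g, w[k]))
            psi.append([wi + mu * err * gi for wi, gi in zip(w[k], g)])
        # Combine: each node averages the intermediate estimates of its neighbors.
        w = [[sum(weights[k][l] * psi[l][j] for l in range(n_nodes))
              for j in range(dim)]
             for k in range(n_nodes)]
    return w

# Toy problem: three nodes on a line (1-2-3) estimate a common 2-D vector.
w_true = [0.5, -0.3]
G = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
d = [sum(g * w for g, w in zip(row, w_true)) for row in G]
A = [[2 / 3, 1 / 3, 0.0],     # Metropolis weights for the line graph
     [1 / 3, 1 / 3, 1 / 3],
     [0.0, 1 / 3, 2 / 3]]
est = diffusion_atc(G, d, A, mu=0.5, n_iter=2000)
```

No single node can identify `w_true` from its own regressor, yet every node's estimate approaches it because information diffuses through neighbor-to-neighbor exchanges only.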

Spatial Deep Deconvolution U-Net for Traffic Analyses with Distributed Acoustic Sensing

Dec 20, 2022

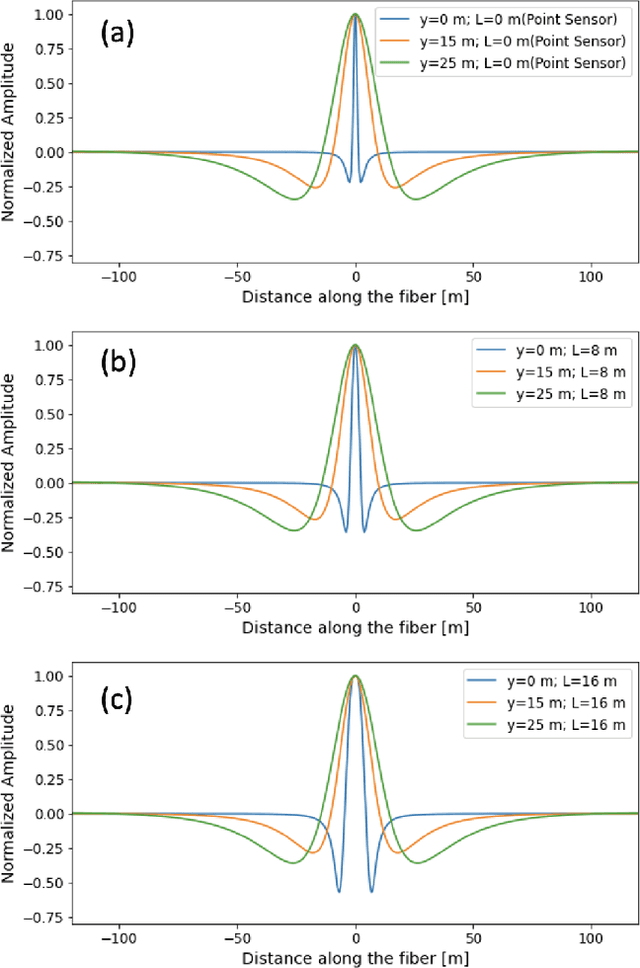

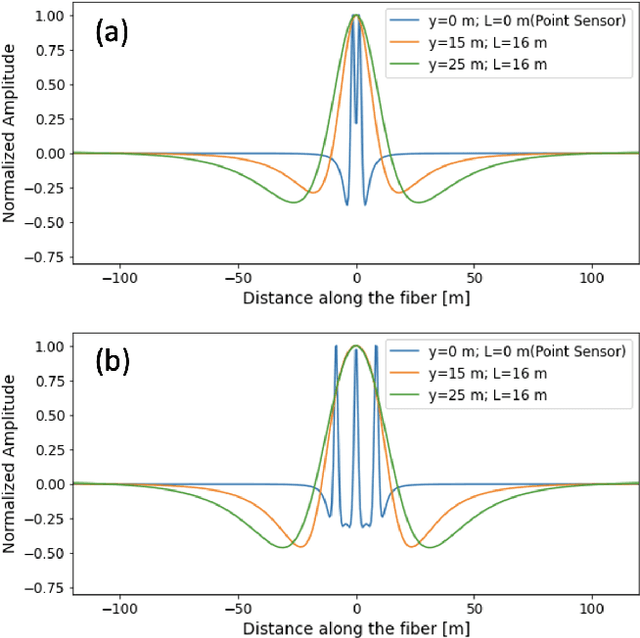

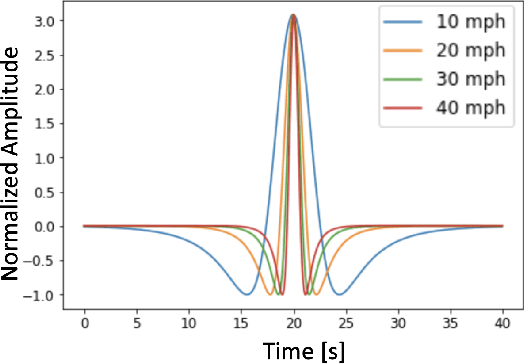

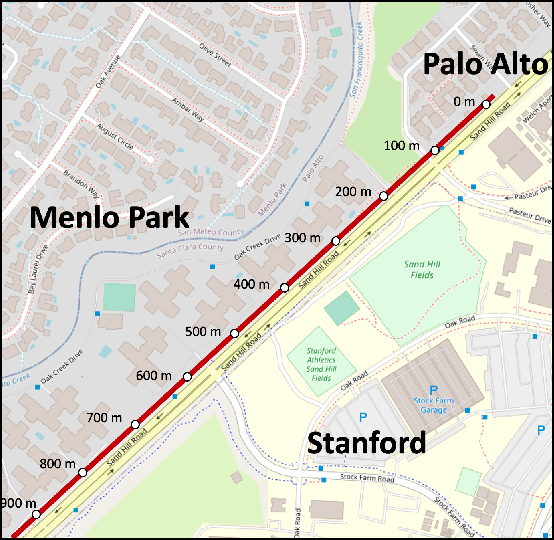

Abstract:Distributed Acoustic Sensing (DAS) that transforms city-wide fiber-optic cables into a large-scale strain sensing array has shown the potential to revolutionize urban traffic monitoring by providing a fine-grained, scalable, and low-maintenance monitoring solution. However, there are challenges that limit DAS's real-world usage: noise contamination and interference among closely traveling cars. To address the issues, we introduce a self-supervised U-Net model that can suppress background noise and compress car-induced DAS signals into high-resolution pulses through spatial deconvolution. To guide the design of the approach, we investigate the fiber response to vehicles through numerical simulation and field experiments. We show that the localized and narrow outputs from our model lead to accurate and highly resolved car position and speed tracking. We evaluate the effectiveness and robustness of our method through field recordings under different traffic conditions and various driving speeds. Our results show that our method can enhance the spatial-temporal resolution and better resolve closely traveling cars. The spatial deconvolution U-Net model also enables the characterization of large-size vehicles to identify axle numbers and estimate the vehicle length. Monitoring large-size vehicles also benefits imaging deep earth by leveraging the surface waves induced by the dynamic vehicle-road interaction.

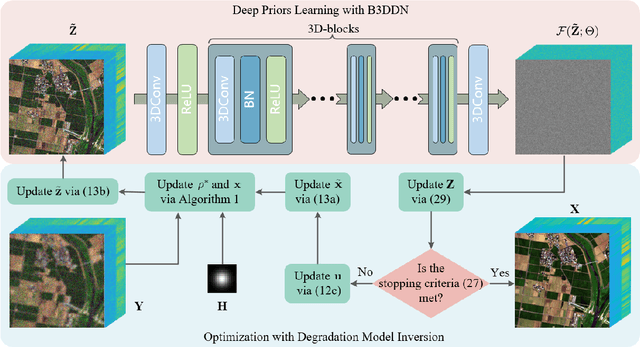

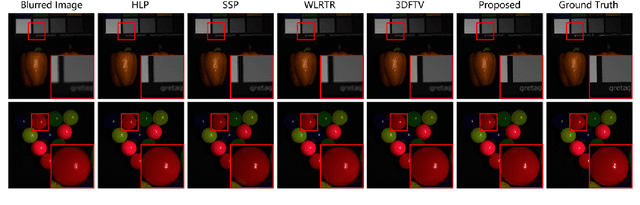

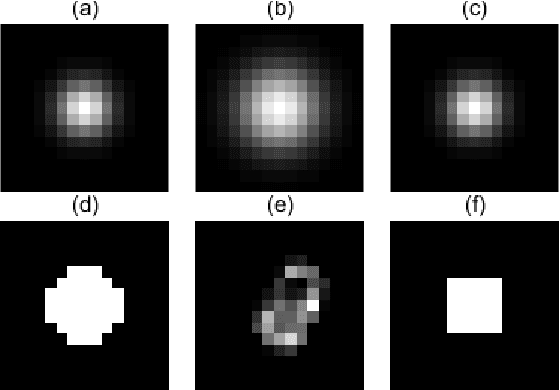

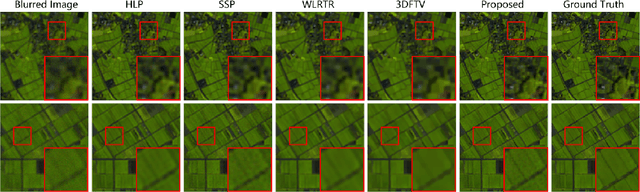

Tuning-free Plug-and-Play Hyperspectral Image Deconvolution with Deep Priors

Nov 28, 2022

Abstract:Deconvolution is a widely used strategy to mitigate the blurring and noise degradation of hyperspectral images (HSI) introduced by acquisition devices. This issue is usually addressed by solving an ill-posed inverse problem. While investigating proper image priors can enhance the deconvolution performance, it is not trivial to handcraft a powerful regularizer and to set the regularization parameters. To address these issues, in this paper we introduce a tuning-free Plug-and-Play (PnP) algorithm for HSI deconvolution. Specifically, we use the alternating direction method of multipliers (ADMM) to decompose the optimization problem into two iterative sub-problems. A flexible blind 3D denoising network (B3DDN) is designed to learn deep priors and to solve the denoising sub-problem with different noise levels. A measure of 3D residual whiteness is then investigated to adjust the penalty parameters when solving the quadratic sub-problems and to provide a stopping criterion. Experimental results on both simulated and real-world data with ground-truth demonstrate the superiority of the proposed method.

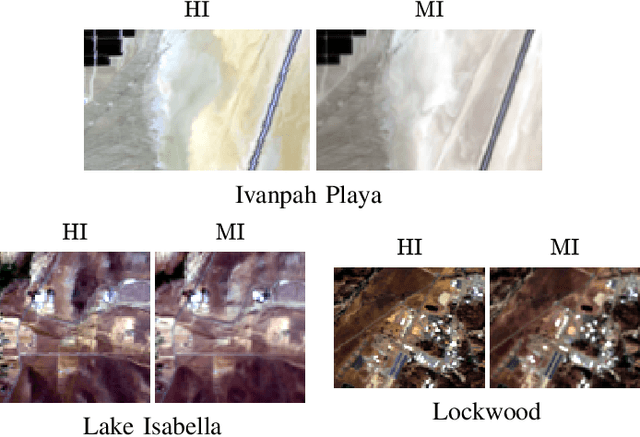

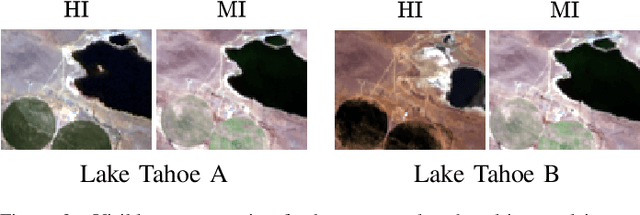

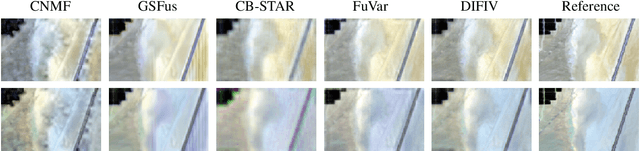

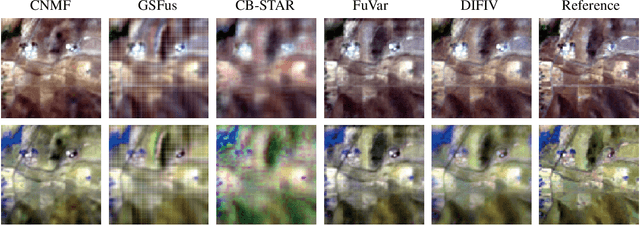

Deep Hyperspectral and Multispectral Image Fusion with Inter-image Variability

Aug 24, 2022

Abstract:Hyperspectral and multispectral image fusion allows us to overcome the hardware limitations of hyperspectral imaging systems inherent to their lower spatial resolution. Nevertheless, existing algorithms usually fail to consider realistic image acquisition conditions. This paper presents a general imaging model that considers inter-image variability of data from heterogeneous sources and flexible image priors. The fusion problem is stated as an optimization problem in the maximum a posteriori framework. We introduce an original image fusion method that, on the one hand, solves the optimization problem accounting for inter-image variability with an iteratively reweighted scheme and, on the other hand, leverages lightweight CNN-based networks to learn realistic image priors from data. In addition, we propose a zero-shot strategy to directly learn the image-specific prior of the latent images in an unsupervised manner. The performance of the algorithm is illustrated with real data subject to inter-image variability.

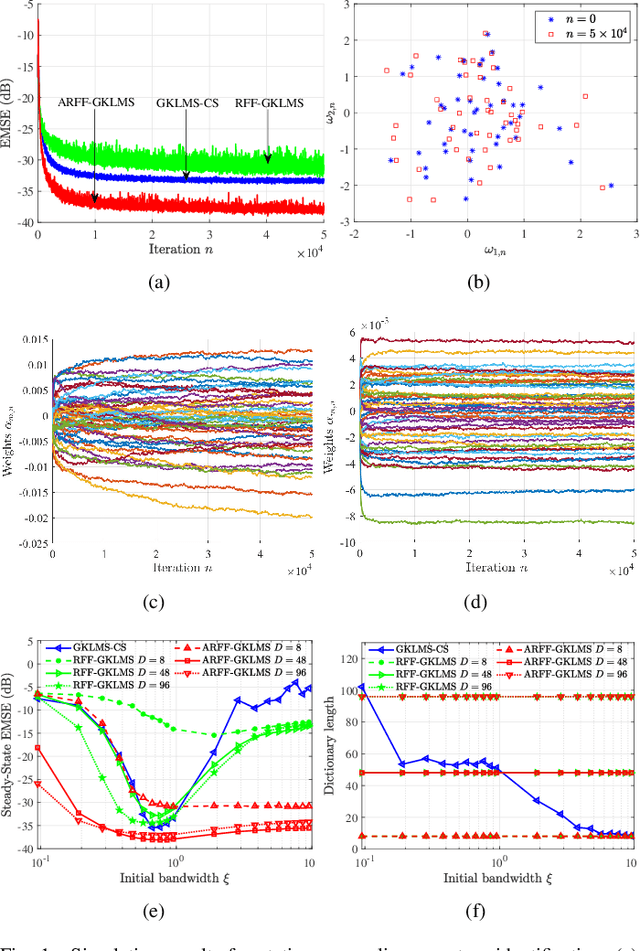

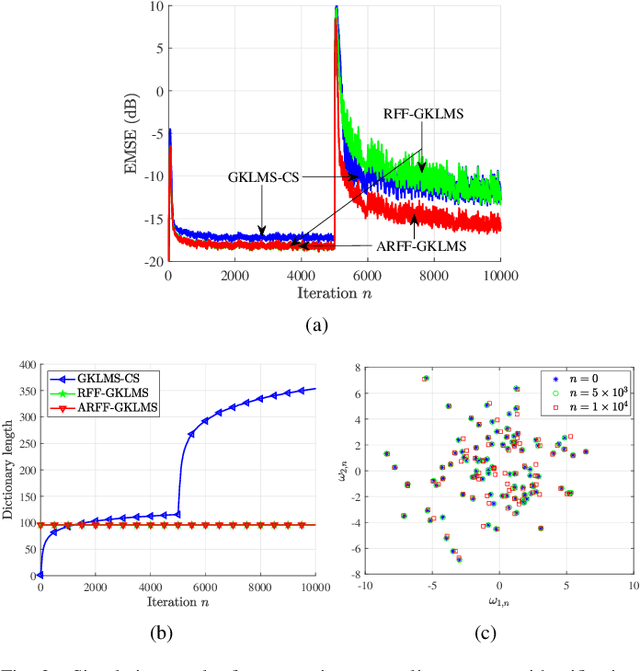

Adaptive Random Fourier Features Kernel LMS

Jul 14, 2022

Abstract:We propose the adaptive random Fourier features Gaussian kernel LMS (ARFF-GKLMS). Like most kernel adaptive filters based on stochastic gradient descent, this algorithm uses a preset number of random Fourier features to reduce computational cost. However, as an added degree of flexibility, it can adapt the inherent kernel bandwidth in the random Fourier features in an online manner. This adaptation mechanism alleviates the problem of selecting the kernel bandwidth beforehand and improves tracking in non-stationary environments. Simulation results confirm that the proposed algorithm achieves a performance improvement in terms of convergence rate, steady-state error and tracking ability over other kernel adaptive filters with preset kernel bandwidth.
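A rough sketch of an LMS filter on random Fourier features with an adjustable Gaussian bandwidth is given below. It is not the ARFF-GKLMS recursion from the paper: the class name `AdaptiveRFFKLMS`, its parameters, and in particular the bandwidth update (a plain stochastic-gradient step on the instantaneous squared error, clamped to stay positive) are assumptions made for illustration. The frequencies are stored as fixed standard-normal draws divided by `sigma`, which is what lets the bandwidth change online without redrawing the features.

```python
import math
import random

class AdaptiveRFFKLMS:
    """LMS on random Fourier features with an online-adjustable bandwidth (sketch)."""

    def __init__(self, dim, n_features=100, sigma=1.0, mu=0.5, mu_sigma=0.0, seed=0):
        rng = random.Random(seed)
        # Fixed standard-normal directions; effective frequencies are eps / sigma.
        self.eps = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_features)]
        self.phase = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
        self.theta = [0.0] * n_features
        self.sigma, self.mu, self.mu_sigma = sigma, mu, mu_sigma
        self.scale = math.sqrt(2.0 / n_features)

    def _projections(self, x):
        return [sum(e * xi for e, xi in zip(row, x)) for row in self.eps]

    def features(self, x):
        return [self.scale * math.cos(p / self.sigma + b)
                for p, b in zip(self._projections(x), self.phase)]

    def predict(self, x):
        return sum(t * z for t, z in zip(self.theta, self.features(x)))

    def update(self, x, d):
        """Process one (input, desired) pair and return the a priori error."""
        proj = self._projections(x)
        args = [p / self.sigma + b for p, b in zip(proj, self.phase)]
        z = [self.scale * math.cos(a) for a in args]
        err = d - sum(t * zi for t, zi in zip(self.theta, z))
        # Derivative of the filter output with respect to sigma (assumed update rule).
        dy_dsigma = sum(t * self.scale * math.sin(a) * p
                        for t, a, p in zip(self.theta, args, proj)) / self.sigma ** 2
        self.theta = [t + self.mu * err * zi for t, zi in zip(self.theta, z)]
        self.sigma = max(1e-3, self.sigma + self.mu_sigma * err * dy_dsigma)
        return err

# Usage: track a sine nonlinearity sample by sample.
filt = AdaptiveRFFKLMS(dim=1, n_features=50, mu=0.5, mu_sigma=0.05)
data_rng = random.Random(1)
for _ in range(200):
    u = data_rng.uniform(-3.0, 3.0)
    filt.update([u], math.sin(u))
```

With `mu_sigma=0.0` the class reduces to an ordinary fixed-bandwidth random Fourier features LMS, which makes it easy to compare the two behaviors on the same data.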

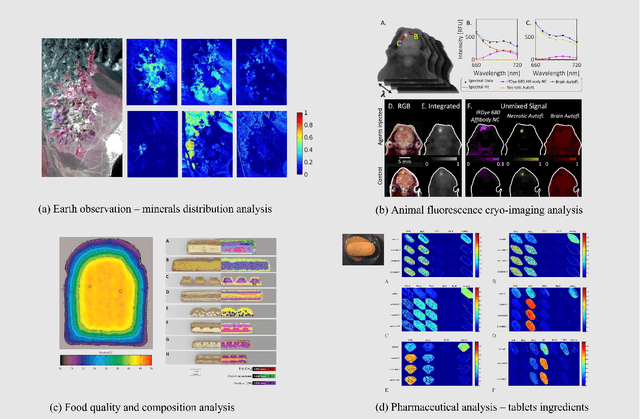

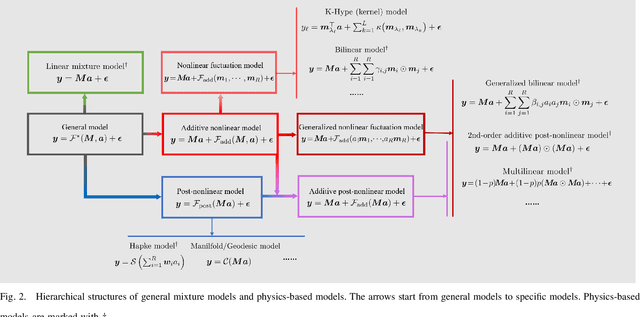

Integration of Physics-Based and Data-Driven Models for Hyperspectral Image Unmixing

Jun 11, 2022

Abstract:Spectral unmixing is one of the most important quantitative analysis tasks in hyperspectral data processing. Conventional physics-based models are characterized by clear interpretation. However, due to the complex mixture mechanism and limited nonlinearity modeling capacity, these models may not be accurate, especially when analyzing scenes with unknown physical characteristics. Data-driven methods have developed rapidly in recent years, in particular deep learning methods, as they possess superior capability in modeling complex and nonlinear systems. Simply transferring these methods as black-boxes to conduct unmixing may lead to low physical interpretability and generalization ability. Consequently, several contributions have been dedicated to integrating advantages of both physics-based models and data-driven methods. In this article, we present an overview of recent advances on this topic from several aspects, including deep neural network (DNN) structure design, prior capturing and loss design, and summarise these methods in a common mathematical optimization framework. In addition, relevant remarks and discussions are provided to further the understanding and prospective improvement of the methods. The related source codes and data are collected and made available at http://github.com/xiuheng-wang/awesome-hyperspectral-image-unmixing.

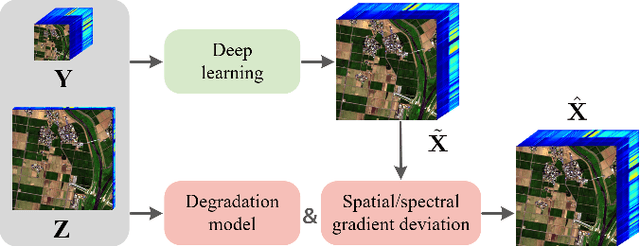

Hyperspectral Image Super-resolution with Deep Priors and Degradation Model Inversion

Jan 24, 2022

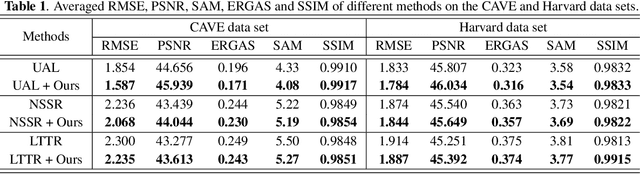

Abstract:To overcome inherent hardware limitations of hyperspectral imaging systems with respect to their spatial resolution, fusion-based hyperspectral image (HSI) super-resolution is attracting increasing attention. This technique aims to fuse a low-resolution (LR) HSI and a conventional high-resolution (HR) RGB image in order to obtain an HR HSI. Recently, deep learning architectures have been used to address the HSI super-resolution problem and have achieved remarkable performance. However, they ignore the degradation model even though this model has a clear physical interpretation and may help improve performance. We address this problem by proposing a method that, on the one hand, makes use of the linear degradation model in the data-fidelity term of the objective function and, on the other hand, utilizes the output of a convolutional neural network for designing a deep prior regularizer in spectral and spatial gradient domains. Experiments show the performance improvement achieved with this strategy.

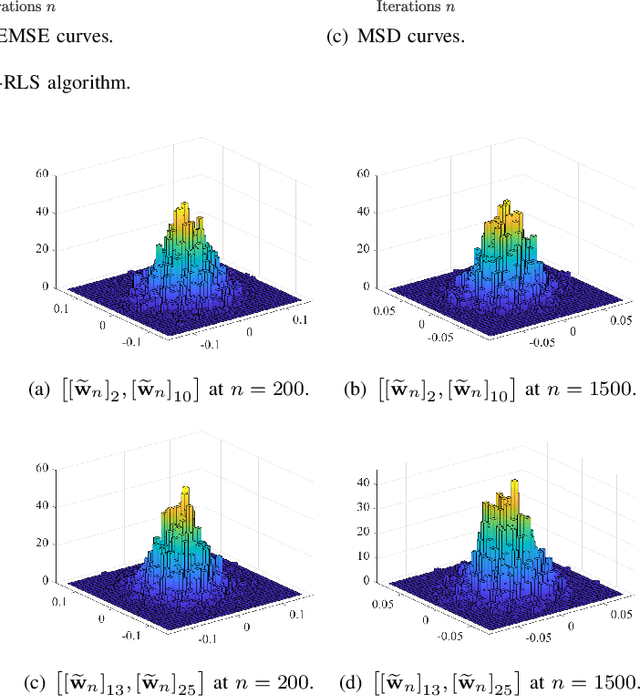

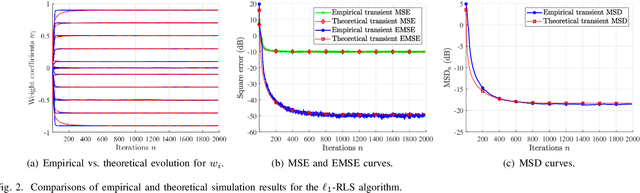

Transient Performance Analysis of the $\ell_1$-RLS

Sep 14, 2021

Abstract:The recursive least-squares algorithm with $\ell_1$-norm regularization ($\ell_1$-RLS) exhibits excellent performance in terms of convergence rate and steady-state error in the identification of sparse systems. Nevertheless, few works have studied its stochastic behavior, in particular its transient performance. In this letter, we derive analytical models of the transient behavior of the $\ell_1$-RLS in the mean and mean-square sense. Simulation results illustrate the accuracy of these models.
