Jingxiao Liu

Spatial Deep Deconvolution U-Net for Traffic Analyses with Distributed Acoustic Sensing

Dec 20, 2022

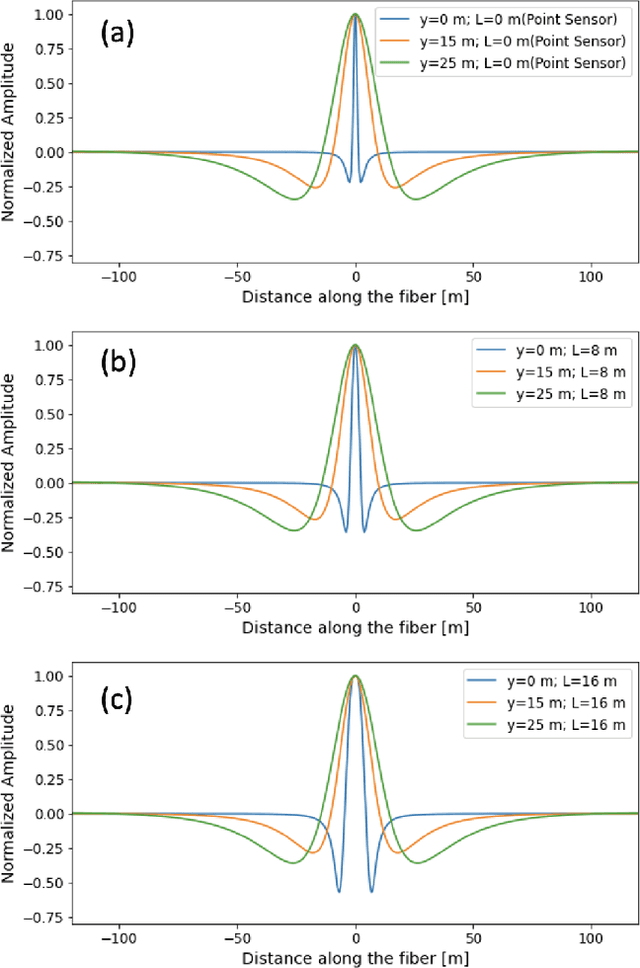

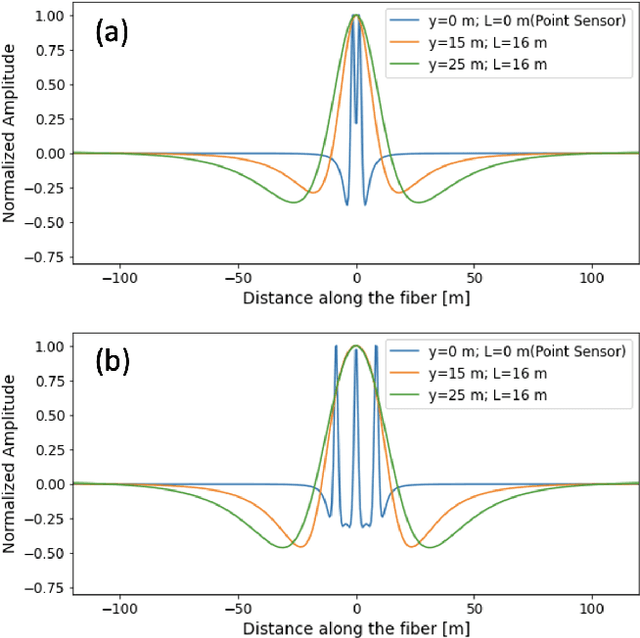

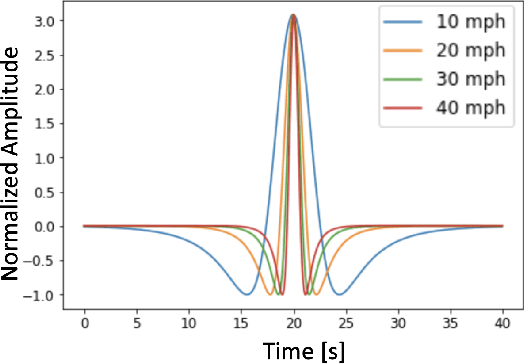

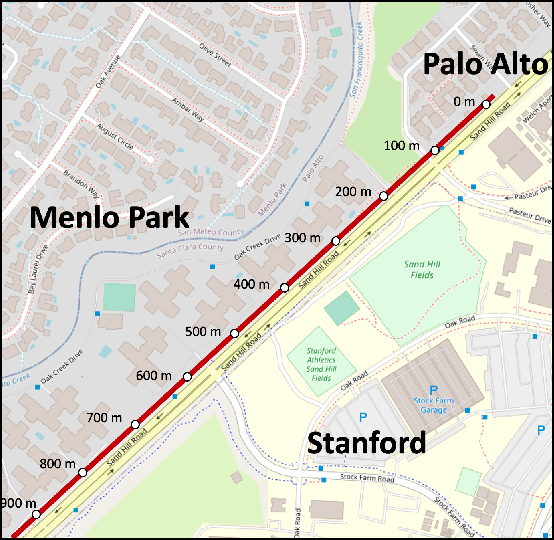

Abstract: Distributed Acoustic Sensing (DAS), which transforms city-wide fiber-optic cables into a large-scale strain sensing array, has shown the potential to revolutionize urban traffic monitoring by providing a fine-grained, scalable, and low-maintenance monitoring solution. However, two challenges limit DAS's real-world usage: noise contamination and interference among closely traveling cars. To address these issues, we introduce a self-supervised U-Net model that can suppress background noise and compress car-induced DAS signals into high-resolution pulses through spatial deconvolution. To guide the design of the approach, we investigate the fiber response to vehicles through numerical simulation and field experiments. We show that the localized and narrow outputs from our model lead to accurate and highly resolved car position and speed tracking. We evaluate the effectiveness and robustness of our method through field recordings under different traffic conditions and various driving speeds. Our results show that our method can enhance the spatial-temporal resolution and better resolve closely traveling cars. The spatial deconvolution U-Net model also enables the characterization of large-size vehicles to identify axle numbers and estimate the vehicle length. Monitoring large-size vehicles also benefits deep-earth imaging by leveraging the surface waves induced by the dynamic vehicle-road interaction.

GaitVibe+: Enhancing Structural Vibration-based Footstep Localization Using Temporary Cameras for In-home Gait Analysis

Dec 07, 2022

Abstract: In-home gait analysis is important for providing early diagnosis and adaptive treatments for individuals with gait disorders. Existing systems include wearables and pressure mats, but they have limited scalability. Recent studies have developed vision-based systems to enable scalable, accurate in-home gait analysis, but they face privacy concerns due to the exposure of people's appearances. Our prior work developed footstep-induced structural vibration sensing for gait monitoring, which is device-free, wide-ranged, and perceived as more privacy-friendly. Although it has succeeded in temporal gait event extraction, it shows limited performance for spatial gait parameter estimation due to imprecise footstep localization. In particular, the localization error mainly comes from the estimation error of the wave arrival time at the vibration sensors and its propagation to wave velocity estimations. Therefore, we present GaitVibe+, a vibration-based footstep localization method fused with temporarily installed cameras for in-home gait analysis. Our method has two stages: fusion and operating. In the fusion stage, both cameras and vibration sensors are installed to record only a few trials of the subject's footstep data, through which we characterize the uncertainty in wave arrival time and model the wave velocity profiles for the given structure. In the operating stage, we remove the cameras to preserve privacy at home. The footstep localization is conducted by estimating the time difference of arrival (TDoA) over multiple vibration sensors, whose accuracy is improved through the reduced uncertainty and velocity modeling during the fusion stage. We evaluate GaitVibe+ through a real-world experiment with 50 walking trials. With only 3 trials of multi-modal fusion, our approach has an average localization error of 0.22 meters, which reduces the spatial gait parameter error from 111% to 27%.
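To make the TDoA step in the abstract above concrete, here is a minimal sketch of footstep localization by grid search. It is not the GaitVibe+ implementation: it assumes a single, known, constant wave velocity (the paper instead models velocity profiles during the fusion stage), and the function name `tdoa_locate`, the sensor layout, and all parameter values are hypothetical.

```python
import math

def tdoa_locate(sensors, arrival_times, velocity, extent, step=0.05):
    """Grid-search the 2D point whose predicted TDoAs best match the measured ones.

    sensors       -- list of (x, y) sensor positions in meters (assumed known)
    arrival_times -- wave arrival time at each sensor in seconds
    velocity      -- assumed constant wave speed in m/s (a simplification)
    extent        -- ((x_min, x_max), (y_min, y_max)) search area in meters
    """
    ref_x, ref_y = sensors[0]
    # Measured time differences relative to the first (reference) sensor.
    measured = [t - arrival_times[0] for t in arrival_times[1:]]
    (x_min, x_max), (y_min, y_max) = extent
    nx = int(round((x_max - x_min) / step)) + 1
    ny = int(round((y_max - y_min) / step)) + 1
    best, best_cost = None, math.inf
    for i in range(nx):
        x = x_min + i * step
        for j in range(ny):
            y = y_min + j * step
            d_ref = math.hypot(x - ref_x, y - ref_y)
            cost = 0.0
            for (sx, sy), m in zip(sensors[1:], measured):
                predicted = (math.hypot(x - sx, y - sy) - d_ref) / velocity
                cost += (predicted - m) ** 2  # squared TDoA residual
            if cost < best_cost:
                best, best_cost = (x, y), cost
    return best

# Synthetic check: four corner sensors, one footstep, noise-free arrivals.
sensors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
true_xy = (1.5, 1.0)
velocity = 300.0  # hypothetical value, m/s
arrivals = [math.hypot(true_xy[0] - sx, true_xy[1] - sy) / velocity
            for sx, sy in sensors]
estimate = tdoa_locate(sensors, arrivals, velocity, ((0.0, 4.0), (0.0, 3.0)))
```

Because only time differences enter the cost, the unknown footstep onset time cancels out; errors in the arrival-time picks or in the assumed velocity shift the minimum, which is the error source the abstract describes.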

A hierarchical semantic segmentation framework for computer vision-based bridge damage detection

Jul 18, 2022

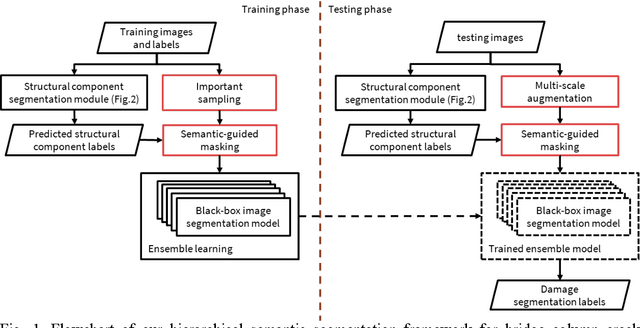

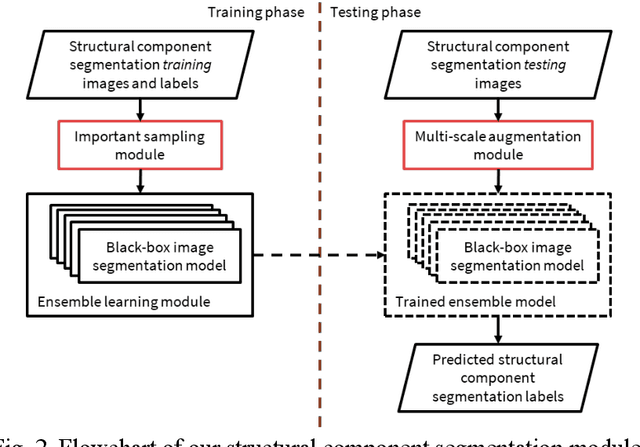

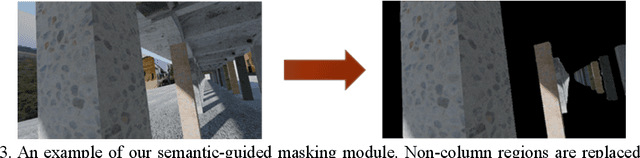

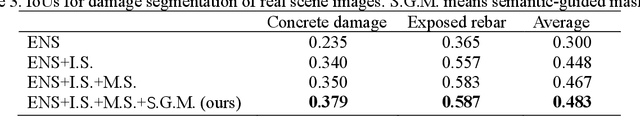

Abstract: Computer vision-based damage detection using remote cameras and unmanned aerial vehicles (UAVs) enables efficient and low-cost bridge health monitoring that reduces labor costs and the need for sensor installation and maintenance. By leveraging recent semantic image segmentation approaches, we are able to find regions of critical structural components and recognize damage at the pixel level using images as the only input. However, existing methods perform poorly when detecting small damages (e.g., cracks and exposed rebars) and thin objects with limited image samples, especially when the components of interest are highly imbalanced. To this end, this paper introduces a semantic segmentation framework that imposes the hierarchical semantic relationship between component category and damage types. For example, certain concrete cracks are only present on bridge columns, and therefore the non-column region will be masked out when detecting such damages. In this way, the damage detection model can focus on learning features from possibly damaged regions only and avoid the effects of other irrelevant regions. We also utilize multi-scale augmentation that provides views at different scales, which preserves the contextual information of each image without losing the ability to handle small and thin objects. Furthermore, the proposed framework employs importance sampling that repeatedly samples images containing rare components (e.g., railway sleepers and exposed rebars) to provide more data samples, which addresses the imbalanced data challenge.
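The column-masking example in the abstract above can be illustrated with a small sketch. This is only one plausible reading, applied at prediction time on per-pixel probabilities; the paper may instead mask inputs or features during training. The function name `mask_damage_by_component`, the threshold, and the toy arrays are hypothetical.

```python
def mask_damage_by_component(damage_prob, component_mask, threshold=0.5):
    """Keep a damage prediction only where its parent component is present.

    damage_prob    -- 2D list of per-pixel damage probabilities
    component_mask -- 2D list of 0/1 flags, 1 where the component (e.g. column) is
    """
    return [
        [1 if (m and p >= threshold) else 0 for p, m in zip(prob_row, mask_row)]
        for prob_row, mask_row in zip(damage_prob, component_mask)
    ]

# Toy 2x2 image: high crack scores outside the column region are suppressed.
crack_prob = [[0.9, 0.2],
              [0.8, 0.7]]
column_mask = [[1, 0],
               [0, 1]]
crack_pred = mask_damage_by_component(crack_prob, column_mask)
```

The pixel with probability 0.8 is dropped because it lies outside the column mask, which is how the hierarchy removes false positives from irrelevant regions.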

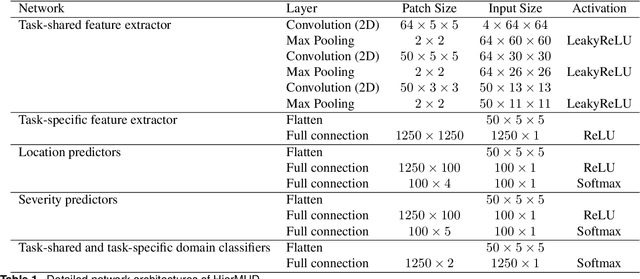

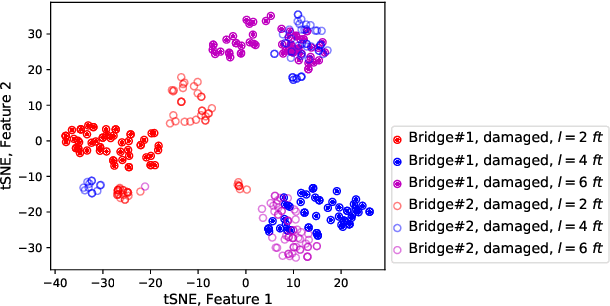

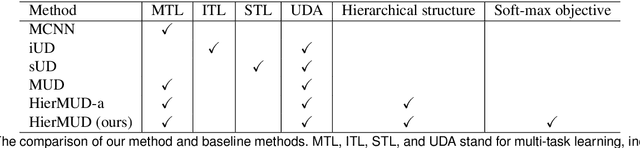

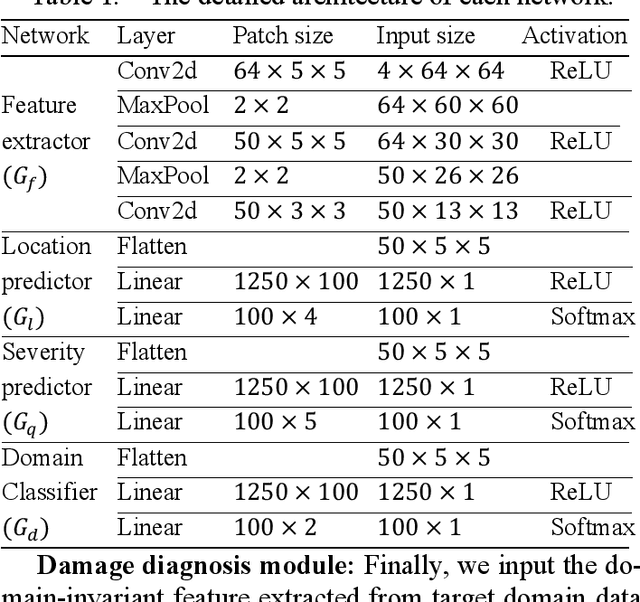

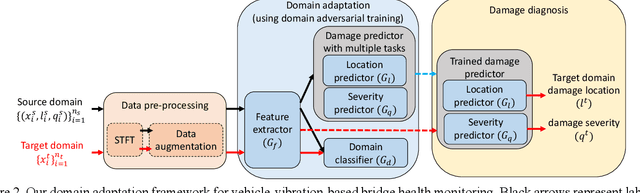

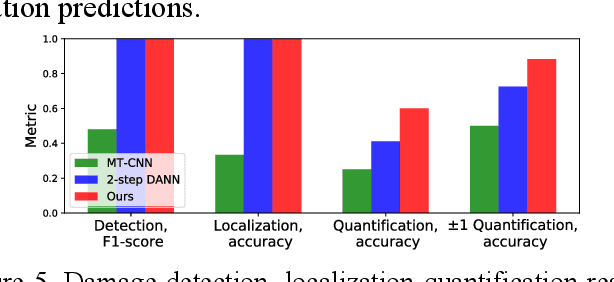

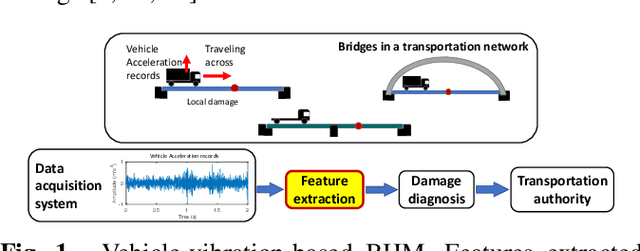

HierMUD: Hierarchical Multi-task Unsupervised Domain Adaptation between Bridges for Drive-by Damage Diagnosis

Jul 23, 2021

Abstract: Monitoring bridge health using vibrations of drive-by vehicles has various benefits, such as no need for directly installing and maintaining sensors on the bridge. However, many of the existing drive-by monitoring approaches are based on supervised learning models that require labeled data from every bridge of interest, which is expensive and time-consuming, if not impossible, to obtain. To this end, we introduce a new framework that transfers the model learned from one bridge to diagnose damage in another bridge without any labels from the target bridge. Our framework trains a hierarchical neural network model in an adversarial way to extract task-shared and task-specific features that are informative to multiple diagnostic tasks and invariant across multiple bridges. We evaluate our framework on experimental data collected from 2 bridges and 3 vehicles. We achieve accuracies of 95% for damage detection, 93% for localization, and up to 72% for quantification, which is roughly a twofold improvement over baseline methods.

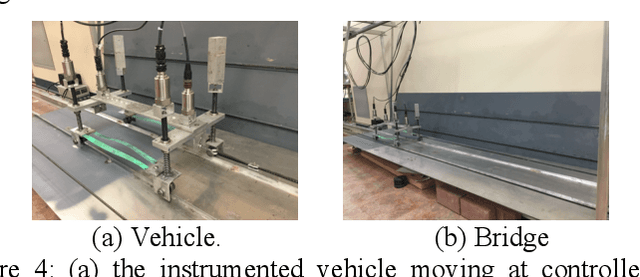

Knowledge transfer between bridges for drive-by monitoring using adversarial and multi-task learning

Jun 05, 2020

Abstract: Monitoring bridge health using the vibrations of drive-by vehicles has various benefits, such as low cost and no need for direct installation or on-site maintenance of equipment on the bridge. However, many such approaches require labeled data from every bridge, which is expensive and time-consuming, if not impossible, to obtain. This is further exacerbated by having multiple diagnostic tasks, such as damage quantification and localization. One way to address this issue is to directly apply the supervised model trained for one bridge to other bridges, although this may significantly reduce the accuracy because of distribution mismatch between different bridges' data. To alleviate these problems, we introduce a transfer learning framework using domain-adversarial training and multi-task learning to detect, localize, and quantify damage. Specifically, we train a deep network in an adversarial way to learn features that are 1) sensitive to damage and 2) invariant to different bridges. In addition, to reduce the error propagation from one task to the next, our framework learns shared features for all the tasks using multi-task learning. We evaluate our framework using lab-scale experiments with two different bridges. On average, our framework achieves 94%, 97% and 84% accuracy for damage detection, localization and quantification, respectively, within one damage severity level.
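For readers unfamiliar with domain-adversarial multi-task training, the generic objective can be written as below. This is the standard textbook form, not necessarily the exact loss used in the paper. All symbols are assumptions introduced here: $\theta_f$ are the shared feature-extractor parameters, $\theta_y^{(k)}$ the parameters of task head $k$ (detection, localization, quantification, so $K = 3$), $\theta_d$ the domain (bridge) discriminator parameters, and $\lambda$ a trade-off weight.

$$
\min_{\theta_f,\,\{\theta_y^{(k)}\}} \; \max_{\theta_d} \;\; \sum_{k=1}^{K} \mathcal{L}_y^{(k)}\big(\theta_f, \theta_y^{(k)}\big) \;-\; \lambda\, \mathcal{L}_d\big(\theta_f, \theta_d\big)
$$

The discriminator minimizes the domain loss $\mathcal{L}_d$ (it tries to tell the bridges apart), while the feature extractor minimizes the task losses and maximizes $\mathcal{L}_d$, which pushes the features toward being damage-sensitive yet bridge-invariant.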

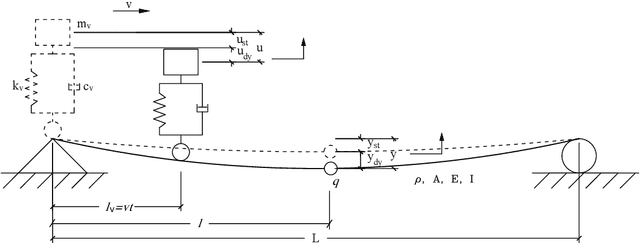

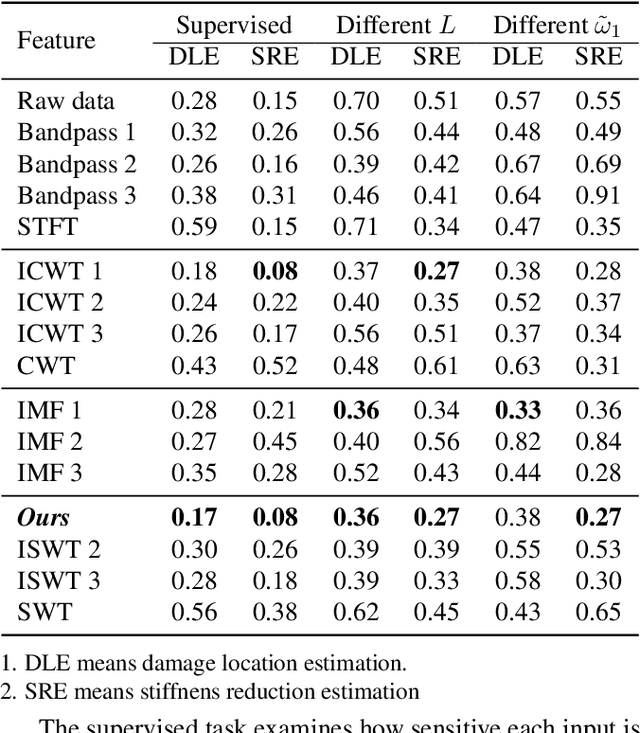

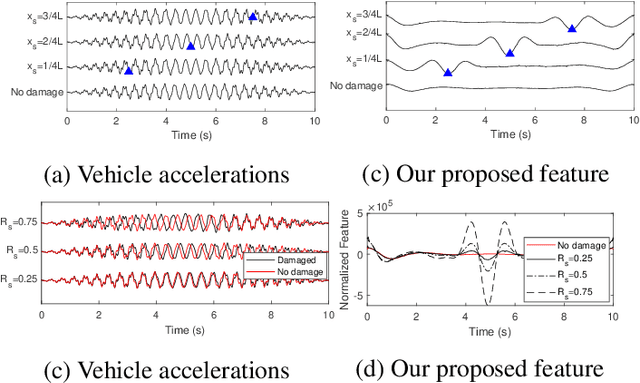

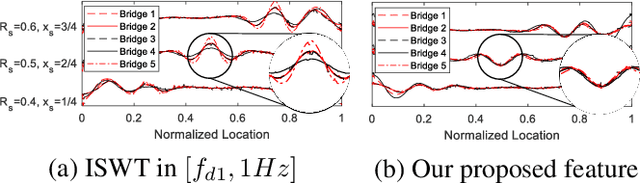

Damage-sensitive and domain-invariant feature extraction for vehicle-vibration-based bridge health monitoring

Feb 06, 2020

Abstract: We introduce a physics-guided signal processing approach to extract a damage-sensitive and domain-invariant (DS & DI) feature from acceleration response data of a vehicle traveling over a bridge to assess bridge health. Motivated by the benefits of indirect sensing methods, such as low cost and low maintenance, vehicle-vibration-based bridge health monitoring has been studied to efficiently monitor bridges in real time. Yet applying this approach is challenging because 1) physics-based features extracted manually are generally not damage-sensitive, and 2) features from machine learning techniques are often not applicable to different bridges. Thus, we formulate a vehicle-bridge interaction system model and find a physics-guided DS & DI feature, which can be extracted using the synchrosqueezed wavelet transform, which represents non-stationary signals as intrinsic-mode-type components. We validate the effectiveness of the proposed feature with simulated experiments. Compared to conventional time- and frequency-domain features, our feature provides the best damage quantification and localization results across different bridges in five of six experiments.
