Mario Bergés

Comparative Field Deployment of Reinforcement Learning and Model Predictive Control for Residential HVAC

Oct 01, 2025

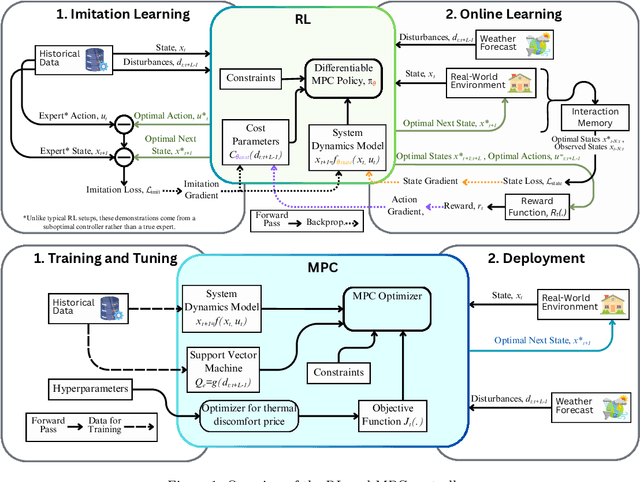

Abstract: Advanced control strategies like Model Predictive Control (MPC) offer significant energy savings for HVAC systems but often require substantial engineering effort, limiting scalability. Reinforcement Learning (RL) promises greater automation and adaptability, yet its practical application in real-world residential settings remains largely undemonstrated, facing challenges related to safety, interpretability, and sample efficiency. To investigate these practical issues, we performed a direct comparison of an MPC and a model-based RL controller, with each controller deployed for a one-month period in an occupied house with a heat pump system in West Lafayette, Indiana. This investigation aimed to explore scalability of the chosen RL and MPC implementations while ensuring safety and comparability. The advanced controllers were evaluated against each other and against the existing controller. RL achieved substantial energy savings (22% relative to the existing controller), slightly exceeding MPC's savings (20%), albeit with modestly higher occupant discomfort. However, when energy savings were normalized for the level of comfort provided, MPC demonstrated superior performance. This study's empirical results show that while RL reduces engineering overhead, it introduces practical trade-offs in model accuracy and operational robustness. The key lessons learned concern the difficulties of safe controller initialization, navigating the mismatch between control actions and their practical implementation, and maintaining the integrity of online learning in a live environment. These insights pinpoint the essential research directions needed to advance RL from a promising concept to a truly scalable HVAC control solution.

Street View Sociability: Interpretable Analysis of Urban Social Behavior Across 15 Cities

Aug 08, 2025

Abstract: Designing socially active streets has long been a goal of urban planning, yet existing quantitative research largely measures pedestrian volume rather than the quality of social interactions. We hypothesize that street view imagery -- an inexpensive data source with global coverage -- contains latent social information that can be extracted and interpreted through established social science theory. As a proof of concept, we analyzed 2,998 street view images from 15 cities using a multimodal large language model guided by Mehta's taxonomy of passive, fleeting, and enduring sociability -- one illustrative example of a theory grounded in urban design that could be substituted or complemented by other sociological frameworks. We then used linear regression models, controlling for factors like weather, time of day, and pedestrian counts, to test whether the inferred sociability measures correlate with city-level place attachment scores from the World Values Survey and with environmental predictors (e.g., green, sky, and water view indices) derived from individual street view images. Results aligned with long-standing urban planning theory: the sky view index was associated with all three sociability types, the green view index predicted enduring sociability, and place attachment was positively associated with fleeting sociability. These results provide preliminary evidence that street view images can be used to infer relationships between specific types of social interactions and built environment variables. Further research could establish street view imagery as a scalable, privacy-preserving tool for studying urban sociability, enabling cross-cultural theory testing and evidence-based design of socially vibrant cities.

Can Time-Series Foundation Models Perform Building Energy Management Tasks?

Jun 12, 2025

Abstract: Building energy management (BEM) tasks require processing and learning from a variety of time-series data. Existing solutions rely on bespoke task- and data-specific models to perform these tasks, limiting their broader applicability. Inspired by the transformative success of Large Language Models (LLMs), Time-Series Foundation Models (TSFMs), trained on diverse datasets, have the potential to change this. Were TSFMs to achieve a level of generalizability across tasks and contexts akin to LLMs, they could fundamentally address the scalability challenges pervasive in BEM. To understand where they stand today, we evaluate TSFMs across four dimensions: (1) generalizability in zero-shot univariate forecasting, (2) forecasting with covariates for thermal behavior modeling, (3) zero-shot representation learning for classification tasks, and (4) robustness to performance metrics and varying operational conditions. Our results reveal that TSFMs exhibit *limited* generalizability, performing only marginally better than statistical models on unseen datasets and modalities for univariate forecasting. Similarly, inclusion of covariates in TSFMs does not yield performance improvements, and their performance remains inferior to conventional models that utilize covariates. While TSFMs generate effective zero-shot representations for downstream classification tasks, they may remain inferior to statistical models in forecasting when statistical models perform test-time fitting. Moreover, TSFMs' forecasting performance is sensitive to evaluation metrics, and they struggle in more complex building environments compared to statistical models. These findings underscore the need for targeted advancements in TSFM design, particularly their handling of covariates and incorporating context and temporal dynamics into prediction mechanisms, to develop more adaptable and scalable solutions for BEM.

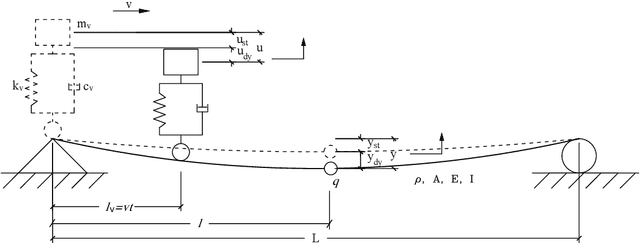

Bridging the Reality Gap in Digital Twins with Context-Aware, Physics-Guided Deep Learning

May 17, 2025

Abstract: Digital twins (DTs) enable powerful predictive analytics, but persistent discrepancies between simulations and real systems--known as the reality gap--undermine their reliability. Coined in robotics, the term now applies to DTs, where discrepancies stem from context mismatches, cross-domain interactions, and multi-scale dynamics. Among these, context mismatch is pressing and underexplored, as DT accuracy depends on capturing operational context, often only partially observable. However, DTs have a key advantage: simulators can systematically vary contextual factors and explore scenarios difficult or impossible to observe empirically, informing inference and model alignment. While sim-to-real transfer techniques such as domain adaptation show promise in robotics, their application to DTs poses two key challenges. First, unlike one-time policy transfers, DTs require continuous calibration across an asset's lifecycle--demanding structured information flow, timely detection of out-of-sync states, and integration of historical and new data. Second, DTs often perform inverse modeling, inferring latent states or faults from observations that may reflect multiple evolving contexts. These needs strain purely data-driven models and risk violating physical consistency. Though some approaches preserve validity via reduced-order models, most domain adaptation techniques still lack such constraints. To address this, we propose a Reality Gap Analysis (RGA) module for DTs that continuously integrates new sensor data, detects misalignments, and recalibrates DTs via a query-response framework. Our approach fuses domain-adversarial deep learning with reduced-order simulator guidance to improve context inference and preserve physical consistency. We illustrate the RGA module in a structural health monitoring case study on a steel truss bridge in Pittsburgh, PA, showing faster calibration and better real-world alignment.

State-of-the-Art Review: The Use of Digital Twins to Support Artificial Intelligence-Guided Predictive Maintenance

Jun 19, 2024

Abstract: In recent years, predictive maintenance (PMx) has gained prominence for its potential to enhance efficiency, automation, accuracy, and cost-effectiveness while reducing human involvement. Importantly, PMx has evolved in tandem with digital advancements, such as Big Data and the Internet of Things (IoT). These technological strides have enabled Artificial Intelligence (AI) to revolutionize PMx processes, with increasing capacities for real-time automation of monitoring, analysis, and prediction tasks. However, PMx still faces challenges such as poor explainability and sample inefficiency in data-driven methods and high complexity in physics-based models, hindering broader adoption. This paper posits that Digital Twins (DTs) can be integrated into PMx to overcome these challenges, paving the way for more automated PMx applications across various stakeholders. Despite their potential, current DTs have not fully matured to bridge existing gaps. Our paper provides a comprehensive roadmap for DT evolution, addressing current limitations to foster large-scale automated PMx progression. We structure our approach in three stages: First, we reference prior work where we identified and defined the Information Requirements (IRs) and Functional Requirements (FRs) for PMx, forming the blueprint for a unified framework. Second, we conduct a literature review to assess current DT applications integrating these IRs and FRs, revealing standardized DT models and tools that support automated PMx. Lastly, we highlight gaps in current DT implementations, particularly those IRs and FRs not fully supported, and outline the necessary components for a comprehensive, automated PMx system. Our paper concludes with research directions aimed at seamlessly integrating DTs into the PMx paradigm to achieve this ambitious vision.

Unmasking the Role of Remote Sensors in Comfort, Energy and Demand Response

Apr 19, 2024

Abstract: In single-zone multi-room houses (SZMRHs), temperature controls rely on a single probe near the thermostat, resulting in temperature discrepancies that cause thermal discomfort and energy waste. Augmenting smart thermostats (STs) with per-room sensors has gained acceptance by major ST manufacturers. This paper leverages additional sensory information to empirically characterize the services provided by buildings, including thermal comfort, energy efficiency, and demand response (DR). Utilizing room-level time-series data from 1,000 houses, metadata from 110,000 houses across the United States, and data from two real-world testbeds, we examine the limitations of SZMRHs and explore the potential of remote sensors. We discovered that comfortable DR durations (CDRDs) for rooms are typically 70% longer or 40% shorter than for the room with the thermostat. When averaging, rooms at the control temperature's bounds typically deviate by about -3°F to 2.5°F from the average. Moreover, in 95% of houses, we identified rooms experiencing notably higher solar gains compared to the rest of the rooms, while 85% and 70% of houses demonstrated lower heat input and poor insulation, respectively. Lastly, it became evident that the consumption of cooling energy escalates with the increase in the number of sensors, whereas heating usage experiences fluctuations ranging from -19% to +25%. This study serves as a benchmark for assessing the thermal comfort and DR services in the existing housing stock, while also highlighting the energy efficiency impacts of sensing technologies. Our approach sets the stage for more granular, precise control strategies of SZMRHs.

State-of-the-Art Review and Synthesis: A Requirement-based Roadmap for Standardized Predictive Maintenance Automation Using Digital Twin Technologies

Nov 13, 2023

Abstract: Recent digital advances have popularized predictive maintenance (PMx), offering enhanced efficiency, automation, accuracy, cost savings, and independence in maintenance. Yet, it continues to face numerous limitations such as poor explainability, sample inefficiency of data-driven methods, complexity of physics-based methods, and limited generalizability and scalability of knowledge-based methods. This paper proposes leveraging Digital Twins (DTs) to address these challenges and enable automated PMx adoption at larger scales. While we argue that DTs have this transformative potential, they have not yet reached the level of maturity needed to bridge these gaps in a standardized way. Without a standard definition for such evolution, this transformation lacks a solid foundation upon which to base its development. This paper provides a requirement-based roadmap supporting standardized PMx automation using DT technologies. A systematic approach comprising two primary stages is presented. First, we methodically identify the Informational Requirements (IRs) and Functional Requirements (FRs) for PMx, which serve as a foundation from which any unified framework must emerge. Our approach to defining and using IRs and FRs to form the backbone of any PMx DT is supported by the track record of IRs and FRs being successfully used as blueprints in other areas, such as for product development within the software industry. Second, we conduct a thorough literature review spanning fields to determine the ways in which these IRs and FRs are currently being used within DTs, enabling us to point to the specific areas where further research is warranted to support the progress and maturation of requirement-based PMx DTs.

HierMUD: Hierarchical Multi-task Unsupervised Domain Adaptation between Bridges for Drive-by Damage Diagnosis

Jul 23, 2021

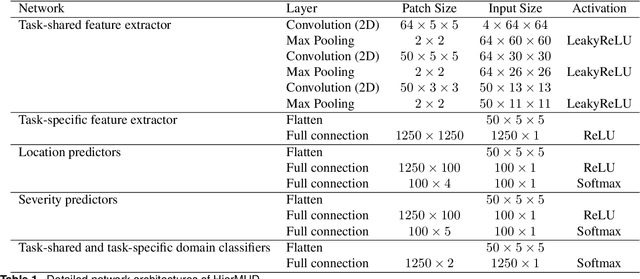

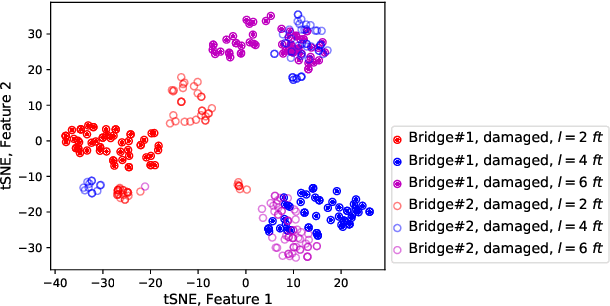

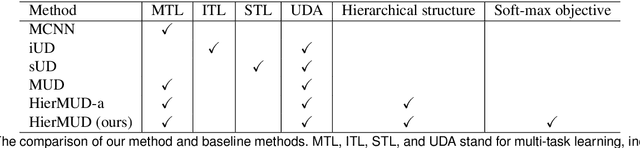

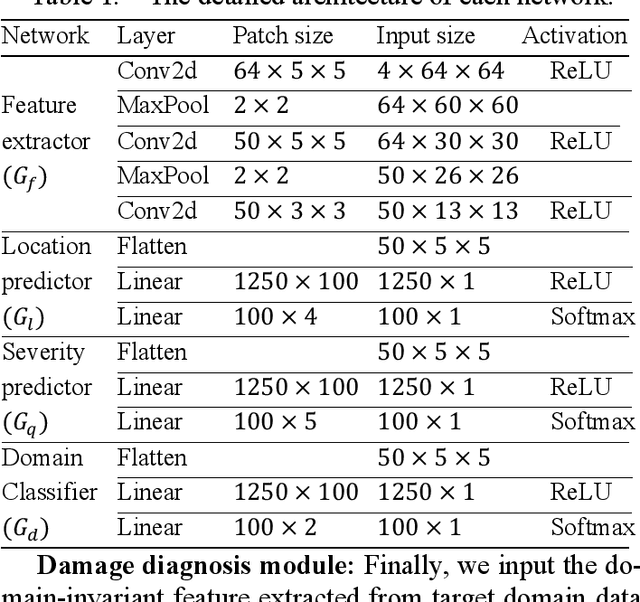

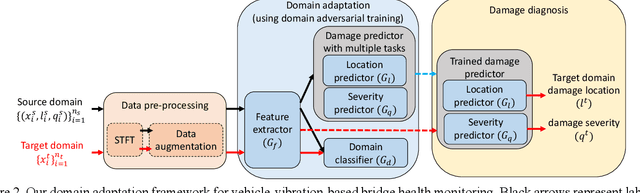

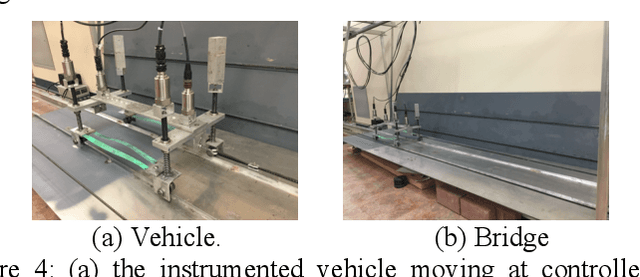

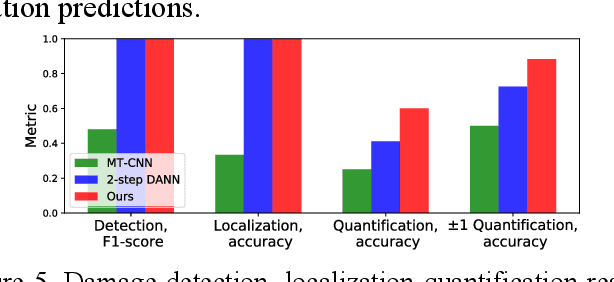

Abstract: Monitoring bridge health using vibrations of drive-by vehicles has various benefits, such as no need for directly installing and maintaining sensors on the bridge. However, many of the existing drive-by monitoring approaches are based on supervised learning models that require labeled data from every bridge of interest, which is expensive and time-consuming, if not impossible, to obtain. To this end, we introduce a new framework that transfers the model learned from one bridge to diagnose damage in another bridge without any labels from the target bridge. Our framework trains a hierarchical neural network model in an adversarial way to extract task-shared and task-specific features that are informative to multiple diagnostic tasks and invariant across multiple bridges. We evaluate our framework on experimental data collected from 2 bridges and 3 vehicles. We achieve accuracies of 95% for damage detection, 93% for localization, and up to 72% for quantification, which are ~2 times improvements over baseline methods.

Knowledge transfer between bridges for drive-by monitoring using adversarial and multi-task learning

Jun 05, 2020

Abstract: Monitoring bridge health using the vibrations of drive-by vehicles has various benefits, such as low cost and no need for direct installation or on-site maintenance of equipment on the bridge. However, many such approaches require labeled data from every bridge, which is expensive and time-consuming, if not impossible, to obtain. This is further exacerbated by having multiple diagnostic tasks, such as damage quantification and localization. One way to address this issue is to directly apply the supervised model trained for one bridge to other bridges, although this may significantly reduce the accuracy because of distribution mismatch between different bridges' data. To alleviate these problems, we introduce a transfer learning framework using domain-adversarial training and multi-task learning to detect, localize and quantify damage. Specifically, we train a deep network in an adversarial way to learn features that are 1) sensitive to damage and 2) invariant to different bridges. In addition, to improve the error propagation from one task to the next, our framework learns shared features for all the tasks using multi-task learning. We evaluate our framework using lab-scale experiments with two different bridges. On average, our framework achieves 94%, 97% and 84% accuracy for damage detection, localization and quantification, respectively, within one damage severity level.

Incremental Real-Time Personalization in Human Activity Recognition Using Domain Adaptive Batch Normalization

May 25, 2020

Abstract: Human Activity Recognition (HAR) from devices like smartphone accelerometers is a fundamental problem in ubiquitous computing. Machine learning based recognition models often perform poorly when applied to new users that were not part of the training data. Previous work has addressed this challenge by personalizing general recognition models to the unique motion pattern of a new user in a static batch setting. They require target user data to be available upfront. The more challenging online setting has received less attention. No samples from the target user are available in advance, but they arrive sequentially. Additionally, the user's motion pattern may change over time. Thus, adapting to new and forgetting old information must be traded off. Finally, the target user should not have to do any work to use the recognition system by, say, labeling any activities. Our work addresses these challenges by proposing an unsupervised online domain adaptation algorithm. Both classification and personalization happen continuously and incrementally in real-time. Our solution works by aligning the feature distribution of all the subjects, sources and target, within deep neural network layers. Experiments with 44 subjects show accuracy improvements of up to 14% for some individuals. Median improvement is 4%.
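The trade-off between adapting to new data and forgetting old data that this abstract describes can be illustrated with a generic running-statistics normalizer. The sketch below is not the paper's algorithm: it is a minimal, label-free example in plain Python, and the class name `OnlineFeatureNormalizer`, the `momentum` parameter, and the default values are all assumptions made for illustration. Each incoming sample nudges a per-feature mean and variance through an exponential moving average, so older samples gradually lose influence.

```python
class OnlineFeatureNormalizer:
    """Hypothetical helper: per-feature running mean/variance for streaming data."""

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        # Start from a neutral "source" state: zero mean, unit variance.
        self.mean = [0.0] * num_features
        self.var = [1.0] * num_features
        self.momentum = momentum  # higher = adapt faster, forget faster
        self.eps = eps            # keeps the division numerically stable

    def update(self, sample):
        """Fold one unlabeled sample into the running statistics."""
        a = self.momentum
        for i, x in enumerate(sample):
            delta = x - self.mean[i]
            self.mean[i] += a * delta
            # Exponentially weighted variance update.
            self.var[i] = (1.0 - a) * (self.var[i] + a * delta * delta)

    def normalize(self, sample):
        """Standardize a sample using the current running statistics."""
        return [
            (x - m) / (v + self.eps) ** 0.5
            for x, m, v in zip(sample, self.mean, self.var)
        ]


# Usage: statistics drift toward a new user's feature distribution.
normalizer = OnlineFeatureNormalizer(num_features=2)
for _ in range(200):
    normalizer.update([5.0, -2.0])
print(normalizer.mean)
```

A larger `momentum` tracks a changing motion pattern more quickly but retains less history; a smaller value does the opposite.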
