Xiuheng Wang

Anisotropic Tensor Deconvolution of Hyperspectral Images

Jan 16, 2026

Abstract: Hyperspectral image (HSI) deconvolution is a challenging ill-posed inverse problem, made difficult by the data's high dimensionality. We propose a parameter-parsimonious framework based on a low-rank Canonical Polyadic Decomposition (CPD) of the entire latent HSI $\mathbf{\mathcal{X}} \in \mathbb{R}^{P\times Q \times N}$. This approach recasts the problem from recovering a large-scale image with $PQN$ variables to estimating the CPD factors with $(P+Q+N)R$ variables. This model also enables a structure-aware, anisotropic Total Variation (TV) regularization applied only to the spatial factors, preserving the smooth spectral signatures. An efficient algorithm based on the Proximal Alternating Linearized Minimization (PALM) framework is developed to solve the resulting non-convex optimization problem. Experiments confirm the model's efficiency, showing a parameter reduction of over two orders of magnitude and a compelling trade-off between model compactness and reconstruction accuracy.
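The parameter counts quoted in this abstract can be checked with a few lines of Python. The sketch below is illustrative only: the function names and the sizes `P=256, Q=256, N=100, R=20` are assumptions, not values from the paper. The `tv_l1` helper shows one common form of anisotropic TV (the sum of absolute first differences of a factor column), which may differ from the paper's exact regularizer.

```python
def cpd_parameter_counts(P, Q, N, R):
    """Return (full, cpd, ratio): unknowns in the full image vs. in the CPD factors."""
    full = P * Q * N            # one unknown per voxel of the latent HSI
    cpd = (P + Q + N) * R       # three factor matrices with R columns each
    return full, cpd, full / cpd


def cpd_entry(A, B, C, p, q, n):
    """Entry X[p, q, n] of a rank-R CPD: sum over r of A[p][r] * B[q][r] * C[n][r]."""
    return sum(a * b * c for a, b, c in zip(A[p], B[q], C[n]))


def tv_l1(column):
    """Anisotropic (l1) total variation of one factor column: sum of |first differences|."""
    return sum(abs(column[i + 1] - column[i]) for i in range(len(column) - 1))


# Hypothetical sizes, chosen only for illustration.
full, cpd, ratio = cpd_parameter_counts(P=256, Q=256, N=100, R=20)
print(full, cpd, round(ratio, 1))
```

With these assumed sizes the factor model has 12,240 unknowns against 6,553,600 for the full image, a reduction of more than two orders of magnitude.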

Riemannian Change Point Detection on Manifolds with Robust Centroid Estimation

Aug 25, 2025

Abstract: Non-parametric change-point detection in streaming time series data is a long-standing challenge in signal processing. Recent advancements in statistics and machine learning have increasingly addressed this problem for data residing on Riemannian manifolds. One prominent strategy involves monitoring abrupt changes in the center of mass of the time series. Implemented in a streaming fashion, this strategy, however, requires careful step size tuning when computing the updates of the center of mass. In this paper, we propose to leverage robust centroids on manifolds from M-estimation theory to address this issue. Our proposal consists of comparing two centroid estimates: the classical Karcher mean (sensitive to change) versus one defined from Huber's function (robust to change). This comparison leads to the definition of a test statistic whose performance is less sensitive to the underlying estimation method. We propose a stochastic Riemannian optimization algorithm to estimate both robust centroids efficiently. Experiments conducted on both simulated and real-world data across two representative manifolds demonstrate the superior performance of our proposed method.
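The idea of comparing a change-sensitive centroid with a robust one can be illustrated on the simplest manifold, the unit circle. The sketch below is a batch toy version, not the stochastic algorithm of the paper: the function names, the threshold `huber_delta`, and the use of the distance between the two centroids as a statistic are all assumptions made for illustration.

```python
import math


def wrap(theta):
    """Wrap an angle to [-pi, pi]; acts as the log map on the circle."""
    return math.atan2(math.sin(theta), math.cos(theta))


def centroid(angles, huber_delta=None, iters=100, step=1.0):
    """Riemannian gradient descent for a centroid on the circle.

    huber_delta=None gives the Karcher mean (squared-distance loss);
    otherwise residuals larger than huber_delta are down-weighted (Huber loss).
    """
    mu = angles[0]
    for _ in range(iters):
        total = 0.0
        for x in angles:
            d = wrap(x - mu)                      # tangent vector from mu to x
            w = 1.0
            if huber_delta is not None and abs(d) > huber_delta:
                w = huber_delta / abs(d)          # Huber weight for large residuals
            total += w * d
        mu = wrap(mu + step * total / len(angles))  # exp map is angle addition
    return mu


# Toy stream: 20 samples at angle 0.0, then 5 samples at angle 1.0 after a change.
data = [0.0] * 20 + [1.0] * 5
karcher = centroid(data)
robust = centroid(data, huber_delta=0.1)
statistic = abs(wrap(karcher - robust))  # assumed statistic: distance between centroids
print(karcher, robust, statistic)
```

On this toy stream the Karcher mean moves toward the new samples while the Huber centroid stays close to the old regime, so the gap between them grows once the change has occurred.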

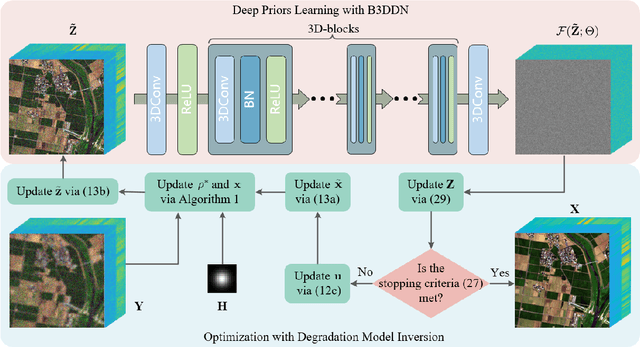

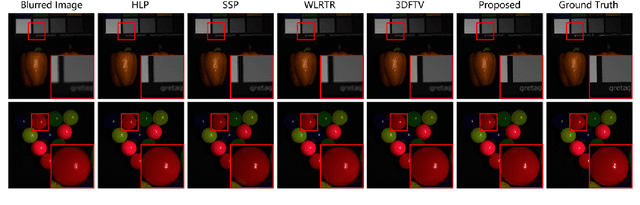

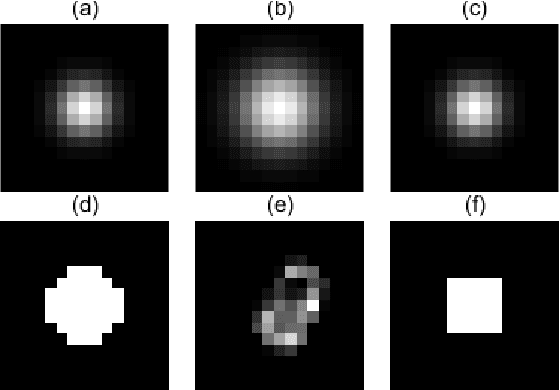

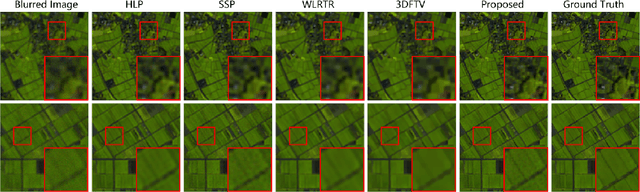

Tuning-free Plug-and-Play Hyperspectral Image Deconvolution with Deep Priors

Nov 28, 2022

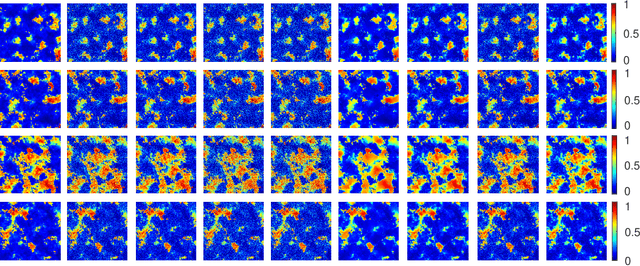

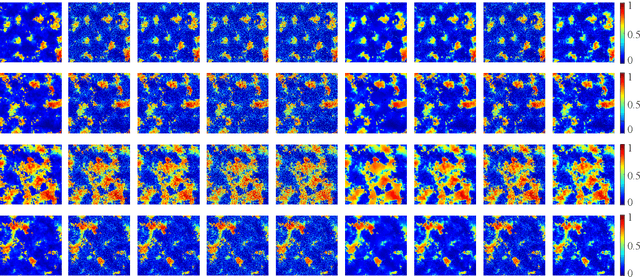

Abstract: Deconvolution is a widely used strategy to mitigate the blur and noise degradation of hyperspectral images (HSI) introduced by the acquisition devices. This issue is usually addressed by solving an ill-posed inverse problem. While investigating proper image priors can enhance the deconvolution performance, it is not trivial to handcraft a powerful regularizer and to set the regularization parameters. To address these issues, in this paper we introduce a tuning-free Plug-and-Play (PnP) algorithm for HSI deconvolution. Specifically, we use the alternating direction method of multipliers (ADMM) to decompose the optimization problem into two iterative sub-problems. A flexible blind 3D denoising network (B3DDN) is designed to learn deep priors and to solve the denoising sub-problem with different noise levels. A measure of 3D residual whiteness is then investigated to adjust the penalty parameters when solving the quadratic sub-problems and to serve as a stopping criterion. Experimental results on both simulated and real-world data with ground-truth demonstrate the superiority of the proposed method.

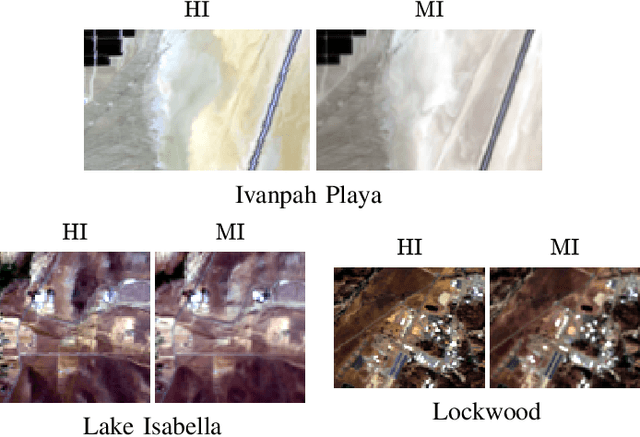

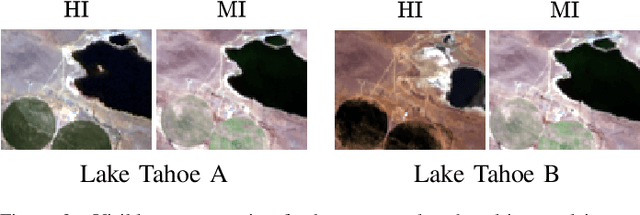

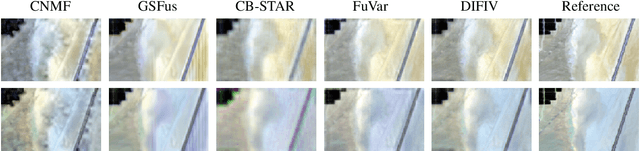

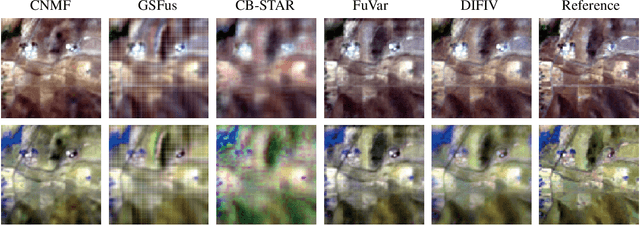

Deep Hyperspectral and Multispectral Image Fusion with Inter-image Variability

Aug 24, 2022

Abstract: Hyperspectral and multispectral image fusion allows us to overcome the hardware limitations of hyperspectral imaging systems inherent to their lower spatial resolution. Nevertheless, existing algorithms usually fail to consider realistic image acquisition conditions. This paper presents a general imaging model that considers inter-image variability of data from heterogeneous sources and flexible image priors. The fusion problem is stated as an optimization problem in the maximum a posteriori framework. We introduce an original image fusion method that, on the one hand, solves the optimization problem accounting for inter-image variability with an iteratively reweighted scheme and, on the other hand, leverages light-weight CNN-based networks to learn realistic image priors from data. In addition, we propose a zero-shot strategy to directly learn the image-specific prior of the latent images in an unsupervised manner. The performance of the algorithm is illustrated with real data subject to inter-image variability.

Integration of Physics-Based and Data-Driven Models for Hyperspectral Image Unmixing

Jun 11, 2022

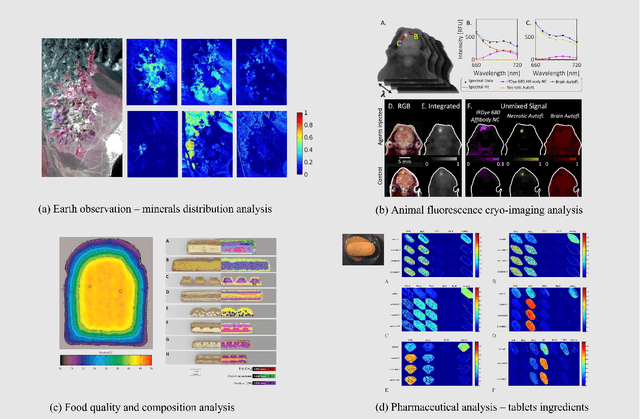

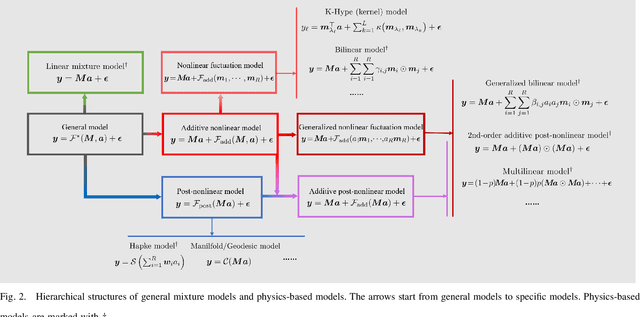

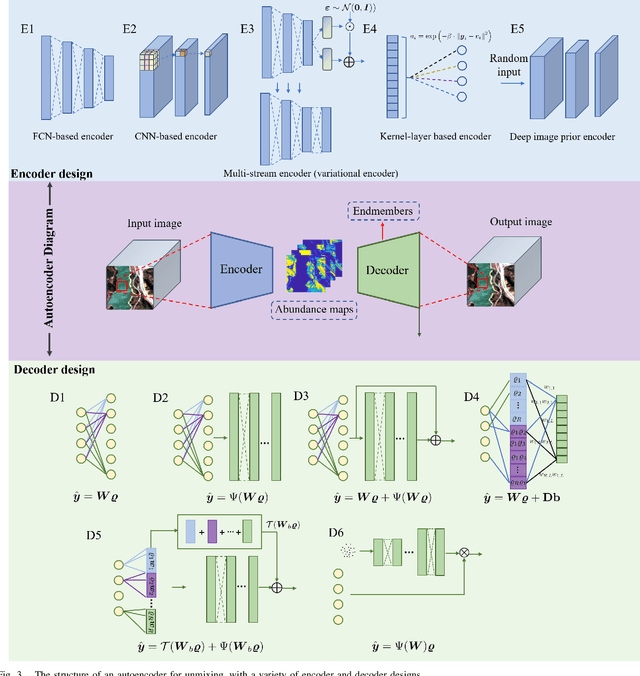

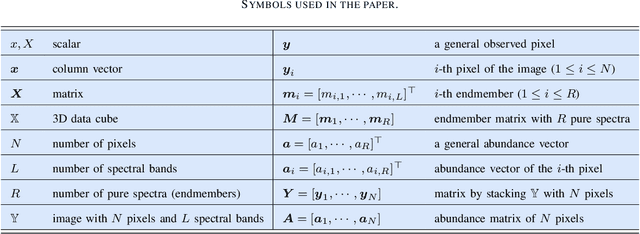

Abstract: Spectral unmixing is one of the most important quantitative analysis tasks in hyperspectral data processing. Conventional physics-based models are characterized by clear interpretation. However, due to the complex mixture mechanism and limited nonlinearity modeling capacity, these models may not be accurate, especially in analyzing scenes with unknown physical characteristics. Data-driven methods have developed rapidly in recent years, in particular deep learning methods, as they possess superior capability in modeling complex and nonlinear systems. Simply transferring these methods as black boxes to conduct unmixing may lead to low physical interpretability and generalization ability. Consequently, several contributions have been dedicated to integrating advantages of both physics-based models and data-driven methods. In this article, we present an overview of recent advances on this topic from several aspects, including deep neural network (DNN) structure design, prior capturing and loss design, and summarise these methods in a common mathematical optimization framework. In addition, relevant remarks and discussions are made to provide further understanding and prospective improvement of the methods. The related source codes and data are collected and made available at http://github.com/xiuheng-wang/awesome-hyperspectral-image-unmixing.

Hyperspectral Image Super-resolution with Deep Priors and Degradation Model Inversion

Jan 24, 2022

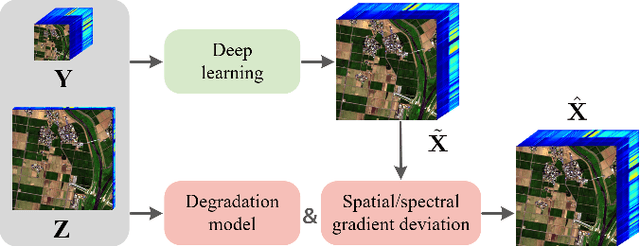

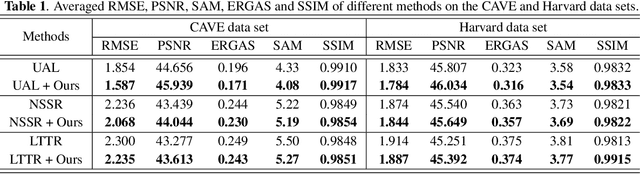

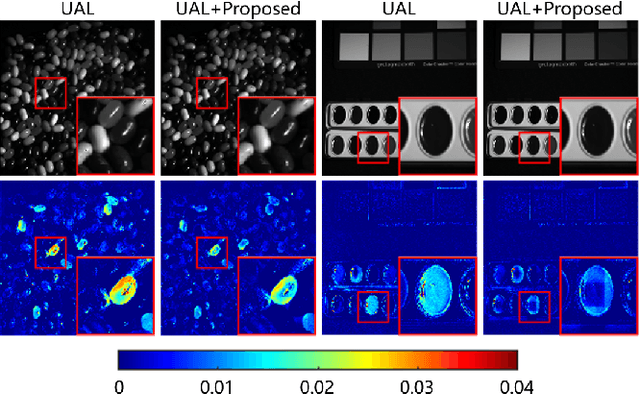

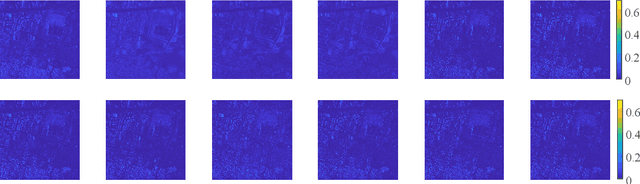

Abstract: To overcome inherent hardware limitations of hyperspectral imaging systems with respect to their spatial resolution, fusion-based hyperspectral image (HSI) super-resolution is attracting increasing attention. This technique aims to fuse a low-resolution (LR) HSI and a conventional high-resolution (HR) RGB image in order to obtain an HR HSI. Recently, deep learning architectures have been used to address the HSI super-resolution problem and have achieved remarkable performance. However, they ignore the degradation model even though this model has a clear physical interpretation and may help improve performance. We address this problem by proposing a method that, on the one hand, makes use of the linear degradation model in the data-fidelity term of the objective function and, on the other hand, utilizes the output of a convolutional neural network for designing a deep prior regularizer in spectral and spatial gradient domains. Experiments show the performance improvement achieved with this strategy.
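For readers unfamiliar with the linear degradation model mentioned here, the form commonly used in the fusion literature can be written as below. The notation is an assumption and is not taken from the paper: $\mathbf{X}$ is the HR HSI with bands as rows and pixels as columns, $\mathbf{Y}_h$ the LR HSI, $\mathbf{Y}_m$ the RGB image, $\mathbf{B}$ a spatial blur, $\mathbf{S}$ a spatial downsampling operator, $\mathbf{R}$ the spectral response of the RGB sensor, $\mathbf{E}_h$ and $\mathbf{E}_m$ noise terms, and $\phi$ a generic regularizer standing in for the deep prior, whose specific gradient-domain form is not reproduced.

```latex
\begin{aligned}
\mathbf{Y}_h &= \mathbf{X}\mathbf{B}\mathbf{S} + \mathbf{E}_h, \qquad
\mathbf{Y}_m = \mathbf{R}\mathbf{X} + \mathbf{E}_m, \\
\hat{\mathbf{X}} &= \arg\min_{\mathbf{X}} \;
\tfrac{1}{2}\,\lVert \mathbf{Y}_h - \mathbf{X}\mathbf{B}\mathbf{S} \rVert_F^2
+ \tfrac{1}{2}\,\lVert \mathbf{Y}_m - \mathbf{R}\mathbf{X} \rVert_F^2
+ \lambda\,\phi(\mathbf{X}).
\end{aligned}
```

The first two terms form the data-fidelity part built from the degradation model; the last term is where a learned prior would enter.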

A Plug-and-Play Priors Framework for Hyperspectral Unmixing

Dec 31, 2020

Abstract: Spectral unmixing is a widely used technique in hyperspectral image processing and analysis. It aims to separate mixed pixels into the component materials and their corresponding abundances. Early solutions to spectral unmixing are performed independently on each pixel. Nowadays, incorporating proper priors into the unmixing problem has become popular as it can significantly enhance the unmixing performance. However, it is non-trivial to handcraft a powerful regularizer, and complex regularizers may introduce extra difficulties in solving optimization problems in which they are involved. To address this issue, we present a plug-and-play (PnP) priors framework for hyperspectral unmixing. More specifically, we use the alternating direction method of multipliers (ADMM) to decompose the optimization problem into two iterative subproblems. One is a regular optimization problem depending on the forward model, and the other is a proximity operator related to the prior model that can be regarded as an image denoising problem. Our framework is flexible and extendable, which allows a wide range of denoisers to replace prior models and avoids handcrafting regularizers. Experiments conducted on both synthetic data and real airborne data illustrate the superiority of the proposed strategy compared with other state-of-the-art hyperspectral unmixing methods.
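The two-subproblem structure described in this abstract can be sketched on a toy 1D problem. Everything below is an assumption made for illustration: the forward model is a simple elementwise gain `h` (so the quadratic subproblem has a closed form), and a 3-tap moving average stands in for the denoiser. Neither is the model or denoiser used in the paper.

```python
def moving_average(v):
    """Stand-in denoiser: 3-tap moving average with edge replication."""
    n = len(v)
    return [(v[max(i - 1, 0)] + v[i] + v[min(i + 1, n - 1)]) / 3.0 for i in range(n)]


def pnp_admm(y, h, denoise, rho=1.0, iters=50):
    """Plug-and-play ADMM for y[i] = h[i] * x[i] + noise (hypothetical diagonal model)."""
    n = len(y)
    x, z, u = list(y), list(y), [0.0] * n
    for _ in range(iters):
        # Forward-model subproblem:
        # argmin_x 0.5 * (h*x - y)^2 + (rho/2) * (x - z + u)^2, solved elementwise.
        x = [(h[i] * y[i] + rho * (z[i] - u[i])) / (h[i] * h[i] + rho) for i in range(n)]
        # Prior subproblem: the proximity operator is replaced by a denoiser.
        z = denoise([x[i] + u[i] for i in range(n)])
        # Scaled dual variable update.
        u = [u[i] + x[i] - z[i] for i in range(n)]
    return z


# Toy usage: a noisy step signal observed with unit gain.
noisy = [0.1, -0.1, 0.05, 1.1, 0.9, 1.0]
estimate = pnp_admm(noisy, h=[1.0] * len(noisy), denoise=moving_average)
print(estimate)
```

Swapping `moving_average` for any other callable that maps a list to a list of the same length changes the implicit prior without touching the rest of the loop, which is the flexibility the abstract refers to.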
