Boris Kramer

Dynamic Shape Control of Soft Robots Enabled by Data-Driven Model Reduction

Nov 06, 2025

Abstract:Soft robots have shown immense promise in settings where they can leverage dynamic control of their entire bodies. However, effective dynamic shape control requires a controller that accounts for the robot's high-dimensional dynamics--a challenge exacerbated by a lack of general-purpose tools for modeling soft robots amenably for control. In this work, we conduct a comparative study of data-driven model reduction techniques for generating linear models amenable to dynamic shape control. We focus on three methods--the eigensystem realization algorithm, dynamic mode decomposition with control, and the Lagrangian operator inference (LOpInf) method. Using each class of model, we explored their efficacy in model predictive control policies for the dynamic shape control of a simulated eel-inspired soft robot in three experiments: 1) tracking simulated reference trajectories guaranteed to be feasible, 2) tracking reference trajectories generated from a biological model of eel kinematics, and 3) tracking reference trajectories generated by a reduced-scale physical analog. In all experiments, the LOpInf-based policies generated lower tracking errors than policies based on other models.
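As a rough illustration of one of the three methods named above, the sketch below fits a discrete-time linear model x_{k+1} = A x_k + B u_k from snapshot data by least squares, which is the core regression behind dynamic mode decomposition with control. It is a minimal sketch under stated assumptions, not the paper's implementation: the function name `fit_dmdc`, the two-state toy system, and the random inputs are hypothetical, and the rank truncation normally used for high-dimensional states is omitted.

```python
import numpy as np

def fit_dmdc(X, U):
    """Least-squares fit of x_{k+1} = A x_k + B u_k (hypothetical helper).

    X: (n, m+1) array of state snapshots, U: (q, m) array of inputs.
    Returns A with shape (n, n) and B with shape (n, q).
    """
    X0, X1 = X[:, :-1], X[:, 1:]           # current and next states
    Omega = np.vstack([X0, U])             # stacked states and inputs
    G = X1 @ np.linalg.pinv(Omega)         # G = [A  B]
    n = X.shape[0]
    return G[:, :n], G[:, n:]

# Toy data from a known system (assumed purely for illustration).
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [-0.1, 0.8]])
B_true = np.array([[0.0], [1.0]])
m = 50
U = rng.standard_normal((1, m))
X = np.zeros((2, m + 1))
X[:, 0] = [1.0, -1.0]
for k in range(m):
    X[:, k + 1] = A_true @ X[:, k] + B_true @ U[:, k]

A_fit, B_fit = fit_dmdc(X, U)
```

On noise-free data with sufficiently rich inputs, the fitted pair matches the generating matrices up to round-off; a linear model of this form is what a model predictive controller would then use for prediction.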

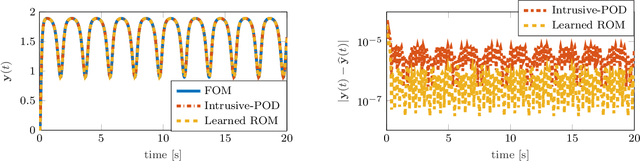

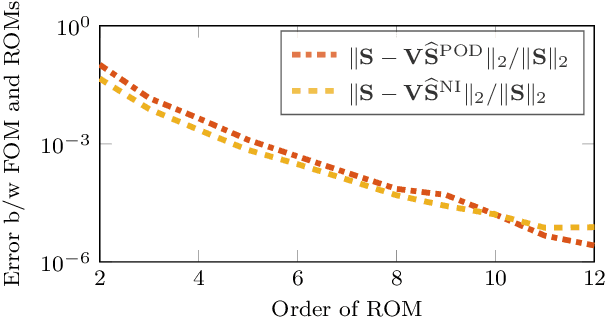

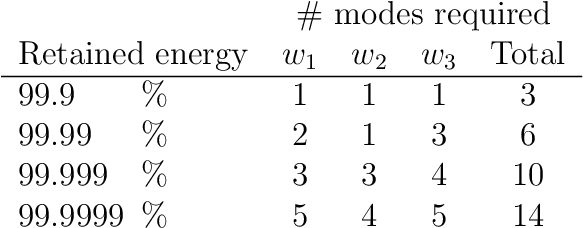

Nonlinear energy-preserving model reduction with lifting transformations that quadratize the energy

Mar 04, 2025

Abstract:Existing model reduction techniques for high-dimensional models of conservative partial differential equations (PDEs) encounter computational bottlenecks when dealing with systems featuring non-polynomial nonlinearities. This work presents a nonlinear model reduction method that employs lifting variable transformations to derive structure-preserving quadratic reduced-order models for conservative PDEs with general nonlinearities. We present an energy-quadratization strategy that defines the auxiliary variable in terms of the nonlinear term in the energy expression to derive an equivalent quadratic lifted system with quadratic system energy. The proposed strategy combined with proper orthogonal decomposition model reduction yields quadratic reduced-order models that conserve the quadratized lifted energy exactly in high dimensions. We demonstrate the proposed model reduction approach on four nonlinear conservative PDEs: the one-dimensional wave equation with exponential nonlinearity, the two-dimensional sine-Gordon equation, the two-dimensional Klein-Gordon equation with parametric dependence, and the two-dimensional Klein-Gordon-Zakharov equations. The numerical results show that the proposed lifting approach is competitive with the state-of-the-art structure-preserving hyper-reduction method in terms of both accuracy and computational efficiency in the online stage while providing significant computational gains in the offline stage.

Physically consistent predictive reduced-order modeling by enhancing Operator Inference with state constraints

Feb 05, 2025

Abstract:Numerical simulations of complex multiphysics systems, such as char combustion considered herein, yield numerous state variables that inherently exhibit physical constraints. This paper presents a new approach to augment Operator Inference -- a methodology within scientific machine learning that enables learning from data a low-dimensional representation of a high-dimensional system governed by nonlinear partial differential equations -- by embedding such state constraints in the reduced-order model predictions. In the model learning process, we propose a new way to choose regularization hyperparameters based on a key performance indicator. Since embedding state constraints improves the stability of the Operator Inference reduced-order model, we compare the proposed state constraints-embedded Operator Inference with the standard Operator Inference and other stability-enhancing approaches. For an application to char combustion, we demonstrate that the proposed approach yields state predictions superior to the other methods regarding stability and accuracy. It extrapolates over 200% past the training regime while being computationally efficient and physically consistent.

Data-driven Model Reduction for Soft Robots via Lagrangian Operator Inference

Jul 11, 2024

Abstract:Data-driven model reduction methods provide a nonintrusive way of constructing computationally efficient surrogates of high-fidelity models for real-time control of soft robots. This work leverages the Lagrangian nature of the model equations to derive structure-preserving linear reduced-order models via Lagrangian Operator Inference and compares their performance with prominent linear model reduction techniques through an anguilliform swimming soft robot model example with 231,336 degrees of freedom. The case studies demonstrate that preserving the underlying Lagrangian structure leads to learned models with higher predictive accuracy and robustness to unseen inputs.

Bayesian identification of nonseparable Hamiltonians with multiplicative noise using deep learning and reduced-order modeling

Jan 23, 2024

Abstract:This paper presents a structure-preserving Bayesian approach for learning nonseparable Hamiltonian systems using stochastic dynamic models allowing for statistically-dependent, vector-valued additive and multiplicative measurement noise. The approach is comprised of three main facets. First, we derive a Gaussian filter for a statistically-dependent, vector-valued, additive and multiplicative noise model that is needed to evaluate the likelihood within the Bayesian posterior. Second, we develop a novel algorithm for cost-effective application of Bayesian system identification to high-dimensional systems. Third, we demonstrate how structure-preserving methods can be incorporated into the proposed framework, using nonseparable Hamiltonians as an illustrative system class. We compare the Bayesian method to a state-of-the-art machine learning method on a canonical nonseparable Hamiltonian model and a chaotic double pendulum model with small, noisy training datasets. The results show that using the Bayesian posterior as a training objective can yield upwards of 724 times improvement in Hamiltonian mean squared error using training data with up to 10% multiplicative noise compared to a standard training objective. Lastly, we demonstrate the utility of the novel algorithm for parameter estimation of a 64-dimensional model of the spatially-discretized nonlinear Schrödinger equation with data corrupted by up to 20% multiplicative noise.

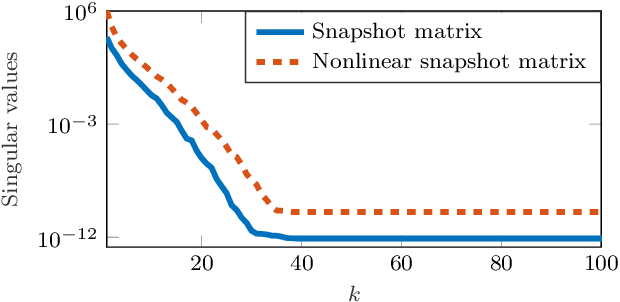

Symplectic model reduction of Hamiltonian systems using data-driven quadratic manifolds

May 24, 2023

Abstract:This work presents two novel approaches for the symplectic model reduction of high-dimensional Hamiltonian systems using data-driven quadratic manifolds. Classical symplectic model reduction approaches employ linear symplectic subspaces for representing the high-dimensional system states in a reduced-dimensional coordinate system. While these approximations respect the symplectic nature of Hamiltonian systems, the linearity of the approximation imposes a fundamental limitation to the accuracy that can be achieved. We propose two different model reduction methods based on recently developed quadratic manifolds, each presenting its own advantages and limitations. The addition of quadratic terms in the state approximation, which sits at the heart of the proposed methodologies, enables us to better represent intrinsic low-dimensionality in the problem at hand. Both approaches are effective for issuing predictions in settings well outside the range of their training data while providing more accurate solutions than the linear symplectic reduced-order models.

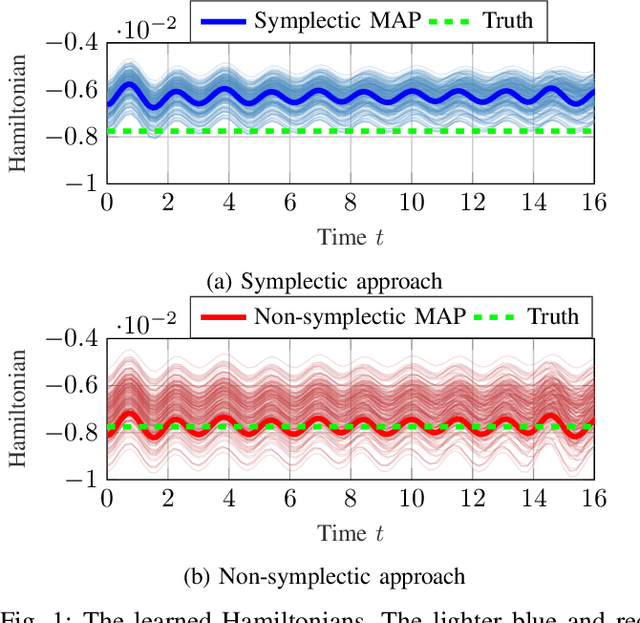

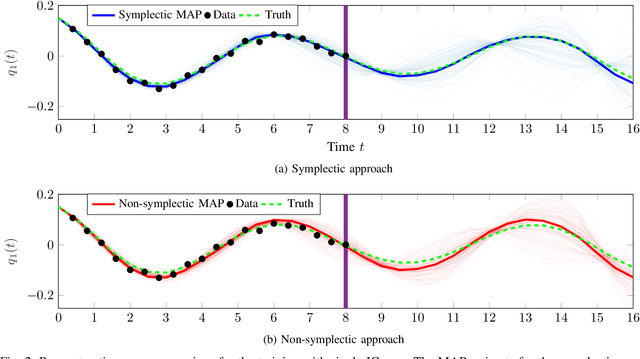

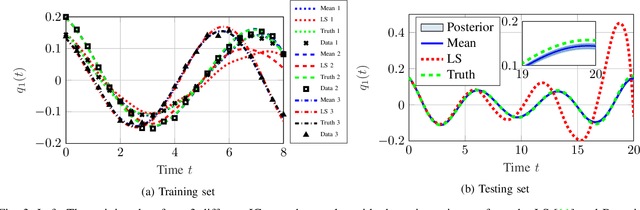

Bayesian Identification of Nonseparable Hamiltonian Systems Using Stochastic Dynamic Models

Sep 15, 2022

Abstract:This paper proposes a probabilistic Bayesian formulation for system identification (ID) and estimation of nonseparable Hamiltonian systems using stochastic dynamic models. Nonseparable Hamiltonian systems arise in models from diverse science and engineering applications such as astrophysics, robotics, vortex dynamics, charged particle dynamics, and quantum mechanics. The numerical experiments demonstrate that the proposed method recovers dynamical systems with higher accuracy and reduced predictive uncertainty compared to state-of-the-art approaches. The results further show that accurate predictions far outside the training time interval in the presence of sparse and noisy measurements are possible, which lends robustness and generalizability to the proposed approach. A quantitative benefit is prediction accuracy with less than 10% relative error for more than 12 times longer than a comparable least-squares-based method on a benchmark problem.

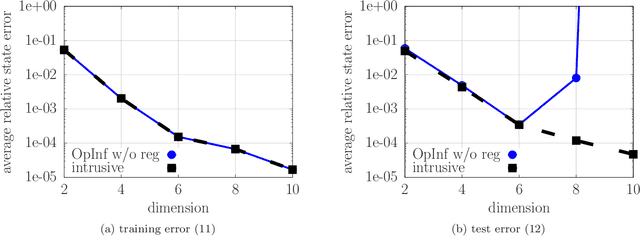

Physics-informed regularization and structure preservation for learning stable reduced models from data with operator inference

Jul 06, 2021

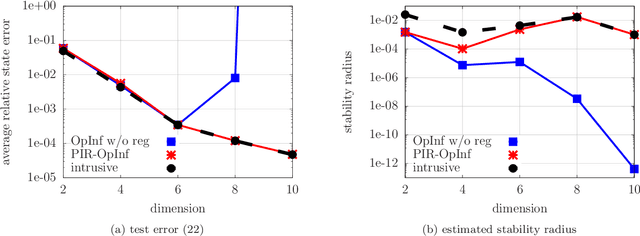

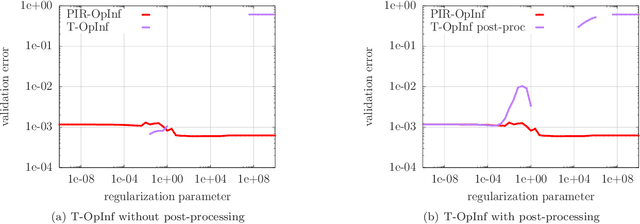

Abstract:Operator inference learns low-dimensional dynamical-system models with polynomial nonlinear terms from trajectories of high-dimensional physical systems (non-intrusive model reduction). This work focuses on the large class of physical systems that can be well described by models with quadratic nonlinear terms and proposes a regularizer for operator inference that induces a stability bias onto quadratic models. The proposed regularizer is physics informed in the sense that it penalizes quadratic terms with large norms and so explicitly leverages the quadratic model form that is given by the underlying physics. This means that the proposed approach judiciously learns from data and physical insights combined, rather than from either data or physics alone. Additionally, a formulation of operator inference is proposed that enforces model constraints for preserving structure such as symmetry and definiteness in the linear terms. Numerical results demonstrate that models learned with operator inference and the proposed regularizer and structure preservation are accurate and stable even in cases where using no regularization or Tikhonov regularization leads to models that are unstable.
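To make the idea of penalizing the quadratic terms concrete, the sketch below fits a reduced model dq/dt = A q + H (q ⊗ q) by least squares while adding a penalty only on the entries of the quadratic operator H. It is a minimal sketch under stated assumptions and not necessarily the regularizer proposed in the paper: the squared Frobenius-norm penalty, the helper names `quad_features` and `fit_opinf`, the weight `lam_quad`, and the synthetic data are all hypothetical choices for illustration.

```python
import numpy as np

def quad_features(Q):
    """Non-redundant products q_i * q_j (i <= j) for each column of Q."""
    i, j = np.triu_indices(Q.shape[0])
    return Q[i] * Q[j]

def fit_opinf(Q, dQ, lam_quad=0.0):
    """Fit dq/dt = A q + H quad(q), penalizing only H (hypothetical helper).

    Q, dQ: (r, k) reduced states and time derivatives.
    lam_quad: weight on the squared Frobenius norm of H.
    """
    r, _ = Q.shape
    Q2 = quad_features(Q)
    s = Q2.shape[0]
    D = np.vstack([Q, Q2]).T                          # (k, r + s) data matrix
    # Extra rows implement the penalty: zero for A, sqrt(lam) * I for H.
    P = np.hstack([np.zeros((s, r)), np.sqrt(lam_quad) * np.eye(s)])
    D_aug = np.vstack([D, P])
    R_aug = np.vstack([dQ.T, np.zeros((s, r))])
    O, *_ = np.linalg.lstsq(D_aug, R_aug, rcond=None)
    return O[:r].T, O[r:].T                           # A is (r, r), H is (r, s)

# Synthetic reduced data (assumed for illustration only).
rng = np.random.default_rng(1)
Q = rng.standard_normal((3, 40))
A_true = -np.eye(3)
H_true = 0.1 * rng.standard_normal((3, 6))
dQ = A_true @ Q + H_true @ quad_features(Q) + 0.01 * rng.standard_normal((3, 40))

A_free, H_free = fit_opinf(Q, dQ, lam_quad=0.0)
A_reg, H_reg = fit_opinf(Q, dQ, lam_quad=100.0)
```

Increasing `lam_quad` shrinks the norm of the learned quadratic operator while leaving the linear operator unpenalized, which is the mechanism by which such a penalty biases the learned model toward stability.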

Operator inference for non-intrusive model reduction of systems with non-polynomial nonlinear terms

Feb 22, 2020

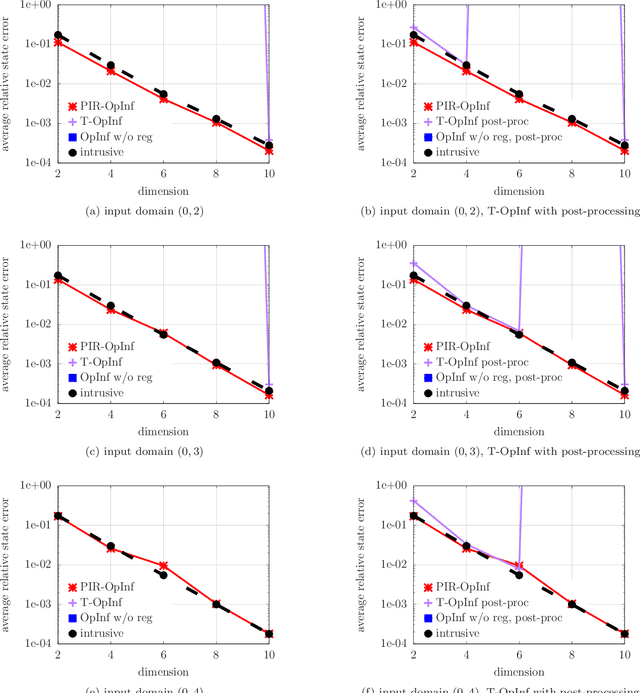

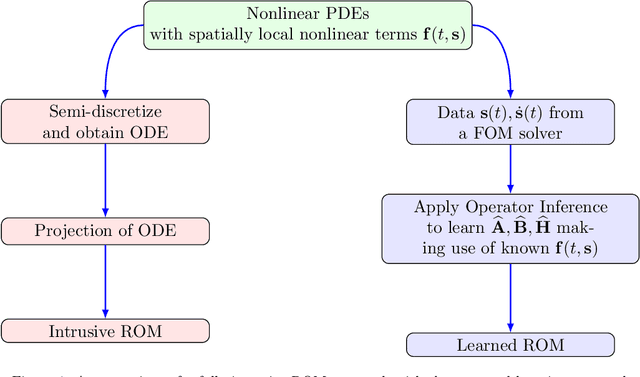

Abstract:This work presents a non-intrusive model reduction method to learn low-dimensional models of dynamical systems with non-polynomial nonlinear terms that are spatially local and that are given in analytic form. In contrast to state-of-the-art model reduction methods that are intrusive and thus require full knowledge of the governing equations and the operators of a full model of the discretized dynamical system, the proposed approach requires only the non-polynomial terms in analytic form and learns the rest of the dynamics from snapshots computed with a potentially black-box full-model solver. The proposed method learns operators for the linear and polynomially nonlinear dynamics via a least-squares problem, where the given non-polynomial terms are incorporated in the right-hand side. The least-squares problem is linear and thus can be solved efficiently in practice. The proposed method is demonstrated on three problems governed by partial differential equations, namely the diffusion-reaction Chafee-Infante model, a tubular reactor model for reactive flows, and a batch-chromatography model that describes a chemical separation process. The numerical results provide evidence that the proposed approach learns reduced models that achieve comparable accuracy as models constructed with state-of-the-art intrusive model reduction methods that require full knowledge of the governing equations.

Lift & Learn: Physics-informed machine learning for large-scale nonlinear dynamical systems

Feb 07, 2020

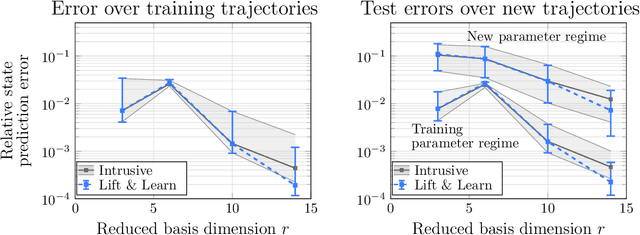

Abstract:We present Lift & Learn, a physics-informed method for learning low-dimensional models for large-scale dynamical systems. The method exploits knowledge of a system's governing equations to identify a coordinate transformation in which the system dynamics have quadratic structure. This transformation is called a lifting map because it often adds auxiliary variables to the system state. The lifting map is applied to data obtained by evaluating a model for the original nonlinear system. This lifted data is projected onto its leading principal components, and low-dimensional linear and quadratic matrix operators are fit to the lifted reduced data using a least-squares operator inference procedure. Analysis of our method shows that the Lift & Learn models are able to capture the system physics in the lifted coordinates at least as accurately as traditional intrusive model reduction approaches. This preservation of system physics makes the Lift & Learn models robust to changes in inputs. Numerical experiments on the FitzHugh-Nagumo neuron activation model and the compressible Euler equations demonstrate the generalizability of our model.
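The lift, project, and fit steps described above can be sketched on a small example. The code below is a minimal sketch under stated assumptions, not the authors' implementation: the cubic toy system dx/dt = A x - x^3 (elementwise), the lifting map w = x^2, the randomly sampled states with exact derivatives, and the helper names are hypothetical. With w as an auxiliary variable, the lifted dynamics dx/dt = A x - x∘w and dw/dt = 2 x∘(A x) - 2 w∘w are quadratic, so a linear-plus-quadratic least-squares fit can represent them exactly.

```python
import numpy as np

def quad_features(Q):
    """Non-redundant products q_i * q_j (i <= j) for each column of Q."""
    i, j = np.triu_indices(Q.shape[0])
    return Q[i] * Q[j]

def fit_quadratic(Q, dQ):
    """Least-squares fit of dq/dt = A q + H quad(q); returns operators and relative residual."""
    D = np.vstack([Q, quad_features(Q)]).T
    O, *_ = np.linalg.lstsq(D, dQ.T, rcond=None)
    rel_res = np.linalg.norm(D @ O - dQ.T) / np.linalg.norm(dQ)
    return O.T, rel_res

# Toy cubic system (assumed for illustration): dx/dt = A x - x**3.
rng = np.random.default_rng(0)
A = np.array([[-1.0, 0.5], [-0.5, -1.0]])
X = rng.uniform(-1.5, 1.5, size=(2, 200))   # sampled states
dX = A @ X - X**3                           # exact time derivatives

# Lift: append w = x**2; by the chain rule, dw/dt = 2 x * dx/dt.
Z = np.vstack([X, X**2])
dZ = np.vstack([dX, 2 * X * dX])

# Project the lifted data onto its leading principal directions (POD basis).
V = np.linalg.svd(Z, full_matrices=False)[0]
r = 4                                       # keep all modes in this tiny example
Vr = V[:, :r]

_, res_lifted = fit_quadratic(Vr.T @ Z, Vr.T @ dZ)   # quadratic fit in lifted coordinates
_, res_plain = fit_quadratic(X, dX)                  # quadratic fit without lifting
```

In this toy setting the quadratic fit in lifted coordinates reproduces the derivative data to round-off, while the same fit on the original state leaves a visible residual because the cubic term lies outside the quadratic model form. Because the lifted variables are algebraically dependent, the least-squares problem is rank deficient and the fitted operators are not unique, so only the residual is compared here.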
