Bob Coecke

Quantinuum

Quantum Algorithms for Compositional Text Processing

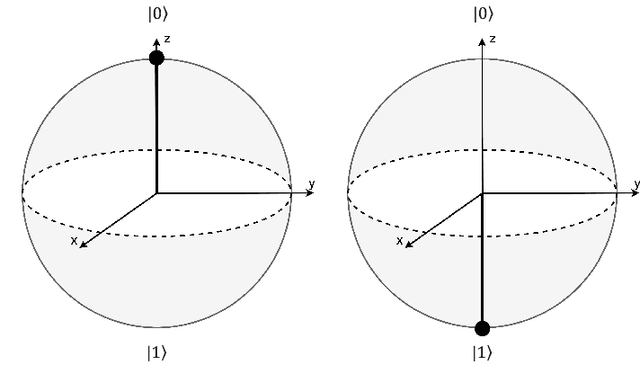

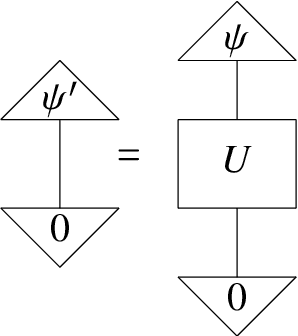

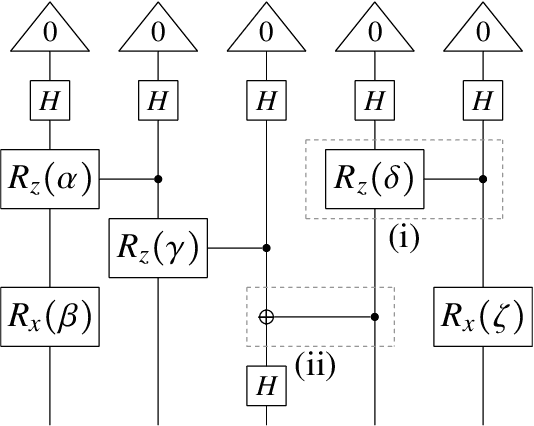

Aug 12, 2024

Abstract: Quantum computing and AI have found a fruitful intersection in the field of natural language processing. We focus on the recently proposed DisCoCirc framework for natural language, and propose a quantum adaptation, QDisCoCirc. This is motivated by a compositional approach to rendering AI interpretable: the behavior of the whole can be understood in terms of the behavior of parts, and the way they are put together. For the model-native primitive operation of text similarity, we derive quantum algorithms for fault-tolerant quantum computers to solve the task of question-answering within QDisCoCirc, and show that this is BQP-hard; note that we do not consider the complexity of question-answering in other natural language processing models. Assuming widely-held conjectures, implementing the proposed model classically would require super-polynomial resources. Therefore, it could provide a meaningful demonstration of the power of practical quantum processors. The model construction builds on previous work in compositional quantum natural language processing. Word embeddings are encoded as parameterized quantum circuits, and compositionality here means that the quantum circuits compose according to the linguistic structure of the text. We outline a method for evaluating the model on near-term quantum processors, and elsewhere we report on a recent implementation of this on quantum hardware. In addition, we adapt a quantum algorithm for the closest vector problem to obtain a Grover-like speedup in the fault-tolerant regime for our model. This provides an unconditional quadratic speedup over any classical algorithm in certain circumstances, which we will verify empirically in future work.

* In Proceedings QPL 2024, arXiv:2408.05113
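
To make the "text similarity" primitive concrete, here is a toy classical statevector simulation. It assumes, purely for illustration, that each noun is a single-qubit wire, that word boxes are RY rotations, and that a transitive verb is a pair of rotations followed by a CZ; none of these choices is the QDisCoCirc ansatz. Similarity is taken as the fidelity |⟨ψ|φ⟩|² between the states of two texts. A simulation like this costs memory exponential in the number of wires, which is why the model is aimed at quantum processors.

```python
import numpy as np

def ry(theta: float) -> np.ndarray:
    """Single-qubit Y rotation, used here as a toy parameterised 'word' gate."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

# Controlled-Z on two qubits: a hypothetical stand-in for a transitive-verb box.
CZ = np.diag([1.0, 1.0, 1.0, -1.0])

def text_state(subj: float, verb: float, obj: float) -> np.ndarray:
    """Compose word gates along two noun wires (subject, object) for one sentence."""
    zero = np.array([1.0, 0.0])
    state = np.kron(ry(subj) @ zero, ry(obj) @ zero)  # prepare the two noun wires
    verb_gate = CZ @ np.kron(ry(verb), ry(verb))       # toy verb: rotate both wires, then entangle
    return verb_gate @ state

def similarity(psi: np.ndarray, phi: np.ndarray) -> float:
    """Text similarity as the fidelity |<psi|phi>|^2 between two text states."""
    return float(abs(np.vdot(psi, phi)) ** 2)

t1 = text_state(0.3, 1.1, 2.0)
t2 = text_state(0.3, 1.1, 2.5)
print(similarity(t1, t2))
```

Because every gate is unitary, each text state stays normalised, so the similarity lies in [0, 1] and equals 1 for identical texts.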

Towards Compositional Interpretability for XAI

Jun 25, 2024

Abstract: Artificial intelligence (AI) is currently based largely on black-box machine learning models which lack interpretability. The field of eXplainable AI (XAI) strives to address this major concern, being critical in high-stakes areas such as the finance, legal and health sectors. We present an approach to defining AI models and their interpretability based on category theory. For this we employ the notion of a compositional model, which sees a model in terms of formal string diagrams which capture its abstract structure together with its concrete implementation. This comprehensive view incorporates deterministic, probabilistic and quantum models. We compare a wide range of AI models as compositional models, including linear and rule-based models, (recurrent) neural networks, transformers, VAEs, and causal and DisCoCirc models. Next we give a definition of interpretation of a model in terms of its compositional structure, demonstrating how to analyse the interpretability of a model, and using this to clarify common themes in XAI. We find that what makes the standard 'intrinsically interpretable' models so transparent is brought out most clearly diagrammatically. This leads us to the more general notion of compositionally-interpretable (CI) models, which additionally include, for instance, causal, conceptual space, and DisCoCirc models. We next demonstrate the explainability benefits of CI models. Firstly, their compositional structure may allow the computation of other quantities of interest, and may facilitate inference from the model to the modelled phenomenon by matching its structure. Secondly, they allow for diagrammatic explanations for their behaviour, based on influence constraints, diagram surgery and rewrite explanations. Finally, we discuss many future directions for the approach, raising the question of how to learn such meaningfully structured models in practice.
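
As a minimal illustration of pairing a string diagram with a concrete implementation, the sketch below interprets boxes as matrices. This is one possible semantics, assumed here for simplicity; the paper's framework also covers probabilistic and quantum models. Sequential composition is the matrix product and parallel composition is the Kronecker product. The final check is the interchange law, which guarantees that a diagram denotes the same map however its boxes are grouped.

```python
import numpy as np

def seq(f: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Sequential composition 'g after f': the output wire of f feeds into g."""
    return g @ f

def par(f: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Parallel composition f (x) g: two boxes side by side on separate wires."""
    return np.kron(f, g)

rng = np.random.default_rng(0)
f, g, h, k = (rng.standard_normal((2, 2)) for _ in range(4))

# Interchange law: (h (x) k) after (f (x) g) equals (h after f) (x) (k after g).
lhs = seq(par(f, g), par(h, k))
rhs = par(seq(f, h), seq(g, k))
print(np.allclose(lhs, rhs))
```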

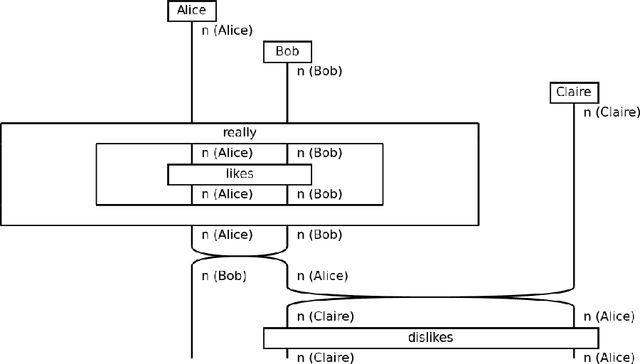

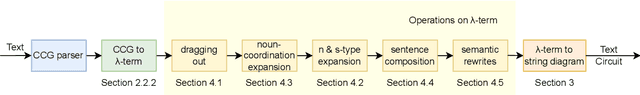

A Pipeline For Discourse Circuits From CCG

Nov 29, 2023

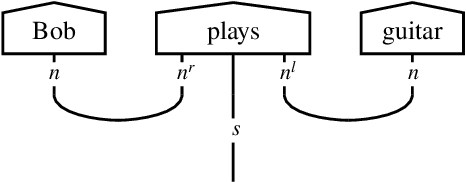

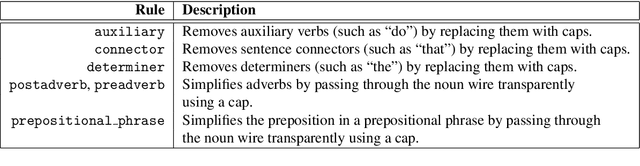

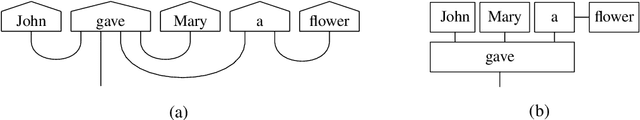

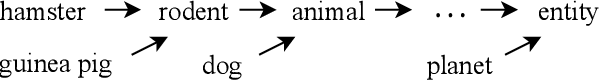

Abstract: There is a significant disconnect between linguistic theory and modern NLP practice, which relies heavily on inscrutable black-box architectures. DisCoCirc is a newly proposed model for meaning that aims to bridge this divide, by providing neuro-symbolic models that incorporate linguistic structure. DisCoCirc represents natural language text as a 'circuit' that captures the core semantic information of the text. These circuits can then be interpreted as modular machine learning models. Additionally, DisCoCirc fulfils another major aim of providing an NLP model that can be implemented on near-term quantum computers. In this paper we describe a software pipeline that converts English text to its DisCoCirc representation. The pipeline achieves coverage over a large fragment of the English language. It relies on Combinatory Categorial Grammar (CCG) parses of the input text as well as coreference resolution information. This semantic and syntactic information is used in several steps to convert the text into a simply-typed λ-calculus term, and then into a circuit diagram. This pipeline will enable the application of the DisCoCirc framework to NLP tasks, using both classical and quantum approaches.
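
As background on the CCG parses the pipeline starts from, the sketch below implements the two basic CCG combinators, forward application (X/Y Y ⇒ X) and backward application (Y X\Y ⇒ X), and uses them to derive the sentence type S for a transitive sentence. The category encoding and function names are illustrative assumptions; full CCG also uses composition and type-raising, and this is not the pipeline's own code.

```python
from typing import Optional, Tuple, Union

# A category is an atom such as "NP" or a triple (slash, result, argument):
# ("/", X, Y) is X/Y (seeks Y to its right); ("\\", X, Y) is X\Y (seeks Y to its left).
Cat = Union[str, Tuple[str, "Cat", "Cat"]]

def combine(left: Cat, right: Cat) -> Optional[Cat]:
    """Try forward application (X/Y Y => X), then backward application (Y X\\Y => X)."""
    if isinstance(left, tuple) and left[0] == "/" and left[2] == right:
        return left[1]
    if isinstance(right, tuple) and right[0] == "\\" and right[2] == left:
        return right[1]
    return None  # the two categories do not combine by application

def show(c: Cat) -> str:
    """Render a category in the usual slash notation."""
    if isinstance(c, str):
        return c
    return f"({show(c[1])}{c[0]}{show(c[2])})"

# "Alice loves Bob": NP, (S\NP)/NP, NP
loves: Cat = ("/", ("\\", "S", "NP"), "NP")
verb_phrase = combine(loves, "NP")         # forward application gives S\NP
sentence = combine("NP", verb_phrase)      # backward application gives S
print(show(loves), show(verb_phrase), show(sentence))
```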

Distilling Text into Circuits

Jan 25, 2023

Abstract: This paper concerns the structure of meanings within natural language. Earlier, a framework named DisCoCirc was sketched that (1) is compositional and distributional (a.k.a. vectorial); (2) applies to general text; (3) captures linguistic 'connections' between meanings (cf. grammar); (4) updates word meanings as text progresses; (5) structures sentence types; (6) accommodates ambiguity. Here, we realise DisCoCirc for a substantial fragment of English. When passing to DisCoCirc's text circuits, some 'grammatical bureaucracy' is eliminated, that is, DisCoCirc displays a significant degree of (7) inter- and intra-language independence: for example, independence from word-order conventions that differ across languages, and independence from choices like many short sentences vs. few long sentences. This inter-language independence means our text circuits should carry over to other languages, unlike the language-specific typings of categorial grammars. Hence, text circuits are a lean structure for the 'actual substance of text', that is, the inner workings of meanings within text across several layers of expressiveness (cf. words, sentences, text), and may capture what is truly universal beneath grammar. The elimination of grammatical bureaucracy also explains why DisCoCirc (8) applies beyond language, e.g. to spatial, visual and other cognitive modes. While humans could not verbally communicate in terms of text circuits, machines can. We first define a 'hybrid grammar' for a fragment of English, i.e. a purpose-built, minimal grammatical formalism needed to obtain text circuits. We then detail a translation process such that all text generated by this grammar yields a text circuit. Conversely, for any text circuit obtained by freely composing the generators, there exists a text (with hybrid grammar) that gives rise to it. Hence (9) text circuits are generative for text.

Language-independence of DisCoCirc's Text Circuits: English and Urdu

Aug 11, 2022

Abstract: DisCoCirc is a newly proposed framework for representing the grammar and semantics of texts using compositional, generative circuits. While it constitutes a development of the Categorical Distributional Compositional (DisCoCat) framework, it exposes radically new features. In particular, [14] suggested that DisCoCirc goes some way toward eliminating grammatical differences between languages. In this paper we sketch an argument that this is indeed the case for restricted fragments of English and Urdu. We first develop DisCoCirc for a fragment of Urdu, as was done for English in [14]. There is a simple translation from English grammar to Urdu grammar, and vice versa. We then show that differences in grammatical structure between English and Urdu, primarily relating to the ordering of words and phrases, vanish when passing to DisCoCirc circuits.

* In Proceedings E2ECOMPVEC, arXiv:2208.05313
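
The claim that word-order differences vanish in text circuits can be illustrated with a toy example. Assuming, for illustration only, that a transitive clause becomes a single verb gate acting on the subject and object noun wires, an English SVO clause and an SOV clause (the dominant Urdu order, glossed here in English) yield the same circuit. Urdu case markers, agreement, and the paper's actual grammar fragment are not modelled; the `ORDERS` table and `to_circuit` function are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical word-order conventions for a simple transitive clause.
ORDERS: Dict[str, Tuple[str, str, str]] = {
    "english": ("subj", "verb", "obj"),  # SVO: "Alice loves Bob"
    "urdu": ("subj", "obj", "verb"),     # SOV order, words glossed in English
}

Circuit = Tuple[str, Tuple[str, str]]

def to_circuit(words: List[str], language: str) -> Circuit:
    """Map a clause to a toy circuit: one verb gate on the (subject, object) wires."""
    roles = dict(zip(ORDERS[language], words))
    return (roles["verb"], (roles["subj"], roles["obj"]))

english = to_circuit(["Alice", "loves", "Bob"], "english")
urdu = to_circuit(["Alice", "Bob", "loves"], "urdu")
print(english == urdu, english)
```

The word order is consumed when roles are assigned, so it leaves no trace in the resulting circuit, which is the intuition behind the language-independence claim.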

A Quantum Natural Language Processing Approach to Musical Intelligence

Nov 10, 2021

Abstract: There has been tremendous progress in Artificial Intelligence (AI) for music, in particular for musical composition and access to large databases for commercialisation through the Internet. We are interested in further advancing this field, focusing on composition. In contrast to current black-box AI methods, we are championing an interpretable compositional outlook on generative music systems. In particular, we are importing methods from the Distributional Compositional Categorical (DisCoCat) modelling framework for Natural Language Processing (NLP), motivated by musical grammars. Quantum computing is a nascent technology, which is very likely to impact the music industry in time to come. Thus, we are pioneering a Quantum Natural Language Processing (QNLP) approach to develop a new generation of intelligent musical systems. This work follows from previous experimental implementations of DisCoCat linguistic models on quantum hardware. In this chapter, we present Quanthoven, the first proof-of-concept ever built, which (a) demonstrates that it is possible to program a quantum computer to learn to classify music that conveys different meanings and (b) illustrates how such a capability might be leveraged to develop a system to compose meaningful pieces of music. After a discussion about our current understanding of music as a communication medium and its relationship to natural language, the chapter focuses on the techniques developed to (a) encode musical compositions as quantum circuits, and (b) design a quantum classifier. The chapter ends with demonstrations of compositions created with the system.

Compositionality as we see it, everywhere around us

Oct 25, 2021

Abstract: There are different meanings of the term "compositionality" within science: what one researcher would call compositional is not at all compositional for another. The most established conception is usually attributed to Frege, and is characterised by a bottom-up flow of meanings: the meaning of the whole can be derived from the meanings of the parts, and how these parts are structured together. Inspired by work on compositionality in quantum theory, and categorical quantum mechanics in particular, we propose the notions of Schrödinger, Whitehead, and complete compositionality. Accounting for recent important developments in quantum technology and artificial intelligence, these do not have the bottom-up meaning flow as part of their definitions. Schrödinger compositionality accommodates quantum theory, and also meaning-as-context. Complete compositionality further strengthens Schrödinger compositionality in order to single out theories like the ZX-calculus that are complete with regard to the intended model. All together, our new notions aim to capture the fact that compositionality is at its best when it is 'real', 'non-trivial', and even more so when it is also 'complete'. At this point we only put forward the intuitive and/or restricted formal definitions, and leave a fully comprehensive definition to future collaborative work.

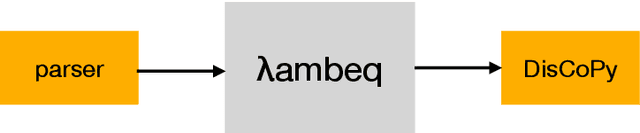

lambeq: An Efficient High-Level Python Library for Quantum NLP

Oct 08, 2021

Abstract: We present lambeq, the first high-level Python library for Quantum Natural Language Processing (QNLP). The open-source toolkit offers a detailed hierarchy of modules and classes implementing all stages of a pipeline for converting sentences to string diagrams, tensor networks, and quantum circuits ready to be used on a quantum computer. lambeq supports syntactic parsing, rewriting and simplification of string diagrams, ansatz creation and manipulation, as well as a number of compositional models for preparing quantum-friendly representations of sentences, employing various degrees of syntax sensitivity. We present the generic architecture and describe the most important modules in detail, demonstrating the usage with illustrative examples. Further, we test the toolkit in practice by using it to perform a number of experiments on simple NLP tasks, implementing both classical and quantum pipelines.

Talking Space: inference from spatial linguistic meanings

Sep 16, 2021

Abstract: This paper concerns the intersection of natural language and the physical space around us, in which we live and within which we observe and/or imagine things. Many important features of language have spatial connotations; for example, many prepositions (like in, next to, after, on, etc.) are fundamentally spatial. Space is also a key factor in the meanings of many words, phrases, sentences and texts, and space is a key context, if not the key context, for referencing (e.g. pointing) and embodiment. We propose a mechanism for how space and linguistic structure can be made to interact in a matching compositional fashion. Examples include Cartesian space, subway stations, chess pieces on a chessboard, and Penrose's staircase. The starting point for our construction is the DisCoCat model of compositional natural language meaning, which we relax to accommodate physical space. We address the issue of having multiple agents/objects in a space, including the case that each agent has different capabilities with respect to that space, e.g., the specific moves each chess piece can make, or the different velocities one may be able to reach. Once our model is in place, we show how inferences drawing from the structure of physical space can be made. We also show how linguistic models of space can interact with other such models related to our senses and/or embodiment, such as the conceptual spaces of colour, taste and smell, resulting in a rich compositional model of meaning that is close to human experience and embodiment in the world.
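
One way to see how inferences can be drawn from the structure of a space is to read meanings as relations and compose them. The sketch below is a toy version of the subway example, assuming hypothetical stations A to D on a single line and "one stop from" as the only primitive relation; it is not the paper's construction. Composing the relation with itself (a Boolean matrix product) infers which stations are reachable in exactly two stops, counting walks that double back.

```python
import numpy as np

stations = ["A", "B", "C", "D"]  # hypothetical stations on one line
idx = {s: i for i, s in enumerate(stations)}

# adjacent[i, j] is True when station i is one stop from station j (symmetric).
adjacent = np.zeros((len(stations), len(stations)), dtype=bool)
for a, b in [("A", "B"), ("B", "C"), ("C", "D")]:
    adjacent[idx[a], idx[b]] = adjacent[idx[b], idx[a]] = True

def compose(r: np.ndarray, s: np.ndarray) -> np.ndarray:
    """Relational composition: x (r;s) z holds iff some y has x r y and y s z."""
    return (r.astype(int) @ s.astype(int)) > 0

two_stops = compose(adjacent, adjacent)
print(bool(two_stops[idx["A"], idx["C"]]), bool(two_stops[idx["A"], idx["D"]]))
```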

Composing Conversational Negation

Jul 14, 2021

Abstract: Negation in natural language does not follow Boolean logic and is therefore inherently difficult to model. In particular, it takes into account the broader understanding of what is being negated. In previous work, we proposed a framework for negation of words that accounts for 'worldly context'. In this paper, we extend that proposal to account for the compositional structure inherent in language, within the DisCoCirc framework. We compose the negations of single words to capture the negation of sentences. We also describe how to model the negation of words whose meanings evolve in the text.
