Alexis Toumi

University of Oxford

Higher-Order DisCoCat (Peirce-Lambek-Montague semantics)

Nov 29, 2023Abstract:We propose a new definition of higher-order DisCoCat (categorical compositional distributional) models where the meaning of a word is not a diagram, but a diagram-valued higher-order function. Our models can be seen as a variant of Montague semantics based on a lambda calculus where the primitives act on string diagrams rather than logical formulae. As a special case, we show how to translate from the Lambek calculus into Peirce's system beta for first-order logic. This allows us to give a purely diagrammatic treatment of higher-order and non-linear processes in natural language semantics: adverbs, prepositions, negation and quantifiers. The theoretical definition presented in this article comes with a proof-of-concept implementation in DisCoPy, the Python library for string diagrams.

Category Theory for Quantum Natural Language Processing

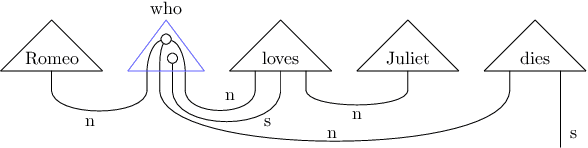

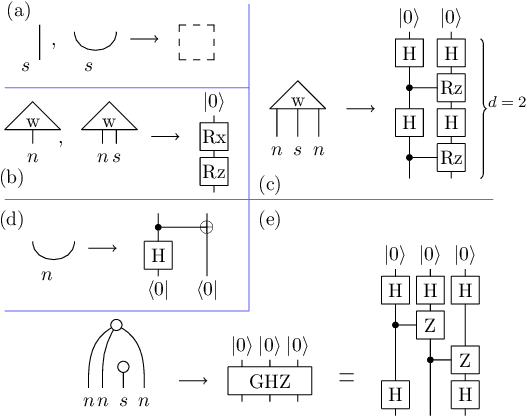

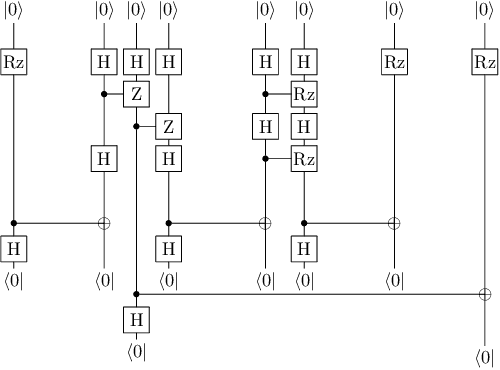

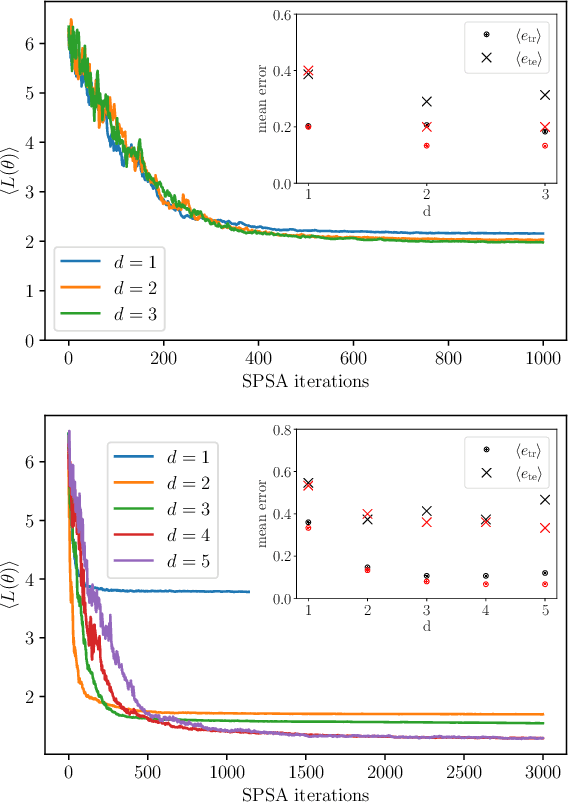

Dec 13, 2022Abstract:This thesis introduces quantum natural language processing (QNLP) models based on a simple yet powerful analogy between computational linguistics and quantum mechanics: grammar as entanglement. The grammatical structure of text and sentences connects the meaning of words in the same way that entanglement structure connects the states of quantum systems. Category theory allows to make this language-to-qubit analogy formal: it is a monoidal functor from grammar to vector spaces. We turn this abstract analogy into a concrete algorithm that translates the grammatical structure onto the architecture of parameterised quantum circuits. We then use a hybrid classical-quantum algorithm to train the model so that evaluating the circuits computes the meaning of sentences in data-driven tasks. The implementation of QNLP models motivated the development of DisCoPy (Distributional Compositional Python), the toolkit for applied category theory of which the first chapter gives a comprehensive overview. String diagrams are the core data structure of DisCoPy, they allow to reason about computation at a high level of abstraction. We show how they can encode both grammatical structures and quantum circuits, but also logical formulae, neural networks or arbitrary Python code. Monoidal functors allow to translate these abstract diagrams into concrete computation, interfacing with optimised task-specific libraries. The second chapter uses DisCopy to implement QNLP models as parameterised functors from grammar to quantum circuits. It gives a first proof-of-concept for the more general concept of functorial learning: generalising machine learning from functions to functors by learning from diagram-like data. In order to learn optimal functor parameters via gradient descent, we introduce the notion of diagrammatic differentiation: a graphical calculus for computing the gradients of parameterised diagrams.

lambeq: An Efficient High-Level Python Library for Quantum NLP

Oct 08, 2021

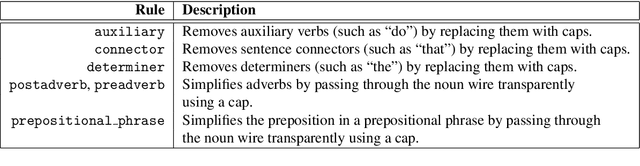

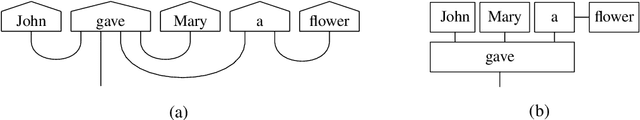

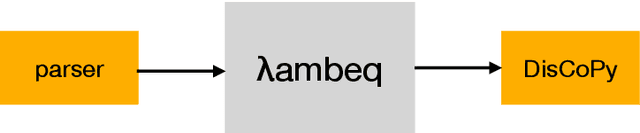

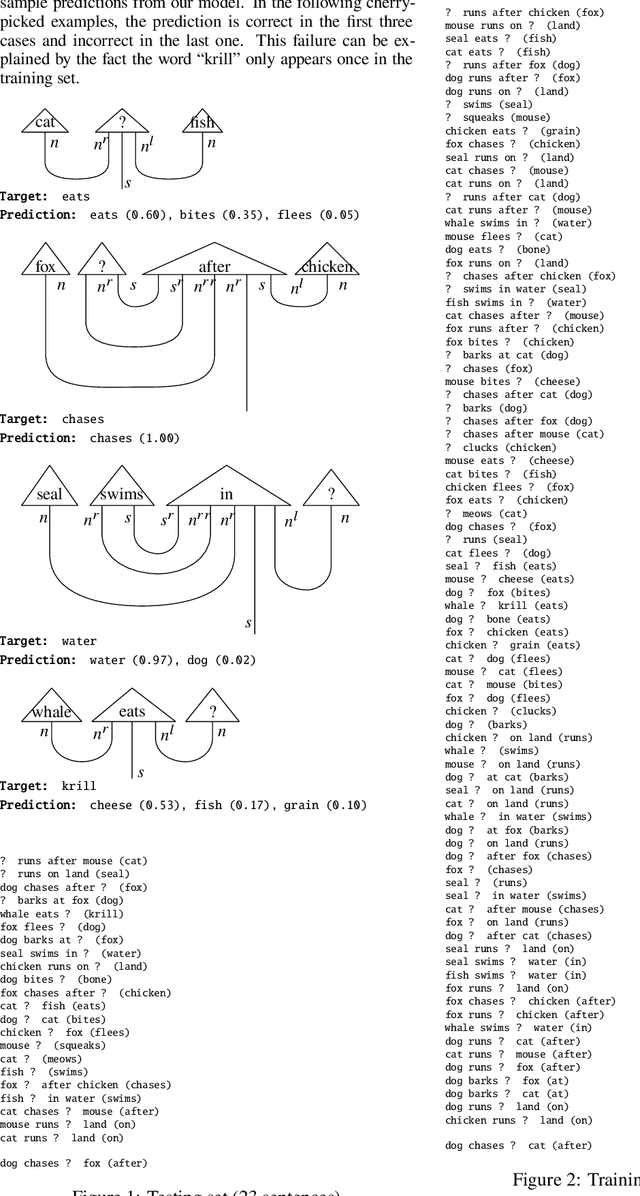

Abstract:We present lambeq, the first high-level Python library for Quantum Natural Language Processing (QNLP). The open-source toolkit offers a detailed hierarchy of modules and classes implementing all stages of a pipeline for converting sentences to string diagrams, tensor networks, and quantum circuits ready to be used on a quantum computer. lambeq supports syntactic parsing, rewriting and simplification of string diagrams, ansatz creation and manipulation, as well as a number of compositional models for preparing quantum-friendly representations of sentences, employing various degrees of syntax sensitivity. We present the generic architecture and describe the most important modules in detail, demonstrating the usage with illustrative examples. Further, we test the toolkit in practice by using it to perform a number of experiments on simple NLP tasks, implementing both classical and quantum pipelines.

How to make qubits speak

Jul 02, 2021

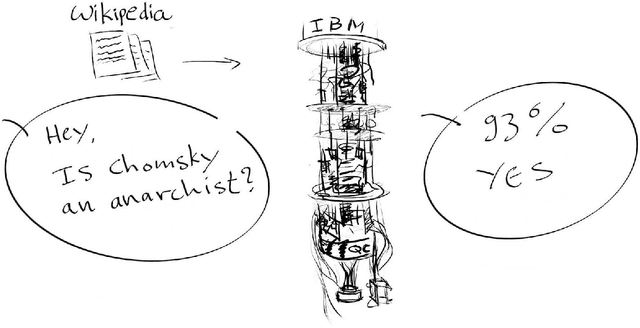

Abstract:This is a story about making quantum computers speak, and doing so in a quantum-native, compositional and meaning-aware manner. Recently we did question-answering with an actual quantum computer. We explain what we did, stress that this was all done in terms of pictures, and provide many pointers to the related literature. In fact, besides natural language, many other things can be implemented in a quantum-native, compositional and meaning-aware manner, and we provide the reader with some indications of that broader pictorial landscape, including our account on the notion of compositionality. We also provide some guidance for the actual execution, so that the reader can give it a go as well.

Functorial Language Models

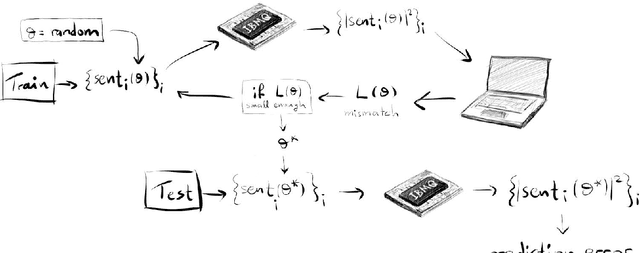

Mar 26, 2021

Abstract:We introduce functorial language models: a principled way to compute probability distributions over word sequences given a monoidal functor from grammar to meaning. This yields a method for training categorical compositional distributional (DisCoCat) models on raw text data. We provide a proof-of-concept implementation in DisCoPy, the Python toolbox for monoidal categories.

Diagrammatic Differentiation for Quantum Machine Learning

Mar 14, 2021Abstract:We introduce diagrammatic differentiation for tensor calculus by generalising the dual number construction from rigs to monoidal categories. Applying this to ZX diagrams, we show how to calculate diagrammatically the gradient of a linear map with respect to a phase parameter. For diagrams of parametrised quantum circuits, we get the well-known parameter-shift rule at the basis of many variational quantum algorithms. We then extend our method to the automatic differentation of hybrid classical-quantum circuits, using diagrams with bubbles to encode arbitrary non-linear operators. Moreover, diagrammatic differentiation comes with an open-source implementation in DisCoPy, the Python library for monoidal categories. Diagrammatic gradients of classical-quantum circuits can then be simplified using the PyZX library and executed on quantum hardware via the tket compiler. This opens the door to many practical applications harnessing both the structure of string diagrams and the computational power of quantum machine learning.

Grammar-Aware Question-Answering on Quantum Computers

Dec 07, 2020

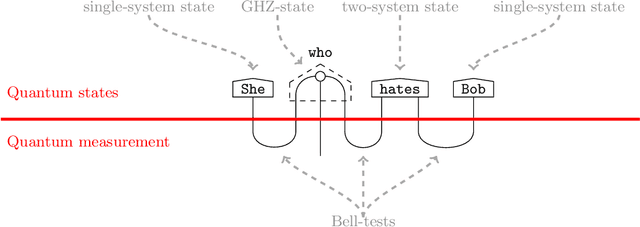

Abstract:Natural language processing (NLP) is at the forefront of great advances in contemporary AI, and it is arguably one of the most challenging areas of the field. At the same time, with the steady growth of quantum hardware and notable improvements towards implementations of quantum algorithms, we are approaching an era when quantum computers perform tasks that cannot be done on classical computers with a reasonable amount of resources. This provides a new range of opportunities for AI, and for NLP specifically. Earlier work has already demonstrated a potential quantum advantage for NLP in a number of manners: (i) algorithmic speedups for search-related or classification tasks, which are the most dominant tasks within NLP, (ii) exponentially large quantum state spaces allow for accommodating complex linguistic structures, (iii) novel models of meaning employing density matrices naturally model linguistic phenomena such as hyponymy and linguistic ambiguity, among others. In this work, we perform the first implementation of an NLP task on noisy intermediate-scale quantum (NISQ) hardware. Sentences are instantiated as parameterised quantum circuits. We encode word-meanings in quantum states and we explicitly account for grammatical structure, which even in mainstream NLP is not commonplace, by faithfully hard-wiring it as entangling operations. This makes our approach to quantum natural language processing (QNLP) particularly NISQ-friendly. Our novel QNLP model shows concrete promise for scalability as the quality of the quantum hardware improves in the near future.

Foundations for Near-Term Quantum Natural Language Processing

Dec 07, 2020Abstract:We provide conceptual and mathematical foundations for near-term quantum natural language processing (QNLP), and do so in quantum computer scientist friendly terms. We opted for an expository presentation style, and provide references for supporting empirical evidence and formal statements concerning mathematical generality. We recall how the quantum model for natural language that we employ canonically combines linguistic meanings with rich linguistic structure, most notably grammar. In particular, the fact that it takes a quantum-like model to combine meaning and structure, establishes QNLP as quantum-native, on par with simulation of quantum systems. Moreover, the now leading Noisy Intermediate-Scale Quantum (NISQ) paradigm for encoding classical data on quantum hardware, variational quantum circuits, makes NISQ exceptionally QNLP-friendly: linguistic structure can be encoded as a free lunch, in contrast to the apparently exponentially expensive classical encoding of grammar. Quantum speed-up for QNLP tasks has already been established in previous work with Will Zeng. Here we provide a broader range of tasks which all enjoy the same advantage. Diagrammatic reasoning is at the heart of QNLP. Firstly, the quantum model interprets language as quantum processes via the diagrammatic formalism of categorical quantum mechanics. Secondly, these diagrams are via ZX-calculus translated into quantum circuits. Parameterisations of meanings then become the circuit variables to be learned. Our encoding of linguistic structure within quantum circuits also embodies a novel approach for establishing word-meanings that goes beyond the current standards in mainstream AI, by placing linguistic structure at the heart of Wittgenstein's meaning-is-context.

Functorial Language Games for Question Answering

May 19, 2020Abstract:We present some categorical investigations into Wittgenstein's language-games, with applications to game-theoretic pragmatics and question-answering in natural language processing.

Quantum Natural Language Processing on Near-Term Quantum Computers

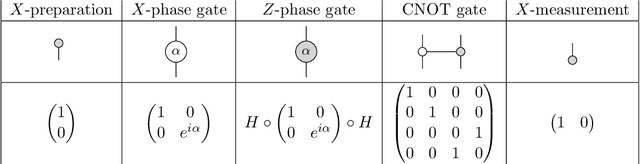

May 08, 2020Abstract:In this work, we describe a full-stack pipeline for natural language processing on near-term quantum computers, aka QNLP. The language modelling framework we employ is that of compositional distributional semantics (DisCoCat), which extends and complements the compositional structure of pregroup grammars. Within this model, the grammatical reduction of a sentence is interpreted as a diagram, encoding a specific interaction of words according to the grammar. It is this interaction which, together with a specific choice of word embedding, realises the meaning (or "semantics") of a sentence. Building on the formal quantum-like nature of such interactions, we present a method for mapping DisCoCat diagrams to quantum circuits. Our methodology is compatible both with NISQ devices and with established Quantum Machine Learning techniques, paving the way to near-term applications of quantum technology to natural language processing.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge