Robin Lorenz

Towards Compositional Interpretability for XAI

Jun 25, 2024

Abstract:Artificial intelligence (AI) is currently based largely on black-box machine learning models which lack interpretability. The field of eXplainable AI (XAI) strives to address this major concern, being critical in high-stakes areas such as the finance, legal and health sectors. We present an approach to defining AI models and their interpretability based on category theory. For this we employ the notion of a compositional model, which sees a model in terms of formal string diagrams which capture its abstract structure together with its concrete implementation. This comprehensive view incorporates deterministic, probabilistic and quantum models. We compare a wide range of AI models as compositional models, including linear and rule-based models, (recurrent) neural networks, transformers, VAEs, and causal and DisCoCirc models. Next we give a definition of interpretation of a model in terms of its compositional structure, demonstrating how to analyse the interpretability of a model, and using this to clarify common themes in XAI. We find that what makes the standard 'intrinsically interpretable' models so transparent is brought out most clearly diagrammatically. This leads us to the more general notion of compositionally-interpretable (CI) models, which additionally include, for instance, causal, conceptual space, and DisCoCirc models. We next demonstrate the explainability benefits of CI models. Firstly, their compositional structure may allow the computation of other quantities of interest, and may facilitate inference from the model to the modelled phenomenon by matching its structure. Secondly, they allow for diagrammatic explanations for their behaviour, based on influence constraints, diagram surgery and rewrite explanations. Finally, we discuss many future directions for the approach, raising the question of how to learn such meaningfully structured models in practice.

Causal models in string diagrams

Apr 15, 2023

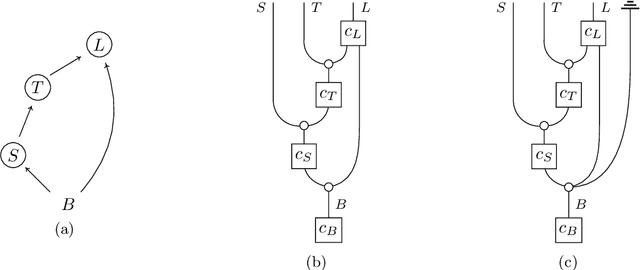

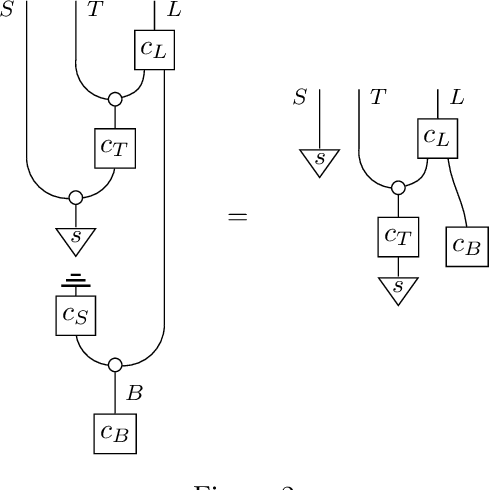

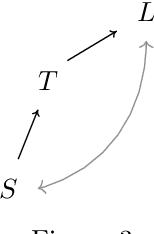

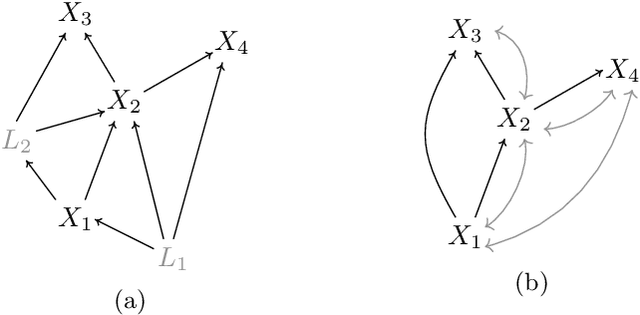

Abstract:The framework of causal models provides a principled approach to causal reasoning, applied today across many scientific domains. Here we present this framework in the language of string diagrams, interpreted formally using category theory. A class of string diagrams, called network diagrams, are in 1-to-1 correspondence with directed acyclic graphs. A causal model is given by such a diagram with its components interpreted as stochastic maps, functions, or general channels in a symmetric monoidal category with a 'copy-discard' structure (cd-category), turning a model into a single mathematical object that can be reasoned with intuitively and yet rigorously. Building on prior works by Fong and Jacobs, Kissinger and Zanasi, as well as Fritz and Klingler, we present diagrammatic definitions of causal models and functional causal models in a cd-category, generalising causal Bayesian networks and structural causal models, respectively. We formalise general interventions on a model, including but beyond do-interventions, and present the natural notion of an open causal model with inputs. We also give an approach to conditioning based on a normalisation box, allowing for causal inference calculations to be done fully diagrammatically. We define counterfactuals in this setup, and treat the problems of the identifiability of causal effects and counterfactuals fully diagrammatically. The benefits of such a presentation of causal models lie in foundational questions in causal reasoning and in their clarificatory role and pedagogical value. This work aims to be accessible to different communities, from causal model practitioners to researchers in applied category theory, and discusses many examples from the literature for illustration. Overall, we argue and demonstrate that causal reasoning according to the causal model framework is most naturally and intuitively done as diagrammatic reasoning.
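As a rough illustration of the distinction the abstract draws between conditioning and do-interventions, the following is a minimal sketch in plain Python, not the paper's diagrammatic formalism. It assumes a hypothetical three-variable model Z -> X, Z -> Y, X -> Y with binary variables; all probabilities and names (`p_z`, `p_x_given_z`, `p_y_given_xz`, `joint`) are invented for illustration. Each mechanism plays the role of a stochastic map, and a do-intervention is modelled by swapping the mechanism for X with a point distribution.

```python
from itertools import product

# Hypothetical mechanisms (assumed numbers) for the model Z -> X, Z -> Y, X -> Y.
p_z = {0: 0.5, 1: 0.5}
# p_x_given_z[z][x] is the probability of X = x given Z = z.
p_x_given_z = {0: {0: 0.8, 1: 0.2}, 1: {0: 0.2, 1: 0.8}}


def p_y_given_xz(y, x, z):
    """Probability of Y = y given X = x and Z = z (assumed linear form)."""
    p1 = 0.1 + 0.3 * x + 0.5 * z
    return p1 if y == 1 else 1.0 - p1


def joint(x_mechanism):
    """Compose the mechanisms into a joint distribution over (z, x, y)."""
    return {
        (z, x, y): p_z[z] * x_mechanism[z][x] * p_y_given_xz(y, x, z)
        for z, x, y in product((0, 1), repeat=3)
    }


def prob_y1_given_x1(dist):
    """Condition on X = 1 by restricting the joint and renormalising."""
    num = sum(p for (z, x, y), p in dist.items() if x == 1 and y == 1)
    den = sum(p for (z, x, y), p in dist.items() if x == 1)
    return num / den


# Observational model: X depends on the confounder Z.
observational = joint(p_x_given_z)

# do(X = 1): replace X's mechanism with a point distribution that ignores Z.
do_x1 = {z: {0: 0.0, 1: 1.0} for z in (0, 1)}
interventional = joint(do_x1)

conditioned = prob_y1_given_x1(observational)
intervened = prob_y1_given_x1(interventional)

print(f"P(Y=1 | X=1)     = {conditioned:.2f}")  # 0.80
print(f"P(Y=1 | do(X=1)) = {intervened:.2f}")   # 0.65
```

In this toy model the two quantities differ because Z confounds X and Y: conditioning on X = 1 also shifts the distribution of Z, whereas the intervention leaves Z at its prior.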

lambeq: An Efficient High-Level Python Library for Quantum NLP

Oct 08, 2021

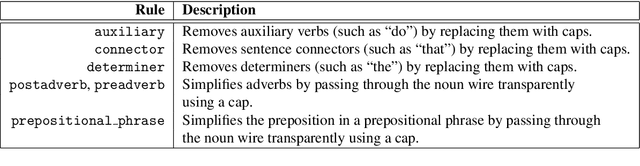

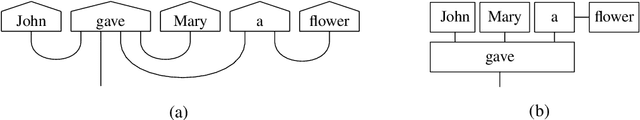

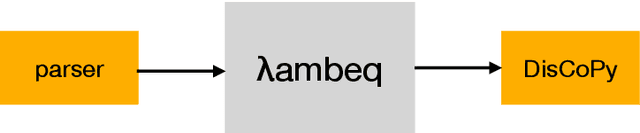

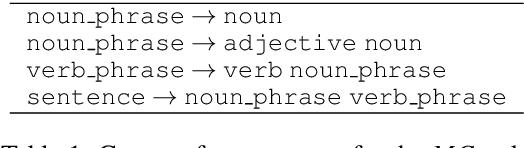

Abstract:We present lambeq, the first high-level Python library for Quantum Natural Language Processing (QNLP). The open-source toolkit offers a detailed hierarchy of modules and classes implementing all stages of a pipeline for converting sentences to string diagrams, tensor networks, and quantum circuits ready to be used on a quantum computer. lambeq supports syntactic parsing, rewriting and simplification of string diagrams, ansatz creation and manipulation, as well as a number of compositional models for preparing quantum-friendly representations of sentences, employing various degrees of syntax sensitivity. We present the generic architecture and describe the most important modules in detail, demonstrating the usage with illustrative examples. Further, we test the toolkit in practice by using it to perform a number of experiments on simple NLP tasks, implementing both classical and quantum pipelines.

QNLP in Practice: Running Compositional Models of Meaning on a Quantum Computer

Feb 25, 2021

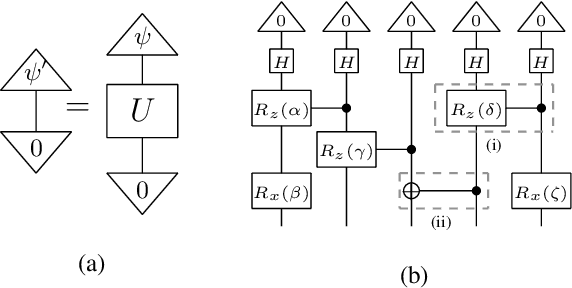

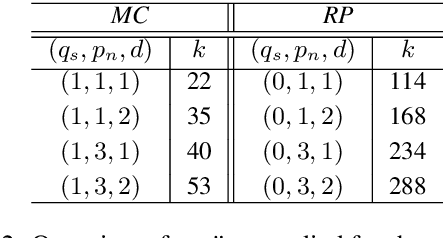

Abstract:Quantum Natural Language Processing (QNLP) deals with the design and implementation of NLP models intended to be run on quantum hardware. In this paper, we present results on the first NLP experiments conducted on Noisy Intermediate-Scale Quantum (NISQ) computers for datasets of size >= 100 sentences. Exploiting the formal similarity of the compositional model of meaning by Coecke et al. (2010) with quantum theory, we create representations for sentences that have a natural mapping to quantum circuits. We use these representations to implement and successfully train two NLP models that solve simple sentence classification tasks on quantum hardware. We describe in detail the main principles, the process and challenges of these experiments, in a way accessible to NLP researchers, thus paving the way for practical Quantum Natural Language Processing.
