Jonathon Liu

Toward Information Theoretic Active Inverse Reinforcement Learning

Dec 31, 2024

Abstract:As AI systems become increasingly autonomous, aligning their decision-making to human preferences is essential. In domains like autonomous driving or robotics, it is impossible to write down the reward function representing these preferences by hand. Inverse reinforcement learning (IRL) offers a promising approach to infer the unknown reward from demonstrations. However, obtaining human demonstrations can be costly. Active IRL addresses this challenge by strategically selecting the most informative scenarios for human demonstration, reducing the amount of required human effort. Where most prior work allowed querying the human for an action at one state at a time, we motivate and analyse scenarios where we collect longer trajectories. We provide an information-theoretic acquisition function, propose an efficient approximation scheme, and illustrate its performance through a set of gridworld experiments as groundwork for future work expanding to more general settings.

On the Anatomy of Attention

Jul 02, 2024

Abstract:We introduce a category-theoretic diagrammatic formalism in order to systematically relate and reason about machine learning models. Our diagrams present architectures intuitively but without loss of essential detail, where natural relationships between models are captured by graphical transformations, and important differences and similarities can be identified at a glance. In this paper, we focus on attention mechanisms: translating folklore into mathematical derivations, and constructing a taxonomy of attention variants in the literature. As a first example of an empirical investigation underpinned by our formalism, we identify recurring anatomical components of attention, which we exhaustively recombine to explore a space of variations on the attention mechanism.

A Pipeline For Discourse Circuits From CCG

Nov 29, 2023

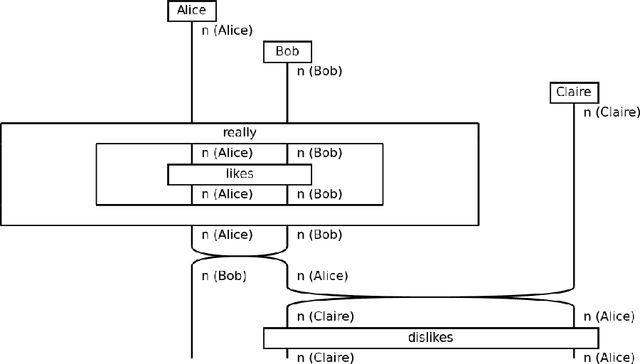

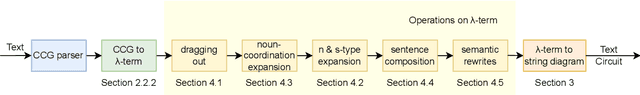

Abstract:There is a significant disconnect between linguistic theory and modern NLP practice, which relies heavily on inscrutable black-box architectures. DisCoCirc is a newly proposed model for meaning that aims to bridge this divide, by providing neuro-symbolic models that incorporate linguistic structure. DisCoCirc represents natural language text as a 'circuit' that captures the core semantic information of the text. These circuits can then be interpreted as modular machine learning models. Additionally, DisCoCirc fulfils another major aim of providing an NLP model that can be implemented on near-term quantum computers. In this paper we describe a software pipeline that converts English text to its DisCoCirc representation. The pipeline achieves coverage over a large fragment of the English language. It relies on Combinatory Categorial Grammar (CCG) parses of the input text as well as coreference resolution information. This semantic and syntactic information is used in several steps to convert the text into a simply-typed $\lambda$-calculus term, and then into a circuit diagram. This pipeline will enable the application of the DisCoCirc framework to NLP tasks, using both classical and quantum approaches.

Distilling Text into Circuits

Jan 25, 2023

Abstract:This paper concerns the structure of meanings within natural language. Earlier, a framework named DisCoCirc was sketched that (1) is compositional and distributional (a.k.a. vectorial); (2) applies to general text; (3) captures linguistic 'connections' between meanings (cf. grammar); (4) updates word meanings as text progresses; (5) structures sentence types; (6) accommodates ambiguity. Here, we realise DisCoCirc for a substantial fragment of English. When passing to DisCoCirc's text circuits, some 'grammatical bureaucracy' is eliminated, that is, DisCoCirc displays a significant degree of (7) inter- and intra-language independence. That is, e.g., independence from word-order conventions that differ across languages, and independence from choices like many short sentences vs. few long sentences. This inter-language independence means our text circuits should carry over to other languages, unlike the language-specific typings of categorial grammars. Hence, text circuits are a lean structure for the 'actual substance of text', that is, the inner workings of meanings within text across several layers of expressiveness (cf. words, sentences, text), and may capture what is truly universal beneath grammar. The elimination of grammatical bureaucracy also explains why DisCoCirc: (8) applies beyond language, e.g. to spatial, visual and other cognitive modes. While humans could not verbally communicate in terms of text circuits, machines can. We first define a 'hybrid grammar' for a fragment of English, i.e. a purpose-built, minimal grammatical formalism needed to obtain text circuits. We then detail a translation process such that all text generated by this grammar yields a text circuit. Conversely, for any text circuit obtained by freely composing the generators, there exists a text (with hybrid grammar) that gives rise to it. Hence: (9) text circuits are generative for text.

Language-independence of DisCoCirc's Text Circuits: English and Urdu

Aug 11, 2022

Abstract:DisCoCirc is a newly proposed framework for representing the grammar and semantics of texts using compositional, generative circuits. While it constitutes a development of the Categorical Distributional Compositional (DisCoCat) framework, it exposes radically new features. In particular, [14] suggested that DisCoCirc goes some way toward eliminating grammatical differences between languages. In this paper we provide a sketch that this is indeed the case for restricted fragments of English and Urdu. We first develop DisCoCirc for a fragment of Urdu, as was done for English in [14]. There is a simple translation from English grammar to Urdu grammar, and vice versa. We then show that differences in grammatical structure between English and Urdu, primarily relating to the ordering of words and phrases, vanish when passing to DisCoCirc circuits.

* In Proceedings E2ECOMPVEC, arXiv:2208.05313
