Beishui Liao

Abstract Argumentation with Subargument Relations

Jan 17, 2026

Abstract:Dung's abstract argumentation framework characterises argument acceptability solely via an attack relation, deliberately abstracting from the internal structure of arguments. While this level of abstraction has enabled a rich body of results, it limits the ability to represent structural dependencies that are central in many structured argumentation formalisms, in particular subargument relations. Existing extensions, including bipolar argumentation frameworks, introduce support relations, but these do not capture the asymmetric and constitutive nature of subarguments or their interaction with attacks. In this paper, we study abstract argumentation frameworks enriched with an explicit subargument relation, treated alongside attack as a basic relation. We analyse how subargument relations interact with attacks and examine their impact on fundamental semantic properties. This framework provides a principled abstraction of structural information and clarifies the role of subarguments in abstract acceptability reasoning.

From Noisy to Native: LLM-driven Graph Restoration for Test-Time Graph Domain Adaptation

Oct 09, 2025

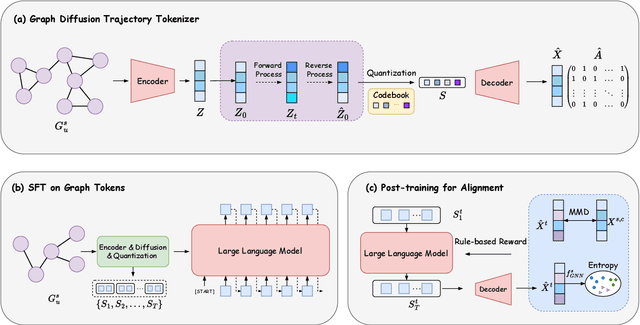

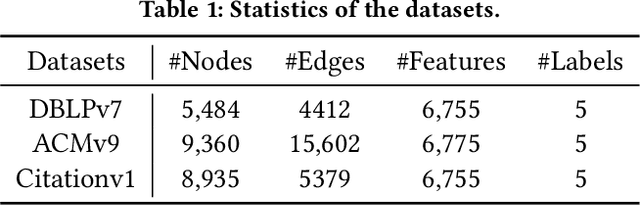

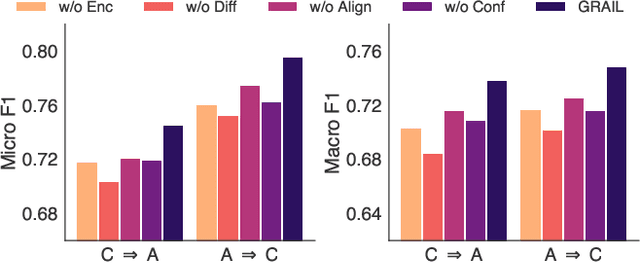

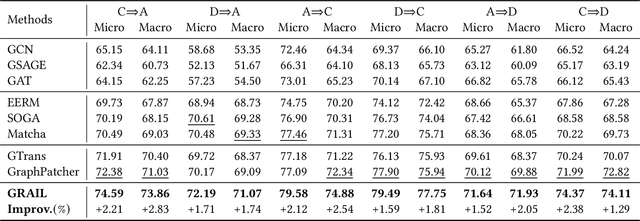

Abstract:Graph domain adaptation (GDA) has attracted considerable attention due to its effectiveness in addressing the domain shift between training and test data. A significant bottleneck in existing graph domain adaptation methods is their reliance on source-domain data, which is often unavailable due to privacy or security concerns. This limitation has driven the development of Test-Time Graph Domain Adaptation (TT-GDA), which aims to transfer knowledge without accessing the source examples. Inspired by the generative power of large language models (LLMs), we introduce a novel framework that reframes TT-GDA as a generative graph restoration problem, "restoring the target graph to its pristine, source-domain-like state". There are two key challenges: (1) we need to construct a reasonable graph restoration process and design an effective encoding scheme that an LLM can understand, bridging the modality gap; (2) we need to devise a mechanism to ensure the restored graph acquires the intrinsic features of the source domain, even without access to the source data. To ensure the effectiveness of graph restoration, we propose GRAIL, which restores the target graph into a state that is well-aligned with the source domain. Specifically, we first compress the node representations into compact latent features and then use a graph diffusion process to model the graph restoration process. A quantization module then encodes the restored features into discrete tokens. Building on this, an LLM is fine-tuned as a generative restorer to transform a "noisy" target graph into a "native" one. To further improve restoration quality, we introduce a reinforcement learning process guided by specialized alignment and confidence rewards. Extensive experiments demonstrate the effectiveness of our approach across various datasets.

Argumentation Computation with Large Language Models : A Benchmark Study

Dec 21, 2024

Abstract:In recent years, large language models (LLMs) have made significant advancements in neuro-symbolic computing. However, the combination of LLMs with argumentation computation remains an underexplored domain, despite its considerable potential for real-world applications requiring defeasible reasoning. In this paper, we aim to investigate the capability of LLMs in determining the extensions of various abstract argumentation semantics. To achieve this, we develop and curate a benchmark comprising diverse abstract argumentation frameworks, accompanied by detailed explanations of algorithms for computing extensions. Subsequently, we fine-tune LLMs on the proposed benchmark, focusing on two fundamental extension-solving tasks. As a comparative baseline, LLMs are evaluated using a chain-of-thought approach, where they struggle to accurately compute semantics. In the experiments, we demonstrate that the process explanation plays a crucial role in semantics computation learning. Models trained with explanations show superior generalization accuracy compared to those trained solely with question-answer pairs. Furthermore, by leveraging the self-explanation capabilities of LLMs, our approach provides detailed illustrations that mitigate the lack of transparency typically associated with neural networks. Our findings contribute to the broader understanding of LLMs' potential in argumentation computation, offering promising avenues for further research in this domain.
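To make "determining the extensions" of an abstract argumentation framework concrete, the following is a minimal sketch of one such computation: the grounded extension, obtained by iterating Dung's characteristic function from the empty set until it stops changing. The abstract does not say which semantics or algorithms the benchmark covers, so grounded semantics is chosen here purely as an illustration; the function name `grounded_extension` and the input encoding (a collection of arguments plus a collection of `(attacker, target)` pairs) are assumptions, not the paper's code.

```python
from typing import Hashable, Iterable, Set, Tuple


def grounded_extension(
    arguments: Iterable[Hashable],
    attacks: Iterable[Tuple[Hashable, Hashable]],
) -> Set[Hashable]:
    """Return the grounded extension of the framework (arguments, attacks).

    Assumes every argument mentioned in `attacks` also appears in `arguments`.
    """
    args = set(arguments)

    # Map each argument to the set of arguments that attack it.
    attackers = {a: set() for a in args}
    for source, target in attacks:
        attackers[target].add(source)

    extension: Set[Hashable] = set()
    while True:
        # An argument is defended by `extension` if each of its attackers
        # is itself attacked by at least one member of `extension`.
        defended = {
            a
            for a in args
            if all(attackers[b] & extension for b in attackers[a])
        }
        if defended == extension:
            return extension
        extension = defended


if __name__ == "__main__":
    # Chain a -> b -> c: a is unattacked, and a defends c against b.
    print(grounded_extension(["a", "b", "c"], [("a", "b"), ("b", "c")]))
```

In the chain example, `a` is accepted because nothing attacks it, `b` is rejected, and `c` is accepted because its only attacker is attacked by `a`. For a mutual attack between two arguments, the iteration stops immediately at the empty set.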

Defense semantics of argumentation: revisit

Nov 22, 2023

Abstract:In this paper we introduce a novel semantics, called defense semantics, for Dung's abstract argumentation frameworks in terms of a notion of (partial) defense, which is a triple encoding that one argument is (partially) defended by another argument via attacking the attacker of the first argument. In terms of defense semantics, we show that defenses related to self-attacked arguments and arguments in 3-cycles are unsatisfiable in any situation and therefore can be removed without affecting the defense semantics of an AF. Then, we introduce a new notion of defense equivalence of AFs, and compare defense equivalence with standard equivalence and strong equivalence, respectively. Finally, by exploiting defense semantics, we define two kinds of reasons for accepting arguments, i.e., direct reasons and root reasons, and a notion of root equivalence of AFs that can be used in argumentation summarization.

A Concept and Argumentation based Interpretable Model in High Risk Domains

Aug 17, 2022

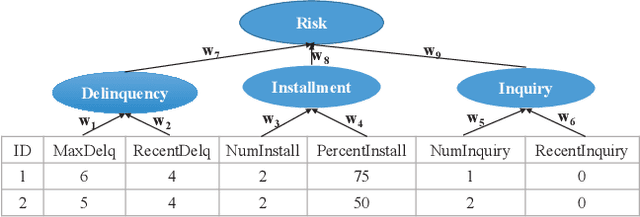

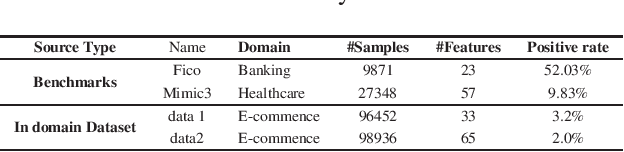

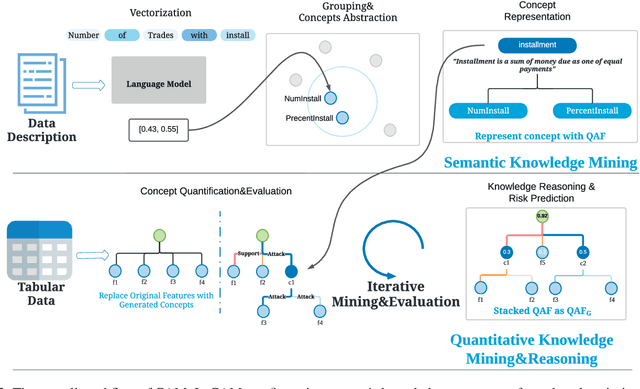

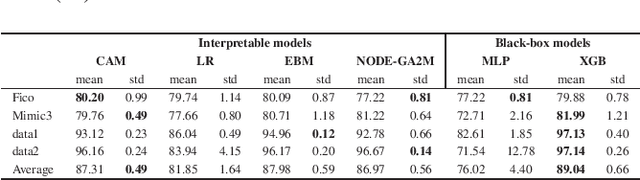

Abstract:Interpretability has become an essential topic for artificial intelligence in some high-risk domains such as healthcare, banking, and security. For commonly used tabular data, traditional methods train end-to-end machine learning models with numerical and categorical data only, and do not leverage human-understandable knowledge such as data descriptions. Yet mining human-level knowledge from tabular data and using it for prediction remains a challenge. Therefore, we propose a concept and argumentation based model (CAM) that includes the following two components: a novel concept mining method to obtain human-understandable concepts and their relations from both descriptions of features and the underlying data, and a quantitative argumentation-based method for knowledge representation and reasoning. As a result, CAM provides decisions that are based on human-level knowledge, and the reasoning process is intrinsically interpretable. Finally, to visualize the proposed interpretable model, we provide a dialogical explanation that contains the dominant reasoning path within CAM. Experimental results on both an open-source benchmark dataset and a real-world business dataset show that (1) CAM is transparent and interpretable, and the knowledge inside the CAM is coherent with human understanding; (2) our interpretable approach can reach competitive results compared with other state-of-the-art models.

Value-based Practical Reasoning: Modal Logic + Argumentation

Apr 11, 2022

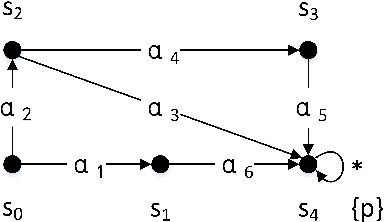

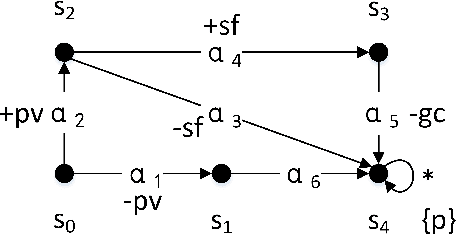

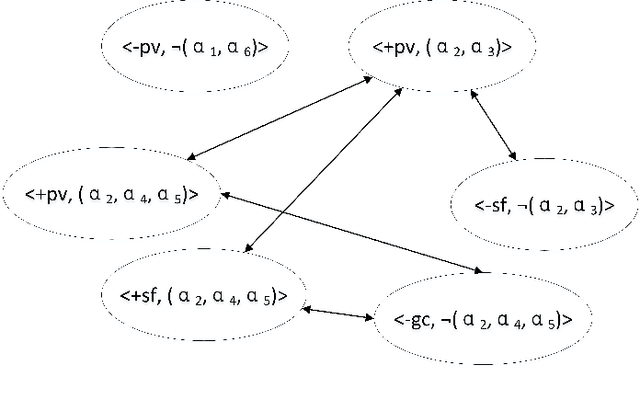

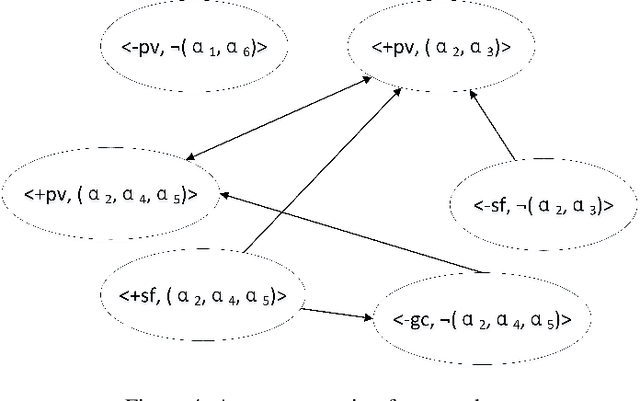

Abstract:Autonomous agents are supposed to be able to finish tasks or achieve goals that are assigned by their users through performing a sequence of actions. Since there might exist multiple plans that an agent can follow and each plan might promote or demote different values along each action, the agent should be able to resolve the conflicts between them and evaluate which plan he should follow. In this paper, we develop a logic-based framework that combines modal logic and argumentation for value-based practical reasoning with plans. Modal logic is used as a technique to represent and verify whether a plan with its local properties of value promotion or demotion can be followed to achieve an agent's goal. We then propose an argumentation-based approach that allows an agent to reason about his plans in the form of supporting or objecting to a plan using the verification results.

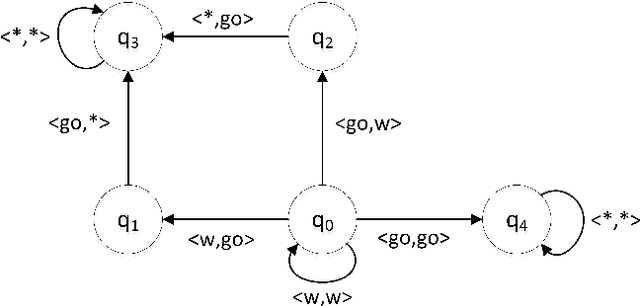

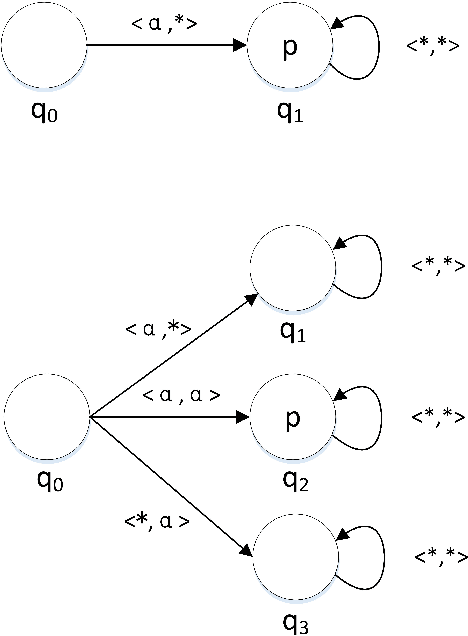

BTPK-based learning: An Interpretable Method for Named Entity Recognition

Jan 24, 2022

Abstract:Named entity recognition (NER) is an essential task in natural language processing, but the internal mechanism of most NER models is a black box for users. In some high-stakes decision-making areas, improving the interpretability of an NER method is crucial but challenging. In this paper, based on the existing Deterministic Talmudic Public announcement logic (TPK) model, we propose a novel binary tree model (called BTPK) and apply it to two widely used Bi-RNNs to obtain BTPK-based interpretable ones. Then, we design a counterfactual verification module to verify the BTPK-based learning method. Experimental results on three public datasets show that BTPK-based learning outperforms two classical Bi-RNNs with self-attention, especially on small, simple data and relatively large, complex data. Moreover, the counterfactual verification demonstrates that the explanations provided by the BTPK-based learning method are reasonable and accurate in NER tasks. In addition, the logical reasoning based on BTPK shows how Bi-RNNs handle NER tasks, with different distances of public announcements on long and complex sequences.

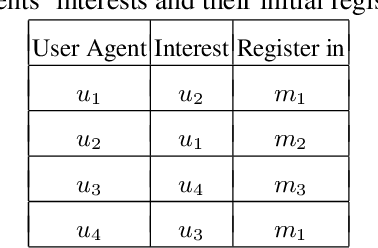

A Formal Framework for Reasoning about Agents' Independence in Self-organizing Multi-agent Systems

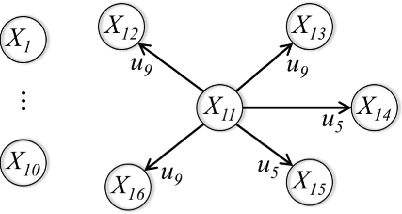

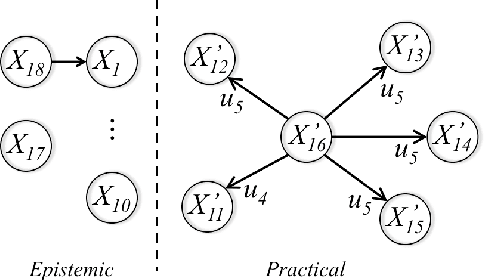

May 26, 2021

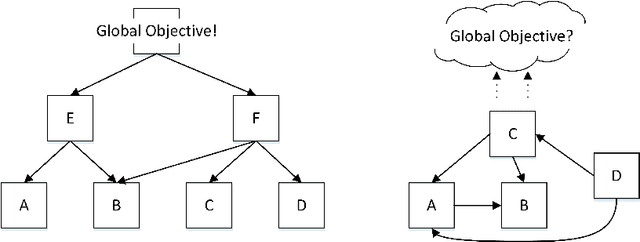

Abstract:Self-organization is a process where a stable pattern is formed by the cooperative behavior between parts of an initially disordered system without external control or influence. It has been introduced to multi-agent systems as an internal control process or mechanism to solve difficult problems spontaneously. However, because a self-organizing multi-agent system has autonomous agents and local interactions between them, it is difficult to predict the behavior of the system from the behavior of the local agents we design. This paper proposes a logic-based framework of self-organizing multi-agent systems, where agents interact with each other by following their prescribed local rules. The dependence relation between coalitions of agents regarding their contributions to the global behavior of the system is reasoned about from the structural and semantic perspectives. We show that the computational complexity of verifying such a self-organizing multi-agent system is in exponential time. We then combine our framework with graph theory to decompose a system into different coalitions located in different layers, which allows us to verify agents' full contributions more efficiently. The resulting information about agents' full contributions allows us to understand the complex link between local agent behavior and system level behavior in a self-organizing multi-agent system. Finally, we show how we can use our framework to model a constraint satisfaction problem.

A Bayesian Approach to Direct and Inverse Abstract Argumentation Problems

Sep 10, 2019

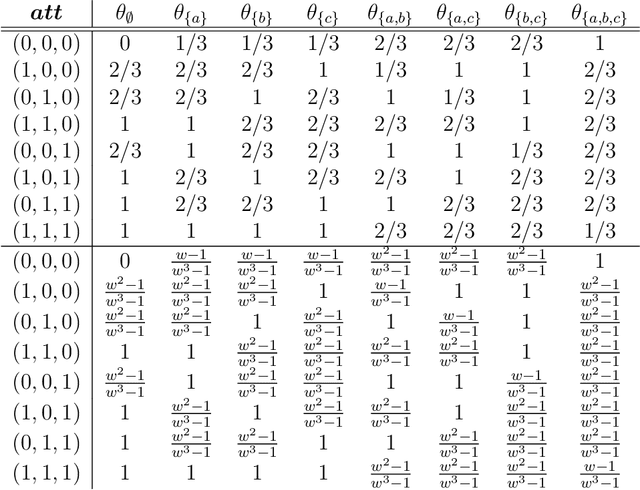

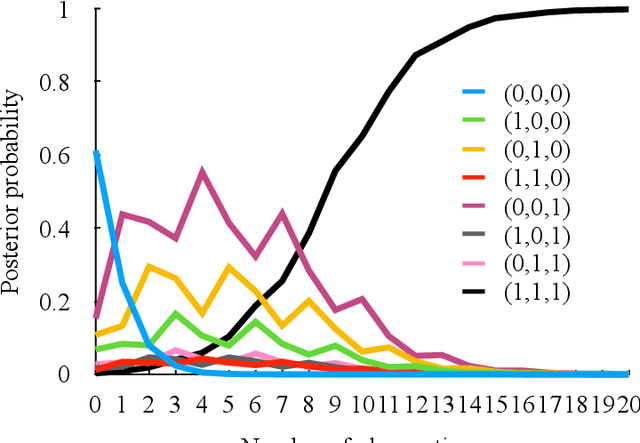

Abstract:This paper studies a fundamental mechanism for detecting conflicts between arguments, given sentiments regarding the acceptability of those arguments. To tackle this, we introduce the inverse problem of abstract argumentation. Given noisy sets of acceptable arguments, it aims to find attack relations that explain the sets well in terms of acceptability semantics. It is the inverse of the direct problem, the traditional problem of abstract argumentation, which focuses on finding sets of acceptable arguments in terms of the semantics given an attack relation between the arguments. We give a probabilistic model handling both problems in a way that is faithful to the acceptability semantics. From a theoretical point of view, we show that a solution to both the direct and inverse problems is a special case of probabilistic inference on the model. We discuss how the model provides a natural extension of the semantics to cope with uncertain attack relations distributed probabilistically. From an empirical point of view, we argue that it reasonably predicts individuals' sentiments regarding the acceptability of arguments. This paper contributes to laying the foundation for making acceptability semantics data-driven and provides a way to tackle the knowledge acquisition bottleneck.

Representation, Justification and Explanation in a Value Driven Agent: An Argumentation-Based Approach

Dec 13, 2018

Abstract:For an autonomous system, the ability to justify and explain its decision making is crucial to improve its transparency and trustworthiness. This paper proposes an argumentation-based approach to represent, justify and explain the decision making of a value driven agent (VDA). By using a newly defined formal language, some implicit knowledge of a VDA is made explicit. The selection of an action in each situation is justified by constructing and comparing arguments supporting different actions. In terms of a constructed argumentation framework and its extensions, the reasons for explaining an action are defined in terms of the arguments for or against the action, by exploiting their defeat relation, as well as their premises and conclusions.
