Nir Oren

Eliciting Rational Initial Weights in Gradual Argumentation

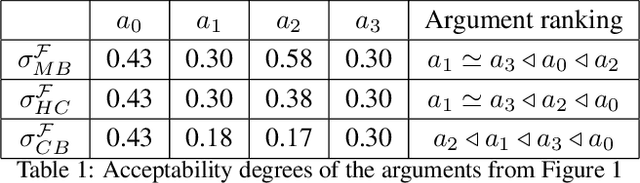

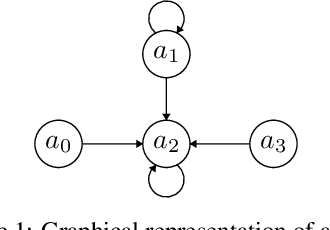

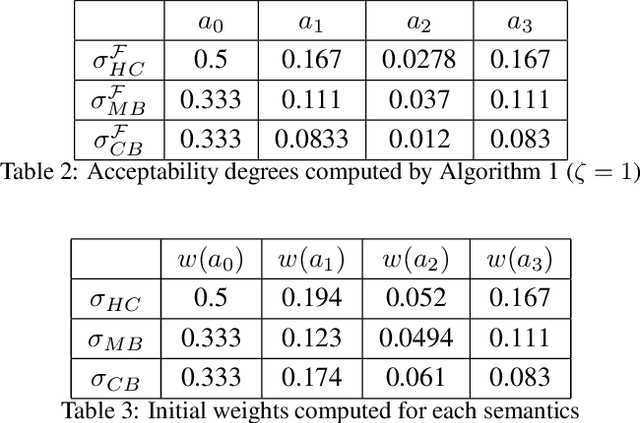

Feb 11, 2025

Abstract:Many semantics for weighted argumentation frameworks assume that each argument is associated with an initial weight. However, eliciting these initial weights poses challenges: (1) accurately providing a specific numerical value is often difficult, and (2) individuals frequently confuse initial weights with acceptability degrees in the presence of other arguments. To address these issues, we propose an elicitation pipeline that allows one to specify acceptability degree intervals for each argument. By employing gradual semantics, we can refine these intervals when they are rational, restore rationality when they are not, and ultimately identify possible initial weights for each argument.

Monkey Transfer Learning Can Improve Human Pose Estimation

Dec 20, 2024

Abstract:In this study, we investigated whether transfer learning from macaque monkeys could improve human pose estimation. Current state-of-the-art pose estimation techniques, often employing deep neural networks, can match human annotation in non-clinical datasets. However, they underperform in novel situations, limiting their generalisability to clinical populations with pathological movement patterns. Clinical datasets are not widely available for AI training due to ethical challenges and a lack of data collection. We observe that data from other species may be able to bridge this gap by exposing the network to a broader range of motion cues. We found that utilising data from other species and undertaking transfer learning improved human pose estimation in terms of precision and recall compared to the benchmark, which was trained on humans only. Compared to the benchmark, fewer human training examples were needed for the transfer learning approach (1,000 vs 19,185). These results suggest that macaque pose estimation can improve human pose estimation in clinical situations. Future work should further explore the utility of pose estimation trained with monkey data in clinical populations.

Responsibility-aware Strategic Reasoning in Probabilistic Multi-Agent Systems

Oct 31, 2024

Abstract:Responsibility plays a key role in the development and deployment of trustworthy autonomous systems. In this paper, we focus on the problem of strategic reasoning in probabilistic multi-agent systems with responsibility-aware agents. We introduce the logic PATL+R, a variant of Probabilistic Alternating-time Temporal Logic. The novelty of PATL+R lies in its incorporation of modalities for causal responsibility, providing a framework for responsibility-aware multi-agent strategic reasoning. We present an approach to synthesise joint strategies that satisfy an outcome specified in PATL+R, while optimising the share of expected causal responsibility and reward. This provides a notion of balanced distribution of responsibility and reward gain among agents. To this end, we utilise the Nash equilibrium as the solution concept for our strategic reasoning problem and demonstrate how to compute responsibility-aware Nash equilibrium strategies via a reduction to parametric model checking of concurrent stochastic multi-player games.

Measuring Responsibility in Multi-Agent Systems

Oct 31, 2024

Abstract:We introduce a family of quantitative measures of responsibility in multi-agent planning, building upon the concepts of causal responsibility proposed by Parker et al. [ParkerGL23]. These concepts are formalised within a variant of probabilistic alternating-time temporal logic. Unlike existing approaches, our framework ascribes responsibility to agents for a given outcome by linking probabilities between behaviours and responsibility through three metrics, including an entropy-based measurement of responsibility. This latter measure is the first to capture the causal responsibility properties of outcomes over time, offering an asymptotic measurement that reflects the difficulty of achieving these outcomes. Our approach provides a fresh understanding of responsibility in multi-agent systems, illuminating both the qualitative and quantitative aspects of agents' roles in achieving or preventing outcomes.

An Extension-based Approach for Computing and Verifying Preferences in Abstract Argumentation

Mar 26, 2024

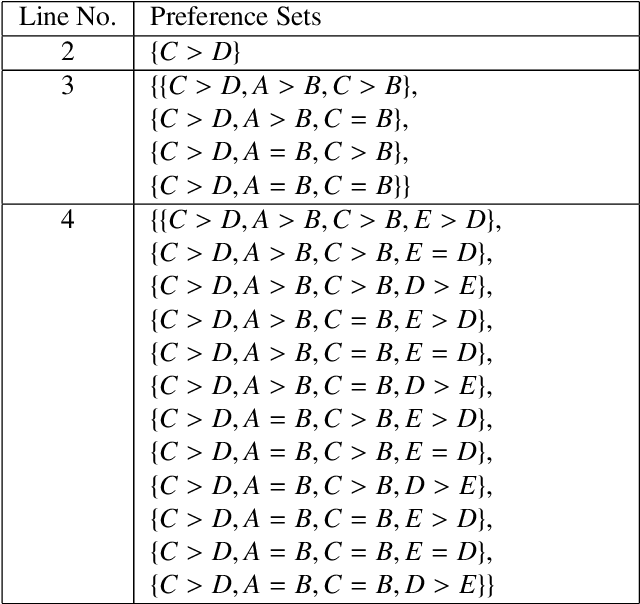

Abstract:We present an extension-based approach for computing and verifying preferences in an abstract argumentation system. Although numerous argumentation semantics have been developed previously for identifying acceptable sets of arguments from an argumentation framework, there is a lack of justification behind their acceptability based on implicit argument preferences. Preference-based argumentation frameworks allow one to determine what arguments are justified given a set of preferences. Our research considers the inverse of the standard reasoning problem, i.e., given an abstract argumentation framework and a set of justified arguments, we compute what the possible preferences over arguments are. Furthermore, there is a need to verify (i.e., assess) that the computed preferences would lead to the acceptable sets of arguments. This paper presents a novel approach and algorithm for exhaustively computing and enumerating all possible sets of preferences (restricted to three identified cases) for a conflict-free set of arguments in an abstract argumentation framework. We prove the soundness, completeness and termination of the algorithm. The research establishes that preferences are determined using an extension-based approach after the evaluation phase (acceptability of arguments) rather than stated beforehand. In this work, we focus our research study on grounded, preferred and stable semantics. We show that the complexity of computing sets of preferences is exponential in the number of arguments, and thus, describe an approximate approach and algorithm to compute the preferences. Furthermore, we present novel algorithms for verifying (i.e., assessing) the computed preferences. We provide details of the implementation of the algorithms (source code has been made available), various experiments performed to evaluate the algorithms and the analysis of the results.

Abstract Weighted Based Gradual Semantics in Argumentation Theory

Jan 21, 2024

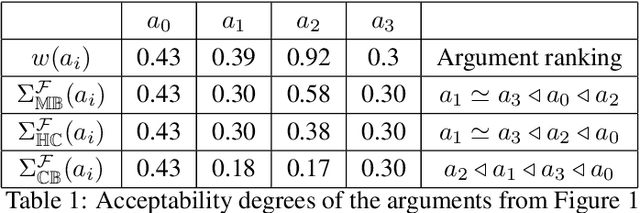

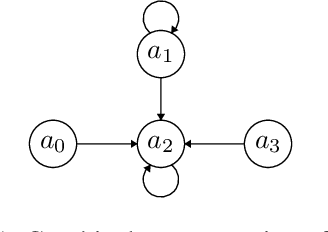

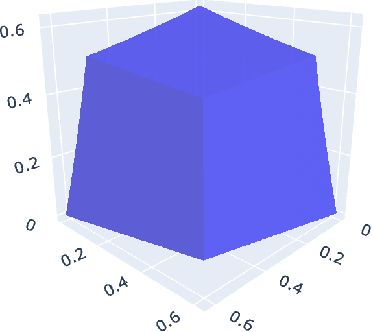

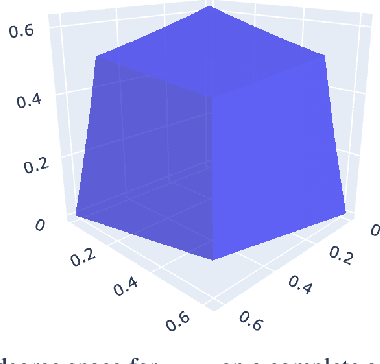

Abstract:Weighted gradual semantics provide an acceptability degree to each argument representing the strength of the argument, computed based on factors including background evidence for the argument, and taking into account interactions between this argument and others. We introduce four important problems linking gradual semantics and acceptability degrees. First, we reexamine the inverse problem, seeking to identify the argument weights of the argumentation framework which lead to a specific final acceptability degree. Second, we ask whether the function mapping between argument weights and acceptability degrees is injective or a homeomorphism onto its image. Third, we ask whether argument weights can be found when preferences, rather than acceptability degrees for arguments, are considered. Fourth, we consider the topology of the space of valid acceptability degrees, asking whether gaps exist in this space. While different gradual semantics have been proposed in the literature, in this paper, we identify a large family of weighted gradual semantics, called abstract weighted based gradual semantics. These generalise many of the existing semantics while maintaining desirable properties such as convergence to a unique fixed point. We also show that a sub-family of the weighted gradual semantics, called abstract weighted (Lp,lambda,mu,A)-based gradual semantics, which includes well-known semantics, solves all four of the aforementioned problems.

Evaluation of Human-Understandability of Global Model Explanations using Decision Tree

Sep 18, 2023

Abstract:In explainable artificial intelligence (XAI) research, the predominant focus has been on interpreting models for experts and practitioners. Model agnostic and local explanation approaches are deemed interpretable and sufficient in many applications. However, in domains like healthcare, where end users are patients without AI or domain expertise, there is an urgent need for model explanations that are more comprehensible and instil trust in the model's operations. We hypothesise that generating model explanations that are narrative, patient-specific and global (holistic of the model) would enable better understandability and enable decision-making. We test this using a decision tree model to generate both local and global explanations for patients identified as having a high risk of coronary heart disease. These explanations are presented to non-expert users. We find a strong individual preference for a specific type of explanation. The majority of participants prefer global explanations, while a smaller group prefers local explanations. A task-based evaluation of mental models of these participants provides valuable feedback to enhance narrative global explanations. This, in turn, guides the design of health informatics systems that are both trustworthy and actionable.

Inferring Attack Relations for Gradual Semantics

Nov 29, 2022

Abstract:A gradual semantics takes a weighted argumentation framework as input and outputs a final acceptability degree for each argument, with different semantics performing the computation in different manners. In this work, we consider the problem of attack inference. That is, given a gradual semantics, a set of arguments with associated initial weights, and the final desirable acceptability degrees associated with each argument, we seek to determine whether there is a set of attacks on those arguments such that we can obtain these acceptability degrees. The main contribution of our work is to demonstrate that the associated decision problem, i.e., whether a set of attacks can exist which allows the final acceptability degrees to occur for given initial weights, is NP-complete for the weighted h-categoriser and cardinality-based semantics, and is polynomial for the weighted max-based semantics, even for the complete version of the problem (where all initial weights and final acceptability degrees are known). We then briefly discuss how this decision problem can be modified to find the attacks themselves and conclude by examining the partial problem where not all initial weights or final acceptability degrees may be known.
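
As an illustration of what such a semantics computes, here is a minimal sketch of the weighted h-categoriser named above, assuming the usual fixed-point definition in which an argument's degree is its initial weight divided by one plus the sum of its attackers' degrees. The function name, the dictionary-based input encoding, and the tolerance parameters are choices made for this sketch and do not come from the paper.

```python
def weighted_h_categoriser(weights, attacks, tol=1e-12, max_iter=10_000):
    """Approximate acceptability degrees under the weighted h-categoriser.

    weights: dict mapping each argument to its initial weight in [0, 1].
    attacks: iterable of (attacker, target) pairs.
    The encoding and parameter names are assumptions of this sketch.
    """
    # Collect the attackers of every argument.
    attackers = {arg: [] for arg in weights}
    for attacker, target in attacks:
        attackers[target].append(attacker)

    # Start from the initial weights and iterate the defining equation
    # degree(a) = w(a) / (1 + sum of the degrees of a's attackers).
    degree = dict(weights)
    for _ in range(max_iter):
        updated = {
            arg: weights[arg] / (1.0 + sum(degree[b] for b in attackers[arg]))
            for arg in weights
        }
        change = max((abs(updated[a] - degree[a]) for a in weights), default=0.0)
        degree = updated
        if change < tol:
            break
    return degree


if __name__ == "__main__":
    # Hypothetical two-argument framework: a attacks b.
    print(weighted_h_categoriser({"a": 1.0, "b": 0.5}, {("a", "b")}))
```

Attack inference runs this computation in the other direction: the weights and the desired degrees are fixed, and the question is whether some choice of `attacks` reproduces those degrees.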

Utility Functions for Human/Robot Interaction

Apr 08, 2022

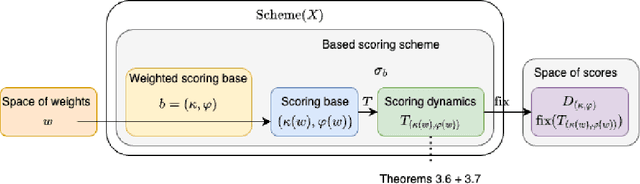

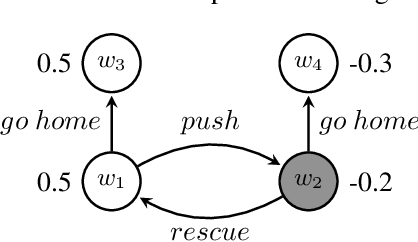

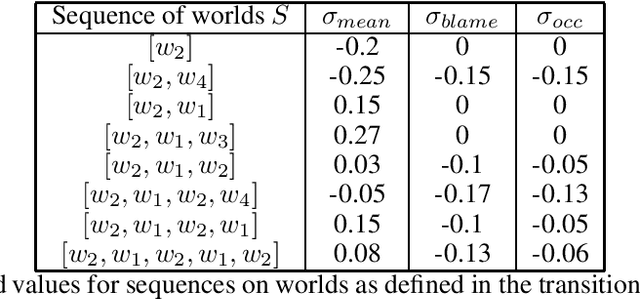

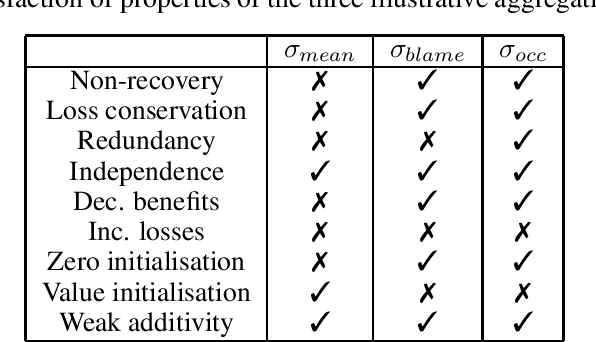

Abstract:In this paper, we place ourselves in the context of human-robot interaction and address the problem of cognitive robot modelling. More precisely, we investigate properties of a utility-based model that will govern a robot's actions. The novelty of this approach lies in embedding the responsibility of the robot over the state of affairs into the utility model via a utility aggregation function. We describe desiderata for such a function and consider related properties.

Analytical Solutions for the Inverse Problem within Gradual Semantics

Mar 02, 2022

Abstract:Gradual semantics within abstract argumentation associate a numeric score with every argument in a system, which represents the level of acceptability of this argument, and from which a preference ordering over arguments can be derived. While some semantics operate over standard argumentation frameworks, many utilise a weighted framework, where a numeric initial weight is associated with each argument. Recent work has examined the inverse problem within gradual semantics. Rather than determining a preference ordering given an argumentation framework and a semantics, the inverse problem takes an argumentation framework, a gradual semantics, and a preference ordering as inputs, and identifies what weights are needed over arguments in the framework to obtain the desired preference ordering. Existing work has attacked the inverse problem numerically, using a root finding algorithm (the bisection method) to identify appropriate initial weights. In this paper we demonstrate that for a class of gradual semantics, an analytical approach can be used to solve the inverse problem. Unlike the current state-of-the-art, such an analytic approach can rapidly find a solution, and is guaranteed to do so. In obtaining this result, we are able to prove several important properties which previous work had posed as conjectures.
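
To give a feel for why a closed-form inverse can exist, the sketch below uses the weighted h-categoriser as an example semantics; this choice is an assumption of the illustration, and the code is not taken from the paper. Under that semantics the degree of an argument equals its weight divided by one plus the sum of its attackers' degrees, so once target degrees consistent with the desired ordering are chosen, the weights follow by rearranging that equation. The function name and input encoding are hypothetical.

```python
def inverse_h_categoriser(target_degrees, attacks):
    """Recover initial weights that yield the given target degrees.

    Assumes the weighted h-categoriser, whose fixed point satisfies
        degree(a) = w(a) / (1 + sum of the degrees of a's attackers),
    so that w(a) = degree(a) * (1 + sum of the attackers' target degrees).

    target_degrees: dict mapping each argument to its desired degree.
    attacks: iterable of (attacker, target) pairs.
    A returned weight above 1 signals that the targets cannot be reached
    with weights restricted to [0, 1] under this semantics.
    """
    # Collect the attackers of every argument.
    attackers = {arg: [] for arg in target_degrees}
    for attacker, target in attacks:
        attackers[target].append(attacker)

    # Rearranged fixed-point equation, applied argument by argument.
    return {
        arg: target_degrees[arg]
        * (1.0 + sum(target_degrees[b] for b in attackers[arg]))
        for arg in target_degrees
    }


if __name__ == "__main__":
    # Hypothetical framework: a attacks b, and a should rank above b.
    print(inverse_h_categoriser({"a": 0.5, "b": 0.25}, {("a", "b")}))
```

No iteration or root finding is involved here, which is the contrast with the bisection-based approach mentioned in the abstract. Scaling all target degrees down preserves the ordering and reduces the resulting weights, which is one way to keep them within [0, 1].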