Wamberto W. Vasconcelos

An Extension-based Approach for Computing and Verifying Preferences in Abstract Argumentation

Mar 26, 2024

Abstract: We present an extension-based approach for computing and verifying preferences in an abstract argumentation system. Although numerous argumentation semantics have been developed for identifying acceptable sets of arguments from an argumentation framework, there is a lack of justification behind their acceptability based on implicit argument preferences. Preference-based argumentation frameworks allow one to determine what arguments are justified given a set of preferences. Our research considers the inverse of the standard reasoning problem, i.e., given an abstract argumentation framework and a set of justified arguments, we compute what the possible preferences over arguments are. Furthermore, there is a need to verify (i.e., assess) that the computed preferences would lead to the acceptable sets of arguments. This paper presents a novel approach and algorithm for exhaustively computing and enumerating all possible sets of preferences (restricted to three identified cases) for a conflict-free set of arguments in an abstract argumentation framework. We prove the soundness, completeness and termination of the algorithm. The research establishes that preferences are determined using an extension-based approach after the evaluation phase (acceptability of arguments) rather than stated beforehand. In this work, we focus on grounded, preferred and stable semantics. We show that the complexity of computing sets of preferences is exponential in the number of arguments, and thus describe an approximate approach and algorithm to compute the preferences. Furthermore, we present novel algorithms for verifying (i.e., assessing) the computed preferences. We provide details of the implementation of the algorithms (source code has been made available), the experiments performed to evaluate the algorithms, and an analysis of the results.
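The verification step described in this abstract can be illustrated with a minimal Python sketch. It is not the paper's algorithm: it assumes grounded semantics only, and it assumes one common way of applying preferences, namely dropping an attack when its target is strictly preferred to its attacker. The function names (`grounded_extension`, `apply_preferences`, `verify`) and the encoding of preferences as pairs are hypothetical choices made for illustration.

```python
from typing import FrozenSet, Set, Tuple

Attack = Tuple[str, str]  # (attacker, target)
Pref = Tuple[str, str]    # (x, y) means "x is strictly preferred to y" (assumed encoding)


def grounded_extension(args: Set[str], attacks: Set[Attack]) -> FrozenSet[str]:
    """Iterate the characteristic function from the empty set to its least fixed point."""
    extension: Set[str] = set()
    while True:
        # An argument is defended if every attacker is itself attacked
        # by some member of the current extension.
        defended = {
            a for a in args
            if all(
                any((d, attacker) in attacks for d in extension)
                for (attacker, target) in attacks
                if target == a
            )
        }
        if defended == extension:
            return frozenset(extension)
        extension = defended


def apply_preferences(attacks: Set[Attack], prefs: Set[Pref]) -> Set[Attack]:
    """Assumed reduction: discard an attack whose target is preferred to its attacker."""
    return {(a, b) for (a, b) in attacks if (b, a) not in prefs}


def verify(args: Set[str], attacks: Set[Attack], prefs: Set[Pref],
           expected: Set[str]) -> bool:
    """Check that the given preferences yield the expected grounded extension."""
    return grounded_extension(args, apply_preferences(attacks, prefs)) == frozenset(expected)


if __name__ == "__main__":
    arguments = {"a", "b"}
    mutual_attack = {("a", "b"), ("b", "a")}
    # Without preferences neither argument is accepted; preferring a over b accepts a.
    print(verify(arguments, mutual_attack, set(), set()))
    print(verify(arguments, mutual_attack, {("a", "b")}, {"a"}))
```

In the usage at the bottom, two mutually attacking arguments have an empty grounded extension until a preference breaks the symmetry, which is the kind of relationship between preferences and accepted arguments that the inverse problem explores.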

Preference Elicitation in Assumption-Based Argumentation

May 12, 2020

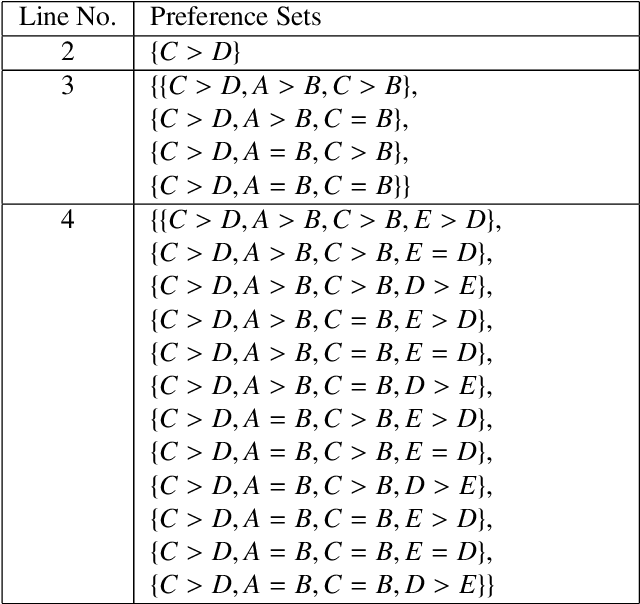

Abstract: Various structured argumentation frameworks utilize preferences as part of their standard inference procedure to enable reasoning with preferences. In this paper, we consider an inverse of the standard reasoning problem, seeking to identify what preferences over assumptions could lead to a given set of conclusions being drawn. We ground our work in the Assumption-Based Argumentation (ABA) framework, and present an algorithm which computes and enumerates all possible sets of preferences over the assumptions in the system from which a desired conflict-free set of conclusions can be obtained under a given semantics. After describing our algorithm, we establish its soundness, completeness and complexity.

Practical Reasoning with Norms for Autonomous Software Agents (Full Edition)

Jan 28, 2017

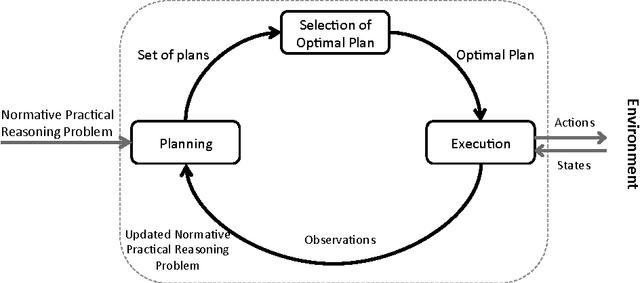

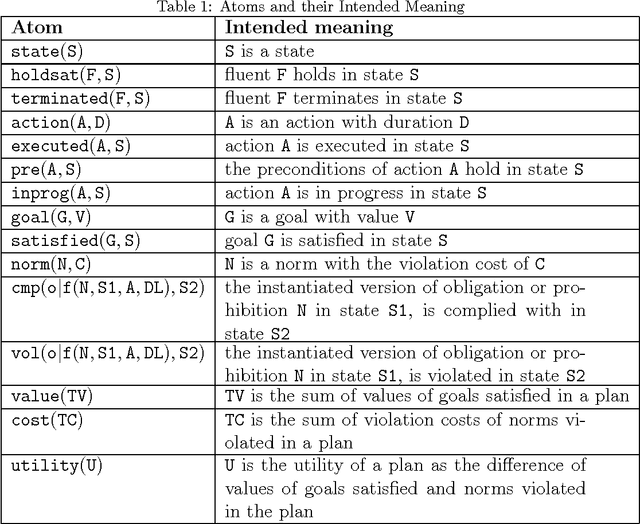

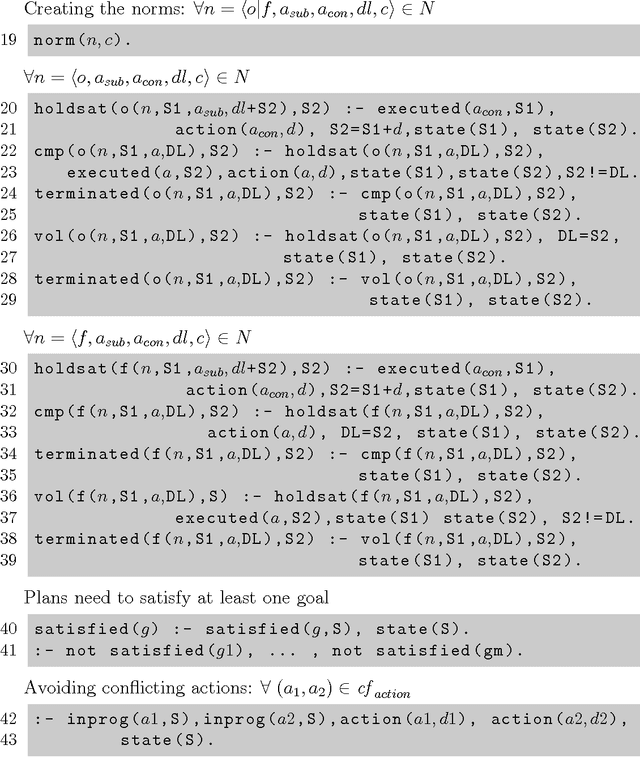

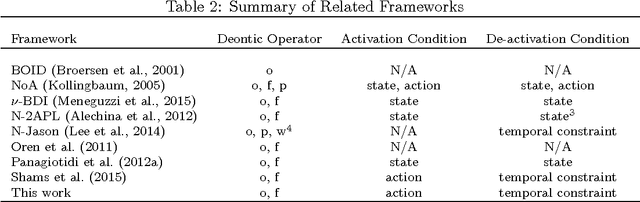

Abstract: Autonomous software agents operating in dynamic environments need to constantly reason about actions in pursuit of their goals, while taking into consideration norms which might be imposed on those actions. Normative practical reasoning supports agents making decisions about what is best for them to (not) do in a given situation. What makes practical reasoning challenging is the interplay between goals that agents are pursuing and the norms that the agents are trying to uphold. We offer a formalisation to allow agents to plan for multiple goals and norms in the presence of durative actions that can be executed concurrently. We compare plans based on decision-theoretic notions (i.e., utility) such that the utility gain of goals and utility loss of norm violations are the basis for this comparison. The set of optimal plans consists of plans that maximise the overall utility, each of which can be chosen by the agent to execute. We provide an implementation of our proposal in Answer Set Programming, thus allowing us to state the original problem in terms of a logic program that can be queried for solutions with specific properties. The implementation is proven to be sound and complete.
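The plan comparison described in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the paper's implementation is in Answer Set Programming, whereas the sketch below assumes that a plan's utility is the sum of the values of the goals it satisfies minus the sum of the costs of the norms it violates. The `Plan` class, the `utility` and `optimal_plans` functions, and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Plan:
    name: str
    goals_satisfied: FrozenSet[str]
    norms_violated: FrozenSet[str]


def utility(plan: Plan, goal_value: Dict[str, int],
            violation_cost: Dict[str, int]) -> int:
    """Assumed utility: gain from satisfied goals minus loss from violated norms."""
    gain = sum(goal_value[g] for g in plan.goals_satisfied)
    loss = sum(violation_cost[n] for n in plan.norms_violated)
    return gain - loss


def optimal_plans(plans: List[Plan], goal_value: Dict[str, int],
                  violation_cost: Dict[str, int]) -> List[Plan]:
    """Return every plan whose utility equals the maximum over all plans."""
    if not plans:
        return []
    best = max(utility(p, goal_value, violation_cost) for p in plans)
    return [p for p in plans if utility(p, goal_value, violation_cost) == best]


if __name__ == "__main__":
    goal_value = {"g1": 5, "g2": 4}   # hypothetical goal utilities
    violation_cost = {"n1": 4}        # hypothetical norm-violation penalty
    plans = [
        Plan("p1", frozenset({"g1"}), frozenset()),
        Plan("p2", frozenset({"g1", "g2"}), frozenset({"n1"})),
        Plan("p3", frozenset({"g2"}), frozenset({"n1"})),
    ]
    for plan in optimal_plans(plans, goal_value, violation_cost):
        print(plan.name, utility(plan, goal_value, violation_cost))
```

Because several plans can share the maximum utility, `optimal_plans` returns a list rather than a single plan, matching the abstract's statement that any optimal plan can be chosen for execution.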
