Gaojie Cui

RATSF: Empowering Customer Service Volume Management through Retrieval-Augmented Time-Series Forecasting

Mar 07, 2024

Abstract: An efficient customer service management system hinges on precise forecasting of service volume. In this scenario, where data non-stationarity is pronounced, successful forecasting relies heavily on identifying and leveraging similar historical data rather than merely summarizing periodic patterns. Existing models based on RNN or Transformer architectures often struggle to utilize such data flexibly and effectively. To address this challenge, we propose an efficient and adaptable cross-attention module termed RACA, which effectively leverages historical segments in the forecasting task, and we devise a precise representation scheme for querying historical sequences, coupled with the design of a knowledge repository. These critical components collectively form our Retrieval-Augmented Temporal Sequence Forecasting framework (RATSF). RATSF not only significantly enhances performance in Fliggy hotel service volume forecasting but, more crucially, can be seamlessly integrated into other Transformer-based time-series forecasting models across various application scenarios. Extensive experiments have validated the effectiveness and generalizability of this system design across multiple diverse contexts.

A Concept and Argumentation based Interpretable Model in High Risk Domains

Aug 17, 2022

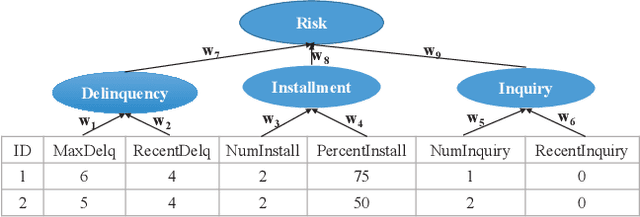

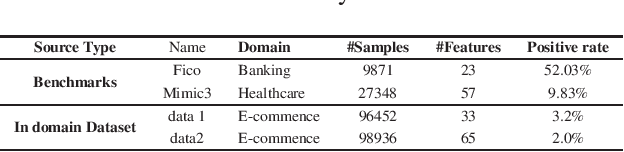

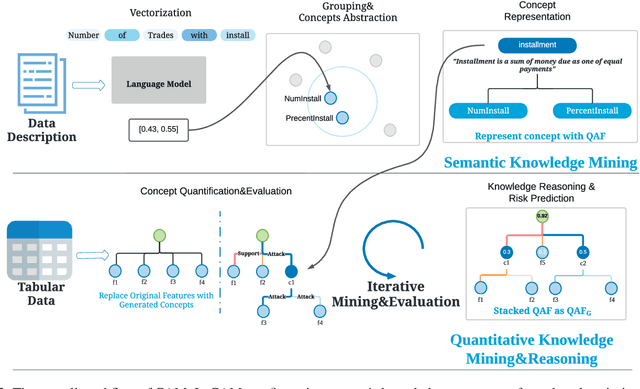

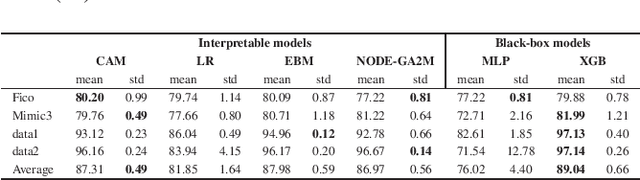

Abstract: Interpretability has become an essential topic for artificial intelligence in high-risk domains such as healthcare, banking, and security. For commonly used tabular data, traditional methods train end-to-end machine learning models on numerical and categorical data only, and do not leverage human-understandable knowledge such as data descriptions. Yet mining human-level knowledge from tabular data and using it for prediction remains a challenge. Therefore, we propose a concept and argumentation based model (CAM) that includes the following two components: a novel concept mining method to obtain human-understandable concepts and their relations from both descriptions of features and the underlying data, and a quantitative argumentation-based method for knowledge representation and reasoning. As a result, CAM provides decisions that are based on human-level knowledge, and the reasoning process is intrinsically interpretable. Finally, to visualize the proposed interpretable model, we provide a dialogical explanation that contains the dominant reasoning path within CAM. Experimental results on both an open-source benchmark dataset and a real-world business dataset show that (1) CAM is transparent and interpretable, and the knowledge inside CAM is coherent with human understanding; (2) our interpretable approach achieves competitive results compared with other state-of-the-art models.
