Hiroyuki Kido

Inference of Abstraction for Grounded Predicate Logic

Feb 19, 2025

Abstract: An important open question in AI is what simple and natural principle enables a machine to reason logically for meaningful abstraction with grounded symbols. This paper explores a conceptually new approach to combining probabilistic reasoning and predicative symbolic reasoning over data. We return to the era of reasoning with a full joint distribution, before the advent of Bayesian networks. We then argue that a full joint distribution over models, of exponential size in propositional logic and of infinite size in predicate logic, should be derived simply from a full joint distribution over data of linear size. We show that this process is enough not only to generalise the logical consequence relation of predicate logic but also to provide a new perspective from which to rethink well-known limitations such as the undecidability of predicate logic, the symbol grounding problem and the principle of explosion. The reproducibility of this theoretical work is fully demonstrated by the included proofs.
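The size argument can be illustrated with a rough Python sketch. It assumes a propositional setting in which each datum is a dictionary of observed attributes. A hypothetical grounding function `interpret` decides whether an atom holds of a datum. A uniform distribution over the N data points is then pushed forward to a distribution over models in a single pass over the data, which is linear in N for a fixed set of atoms. Only models witnessed by some datum receive non-zero probability, however many of the 2^n possible models exist. The names and the uniform prior over data are assumptions made for illustration, not the paper's construction.

```python
from collections import Counter
from fractions import Fraction
from typing import Callable, Dict, Sequence, Tuple

Model = Tuple[bool, ...]  # truth values of the atoms, in a fixed order


def model_distribution(data: Sequence[dict], atoms: Sequence[str],
                       interpret: Callable[[dict, str], bool]
                       ) -> Dict[Model, Fraction]:
    """Push a uniform distribution over the data forward to models.

    `interpret(datum, atom)` is a hypothetical grounding function saying
    whether `atom` is true of `datum`. One pass over the data suffices,
    and only models witnessed by some datum appear in the result.
    """
    counts = Counter(tuple(interpret(d, a) for a in atoms) for d in data)
    return {model: Fraction(c, len(data)) for model, c in counts.items()}


data = [
    {"rain": True, "wet": True},
    {"rain": True, "wet": True},
    {"rain": False, "wet": True},
    {"rain": False, "wet": False},
]
dist = model_distribution(data, ["rain", "wet"], lambda d, a: d[a])
print(dist[(True, True)])     # 1/2
print((True, False) in dist)  # False: no datum witnesses this model
```

Exact fractions are used so that the probabilities can be checked by hand. Only three of the four possible models appear, because no datum has rain without wetness.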

Inference of Abstraction for a Unified Account of Reasoning and Learning

Feb 14, 2024

Abstract: Inspired by Bayesian approaches to brain function in neuroscience, we give a simple theory of probabilistic inference for a unified account of reasoning and learning. We simply model how data cause symbolic knowledge in terms of its satisfiability in formal logic. The underlying idea is that reasoning is a process of deriving symbolic knowledge from data via abstraction, i.e., selective ignorance. The theoretical correctness of the theory is discussed through proofs concerning the logical consequence relation, and its empirical correctness through experiments on the MNIST dataset.

Inference of Abstraction for a Unified Account of Symbolic Reasoning from Data

Feb 13, 2024

Abstract: Inspired by empirical work in neuroscience supporting Bayesian approaches to brain function, we give a unified probabilistic account of various types of symbolic reasoning from data. We characterise them in terms of formal logic using the classical consequence relation, an empirical consequence relation, maximal consistent sets, maximal possible sets and maximum likelihood estimation. The theory gives new insights into reasoning towards human-like machine intelligence.

A Simple Generative Model of Logical Reasoning and Statistical Learning

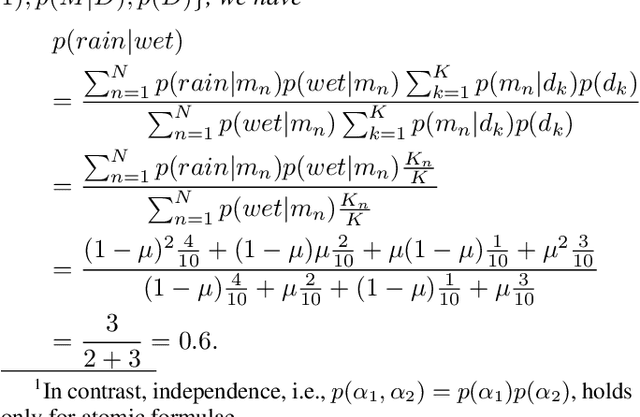

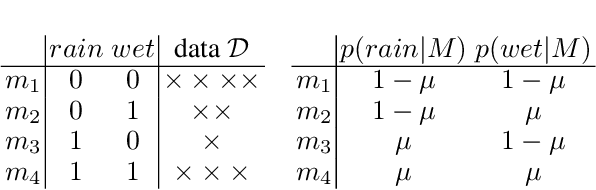

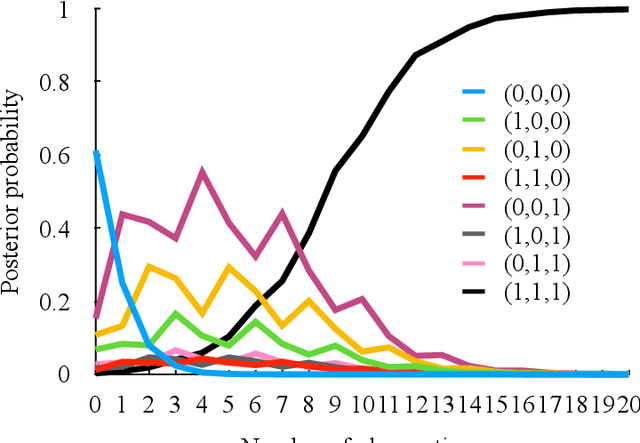

May 18, 2023

Abstract: Statistical learning and logical reasoning are two major fields of AI expected to be unified for human-like machine intelligence. Most existing work considers how to combine existing logical and statistical systems. However, there is so far no theory of inference explaining how basic approaches to statistical learning and logical reasoning stem from a common principle. Much empirical work in neuroscience suggests Bayesian (or probabilistic generative) approaches to brain function, including learning and reasoning. Inspired by this, we propose a simple Bayesian model of logical reasoning and statistical learning. The theory is statistically correct: it satisfies Kolmogorov's axioms, is consistent with both Fenstad's representation theorem and maximum likelihood estimation, and performs exact Bayesian inference with linear-time complexity. The theory is logically correct because it is a data-driven generalisation of uncertain reasoning from consistency, possibility, inconsistency and impossibility. The theory is correct in terms of machine learning because its solution to generation and prediction tasks on the MNIST dataset is not only empirically reasonable but also theoretically correct against the K-nearest-neighbour method. We simply model how data cause symbolic knowledge in terms of its satisfiability in formal logic. Symbolic reasoning emerges from following this causality forwards and backwards. The forward and backward processes correspond to an interpretation and an inverse interpretation in formal logic, respectively. The inverse interpretation differentiates our work from the mainstream approaches often referred to as inverse entailment, inverse deduction or inverse resolution. This perspective gives new insights into learning and reasoning towards human-like machine intelligence.
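The forward and backward processes can be sketched in a few lines of Python under stated assumptions. Each datum is identified with a propositional model, given as a dictionary of truth values. A formula is represented by its truth condition. The forward likelihood p(formula true | model) is taken to be `mu` when the model satisfies the formula and `1 - mu` otherwise. The parameter `mu` and both function names are hypothetical, and this is not the paper's implementation. The backward step applies Bayes' theorem over the data in time linear in the number of data points.

```python
from typing import Callable, Dict, List

Model = Dict[str, bool]            # a valuation of propositional atoms
Formula = Callable[[Model], bool]  # a formula, given by its truth condition


def interpretation(formula: Formula, model: Model, mu: float) -> float:
    """Forward step: p(formula is true | model), an assumed noisy truth.

    mu = 1.0 recovers classical (deterministic) truth in the model.
    """
    return mu if formula(model) else 1.0 - mu


def inverse_interpretation(data: List[Model], formula: Formula,
                           mu: float) -> List[float]:
    """Backward step: p(datum | formula is true) via Bayes' theorem.

    Every datum has the uniform prior 1/N, so one pass over the data suffices.
    """
    prior = 1.0 / len(data)
    joint = [interpretation(formula, d, mu) * prior for d in data]
    evidence = sum(joint)
    if evidence == 0.0:
        raise ValueError("the formula has zero probability under the data")
    return [j / evidence for j in joint]


data = [
    {"rain": True, "wet": True},
    {"rain": False, "wet": True},
    {"rain": False, "wet": False},
]
wet: Formula = lambda m: m["wet"]
print(inverse_interpretation(data, wet, mu=1.0))  # [0.5, 0.5, 0.0]
```

With `mu = 1.0` the posterior is spread uniformly over the data whose models make the formula true. With `mu < 1.0` the other data keep a small share of the probability.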

Generative Logic with Time: Beyond Logical Consistency and Statistical Possibility

Jan 20, 2023

Abstract: This paper gives a theory of inference for reasoning logically about symbolic knowledge, fully from data and over time. We propose a temporal probabilistic model that generates symbolic knowledge from data. The statistical correctness of the model is justified in terms of consistency with Kolmogorov's axioms, Fenstad's theorems and maximum likelihood estimation. The logical correctness of the model is justified in terms of logical consequence relations on propositional logic and its extension. We show that the theory is applicable to localisation problems.

Towards Unifying Perceptual Reasoning and Logical Reasoning

Jun 27, 2022

Abstract: An increasing number of scientific experiments support the view of perception as Bayesian inference, which is rooted in Helmholtz's view of perception as unconscious inference. Recent studies of logic present a view of logical reasoning as Bayesian inference. In this paper, we give a simple probabilistic model that is applicable to both perceptual reasoning and logical reasoning. We show that the model unifies two essential processes common to perceptual and logical systems. The first is the process by which perceptual and logical knowledge is derived from other knowledge. The second is the process by which such knowledge is derived from data. We fully characterise the model in terms of logical consequence relations.

Towards Unifying Logical Entailment and Statistical Estimation

Feb 27, 2022

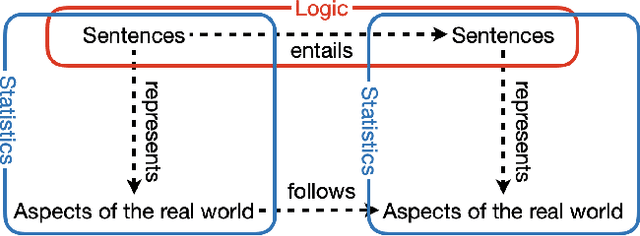

Abstract: This paper gives a generative model of the interpretation of formal logic for data-driven logical reasoning. The key idea is to represent the interpretation as the likelihood of a formula being true given a model of formal logic. Using the likelihood, Bayes' theorem gives the posterior of the model being the case given the formula. The posterior represents an inverse interpretation of formal logic that seeks models making the formula true. Together, the likelihood and posterior give rise to Bayesian learning. This learning yields the probability of the conclusion being true in the models where all the premises are true. This paper examines the statistical and logical properties of this Bayesian learning. It shows that the generative model is a unified theory of several different types of reasoning in logic and statistics.
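The last step of this chain computes the probability that a conclusion is true in the models where all the premises are true. It can be sketched in Python under the following assumptions, none of which are the paper's implementation:

- Models come from a list of observed data.
- A formula is true with likelihood `mu` in a model that satisfies it and `1 - mu` otherwise.
- The premises are conditionally independent given the model.

The names `mu` and `entailment_probability` are hypothetical. With `mu = 1.0`, the result is exactly the fraction of premise-satisfying data in which the conclusion holds.

```python
from typing import Callable, Dict, List, Sequence

Model = Dict[str, bool]            # a valuation of propositional atoms
Formula = Callable[[Model], bool]  # a formula, given by its truth condition


def entailment_probability(data: Sequence[Model], premises: List[Formula],
                           conclusion: Formula, mu: float) -> float:
    """p(conclusion true | all premises true), averaging over the data.

    Assumed likelihood: p(f true | m) = mu if m satisfies f, else 1 - mu.
    Weighting each datum by the premises' likelihood yields the posterior
    (inverse interpretation); the conclusion's likelihood is averaged under
    it. The uniform prior 1/N cancels in the ratio.
    """
    def likelihood(f: Formula, m: Model) -> float:
        return mu if f(m) else 1.0 - mu

    numerator = denominator = 0.0
    for m in data:
        weight = 1.0
        for p in premises:
            weight *= likelihood(p, m)
        denominator += weight
        numerator += weight * likelihood(conclusion, m)
    if denominator == 0.0:
        raise ValueError("the premises have zero probability under the data")
    return numerator / denominator


data = [
    {"rain": True, "wet": True},
    {"rain": True, "wet": True},
    {"rain": False, "wet": True},
    {"rain": False, "wet": False},
]
rain: Formula = lambda m: m["rain"]
wet: Formula = lambda m: m["wet"]
print(entailment_probability(data, [rain], wet, mu=1.0))  # 1.0
print(entailment_probability(data, [wet], rain, mu=1.0))  # 0.6666666666666666
```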

Bayes Meets Entailment and Prediction: Commonsense Reasoning with Non-monotonicity, Paraconsistency and Predictive Accuracy

Jan 27, 2021

Abstract: The recent success of Bayesian methods in neuroscience and artificial intelligence gives rise to the hypothesis that the brain is a Bayesian machine. Since logic and learning are both practices of the human brain, this leads to a further hypothesis: that a Bayesian interpretation underlies both logical reasoning and machine learning. In this paper, we introduce a generative model of logical consequence relations. It formalises how the truth value of a sentence is probabilistically generated from the probability distribution over states of the world. We show that the generative model characterises a classical consequence relation, a paraconsistent consequence relation and a nonmonotonic consequence relation. In particular, the generative model gives a new consequence relation that outperforms them in reasoning with inconsistent knowledge. We also show that the generative model gives a new classification algorithm that outperforms several representative algorithms in predictive accuracy and complexity on the Kaggle Titanic dataset.

Bayesian Entailment Hypothesis: How Brains Implement Monotonic and Non-monotonic Reasoning

May 06, 2020

Abstract: The recent success of Bayesian methods in neuroscience and artificial intelligence gives rise to the hypothesis that the brain is a Bayesian machine. Logic, as the laws of thought, is a product and practice of the human brain. This leads to another hypothesis: that there is a Bayesian algorithm and data structure for logical reasoning. In this paper, we give a Bayesian account of entailment and characterize its abstract inferential properties. The Bayesian entailment is shown to be a monotonic consequence relation in an extreme case. In general, it is a kind of non-monotonic consequence relation that satisfies neither Cautious monotony nor Cut. The preferential entailment, a representative non-monotonic consequence relation, is shown to be maximum a posteriori entailment, which approximates the Bayesian entailment. We finally discuss the merits of our proposals in terms of encoding preferences on defaults, handling change and contradiction, and modeling human entailment.
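A toy distribution over models shows how Bayesian entailment and its maximum a posteriori approximation can disagree. This Python sketch is an assumption-laden illustration rather than the paper's definitions. A model is the set of atoms it makes true. Bayesian entailment is read as the posterior probability of the conclusion given the premise. MAP entailment checks the conclusion only in the most probable models of the premise. The function names and the probabilities are hypothetical.

```python
from typing import Callable, Dict, FrozenSet

Model = FrozenSet[str]             # a model: the set of atoms it makes true
Formula = Callable[[Model], bool]  # a formula, given by its truth condition


def bayesian_entailment(dist: Dict[Model, float], premise: Formula,
                        conclusion: Formula) -> float:
    """Posterior probability of the conclusion given the premise.

    Assumes the premise has non-zero probability under `dist`.
    """
    evidence = sum(pr for m, pr in dist.items() if premise(m))
    joint = sum(pr for m, pr in dist.items() if premise(m) and conclusion(m))
    return joint / evidence


def map_entailment(dist: Dict[Model, float], premise: Formula,
                   conclusion: Formula) -> bool:
    """True if the conclusion holds in every most probable model of the premise."""
    candidates = {m: pr for m, pr in dist.items() if premise(m)}
    best = max(candidates.values())
    return all(conclusion(m) for m, pr in candidates.items() if pr == best)


dist = {
    frozenset({"p", "q"}): 0.4,
    frozenset({"p"}): 0.3,
    frozenset({"p", "r"}): 0.2,
    frozenset(): 0.1,
}
has_p: Formula = lambda m: "p" in m
has_q: Formula = lambda m: "q" in m
print(map_entailment(dist, has_p, has_q))                 # True
print(round(bayesian_entailment(dist, has_p, has_q), 3))  # 0.444
```

The single most probable model of `p` satisfies `q`, so MAP entailment accepts `q`. The posterior probability of `q` given `p`, however, is only about 0.444.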

A Bayesian Approach to Direct and Inverse Abstract Argumentation Problems

Sep 10, 2019

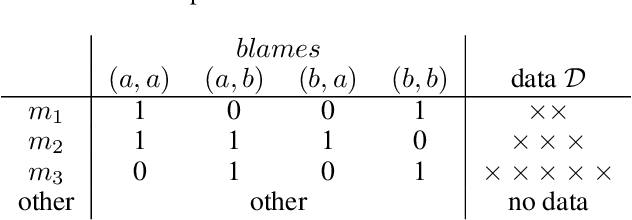

Abstract: This paper studies a fundamental mechanism for detecting a conflict between arguments, given sentiments regarding the acceptability of the arguments. To tackle the problem, we introduce the concept of the inverse problem of abstract argumentation. Given noisy sets of acceptable arguments, it aims to find attack relations that explain the sets well in terms of acceptability semantics. It is the inverse of the direct problem, which corresponds to the traditional problem of abstract argumentation: finding sets of acceptable arguments in terms of the semantics, given an attack relation between the arguments. We give a probabilistic model that handles both problems in a way that is faithful to the acceptability semantics. From a theoretical point of view, we show that a solution to both the direct and inverse problems is a special case of probabilistic inference on the model. We argue that the model provides a natural extension of the semantics to cope with uncertain attack relations distributed probabilistically. From an empirical point of view, we argue that it reasonably predicts individuals' sentiments regarding the acceptability of arguments. This paper contributes to laying the foundation for making acceptability semantics data-driven and to providing a way to tackle the knowledge acquisition bottleneck.
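The direct and inverse problems can be made concrete with a deliberately simple Python sketch. The abstract does not fix a semantics, so for the direct problem the sketch assumes Dung's grounded semantics. For the inverse problem it does not use the paper's probabilistic model. Instead, it substitutes a brute-force search for the attack relations whose grounded extension agrees with the largest number of observed sets. That search is exponential in the number of argument pairs and suits only toy examples. All function names are hypothetical.

```python
from itertools import product
from typing import AbstractSet, FrozenSet, List, Tuple

Attack = Tuple[str, str]  # (attacker, attacked)


def grounded_extension(args: AbstractSet[str],
                       attacks: AbstractSet[Attack]) -> FrozenSet[str]:
    """Direct problem: the least fixed point of the characteristic function.

    Starting from the empty set, repeatedly collect every argument whose
    attackers are all attacked by the current set, until nothing changes.
    """
    ext: FrozenSet[str] = frozenset()
    while True:
        defended = frozenset(
            a for a in args
            if all(any((s, b) in attacks for s in ext)
                   for b in args if (b, a) in attacks)
        )
        if defended == ext:
            return ext
        ext = defended


def inverse_problem(args: AbstractSet[str],
                    observed: List[AbstractSet[str]]) -> List[FrozenSet[Attack]]:
    """Inverse problem (brute force): attack relations whose grounded
    extension matches the largest number of observed acceptable sets."""
    pairs = [(x, y) for x in sorted(args) for y in sorted(args)]
    best: List[FrozenSet[Attack]] = []
    best_score = -1
    for keep in product((False, True), repeat=len(pairs)):
        relation = frozenset(pr for pr, k in zip(pairs, keep) if k)
        ext = grounded_extension(args, relation)
        score = sum(ext == frozenset(o) for o in observed)
        if score > best_score:
            best, best_score = [relation], score
        elif score == best_score:
            best.append(relation)
    return best


args = {"a", "b", "c"}
print(sorted(grounded_extension(args, {("a", "b"), ("b", "c")})))  # ['a', 'c']

observed = [{"a", "c"}, {"a", "c"}, {"a"}]  # noisy sets of acceptable arguments
candidates = inverse_problem(args, observed)
```

Several attack relations tie on these observations, so `candidates` holds more than one relation. For example, both "a attacks b, b attacks c" and "a attacks b" alone yield the grounded extension {a, c}.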