Jieting Luo

Providing personalized Explanations: a Conversational Approach

Jul 21, 2023

Abstract: The increasing applications of AI systems require personalized explanations for their behaviors to various stakeholders since the stakeholders may have various knowledge and backgrounds. In general, a conversation between explainers and explainees not only allows explainers to obtain the explainees' background, but also allows explainees to better understand the explanations. In this paper, we propose an approach for an explainer to communicate personalized explanations to an explainee through having consecutive conversations with the explainee. We prove that the conversation terminates due to the explainee's justification of the initial claim as long as there exists an explanation for the initial claim that the explainee understands and the explainer is aware of.

Value-based Practical Reasoning: Modal Logic + Argumentation

Apr 11, 2022

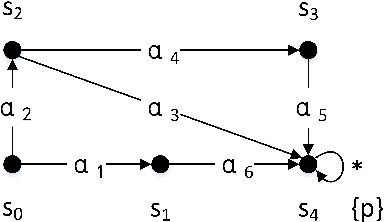

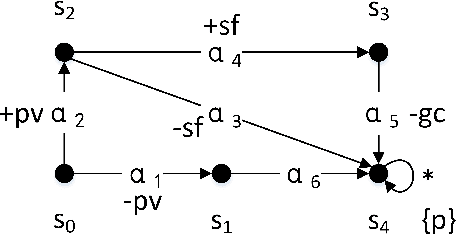

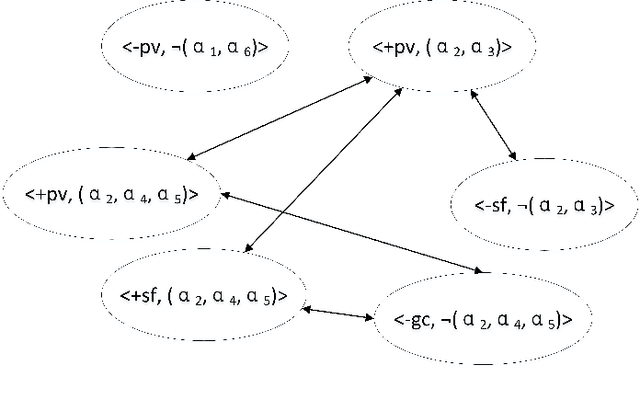

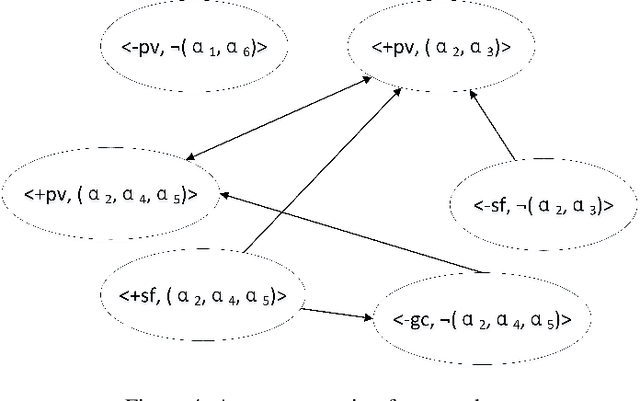

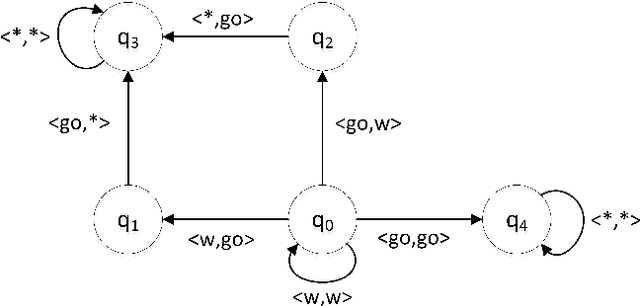

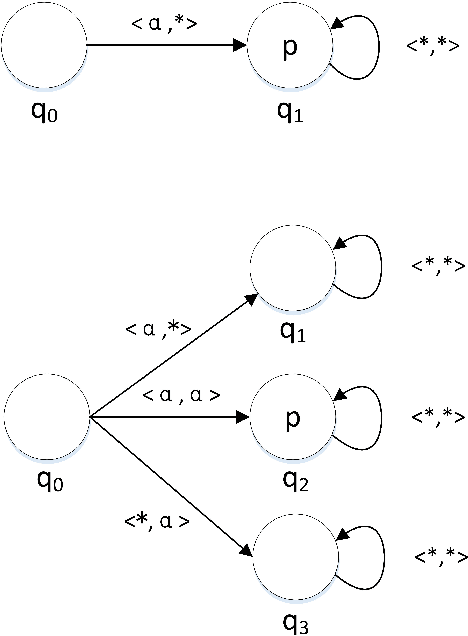

Abstract: Autonomous agents are supposed to be able to finish tasks or achieve goals that are assigned by their users through performing a sequence of actions. Since there might exist multiple plans that an agent can follow and each plan might promote or demote different values along each action, the agent should be able to resolve the conflicts between them and evaluate which plan he should follow. In this paper, we develop a logic-based framework that combines modal logic and argumentation for value-based practical reasoning with plans. Modal logic is used as a technique to represent and verify whether a plan with its local properties of value promotion or demotion can be followed to achieve an agent's goal. We then propose an argumentation-based approach that allows an agent to reason about his plans in the form of supporting or objecting to a plan using the verification results.

A Formal Framework for Reasoning about Agents' Independence in Self-organizing Multi-agent Systems

May 26, 2021

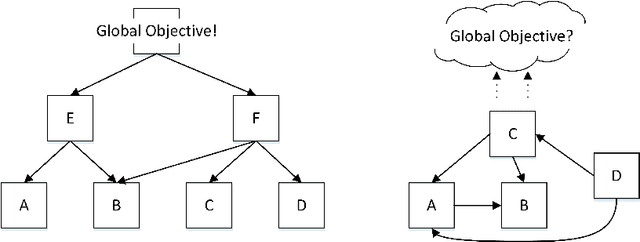

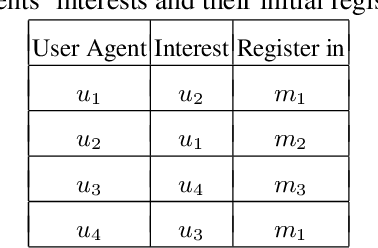

Abstract: Self-organization is a process where a stable pattern is formed by the cooperative behavior between parts of an initially disordered system without external control or influence. It has been introduced to multi-agent systems as an internal control process or mechanism to solve difficult problems spontaneously. However, because a self-organizing multi-agent system has autonomous agents and local interactions between them, it is difficult to predict the behavior of the system from the behavior of the local agents we design. This paper proposes a logic-based framework of self-organizing multi-agent systems, where agents interact with each other by following their prescribed local rules. The dependence relation between coalitions of agents regarding their contributions to the global behavior of the system is reasoned about from the structural and semantic perspectives. We show that the computational complexity of verifying such a self-organizing multi-agent system is in exponential time. We then combine our framework with graph theory to decompose a system into different coalitions located in different layers, which allows us to verify agents' full contributions more efficiently. The resulting information about agents' full contributions allows us to understand the complex link between local agent behavior and system level behavior in a self-organizing multi-agent system. Finally, we show how we can use our framework to model a constraint satisfaction problem.
