Leendert van der Torre

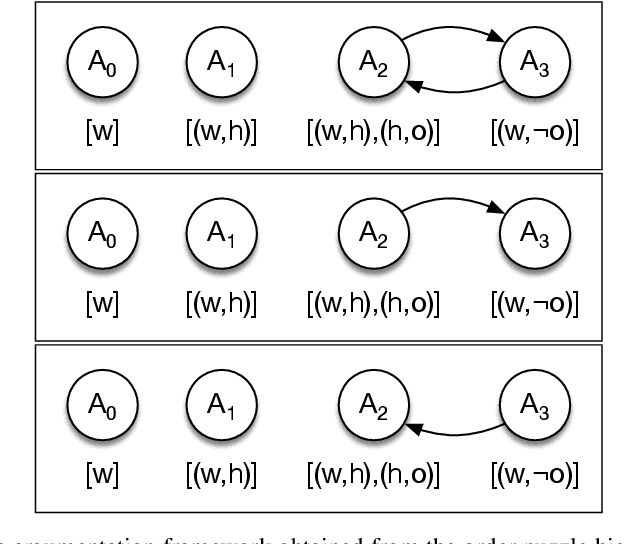

Defense semantics of argumentation: revisit

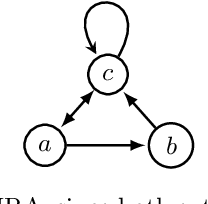

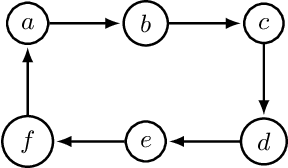

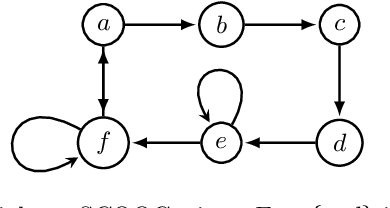

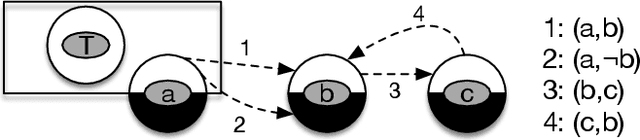

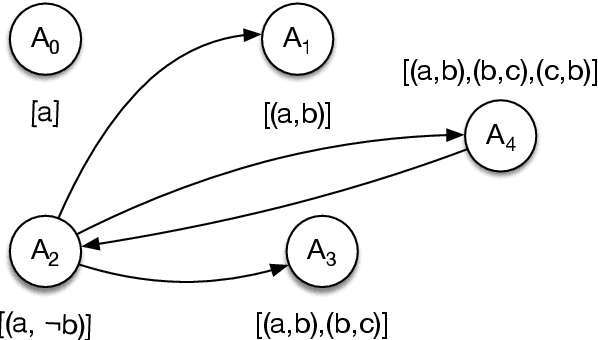

Nov 22, 2023

Abstract: In this paper we introduce a novel semantics, called defense semantics, for Dung's abstract argumentation frameworks (AFs) in terms of a notion of (partial) defense, which is a triple encoding that one argument is (partially) defended by another argument via attacking the attacker of the first argument. In terms of defense semantics, we show that defenses related to self-attacked arguments and arguments in 3-cycles are unsatisfiable in every situation and can therefore be removed without affecting the defense semantics of an AF. Then, we introduce a new notion of defense equivalence of AFs, and compare defense equivalence with standard equivalence and strong equivalence, respectively. Finally, by exploiting defense semantics, we define two kinds of reasons for accepting arguments, i.e., direct reasons and root reasons, and a notion of root equivalence of AFs that can be used in argumentation summarization.
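The triple reading of a defense described in this abstract can be illustrated with a minimal Python sketch. It is an assumption-laden illustration, not the paper's formal definition: the function name `defense_triples` and the tuple order `(defender, attacker, defended)` are hypothetical, and the sketch only enumerates candidate triples without evaluating which of them are satisfiable.

```python
def defense_triples(arguments, attacks):
    """Enumerate (defender, attacker, defended) triples of an AF.

    `arguments` is a set of argument names and `attacks` a set of
    (source, target) pairs. A triple (z, y, x) is listed when z attacks y
    and y attacks x. The name and tuple order are illustrative assumptions.
    """
    # Map every argument to the set of arguments attacking it.
    attackers_of = {a: set() for a in arguments}
    for source, target in attacks:
        attackers_of[target].add(source)

    triples = set()
    for defended in arguments:
        for attacker in attackers_of[defended]:
            for defender in attackers_of[attacker]:
                triples.add((defender, attacker, defended))
    return triples


# Example: a attacks b, and b attacks c, so a defends c against b.
example = defense_triples({"a", "b", "c"}, {("a", "b"), ("b", "c")})
print(example)
```

In the chain example, the only triple is the one in which `a` defends `c` by attacking `b`; `b` has no defender because nothing attacks `a`.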

Towards AI Logic for Social Reasoning

Oct 09, 2021

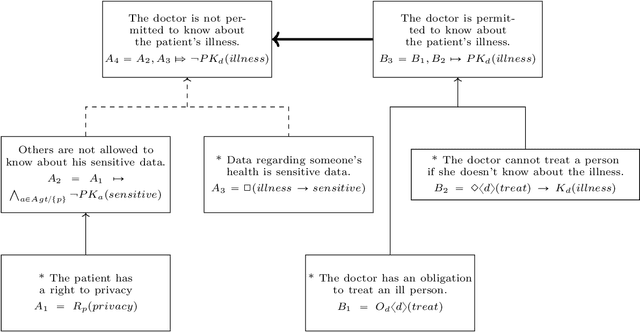

Abstract: Artificial Intelligence (AI) logic formalizes the reasoning of intelligent agents. In this paper, we discuss how an argumentation-based AI logic could also be used to formalize important aspects of social reasoning. Besides reasoning about the knowledge and actions of individual agents, social AI logic can also reason about social dependencies among agents using the rights, obligations and permissions of the agents. We discuss four aspects of social AI logic. First, we discuss how rights represent relations between the obligations and permissions of intelligent agents. Second, we discuss how to argue about the right-to-know, a central issue in the recent discussion of privacy and ethics. Third, we discuss how a wide variety of conflicts among intelligent agents can be identified and (sometimes) resolved by comparing formal arguments. Importantly, to cover the wide range of arguments occurring in daily life, fallacious arguments can also be represented and reasoned about. Fourth, we discuss how to argue about the freedom to act for intelligent agents. Examples from social, legal and ethical reasoning highlight the challenges in developing social AI logic. The discussion of the four challenges leads to a research program for argumentation-based social AI logic, contributing towards the future development of AI logic.

Artificial intelligence in space

Jun 22, 2020

Abstract: In the coming years, space activities are expected to undergo a radical transformation with the emergence of new satellite systems and new services that incorporate artificial intelligence and machine learning, understood as covering a wide range of innovations, from autonomous objects with their own decision-making power to increasingly sophisticated services exploiting very large volumes of information from space. This chapter identifies some of the legal and ethical challenges linked to the use of AI in space. These challenges call for solutions that the international treaties in force are not sufficient to determine and implement. For this reason, a legal methodology must be developed that makes it possible to link intelligent systems and services to a system of rules applicable to them. The chapter also discusses existing legal AI-based tools suited to making space law actionable, interoperable and machine-readable for future compliance tools.

Intention as Commitment toward Time

Apr 17, 2020

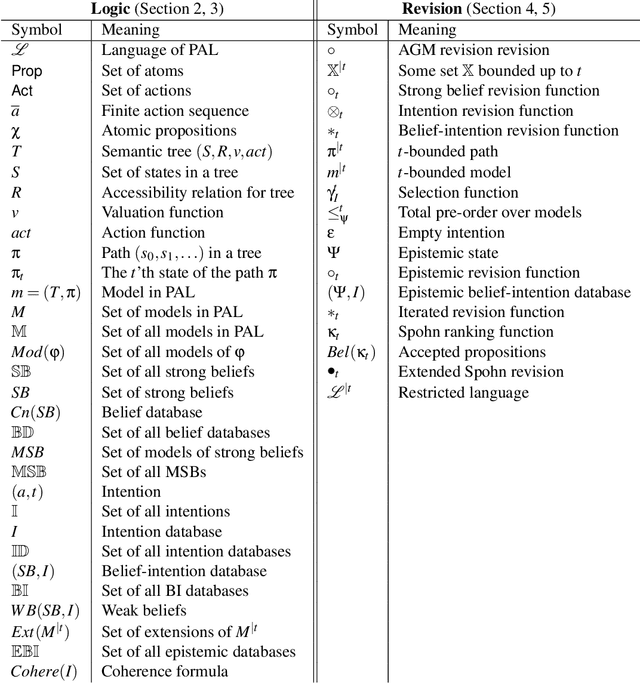

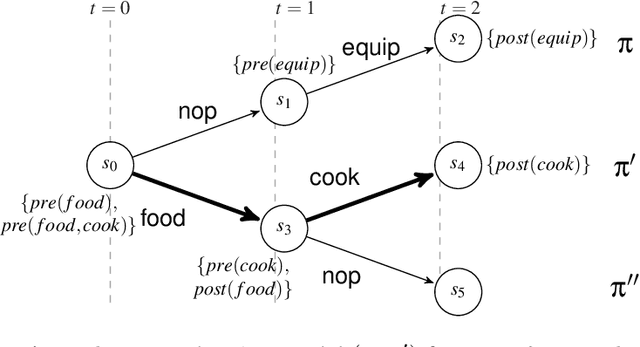

Abstract: In this paper we address the interplay among intention, time, and belief in dynamic environments. The first contribution is a logic for reasoning about intention, time and belief, in which assumptions of intentions are represented by preconditions of intended actions. Intentions and beliefs are coherent as long as these assumptions are not violated, i.e. as long as intended actions can be performed such that their preconditions hold as well. The second contribution is the formalization of what-if scenarios: what happens to intentions and beliefs if a new (possibly conflicting) intention is adopted, or a new fact is learned? An agent is committed to its intended actions as long as its belief-intention database is coherent. We conceptualize intention as commitment toward time, develop AGM-based postulates for the iterated revision of belief-intention databases, and prove a Katsuno-Mendelzon-style representation theorem.

* 83 pages, 4 figures, Artificial Intelligence journal pre-print

SCF2 -- an Argumentation Semantics for Rational Human Judgments on Argument Acceptability: Technical Report

Aug 22, 2019

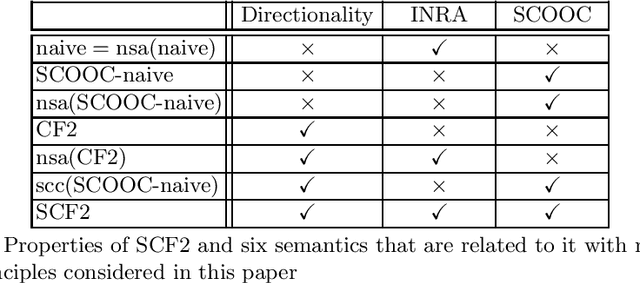

Abstract: In abstract argumentation theory, many argumentation semantics have been proposed for evaluating argumentation frameworks. This paper is based on the following research question: Which semantics corresponds well to what humans consider a rational judgment on the acceptability of arguments? There are two systematic ways to approach this research question: A normative perspective is provided by the principle-based approach, in which semantics are evaluated based on their satisfaction of various normatively desirable principles. A descriptive perspective is provided by the empirical approach, in which cognitive studies are conducted to determine which semantics best predicts human judgments about arguments. In this paper, we combine both approaches to motivate a new argumentation semantics called SCF2. For this purpose, we introduce and motivate two new principles and show that no semantics from the literature satisfies both of them. We define SCF2 and prove that it satisfies both new principles. Furthermore, we discuss findings of a recent empirical cognitive study that provide additional support to SCF2.
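To make concrete what it means for a semantics to evaluate an argumentation framework, the following Python sketch computes Dung's grounded extension, a standard semantics from the literature. It is not SCF2, whose definition the abstract does not give; the function name `grounded_extension` and the input encoding are illustrative assumptions.

```python
def grounded_extension(arguments, attacks):
    """Compute the grounded extension of a finite argumentation framework.

    `arguments` is a set of argument names and `attacks` a set of
    (source, target) pairs. The result is the least fixed point of the
    characteristic function, reached by iterating from the empty set.
    """
    # Map every argument to the set of arguments attacking it.
    attackers_of = {a: set() for a in arguments}
    for source, target in attacks:
        attackers_of[target].add(source)

    accepted = set()
    while True:
        # An argument is defended by `accepted` when each of its attackers
        # is itself attacked by at least one accepted argument.
        defended = {
            x for x in arguments
            if all(attackers_of[y] & accepted for y in attackers_of[x])
        }
        if defended == accepted:
            return accepted
        accepted = defended


# Example: a attacks b, and b attacks c.
print(grounded_extension({"a", "b", "c"}, {("a", "b"), ("b", "c")}))
```

In the chain example the unattacked argument `a` is accepted first, and `c` is accepted in the next round because its only attacker `b` is attacked by `a`. For an odd attack cycle the loop stops immediately with the empty set.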

Designing Normative Theories of Ethical Reasoning: Formal Framework, Methodology, and Tool Support

Mar 25, 2019

Abstract: The area of formal ethics is experiencing a shift from a unique or standard approach to normative reasoning, as exemplified by so-called standard deontic logic, to a variety of application-specific theories. However, the adequate handling of normative concepts such as obligation, permission, prohibition, and moral commitment is challenging, as illustrated by the notorious paradoxes of deontic logic. In this article we introduce an approach to design and evaluate theories of normative reasoning. In particular, we present a formal framework based on higher-order logic and a design methodology, and we discuss tool support. Moreover, we illustrate the approach using an example of an implementation, we demonstrate different ways of using it, and we discuss how the design of normative theories is now made accessible to non-specialist users and developers.

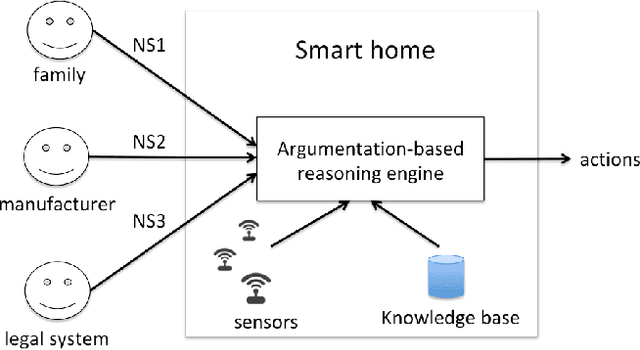

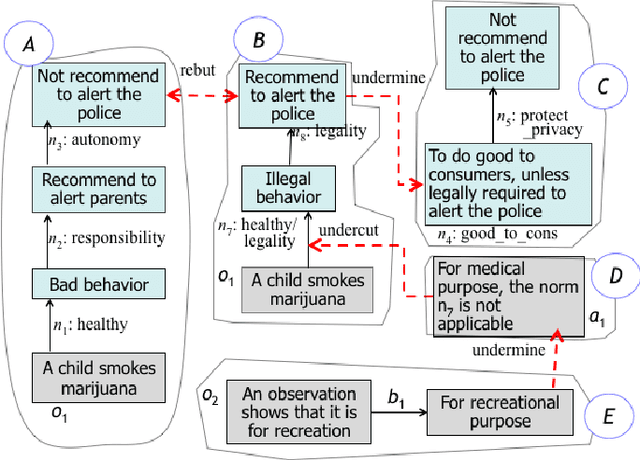

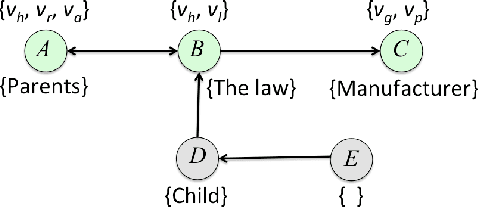

Building Jiminy Cricket: An Architecture for Moral Agreements Among Stakeholders

Dec 11, 2018

Abstract: An autonomous system is constructed by a manufacturer, operates in a society subject to norms and laws, and interacts with end-users. We address the challenge of how the moral values and views of all stakeholders can be integrated and reflected in the moral behavior of the autonomous system. We propose an artificial moral agent architecture that uses techniques from normative systems and formal argumentation to reach moral agreements among stakeholders. We show how our architecture can be used not only for ethical practical reasoning and collaborative decision-making, but also for the explanation of such moral behavior.

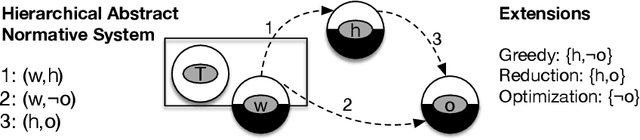

Prioritized Norms in Formal Argumentation

Feb 28, 2018

Abstract: To resolve conflicts among norms, various nonmonotonic formalisms can be used to perform prioritized normative reasoning. Meanwhile, formal argumentation provides a way to represent nonmonotonic logics. In this paper, we propose a representation of prioritized normative reasoning by argumentation. Using hierarchical abstract normative systems, we define three kinds of prioritized normative reasoning approaches, called Greedy, Reduction, and Optimization. Then, after formulating an argumentation theory for a hierarchical abstract normative system, we show that for a totally ordered hierarchical abstract normative system, Greedy and Reduction can be represented in argumentation by applying the weakest link and the last link principles respectively, and Optimization can be represented by introducing additional defeats capturing the idea that every argument containing a norm that does not belong to the maximal obeyable set should be rejected.

Defense semantics of argumentation: encoding reasons for accepting arguments

Aug 02, 2017

Abstract: In this paper we show how the defense relation among abstract arguments can be used to encode the reasons for accepting arguments. After introducing a novel notion of defenses and defense graphs, we propose a defense semantics together with a new notion of defense equivalence of argument graphs, and compare defense equivalence with standard equivalence and strong equivalence, respectively. Then, based on defense semantics, we define two kinds of reasons for accepting arguments, i.e., direct reasons and root reasons, and a notion of root equivalence of argument graphs. Finally, we show how the notion of root equivalence can be used in argumentation summarization.

Reasoning in Non-Probabilistic Uncertainty: Logic Programming and Neural-Symbolic Computing as Examples

Mar 01, 2017

Abstract: This article aims to achieve two goals: to show that probability is not the only way of dealing with uncertainty (and, moreover, that there are kinds of uncertainty which for principled reasons cannot be addressed with probabilistic means); and to provide evidence that logic-based methods can well support reasoning with uncertainty. For the latter claim, two paradigmatic examples are presented: Logic Programming with Kleene semantics for modelling reasoning from information in a discourse to an interpretation of the state of affairs of the intended model, and a neural-symbolic implementation of Input/Output logic for dealing with uncertainty in dynamic normative contexts.
