Baoxing Qin

Towards Practical Multi-Robot Hybrid Tasks Allocation for Autonomous Cleaning

Apr 04, 2023

Abstract: Task allocation plays a vital role in multi-robot autonomous cleaning systems, where multiple robots work together to clean a large area. However, most current studies focus on deterministic, single-task allocation for cleaning robots, without considering hybrid tasks in uncertain working environments. Moreover, there is a lack of datasets and benchmarks for relevant research. In this paper, to address these problems, we formulate multi-robot hybrid-task allocation in uncertain cleaning environments as a robust optimization problem. Firstly, we propose a novel robust mixed-integer linear programming model with practical constraints, including the task order constraint for different tasks and the ability constraints of hybrid robots. Secondly, we establish a dataset of 100 instances made from floor plans, each of which has 2D manually-labeled images and a 3D model. Thirdly, we provide comprehensive results on the collected dataset using three traditional optimization approaches and a deep reinforcement learning-based solver. The evaluation results show that our solution meets the needs of multi-robot cleaning task allocation and that the robust solver can protect the system from worst-case scenarios with little additional cost. The benchmark will be available at https://github.com/iamwangyabin/Multi-robot-Cleaning-Task-Allocation.
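To make the idea of robust allocation concrete, the following is a minimal Python sketch, not the paper's model. It assumes a budgeted uncertainty set (at most `gamma` tasks per robot run long by a known `deviation`), minimizes the worst-case makespan by brute force instead of solving a mixed-integer linear program, and omits the task order constraint. All names (`worst_case_load`, `robust_allocation`, `capable`, `gamma`) are hypothetical.

```python
from itertools import product


def worst_case_load(tasks, nominal, deviation, gamma):
    """Worst-case total time for one robot when at most `gamma`
    of its assigned tasks take `deviation` longer than nominal."""
    base = sum(nominal[t] for t in tasks)
    # The adversary picks the `gamma` largest deviations among this robot's tasks.
    extra = sorted((deviation[t] for t in tasks), reverse=True)[:gamma]
    return base + sum(extra)


def robust_allocation(nominal, deviation, capable, n_robots, gamma):
    """Enumerate all assignments and return (cost, assignment) with the
    smallest worst-case makespan. `capable[r]` is the set of task indices
    robot r is able to perform (a stand-in for an ability constraint)."""
    n_tasks = len(nominal)
    best_cost, best_assign = float("inf"), None
    for assign in product(range(n_robots), repeat=n_tasks):
        # Skip assignments that give a robot a task it cannot perform.
        if any(t not in capable[r] for t, r in enumerate(assign)):
            continue
        cost = max(
            worst_case_load(
                [t for t in range(n_tasks) if assign[t] == r],
                nominal, deviation, gamma,
            )
            for r in range(n_robots)
        )
        if cost < best_cost:
            best_cost, best_assign = cost, assign
    return best_cost, best_assign


# Illustrative data (made up): 4 tasks, 2 robots with different abilities.
nominal = [4, 3, 2, 5]
deviation = [2, 0, 1, 3]
capable = {0: {0, 1, 2}, 1: {1, 2, 3}}
print(robust_allocation(nominal, deviation, capable, n_robots=2, gamma=1))
```

Brute force is only workable for a handful of tasks; it is used here so the sketch stays self-contained without a solver dependency.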

A General Framework for Lifelong Localization and Mapping in Changing Environment

Nov 22, 2021

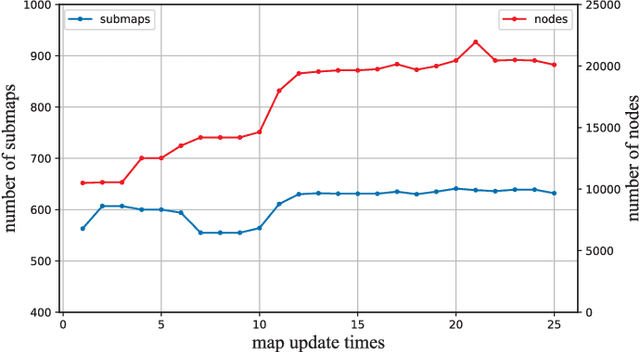

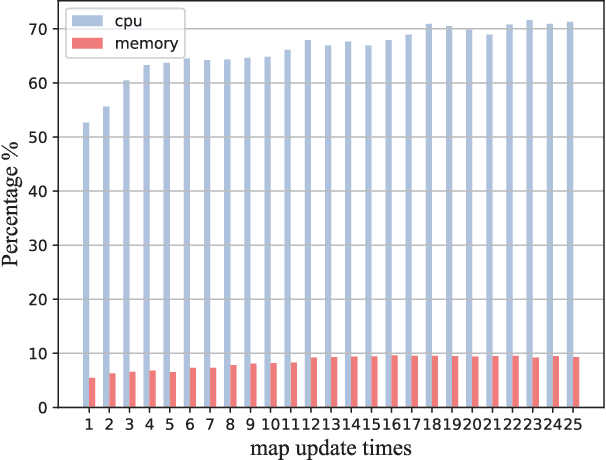

Abstract: The environment of most real-world scenarios, such as malls and supermarkets, changes constantly. A pre-built map that does not account for these changes easily becomes out-of-date. Therefore, it is necessary to have an up-to-date model of the environment to facilitate long-term operation of a robot. To this end, this paper presents a general lifelong simultaneous localization and mapping (SLAM) framework. Our framework uses a multiple-session map representation and exploits an efficient map updating strategy that includes map building, pose graph refinement and sparsification. To mitigate the unbounded increase of memory usage, we propose a map-trimming method based on the Chow-Liu maximum-mutual-information spanning tree. The proposed SLAM framework has been comprehensively validated by over a month of robot deployment in a real supermarket environment. Furthermore, we release the dataset collected from changing indoor and outdoor environments in the hope of accelerating lifelong SLAM research in the community. Our dataset is available at https://github.com/sanduan168/lifelong-SLAM-dataset.
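A Chow-Liu tree is the maximum-weight spanning tree of a graph whose edge weights are pairwise mutual information. The sketch below shows only that tree construction, using Prim's algorithm on a precomputed symmetric matrix; how the paper derives the mutual information values and uses the tree for map trimming is not described in the abstract, so the function name `chow_liu_tree` and the example matrix are assumptions made for illustration.

```python
def chow_liu_tree(mi):
    """Return the edges of a maximum-weight spanning tree over a
    symmetric mutual-information matrix `mi`, grown with Prim's algorithm."""
    n = len(mi)
    if n == 0:
        return []
    in_tree = {0}  # start from node 0; the tree weight does not depend on the start
    edges = []
    while len(in_tree) < n:
        best = None
        # Find the heaviest edge connecting the tree to a node outside it.
        for u in in_tree:
            for v in range(n):
                if v in in_tree:
                    continue
                if best is None or mi[u][v] > best[0]:
                    best = (mi[u][v], u, v)
        _, u, v = best
        edges.append((u, v))
        in_tree.add(v)
    return edges


# Made-up mutual information between four pose-graph nodes.
mi = [
    [0.0, 0.9, 0.1, 0.2],
    [0.9, 0.0, 0.8, 0.3],
    [0.1, 0.8, 0.0, 0.7],
    [0.2, 0.3, 0.7, 0.0],
]
print(chow_liu_tree(mi))
```

Edges outside the returned tree carry the least shared information under this criterion, which is why such a tree is a natural candidate for deciding what to keep when sparsifying a graph.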

Are We Ready for Service Robots? The OpenLORIS-Scene Datasets for Lifelong SLAM

Nov 13, 2019

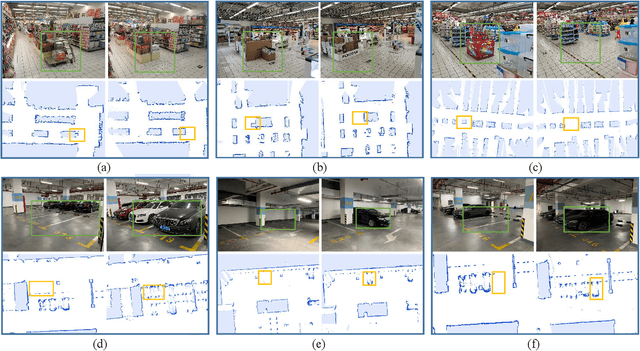

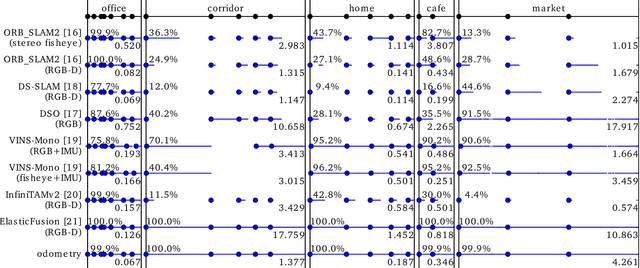

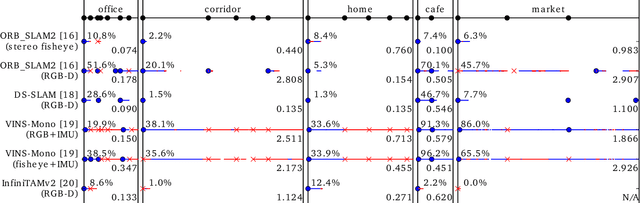

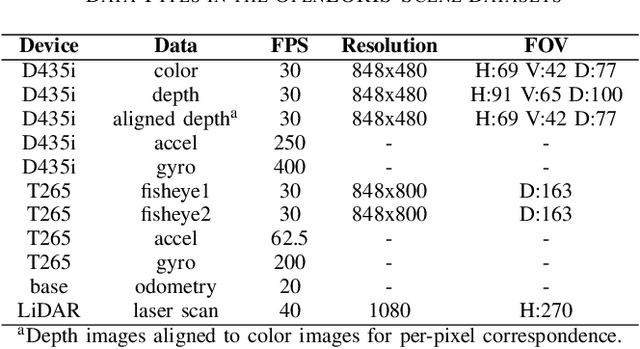

Abstract: Service robots should be able to operate autonomously in dynamic and daily changing environments over an extended period of time. While Simultaneous Localization And Mapping (SLAM) is one of the most fundamental problems for robotic autonomy, most existing SLAM works are evaluated with data sequences that are recorded in a short period of time. In real-world deployment, there can be out-of-sight scene changes caused by both natural factors and human activities. For example, in home scenarios, most objects may be movable, replaceable or deformable, and the visual features of the same place may differ significantly on successive days. Such out-of-sight dynamics pose great challenges to the robustness of pose estimation, and hence to a robot's long-term deployment and operation. To differentiate the aforementioned problem from conventional works, which are usually evaluated in a static setting in a single run, the term lifelong SLAM is used here to address SLAM problems in an ever-changing environment over a long period of time. To accelerate lifelong SLAM research, we release the OpenLORIS-Scene datasets. The data are collected in real-world indoor scenes, multiple times in each place, to include scene changes in real life. We also design benchmarking metrics for lifelong SLAM, with which the robustness and accuracy of pose estimation are evaluated separately. The datasets and benchmark are available online at https://lifelong-robotic-vision.github.io/dataset/scene.
