Assaf Marron

Data and System Perspectives of Sustainable Artificial Intelligence

Jan 13, 2025

Abstract: Sustainable AI is a subfield of AI concerned with developing and using AI systems in ways that aim to reduce environmental impact and achieve sustainability. Sustainable AI is increasingly important given that training and inference with AI models such as large language models consume a large amount of computing power. In this article, we discuss current issues, opportunities and example solutions for addressing these issues, and future challenges to tackle, from the data and system perspectives, related to data acquisition, data processing, and AI model training and inference.

Human or Machine: Reflections on Turing-Inspired Testing for the Everyday

May 07, 2023

Abstract: Turing's 1950 paper introduced the famed "imitation game", a test originally proposed to capture the notion of machine intelligence. Over the years, the Turing test has attracted a great deal of interest, resulting in several variants as well as heated discussion and controversy. Here we sidestep the question of whether a particular machine can be labeled intelligent, or can be said to match human capabilities in a given context. Instead, inspired by Turing, we draw attention to the seemingly simpler challenge of determining whether one is interacting with a human or with a machine in the context of everyday life. We reflect upon the importance of this Human-or-Machine question and the uses one may make of a reliable answer to it. Whereas Turing's original test is widely considered to be more of a thought experiment, the Human-or-Machine question as discussed here has obvious practical significance. And while the jury is still out on whether machines can mimic human behavior with high fidelity in everyday contexts, we argue that near-term exploration of the issues raised here can contribute to methods for developing computerized systems, and may also improve our understanding of human behavior in general.

The biosphere computes evolution by autoencoding interacting organisms into species and decoding species into ecosystems

Apr 12, 2022

Abstract: Autoencoding is a machine-learning technique for extracting a compact representation of the essential features of input data; this representation enables a variety of applications that rely on encoding the relevant data and subsequently reconstructing it by decoding. Here, we document our discovery that the biosphere evolves by a natural process akin to computer autoencoding. We establish the following points: (1) A species is defined by its species interaction code. The species code consists of the fundamental, core interactions of the species with its external and internal environments; core interactions are encoded by multi-scale networks including molecules-cells-organisms. (2) Evolution expresses sustainable changes in species interaction codes; these changing codes both map and construct the species environment. The survival of species is computed by what we term natural autoencoding: arrays of input interactions generate species codes, which survive by decoding into sustained ecosystem interactions. This group process, termed survival-of-the-fitted, supplants the Darwinian struggle of individuals and survival-of-the-fittest alone. DNA is only one element in natural autoencoding. (3) Natural autoencoding and artificial autoencoding techniques exhibit definite similarities and differences. Biosphere autoencoding and survival-of-the-fitted shed new light on the mechanism of evolution. Evolutionary autoencoding renders evolution amenable to new approaches in computer modeling.
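
For readers less familiar with the artificial technique that the abstract compares against, here is a sketch of the encode, compress, decode idea in its simplest form: a linear autoencoder trained by gradient descent. This is a generic textbook illustration and not the authors' model of natural autoencoding. The toy data (points on the line y = 2x), the initial weights, the learning rate, and the function name `train_linear_autoencoder` are all assumptions chosen for the example.

```python
# Minimal linear autoencoder in pure Python (illustrative sketch, not the paper's model).
# Assumption: toy 2-D points on the line y = 2x, compressed to a 1-D code z.


def train_linear_autoencoder(data, lr=0.05, steps=500):
    """Fit encoder weights w and decoder weights v by full-batch gradient
    descent on the mean squared reconstruction error."""
    w = [0.5, 0.1]  # encoder: z = w[0]*x + w[1]*y  (hypothetical initial values)
    v = [0.3, 0.2]  # decoder: (x', y') = (v[0]*z, v[1]*z)
    n = len(data)

    def loss():
        total = 0.0
        for x, y in data:
            z = w[0] * x + w[1] * y
            total += (x - v[0] * z) ** 2 + (y - v[1] * z) ** 2
        return total / n

    history = [loss()]
    for _ in range(steps):
        gw = [0.0, 0.0]
        gv = [0.0, 0.0]
        for x, y in data:
            z = w[0] * x + w[1] * y           # encode
            ex = v[0] * z - x                 # decode, then reconstruction error in x
            ey = v[1] * z - y                 # reconstruction error in y
            gv[0] += 2 * ex * z               # gradient w.r.t. decoder weights
            gv[1] += 2 * ey * z
            gz = 2 * (ex * v[0] + ey * v[1])  # error signal flowing back into z
            gw[0] += gz * x                   # gradient w.r.t. encoder weights
            gw[1] += gz * y
        # Update both layers using gradients computed from the same (old) parameters.
        for i in range(2):
            w[i] -= lr * gw[i] / n
            v[i] -= lr * gv[i] / n
        history.append(loss())
    return w, v, history


data = [(x, 2 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w, v, history = train_linear_autoencoder(data)
z = w[0] * 1.0 + w[1] * 2.0  # compact 1-D code for the point (1, 2)
print("loss before:", history[0], "after:", history[-1])
print("reconstruction of (1, 2):", (v[0] * z, v[1] * z))
```

Because the toy data lie on a one-dimensional line, a single code value per point is enough to reconstruct them, so the reconstruction error should fall close to zero. Practical autoencoders usually add nonlinear layers and are built with a deep-learning library rather than by hand.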

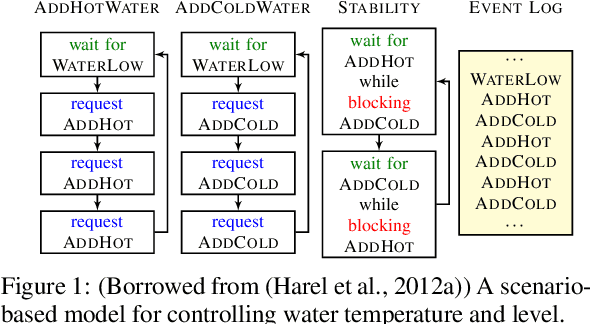

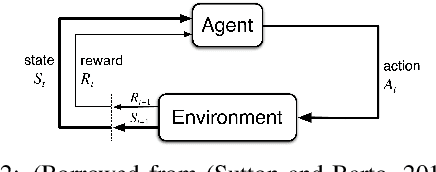

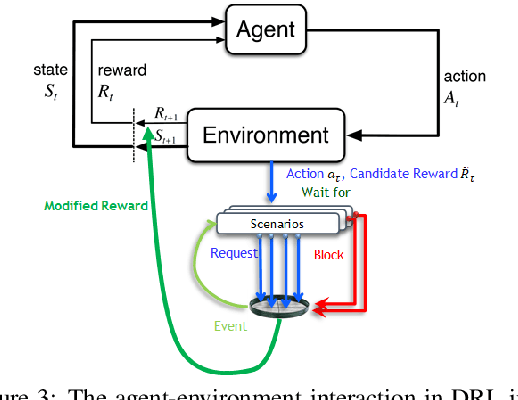

Scenario-Assisted Deep Reinforcement Learning

Feb 09, 2022

Abstract: Deep reinforcement learning has proven remarkably useful in training agents from unstructured data. However, the opacity of the produced agents makes it difficult to ensure that they adhere to various requirements posed by human engineers. In this work-in-progress report, we propose a technique for enhancing the reinforcement learning training process (specifically, its reward calculation) in a way that allows human engineers to directly contribute their expert knowledge, making the agent under training more likely to comply with various relevant constraints. Moreover, our proposed approach allows formulating these constraints using advanced model engineering techniques, such as scenario-based modeling. This mix of black-box learning-based tools with classical modeling approaches could produce systems that are effective and efficient, but are also more transparent and maintainable. We evaluated our technique using a case study from the domain of internet congestion control, obtaining promising results.
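
The abstract does not specify how expert constraints enter the reward. One simple way to picture the idea is reward shaping: each hand-written, scenario-style rule flags the actions it forbids in a given state, and every violation subtracts a penalty from the environment's reward. The sketch below assumes that design. The `Scenario` class, the `shaped_reward` function, the penalty weight, and the two congestion-control rules and their thresholds are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Scenario:
    """Hypothetical scenario-style rule: returns True for (state, action) pairs it forbids."""
    name: str
    forbids: Callable[[dict, str], bool]


def shaped_reward(base_reward: float, state: dict, action: str,
                  scenarios: List[Scenario], penalty: float = 1.0) -> float:
    """Environment reward minus a fixed penalty per violated rule (assumed shaping scheme)."""
    violations = sum(1 for s in scenarios if s.forbids(state, action))
    return base_reward - penalty * violations


# Hypothetical congestion-control rules; names and thresholds are illustrative only.
scenarios = [
    Scenario("no_increase_under_high_latency",
             lambda st, a: a == "increase" and st["latency_ms"] > 100),
    Scenario("no_decrease_when_underutilized",
             lambda st, a: a == "decrease" and st["utilization"] < 0.2),
]

state = {"latency_ms": 150, "utilization": 0.9}
print(shaped_reward(0.5, state, "increase", scenarios))  # 0.5 - 1.0 = -0.5
print(shaped_reward(0.5, state, "hold", scenarios))      # no rule violated: 0.5
```

Keeping each rule as a separate object mirrors the modular spirit of scenario-based modeling, where behavior is specified as a set of independent scenarios. In practice the penalty weight would need tuning relative to the scale of the base reward.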

Expecting the Unexpected: Developing Autonomous-System Design Principles for Reacting to Unpredicted Events and Conditions

Jan 25, 2020

Abstract: When developing autonomous systems, engineers and other stakeholders make great efforts to prepare the system for all foreseeable events and conditions. However, these systems are still bound to encounter events and conditions that were not considered at design time. For reasons such as safety, cost, or ethics, it is often highly desirable that these new situations be handled correctly upon first encounter. In this paper we first justify our position that there will always exist unpredicted events and conditions, driven, among other things, by: new inventions in the real world; the diversity of world-wide system deployments and uses; and the non-negligible probability that multiple seemingly unlikely events, which may be neglected at design time, will not only occur, but occur together. We then argue that despite this unpredictability, handling these events and conditions is indeed possible. Hence, we offer and exemplify design principles that, when applied in advance, can enable systems to deal in the future with unpredicted circumstances. We conclude with a discussion of how this work and a broader theoretical study of the unexpected can contribute toward a foundation of engineering principles for developing trustworthy next-generation autonomous systems.
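
A back-of-the-envelope calculation makes concrete the claim that unlikely events will eventually co-occur. The sketch assumes two independent rare events, each with a hypothetical per-hour probability of 10^-4. It also assumes a hypothetical fleet of one million deployed systems, each running for 10,000 hours. These numbers are illustrative and do not come from the paper. The helper name `prob_at_least_one` is also an assumption.

```python
import math


def prob_at_least_one(p_event: float, trials: float) -> float:
    """P(at least one occurrence in `trials` independent trials) = 1 - (1 - p)^n."""
    # log1p/expm1 preserve precision when p is tiny and n is huge.
    return -math.expm1(trials * math.log1p(-p_event))


p_single = 1e-4          # hypothetical per-hour probability of each rare event
p_joint = p_single ** 2  # both events in the same hour, assuming independence
hours_per_unit = 10_000  # hypothetical operating lifetime of one system
fleet_size = 1_000_000   # hypothetical number of deployed systems

p_one_unit = prob_at_least_one(p_joint, hours_per_unit)
p_fleet = prob_at_least_one(p_joint, hours_per_unit * fleet_size)
expected_fleet = p_joint * hours_per_unit * fleet_size

print(f"joint per-hour probability: {p_joint:.1e}")
print(f"one system over its lifetime: {p_one_unit:.1e}")
print(f"expected co-occurrences across fleet: {expected_fleet:.0f}")
print(f"at least one somewhere in the fleet: {p_fleet:.6f}")
```

Under these assumptions, the joint event has a probability of about 10^-8 per hour. That is negligible for a single system over its lifetime (roughly 10^-4). Across the whole fleet, however, the expected number of co-occurrences is about 100, so at least one occurrence is practically certain. Correlated events would change the numbers, often making co-occurrence even more likely.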
