Raz Yerushalmi

DEM: A Method for Certifying Deep Neural Network Classifier Outputs in Aerospace

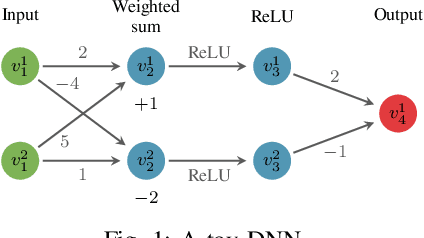

Jan 04, 2024Abstract:Software development in the aerospace domain requires adhering to strict, high-quality standards. While there exist regulatory guidelines for commercial software in this domain (e.g., ARP-4754 and DO-178), these do not apply to software with deep neural network (DNN) components. Consequently, it is unclear how to allow aerospace systems to benefit from the deep learning revolution. Our work here seeks to address this challenge with a novel, output-centric approach for DNN certification. Our method employs statistical verification techniques, and has the key advantage of being able to flag specific inputs for which the DNN's output may be unreliable - so that they may be later inspected by a human expert. To achieve this, our method conducts a statistical analysis of the DNN's predictions for other, nearby inputs, in order to detect inconsistencies. This is in contrast to existing techniques, which typically attempt to certify the entire DNN, as opposed to individual outputs. Our method uses the DNN as a black-box, and makes no assumptions about its topology. We hope that this work constitutes another step towards integrating DNNs in safety-critical applications - especially in the aerospace domain, where high standards of quality and reliability are crucial.

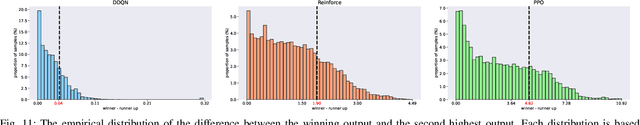

gRoMA: a Tool for Measuring Deep Neural Networks Global Robustness

Jan 05, 2023Abstract:Deep neural networks (DNNs) are a state-of-the-art technology, capable of outstanding performance in many key tasks. However, it is challenging to integrate DNNs into safety-critical systems, such as those in the aerospace or automotive domains, due to the risk of adversarial inputs: slightly perturbed inputs that can cause the DNN to make grievous mistakes. Adversarial inputs have been shown to plague even modern DNNs; and so the risks they pose must be measured and mitigated to allow the safe deployment of DNNs in safety-critical systems. Here, we present a novel and scalable tool called gRoMA, which uses a statistical approach for formally measuring the global categorial robustness of a DNN - i.e., the probability of randomly encountering an adversarial input for a specific output category. Our tool operates on pre-trained, black-box classification DNNs. It randomly generates input samples that belong to an output category of interest, measures the DNN's susceptibility to adversarial inputs around these inputs, and then aggregates the results to infer the overall global robustness of the DNN up to some small bounded error. For evaluation purposes, we used gRoMA to measure the global robustness of the widespread Densenet DNN model over the CIFAR10 dataset and our results exposed significant gaps in the robustness of the different output categories. This experiment demonstrates the scalability of the new approach and showcases its potential for allowing DNNs to be deployed within critical systems of interest.

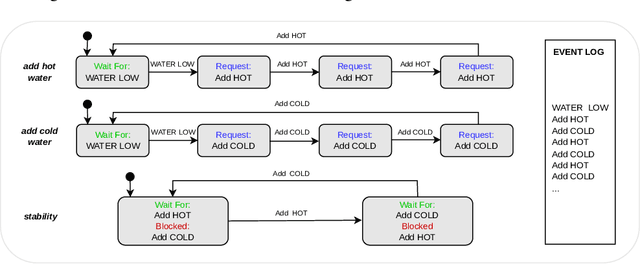

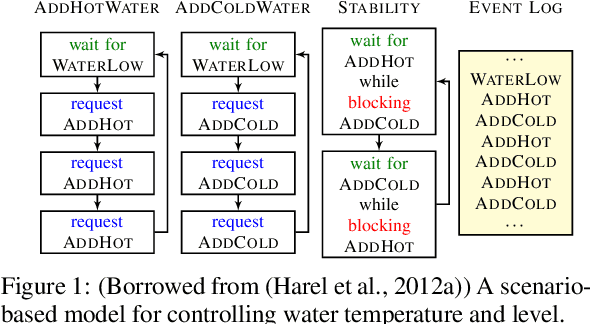

Constrained Reinforcement Learning for Robotics via Scenario-Based Programming

Jun 20, 2022

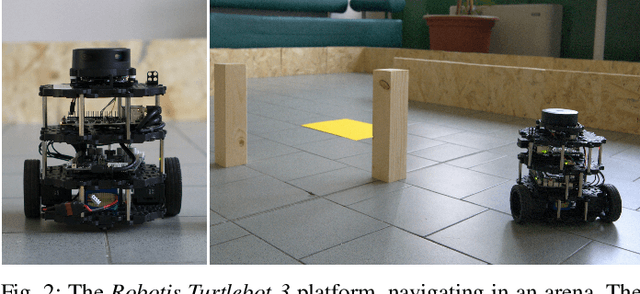

Abstract:Deep reinforcement learning (DRL) has achieved groundbreaking successes in a wide variety of robotic applications. A natural consequence is the adoption of this paradigm for safety-critical tasks, where human safety and expensive hardware can be involved. In this context, it is crucial to optimize the performance of DRL-based agents while providing guarantees about their behavior. This paper presents a novel technique for incorporating domain-expert knowledge into a constrained DRL training loop. Our technique exploits the scenario-based programming paradigm, which is designed to allow specifying such knowledge in a simple and intuitive way. We validated our method on the popular robotic mapless navigation problem, in simulation, and on the actual platform. Our experiments demonstrate that using our approach to leverage expert knowledge dramatically improves the safety and the performance of the agent.

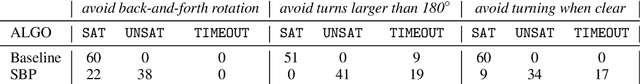

Verifying Learning-Based Robotic Navigation Systems

May 26, 2022

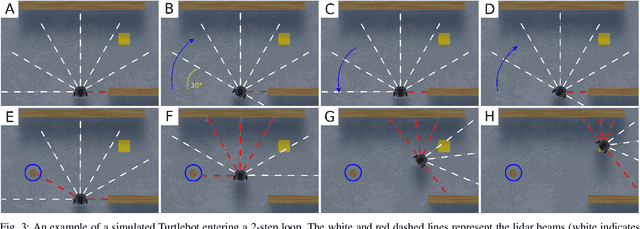

Abstract:Deep reinforcement learning (DRL) has become a dominant deep-learning paradigm for various tasks in which complex policies are learned within reactive systems. In parallel, there has recently been significant research on verifying deep neural networks. However, to date, there has been little work demonstrating the use of modern verification tools on real, DRL-controlled systems. In this case-study paper, we attempt to begin bridging this gap, and focus on the important task of mapless robotic navigation -- a classic robotics problem, in which a robot, usually controlled by a DRL agent, needs to efficiently and safely navigate through an unknown arena towards a desired target. We demonstrate how modern verification engines can be used for effective model selection, i.e., the process of selecting the best available policy for the robot in question from a pool of candidate policies. Specifically, we use verification to detect and rule out policies that may demonstrate suboptimal behavior, such as collisions and infinite loops. We also apply verification to identify models with overly conservative behavior, thus allowing users to choose superior policies that are better at finding an optimal, shorter path to a target. To validate our work, we conducted extensive experiments on an actual robot, and confirmed that the suboptimal policies detected by our method were indeed flawed. We also compared our verification-driven approach to state-of-the-art gradient attacks, and our results demonstrate that gradient-based methods are inadequate in this setting. Our work is the first to demonstrate the use of DNN verification backends for recognizing suboptimal DRL policies in real-world robots, and for filtering out unwanted policies. We believe that the methods presented in this work can be applied to a large range of application domains that incorporate deep-learning-based agents.

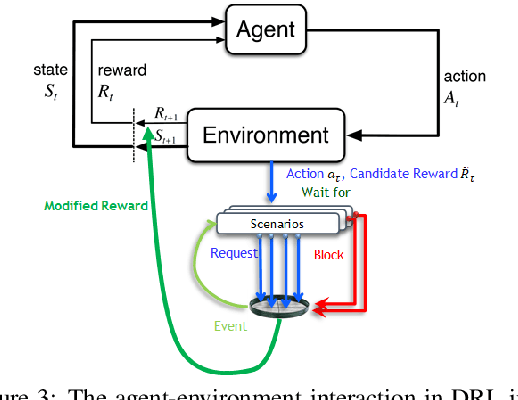

Scenario-Assisted Deep Reinforcement Learning

Feb 09, 2022

Abstract:Deep reinforcement learning has proven remarkably useful in training agents from unstructured data. However, the opacity of the produced agents makes it difficult to ensure that they adhere to various requirements posed by human engineers. In this work-in-progress report, we propose a technique for enhancing the reinforcement learning training process (specifically, its reward calculation), in a way that allows human engineers to directly contribute their expert knowledge, making the agent under training more likely to comply with various relevant constraints. Moreover, our proposed approach allows formulating these constraints using advanced model engineering techniques, such as scenario-based modeling. This mix of black-box learning-based tools with classical modeling approaches could produce systems that are effective and efficient, but are also more transparent and maintainable. We evaluated our technique using a case-study from the domain of internet congestion control, obtaining promising results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge