Ashirbani Saha

Performance of externally validated machine learning models based on histopathology images for the diagnosis, classification, prognosis, or treatment outcome prediction in female breast cancer: A systematic review

Dec 09, 2023

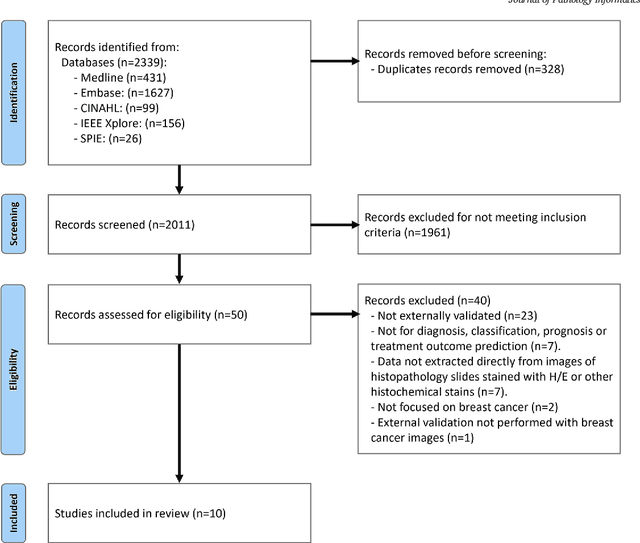

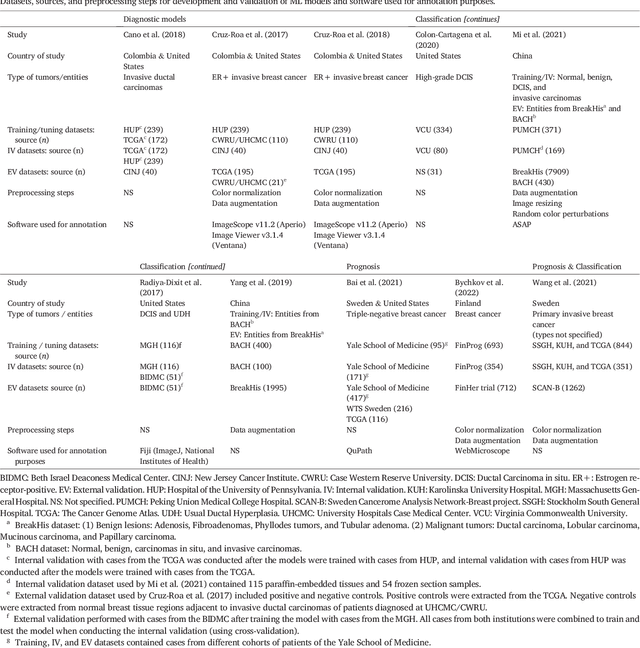

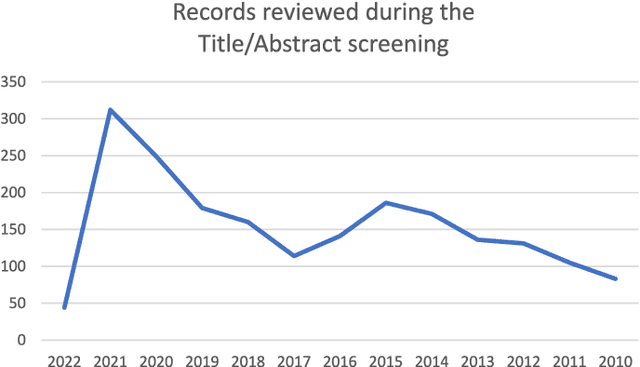

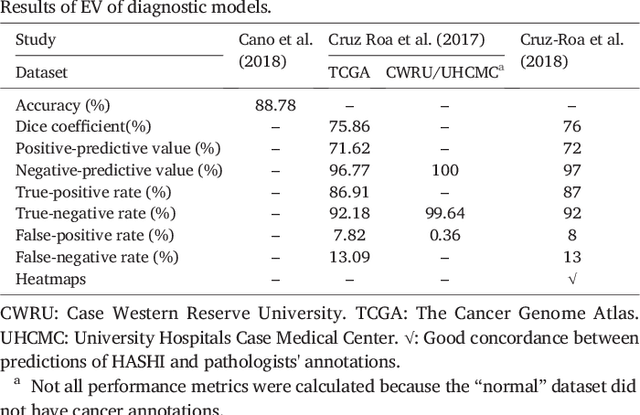

Abstract: Numerous machine learning (ML) models have been developed for breast cancer using various types of data. Successful external validation (EV) of ML models is important evidence of their generalizability. The aim of this systematic review was to assess the performance of externally validated ML models based on histopathology images for diagnosis, classification, prognosis, or treatment outcome prediction in female breast cancer. A systematic search of MEDLINE, EMBASE, CINAHL, IEEE, MICCAI, and SPIE conferences was performed for studies published between January 2010 and February 2022. The Prediction Model Risk of Bias Assessment Tool (PROBAST) was employed, and the results were narratively described. Of the 2011 non-duplicated citations, 8 journal articles and 2 conference proceedings met inclusion criteria. Three studies externally validated ML models for diagnosis, 4 for classification, 2 for prognosis, and 1 for both classification and prognosis. Most studies used convolutional neural networks and one used logistic regression algorithms. For diagnostic/classification models, the most common performance metrics reported in the EV were accuracy and area under the curve, which were greater than 87% and 90%, respectively, using pathologists' annotations as ground truth. The hazard ratios in the EV of prognostic ML models were between 1.7 (95% CI, 1.2-2.6) and 1.8 (95% CI, 1.3-2.7) to predict distant disease-free survival; 1.91 (95% CI, 1.11-3.29) for recurrence, and between 0.09 (95% CI, 0.01-0.70) and 0.65 (95% CI, 0.43-0.98) for overall survival, using clinical data as ground truth. Despite EV being an important step before the clinical application of an ML model, it has not been performed routinely. The large variability in the training/validation datasets, methods, performance metrics, and reported information limited the comparison of the models and the analysis of their results (...)

Seeing the random forest through the decision trees. Supporting learning health systems from histopathology with machine learning models: Challenges and opportunities

Dec 06, 2023

Abstract: This paper discusses some overlooked challenges faced when working with machine learning models for histopathology and presents a novel opportunity to support "Learning Health Systems" with them. Initially, the authors elaborate on these challenges after separating them according to their mitigation strategies: those that need innovative approaches, time, or future technological capabilities and those that require a conceptual reappraisal from a critical perspective. Then, a novel opportunity to support "Learning Health Systems" by integrating hidden information extracted by ML models from digitalized histopathology slides with other healthcare big data is presented.

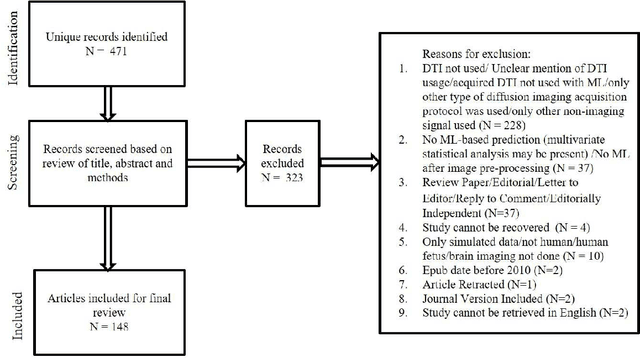

Machine learning applications using diffusion tensor imaging of human brain: A PubMed literature review

Dec 18, 2020

Abstract: We performed a PubMed search to find 148 papers published between January 2010 and December 2019 related to human brain, Diffusion Tensor Imaging (DTI), and Machine Learning (ML). The studies focused on healthy cohorts (n = 15), mental health disorders (n = 25), tumor (n = 19), trauma (n = 5), dementia (n = 24), developmental disorders (n = 5), movement disorders (n = 9), other neurological disorders (n = 27), miscellaneous non-neurological disorders, or without stating the disease of focus (n = 7), and multiple combinations of the aforementioned categories (n = 12). Classification of patients using information from DTI stands out as the most commonly performed ML application (n = 114). A significant number (n = 93) of studies used support vector machines (SVM) as the preferred choice of ML model for classification. A significant portion (31/44) of publications in recent years (2018-2019) continued to use SVM, support vector regression, and random forest, which are part of traditional ML. Though many types of applications across various health conditions (including healthy cohorts) were conducted, the majority of the studies were based on small cohorts (fewer than 100 subjects) and did not conduct independent/external validation on test sets.

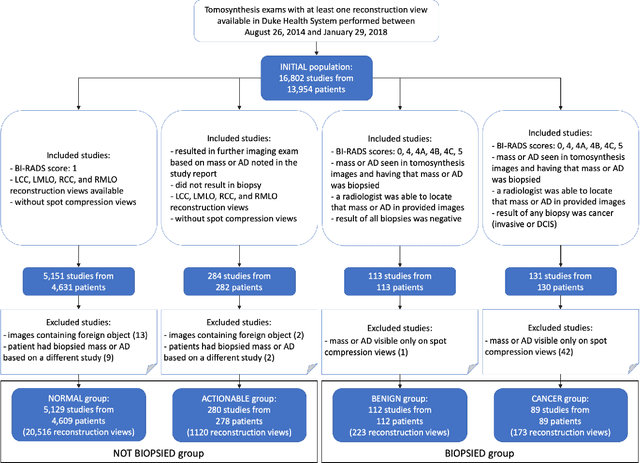

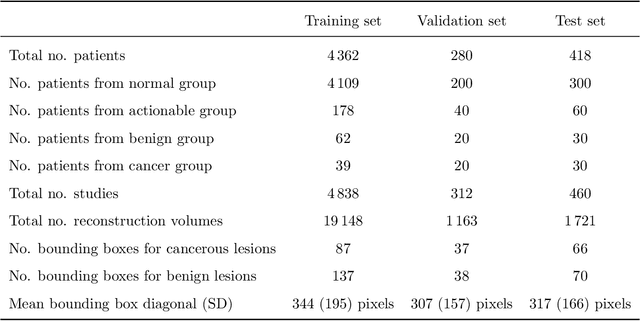

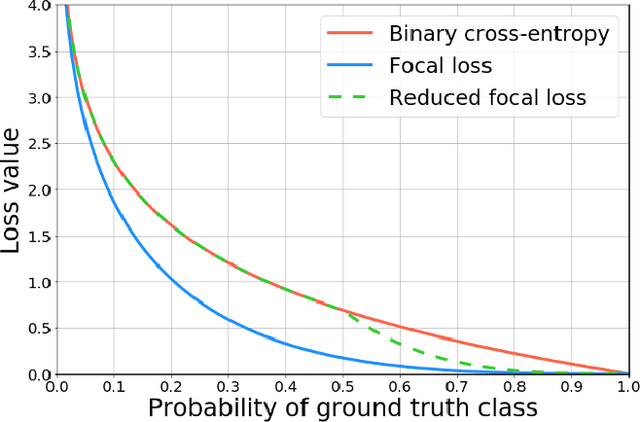

Detection of masses and architectural distortions in digital breast tomosynthesis: a publicly available dataset of 5,060 patients and a deep learning model

Nov 13, 2020

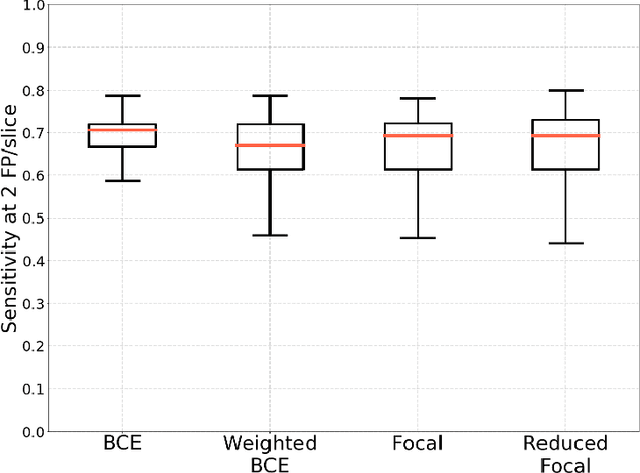

Abstract: Breast cancer screening is one of the most common radiological tasks with over 39 million exams performed each year. While breast cancer screening has been one of the most studied medical imaging applications of artificial intelligence, the development and evaluation of the algorithms are hindered by the lack of well-annotated large-scale publicly available datasets. This is particularly an issue for digital breast tomosynthesis (DBT), which is a relatively new breast cancer screening modality. We have curated and made publicly available a large-scale dataset of digital breast tomosynthesis images. It contains 22,032 reconstructed DBT volumes belonging to 5,610 studies from 5,060 patients. This included four groups: (1) 5,129 normal studies, (2) 280 studies where additional imaging was needed but no biopsy was performed, (3) 112 benign biopsied studies, and (4) 89 studies with cancer. Our dataset included masses and architectural distortions which were annotated by two experienced radiologists. Additionally, we developed a single-phase deep learning detection model and tested it using our dataset to serve as a baseline for future research. Our model reached a sensitivity of 65% at 2 false positives per breast. Our large, diverse, and highly-curated dataset will facilitate development and evaluation of AI algorithms for breast cancer screening by providing data for training as well as a common set of cases for model validation. The performance of the model developed in our study shows that the task remains challenging and will serve as a baseline for future model development.

Association of genomic subtypes of lower-grade gliomas with shape features automatically extracted by a deep learning algorithm

Jun 09, 2019

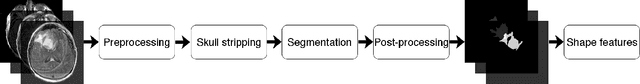

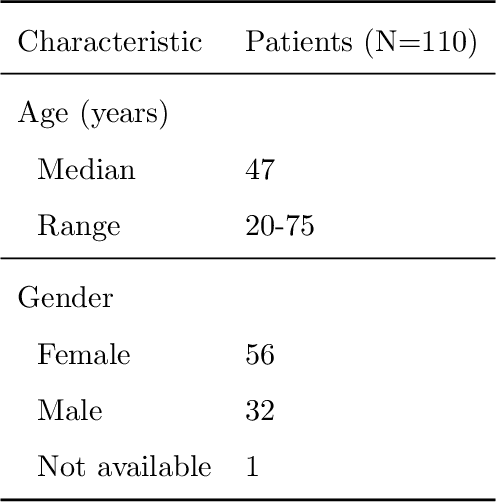

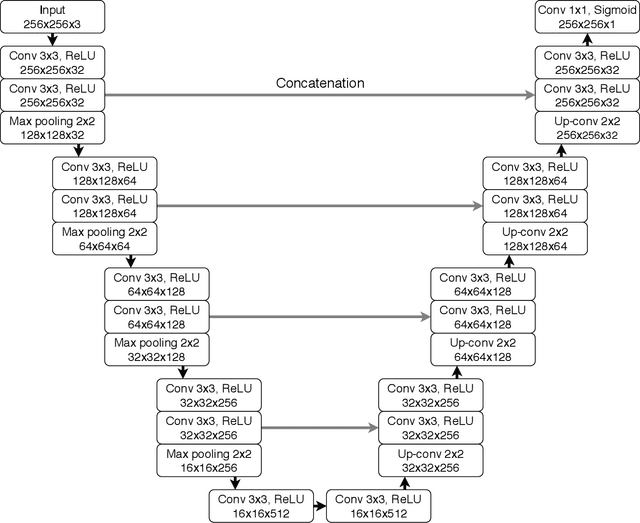

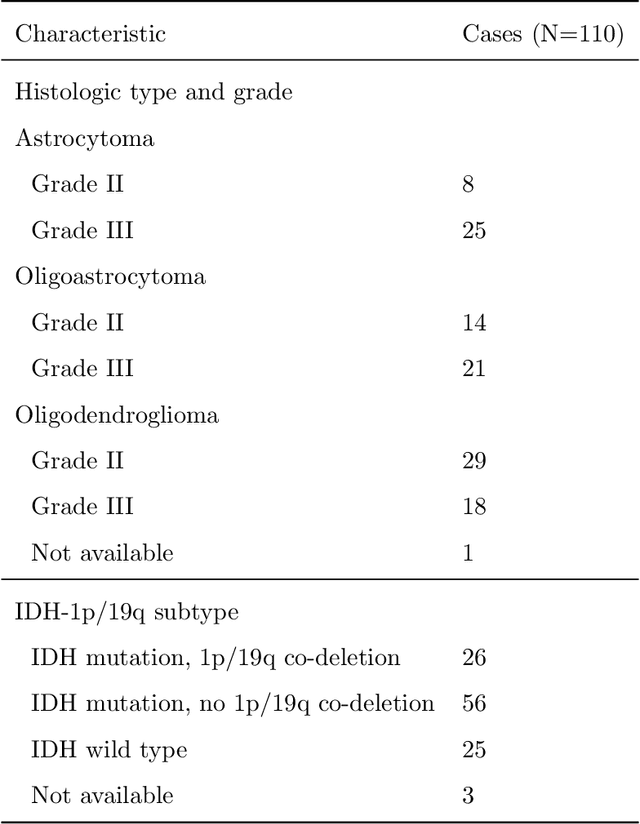

Abstract: Recent analysis identified distinct genomic subtypes of lower-grade glioma tumors which are associated with shape features. In this study, we propose a fully automatic way to quantify tumor imaging characteristics using deep learning-based segmentation and test whether these characteristics are predictive of tumor genomic subtypes. We used preoperative imaging and genomic data of 110 patients from 5 institutions with lower-grade gliomas from The Cancer Genome Atlas. Based on automatic deep learning segmentations, we extracted three features which quantify two-dimensional and three-dimensional characteristics of the tumors. Genomic data for the analyzed cohort of patients consisted of previously identified genomic clusters based on IDH mutation and 1p/19q co-deletion, DNA methylation, gene expression, DNA copy number, and microRNA expression. To analyze the relationship between the imaging features and genomic clusters, we conducted the Fisher exact test for 10 hypotheses for each pair of imaging feature and genomic subtype. To account for multiple hypothesis testing, we applied a Bonferroni correction. P-values lower than 0.005 were considered statistically significant. We found the strongest association between RNASeq clusters and the bounding ellipsoid volume ratio ($p<0.0002$) and between RNASeq clusters and margin fluctuation ($p<0.005$). In addition, we identified associations between bounding ellipsoid volume ratio and all tested molecular subtypes ($p<0.02$) as well as between angular standard deviation and RNASeq cluster ($p<0.02$). In terms of automatic tumor segmentation that was used to generate the quantitative image characteristics, our deep learning algorithm achieved a mean Dice coefficient of 82%, which is comparable to human performance.
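The significance threshold quoted in this abstract is consistent with a Bonferroni correction over 10 hypotheses. The sketch below illustrates that arithmetic only; the family-wise level of 0.05 is an assumption (the abstract does not state it), and the function names and sample p-values are hypothetical.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under a Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def bonferroni_significant(p_values, alpha=0.05):
    """Flag each p-value that falls below the corrected threshold.

    alpha=0.05 is an assumed family-wise error rate, not a value
    taken from the abstract.
    """
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


# Hypothetical p-values for 10 tests, for illustration only.
example_p_values = [0.0001, 0.004, 0.02, 0.3, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
flags = bonferroni_significant(example_p_values)
```

With 10 tests and an assumed family-wise level of 0.05, the per-test threshold works out to 0.005, so only the first two hypothetical p-values are flagged.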

Automatic deep learning-based normalization of breast dynamic contrast-enhanced magnetic resonance images

Jul 05, 2018

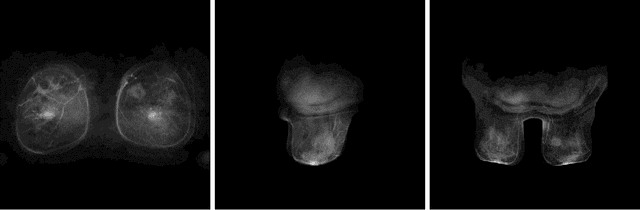

Abstract: Objective: To develop an automatic image normalization algorithm for intensity correction of images from breast dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) acquired by different MRI scanners with various imaging parameters, using only image information. Methods: DCE-MR images of 460 subjects with breast cancer acquired by different scanners were used in this study. Each subject had one T1-weighted pre-contrast image and three T1-weighted post-contrast images available. Our normalization algorithm operated under the assumption that the same type of tissue in different patients should be represented by the same voxel value. We used four tissue/material types as the anchors for the normalization: 1) air, 2) fat tissue, 3) dense tissue, and 4) heart. The algorithm proceeded in the following two steps: First, a state-of-the-art deep learning-based algorithm was applied to perform tissue segmentation accurately and efficiently. Then, based on the segmentation results, a subject-specific piecewise linear mapping function was applied between the anchor points to normalize the same type of tissue in different patients into the same intensity ranges. We evaluated the algorithm with 300 subjects used for training and the rest used for testing. Results: The application of our algorithm to images with different scanning parameters resulted in highly improved consistency in pixel values and extracted radiomics features. Conclusion: The proposed image normalization strategy based on tissue segmentation can perform intensity correction fully automatically, without the knowledge of the scanner parameters. Significance: We have thoroughly tested our algorithm and showed that it successfully normalizes the intensity of DCE-MR images. We made our software publicly available for others to apply in their analyses.
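The second step described in this abstract, a piecewise linear mapping between anchor points, can be illustrated with a minimal sketch. This is not the authors' released software: the function name, the anchor intensities, and the assumption that the subject's anchors are sorted in strictly increasing order are all hypothetical choices made for illustration.

```python
from bisect import bisect_right


def piecewise_linear_map(value, src_anchors, dst_anchors):
    """Map an intensity through straight segments joining anchor pairs.

    src_anchors: one subject's anchor intensities, strictly increasing
                 (assumed ordering, e.g. one value per tissue type).
    dst_anchors: reference intensities the anchors should map to.
    Values outside the anchor range are extrapolated along the
    nearest segment.
    """
    if len(src_anchors) != len(dst_anchors) or len(src_anchors) < 2:
        raise ValueError("need at least two matching anchor pairs")
    # Index of the segment containing `value`, clamped to a valid segment.
    i = bisect_right(src_anchors, value) - 1
    i = max(0, min(i, len(src_anchors) - 2))
    x0, x1 = src_anchors[i], src_anchors[i + 1]
    y0, y1 = dst_anchors[i], dst_anchors[i + 1]
    return y0 + (value - x0) * (y1 - y0) / (x1 - x0)


# Hypothetical anchor intensities for one subject and a reference scale.
subject_anchors = [0.0, 100.0, 300.0, 500.0]
reference_anchors = [0.0, 200.0, 400.0, 800.0]
normalized = [
    piecewise_linear_map(v, subject_anchors, reference_anchors)
    for v in (50.0, 100.0, 200.0, 500.0)
]
```

In this toy setup each subject would get its own `subject_anchors` (derived from the segmentation), while `reference_anchors` stay fixed, so the same tissue type lands on the same output intensity across subjects.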

Deep learning in radiology: an overview of the concepts and a survey of the state of the art

Feb 10, 2018

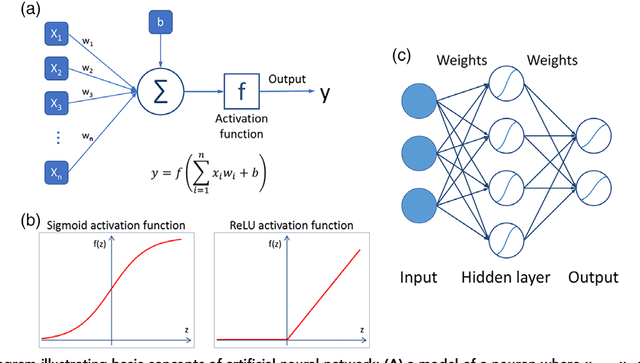

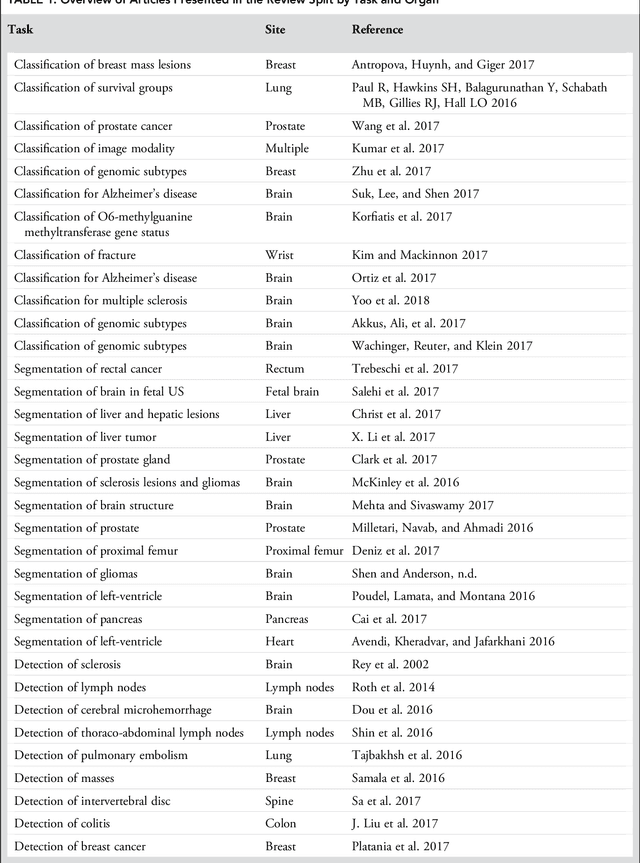

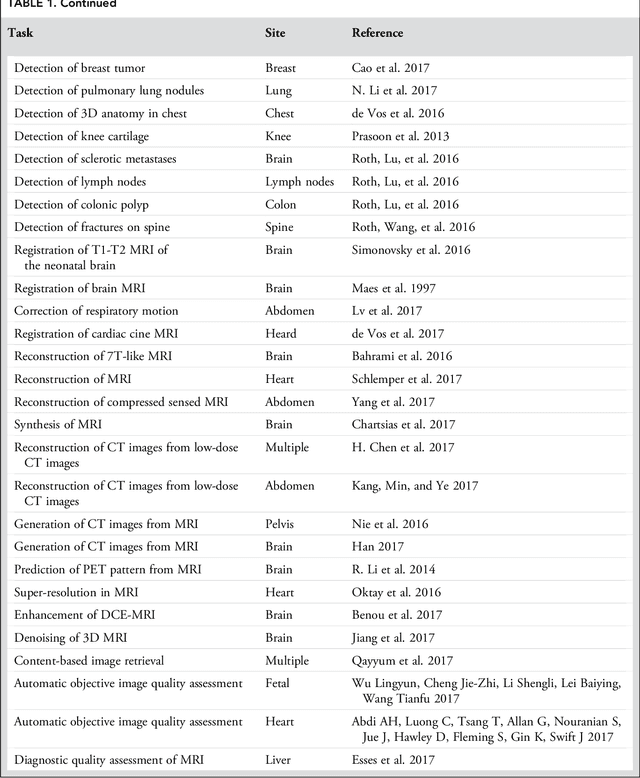

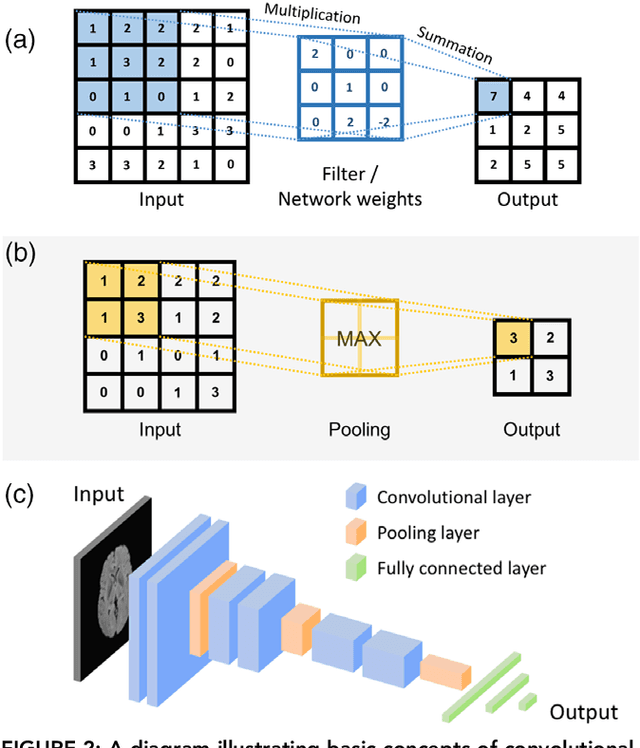

Abstract: Deep learning is a branch of artificial intelligence where networks of simple interconnected units are used to extract patterns from data in order to solve complex problems. Deep learning algorithms have shown groundbreaking performance in a variety of sophisticated tasks, especially those related to images. They have often matched or exceeded human performance. Since the medical field of radiology mostly relies on extracting useful information from images, it is a very natural application area for deep learning, and research in this area has rapidly grown in recent years. In this article, we review the clinical reality of radiology and discuss the opportunities for application of deep learning algorithms. We also introduce basic concepts of deep learning including convolutional neural networks. Then, we present a survey of the research in deep learning applied to radiology. We organize the studies by the types of specific tasks that they attempt to solve and review the broad range of utilized deep learning algorithms. Finally, we briefly discuss opportunities and challenges for incorporating deep learning in the radiology practice of the future.

Deep Learning for identifying radiogenomic associations in breast cancer

Nov 29, 2017

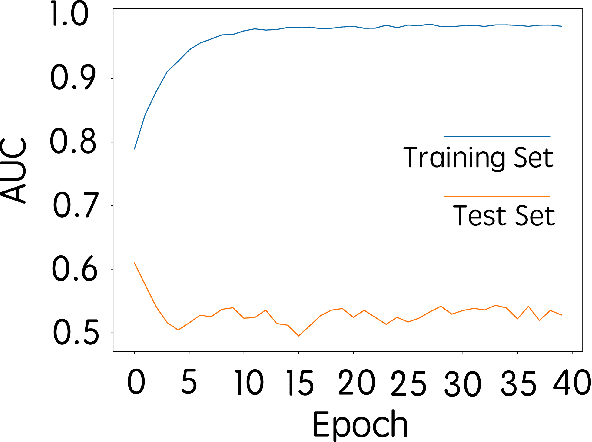

Abstract: Purpose: To determine whether deep learning models can distinguish between breast cancer molecular subtypes based on dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI). Materials and methods: In this institutional review board-approved single-center study, we analyzed DCE-MR images of 270 patients at our institution. Lesions of interest were identified by radiologists. The task was to automatically determine whether the tumor is of the Luminal A subtype or of another subtype based on the MR image patches representing the tumor. Three different deep learning approaches were used to classify tumors according to their molecular subtypes: learning from scratch where only tumor patches were used for training, transfer learning where networks pre-trained on natural images were fine-tuned using tumor patches, and off-the-shelf deep features where the features extracted by neural networks trained on natural images were used for classification with a support vector machine. Network architectures utilized in our experiments were GoogleNet, VGG, and CIFAR. We used 10-fold cross-validation and the area under the receiver operating characteristic curve (AUC) as the measure of performance. Results: The best AUC for distinguishing molecular subtypes was 0.65 (95% CI: [0.57, 0.71]) and was achieved by the off-the-shelf deep features approach. The highest AUC for training from scratch was 0.58 (95% CI: [0.51, 0.64]) and the best AUC for transfer learning was 0.60 (95% CI: [0.52, 0.65]). For the off-the-shelf approach, the features extracted from the fully connected layer performed the best. Conclusion: Deep learning may play a role in discovering radiogenomic associations in breast cancer.
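For readers unfamiliar with the metric reported here, AUC can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The sketch below computes it directly from that pairwise definition; the function name, labels, and scores are hypothetical and unrelated to the study's data.

```python
def pairwise_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    labels: iterable of 0/1 class labels; scores: classifier outputs.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = class of interest) and classifier scores.
example_labels = [0, 0, 1, 1]
example_scores = [0.1, 0.4, 0.35, 0.8]
example_auc = pairwise_auc(example_labels, example_scores)
```

An AUC of 0.5 corresponds to chance-level ranking, which puts the values reported in this abstract in context.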

Deep learning analysis of breast MRIs for prediction of occult invasive disease in ductal carcinoma in situ

Nov 28, 2017

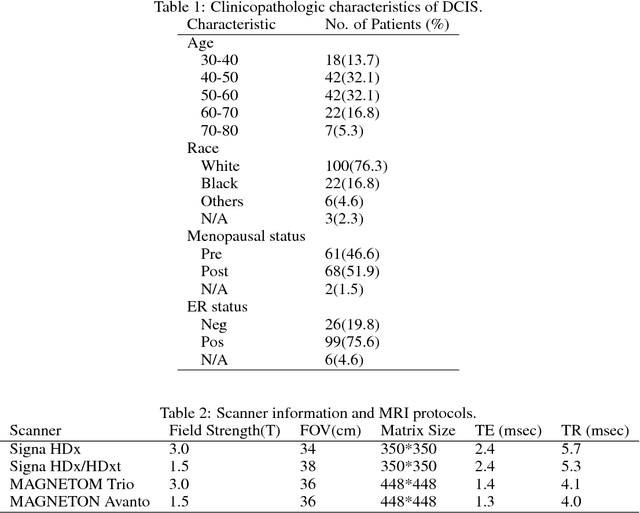

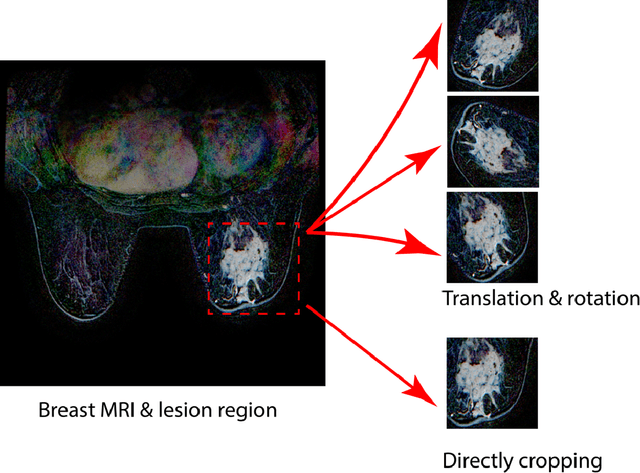

Abstract: Purpose: To determine whether deep learning-based algorithms applied to breast MR images can aid in the prediction of occult invasive disease following the diagnosis of ductal carcinoma in situ (DCIS) by core needle biopsy. Material and Methods: In this institutional review board-approved study, we analyzed dynamic contrast-enhanced fat-saturated T1-weighted MRI sequences of 131 patients at our institution with a core needle biopsy-confirmed diagnosis of DCIS. The patients had no preoperative therapy before breast MRI and no prior history of breast cancer. We explored two different deep learning approaches to predict whether there was a hidden (occult) invasive component in the analyzed tumors that was ultimately detected at surgical excision. In the first approach, we adopted the transfer learning strategy, in which a network pre-trained on a large dataset of natural images is fine-tuned with our DCIS images. Specifically, we used the GoogleNet model pre-trained on the ImageNet dataset. In the second approach, we used a pre-trained network to extract deep features, and a support vector machine (SVM) that utilizes these features to predict the upstaging of the DCIS. We used 10-fold cross-validation and the area under the ROC curve (AUC) to estimate the performance of the predictive models. Results: The best classification performance was obtained using the deep features approach with the GoogleNet model pre-trained on ImageNet as the feature extractor and a polynomial kernel SVM used as the classifier (AUC = 0.70, 95% CI: 0.58-0.79). For the transfer learning-based approach, the highest AUC obtained was 0.53 (95% CI: 0.41-0.62). Conclusion: Convolutional neural networks could potentially be used to identify occult invasive disease in patients diagnosed with DCIS at the initial core needle biopsy.

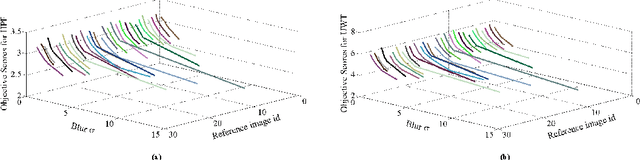

High Frequency Content based Stimulus for Perceptual Sharpness Assessment in Natural Images

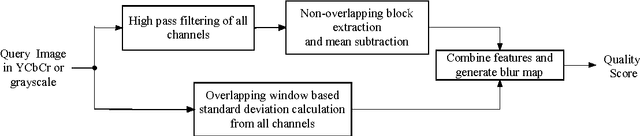

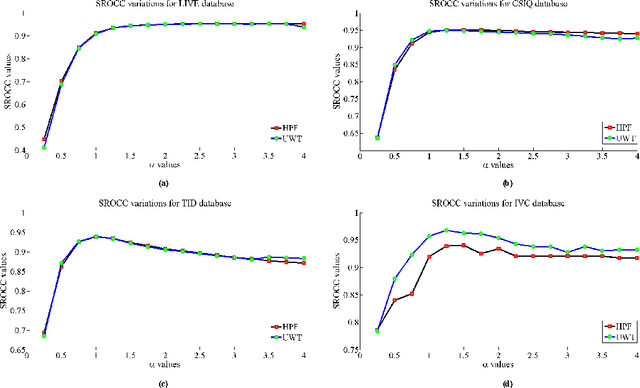

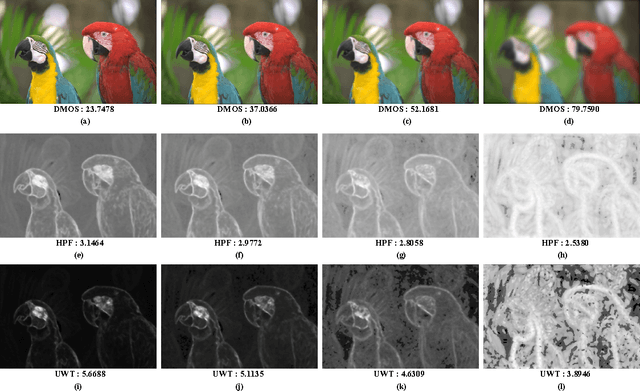

Dec 18, 2014

Abstract: A blind approach to evaluate the perceptual sharpness present in a natural image is proposed. Though the literature demonstrates a varied set of visual cues to detect or evaluate the absence or presence of sharpness, we emphasize in the current work that high frequency content and local standard deviation can form strong features to compute perceived sharpness in any natural image, and can be considered a capable alternative to the existing cues. Unsharp areas in a natural image tend to exhibit uniform intensity or a lack of sharp changes between regions. Sharp region transitions in an image are caused by the presence of spatial high frequency content. Therefore, in the proposed approach, we hypothesize that using the high frequency content as the principal stimulus, the perceived sharpness can be quantified in an image. When an image is convolved with a high pass filter, higher values at any pixel location signify the presence of high frequency content at those locations. Considering these values as the stimulus, the exponent of the stimulus is weighted by local standard deviation to impart the contribution of the local contrast within the formation of the sharpness map. The sharpness map highlights the relatively sharper regions in the image and is used to calculate the perceived sharpness score of the image. The advantages of the proposed method lie in its use of simple visual cues of high frequency content and local contrast to arrive at the perceptual score, and in requiring no training with the images. The promise of the proposed method is demonstrated by its ability to compute perceived sharpness for within-image and across-image sharpness changes and for blind evaluation of perceptual degradation resulting from the presence of blur. Experiments conducted on several databases demonstrate improved performance of the proposed method over that of the state-of-the-art techniques.
