Q. M. Jonathan Wu

Department of Electrical and Computer Engineering, University of Windsor, Canada

SAM2Auto: Auto Annotation Using FLASH

Jun 09, 2025

Abstract: Vision-Language Models (VLMs) lag behind Large Language Models due to the scarcity of annotated datasets, as creating paired visual-textual annotations is labor-intensive and expensive. To address this bottleneck, we introduce SAM2Auto, the first fully automated annotation pipeline for video datasets requiring no human intervention or dataset-specific training. Our approach consists of two key components: SMART-OD, a robust object detection system that combines automatic mask generation with open-world object detection capabilities, and FLASH (Frame-Level Annotation and Segmentation Handler), a multi-object real-time video instance segmentation (VIS) method that maintains consistent object identification across video frames even with intermittent detection gaps. Unlike existing open-world detection methods that require frame-specific hyperparameter tuning and suffer from numerous false positives, our system employs statistical approaches to minimize detection errors while ensuring consistent object tracking throughout entire video sequences. Extensive experimental validation demonstrates that SAM2Auto achieves comparable accuracy to manual annotation while dramatically reducing annotation time and eliminating labor costs. The system successfully handles diverse datasets without requiring retraining or extensive parameter adjustments, making it a practical solution for large-scale dataset creation. Our work establishes a new baseline for automated video annotation and provides a pathway for accelerating VLM development by addressing the fundamental dataset bottleneck that has constrained progress in vision-language understanding.

One-Shot Federated Unsupervised Domain Adaptation with Scaled Entropy Attention and Multi-Source Smoothed Pseudo Labeling

Mar 13, 2025

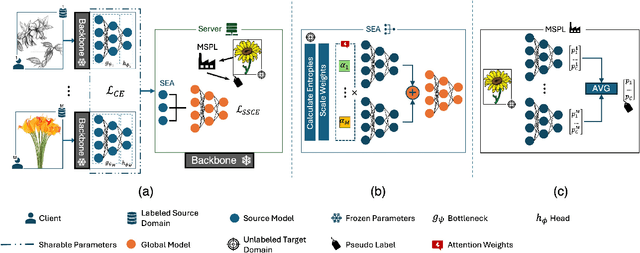

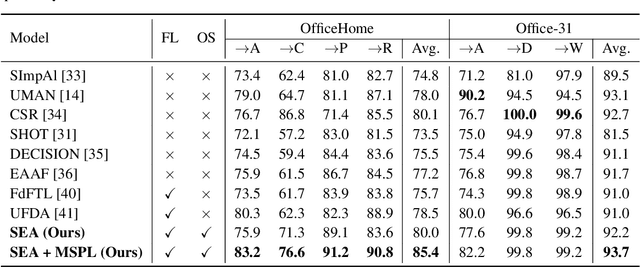

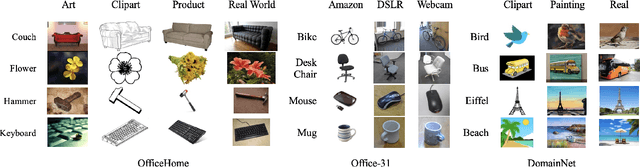

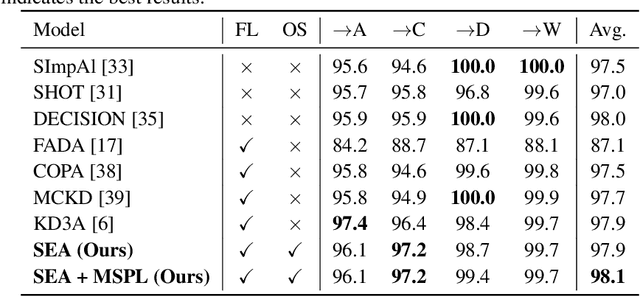

Abstract: Federated Learning (FL) is a promising approach for privacy-preserving collaborative learning. However, it faces significant challenges when dealing with domain shifts, especially when each client has access only to its source data and cannot share it during target domain adaptation. Moreover, FL methods often require high communication overhead due to multiple rounds of model updates between clients and the server. We propose a one-shot Federated Unsupervised Domain Adaptation (FUDA) method to address these limitations. Specifically, we introduce Scaled Entropy Attention (SEA) for model aggregation and Multi-Source Pseudo Labeling (MSPL) for target domain adaptation. SEA uses scaled prediction entropy on the target domain to assign higher attention to reliable models. This improves the global model quality and ensures balanced weighting of contributions. MSPL distills knowledge from multiple source models to generate pseudo labels and manages noisy labels using smoothed soft-label cross-entropy (SSCE). Our approach outperforms state-of-the-art methods across four standard benchmarks while reducing communication and computation costs, making it highly suitable for real-world applications. The implementation code will be made publicly available upon publication.
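The entropy-based weighting idea can be illustrated with a minimal sketch. This is not the authors' SEA formulation, which the abstract does not spell out; it only shows one plausible way to turn per-model prediction entropy on unlabeled target samples into aggregation weights. The function names and the `temperature` scaling knob are hypothetical.

```python
import math

def mean_entropy(prob_rows):
    """Average Shannon entropy (in nats) over a list of probability vectors."""
    total = 0.0
    for probs in prob_rows:
        total += -sum(p * math.log(p) for p in probs if p > 0.0)
    return total / len(prob_rows)

def entropy_attention_weights(per_model_probs, temperature=1.0):
    """Softmax over negative mean entropies: lower entropy gives a larger weight.

    `temperature` is an assumed scaling parameter, not one named in the abstract.
    """
    scores = [-mean_entropy(rows) / temperature for rows in per_model_probs]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]

# Two toy source models scored on the same three unlabeled target samples.
confident_model = [[0.9, 0.1], [0.8, 0.2], [0.95, 0.05]]
uncertain_model = [[0.5, 0.5], [0.6, 0.4], [0.55, 0.45]]
weights = entropy_attention_weights([confident_model, uncertain_model])
```

In this toy setup the more confident model receives the larger weight, and the weights sum to one, so they could be used directly as coefficients in a weighted average of model parameters or predictions.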

EUDA: An Efficient Unsupervised Domain Adaptation via Self-Supervised Vision Transformer

Jul 31, 2024

Abstract: Unsupervised domain adaptation (UDA) aims to mitigate the domain shift issue, where the distribution of training (source) data differs from that of testing (target) data. Many models have been developed to tackle this problem, and recently vision transformers (ViTs) have shown promising results. However, the complexity and large number of trainable parameters of ViTs restrict their deployment in practical applications. This underscores the need for an efficient model that not only reduces trainable parameters but also allows for adjustable complexity based on specific needs while delivering comparable performance. To achieve this, in this paper we introduce an Efficient Unsupervised Domain Adaptation (EUDA) framework. EUDA employs DINOv2, a self-supervised ViT, as a feature extractor followed by a simplified bottleneck of fully connected layers to refine features for enhanced domain adaptation. Additionally, EUDA employs the synergistic domain alignment loss (SDAL), which integrates cross-entropy (CE) and maximum mean discrepancy (MMD) losses, to balance adaptation by minimizing classification errors in the source domain while aligning the source and target domain distributions. The experimental results indicate that EUDA produces results comparable to other state-of-the-art domain adaptation methods with significantly fewer trainable parameters (between 42% and 99.7% fewer). This showcases the ability to train the model in a resource-limited environment. The code of the model is available at: https://github.com/A-Abedi/EUDA.
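As a rough illustration of how a cross-entropy term and an MMD term can be combined, the sketch below uses a biased squared-MMD estimate with a single RBF kernel. The kernel choice, `gamma`, and the `mmd_weight` balancing factor are assumptions made for this example; the abstract does not specify how SDAL weights or computes the two terms.

```python
import math

def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of squared MMD between two sets of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))
    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))

def cross_entropy(prob_rows, labels):
    """Mean negative log-likelihood of the true labels."""
    return -sum(math.log(p[y]) for p, y in zip(prob_rows, labels)) / len(labels)

def combined_loss(source_probs, source_labels, source_feats, target_feats,
                  mmd_weight=1.0):
    """CE on labeled source data plus a weighted MMD alignment term.

    `mmd_weight` is a hypothetical balancing factor.
    """
    ce = cross_entropy(source_probs, source_labels)
    return ce + mmd_weight * mmd_squared(source_feats, target_feats)
```

The MMD term is zero when the two feature sets coincide and grows as they drift apart, so minimizing the combined loss pushes the feature extractor toward representations that classify source samples well while looking similar across domains.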

Towards the in-situ Trunk Identification and Length Measurement of Sea Cucumbers via Bézier Curve Modelling

Jun 20, 2024

Abstract: We introduce a novel vision-based framework for in-situ trunk identification and length measurement of sea cucumbers, which plays a crucial role in the monitoring of marine ranching resources and mechanized harvesting. To model sea cucumber trunk curves with varying degrees of bending, we utilize the parametric Bézier curve due to its computational simplicity, stability, and extensive range of transformation possibilities. Then, we propose an end-to-end unified framework that combines parametric Bézier curve modeling with the widely used You-Only-Look-Once (YOLO) pipeline, abbreviated as TISC-Net, and incorporates effective funnel activation and efficient multi-scale attention modules to enhance curve feature perception and learning. Furthermore, we propose incorporating trunk endpoint loss as an additional constraint to effectively mitigate the impact of endpoint deviations on the overall curve. Finally, by utilizing the depth information of pixels located along the trunk curve captured by a binocular camera, we propose accurately estimating the in-situ length of sea cucumbers through space curve integration. We established two challenging benchmark datasets for curve-based in-situ sea cucumber trunk identification. These datasets consist of over 1,000 real-world marine environment images of sea cucumbers, accompanied by Bézier-format annotations. We conduct evaluation on SC-ISTI, for which our method achieves mAP50 above 0.9 on both object detection and trunk identification tasks. Extensive length measurement experiments demonstrate that the average absolute relative error is around 0.15.
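To make the idea of measuring length along a Bézier curve in 3D concrete, here is a minimal sketch. It assumes a cubic curve with four 3D control points and approximates the arc-length integral by summing short chords; the curve degree, the sampling density, and the function names are assumptions for illustration, not details taken from the paper.

```python
import math

def cubic_bezier(control_points, t):
    """Evaluate a cubic Bézier curve at t in [0, 1]; points are (x, y, z) tuples."""
    u = 1.0 - t
    # Bernstein basis weights for the four control points.
    coeffs = (u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3)
    return tuple(sum(c * p[axis] for c, p in zip(coeffs, control_points))
                 for axis in range(3))

def curve_length(control_points, samples=200):
    """Approximate arc length by summing chord lengths between sampled points."""
    points = [cubic_bezier(control_points, i / samples) for i in range(samples + 1)]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# A bent curve whose z coordinates stand in for per-pixel depth values.
bent_trunk = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (2.0, 2.0, 1.0), (3.0, 0.0, 0.0)]
estimated_length = curve_length(bent_trunk)
```

For collinear, evenly spaced control points the curve is a straight segment and the chord sum matches its length, which gives a simple sanity check before applying the routine to bent curves.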

Methods for Class-Imbalanced Learning with Support Vector Machines: A Review and an Empirical Evaluation

Jun 05, 2024

Abstract: This paper presents a review of methods for class-imbalanced learning with the Support Vector Machine (SVM) and its variants. We first explain the structure of SVM and its variants and discuss their inefficiency in learning with class-imbalanced data sets. We introduce a hierarchical categorization of SVM-based models with respect to class-imbalanced learning. Specifically, we categorize SVM-based models into re-sampling, algorithmic, and fusion methods, and discuss the principles of the representative models in each category. In addition, we conduct a series of empirical evaluations to compare the performances of various representative SVM-based models in each category using benchmark imbalanced data sets, ranging from low to high imbalance ratios. Our findings reveal that while algorithmic methods are less time-consuming owing to no data pre-processing requirements, fusion methods, which combine both re-sampling and algorithmic approaches, generally perform the best, but with a higher computational load. A discussion on research gaps and future research directions is provided.
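The difference between the re-sampling and algorithmic categories can be sketched with two generic techniques: random oversampling of minority classes, and inverse-frequency class weights that a cost-sensitive classifier could consume. These are standard illustrations chosen for this example, not specific models evaluated in the review, and the function names are hypothetical.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Re-sampling: duplicate minority-class samples until all classes match the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [s for s, y in zip(samples, labels) if y == cls]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels

def balanced_class_weights(labels):
    """Algorithmic: per-class misclassification weights, n / (k * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}

X = [[0], [1], [2], [3], [4], [5]]
y = [0, 0, 0, 0, 1, 1]
X_balanced, y_balanced = random_oversample(X, y)
class_weights = balanced_class_weights(y)
```

A fusion approach in the sense of the abstract would apply both ideas together, which is consistent with the reported trade-off: the re-sampling step adds pre-processing cost that purely algorithmic methods avoid.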

You Only Look at Once for Real-time and Generic Multi-Task

Oct 10, 2023

Abstract: High precision, lightweight, and real-time responsiveness are three essential requirements for implementing autonomous driving. In this study, we present an adaptive, real-time, and lightweight multi-task model designed to concurrently address object detection, drivable area segmentation, and lane line segmentation tasks. Specifically, we developed an end-to-end multi-task model with a unified and streamlined segmentation structure. We introduced a learnable parameter that adaptively concatenates features in segmentation necks, using the same loss function for all segmentation tasks. This eliminates the need for customizations and enhances the model's generalization capabilities. We also introduced a segmentation head composed only of a series of convolutional layers, which reduces the inference time. We achieved competitive results on the BDD100k dataset, particularly in visualization outcomes. The performance results show a mAP50 of 81.1% for object detection, a mIoU of 91.0% for drivable area segmentation, and an IoU of 28.8% for lane line segmentation. Additionally, we introduced real-world scenarios to evaluate our model's performance in a real scene, where it significantly outperforms competitors. This demonstrates that our model not only exhibits competitive performance but is also more flexible and faster than existing multi-task models. The source code and pre-trained models are released at https://github.com/JiayuanWang-JW/YOLOv8-multi-task

An Attentive-based Generative Model for Medical Image Synthesis

Jun 02, 2023

Abstract: Magnetic resonance (MR) and computed tomography (CT) imaging are valuable tools for diagnosing diseases and planning treatment. However, limitations such as radiation exposure and cost can restrict access to certain imaging modalities. To address this issue, medical image synthesis can generate one modality from another, but many existing models struggle with high-quality image synthesis when multiple slices are present in the dataset. This study proposes an attention-based dual contrast generative model, called ADC-cycleGAN, which can synthesize medical images from unpaired data with multiple slices. The model integrates a dual contrast loss term with the CycleGAN loss to ensure that the synthesized images are distinguishable from the source domain. Additionally, an attention mechanism is incorporated into the generators to extract informative features from both channel and spatial domains. To improve performance when dealing with multiple slices, the $K$-means algorithm is used to cluster the dataset into $K$ groups, and each group is used to train a separate ADC-cycleGAN. Experimental results demonstrate that the proposed ADC-cycleGAN model produces comparable samples to other state-of-the-art generative models, achieving the highest PSNR and SSIM values of 19.04385 and 0.68551, respectively. We publish the code at https://github.com/JiayuanWang-JW/ADC-cycleGAN.
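For readers unfamiliar with the PSNR figure quoted above, the following sketch shows the conventional definition, 10·log10(MAX² / MSE), on flattened pixel lists. The 8-bit peak value of 255 is an assumption; the abstract does not state the intensity range used in the paper's evaluation.

```python
import math

def psnr(reference, synthesized, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists.

    `max_value` is the assumed peak intensity (255 for 8-bit images).
    """
    if len(reference) != len(synthesized):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, synthesized)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

reference_pixels = [10, 20, 30, 40]
synthesized_pixels = [11, 21, 31, 41]
score = psnr(reference_pixels, synthesized_pixels)
```

Higher values indicate that the synthesized image is closer to the reference in a mean-squared-error sense; SSIM complements this by comparing local structure rather than raw pixel differences.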

An Ensemble Semi-Supervised Adaptive Resonance Theory Model with Explanation Capability for Pattern Classification

May 19, 2023

Abstract: Most semi-supervised learning (SSL) models entail complex structures and iterative training processes as well as face difficulties in interpreting their predictions to users. To address these issues, this paper proposes a new interpretable SSL model using the supervised and unsupervised Adaptive Resonance Theory (ART) family of networks, which is denoted as SSL-ART. Firstly, SSL-ART adopts an unsupervised fuzzy ART network to create a number of prototype nodes using unlabeled samples. Then, it leverages a supervised fuzzy ARTMAP structure to map the established prototype nodes to the target classes using labeled samples. Specifically, a one-to-many (OtM) mapping scheme is devised to associate a prototype node with more than one class label. The main advantages of SSL-ART include the capability of: (i) performing online learning, (ii) reducing the number of redundant prototype nodes through the OtM mapping scheme and minimizing the effects of noisy samples, and (iii) providing an explanation facility for users to interpret the predicted outcomes. In addition, a weighted voting strategy is introduced to form an ensemble SSL-ART model, which is denoted as WESSL-ART. Every ensemble member, i.e., SSL-ART, assigns a different weight to each class based on its performance pertaining to the corresponding class. The aim is to mitigate the effects of training data sequences on all SSL-ART members and improve the overall performance of WESSL-ART. The experimental results on eighteen benchmark data sets, three artificially generated data sets, and a real-world case study indicate the benefits of the proposed SSL-ART and WESSL-ART models for tackling pattern classification problems.
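The per-class weighted voting described above can be sketched as follows. This is a generic illustration, assuming each member's weight for a class is some per-class performance score such as validation accuracy; how WESSL-ART actually derives its weights is not stated in the abstract, and the names here are hypothetical.

```python
def weighted_vote(predictions, class_weights):
    """Combine member predictions using per-member, per-class weights.

    predictions[m] is the class predicted by member m;
    class_weights[m][c] is member m's assumed reliability for class c.
    """
    scores = {}
    for predicted, weights in zip(predictions, class_weights):
        scores[predicted] = scores.get(predicted, 0.0) + weights[predicted]
    return max(scores, key=scores.get)

# Three members: two vote "b", but the member voting "a" is far more reliable on "a".
member_predictions = ["a", "b", "b"]
member_weights = [
    {"a": 0.9, "b": 0.2},
    {"a": 0.5, "b": 0.3},
    {"a": 0.5, "b": 0.4},
]
decision = weighted_vote(member_predictions, member_weights)
```

In this toy case a plain majority vote would pick "b", while the weighted vote picks "a" because the single member predicting "a" carries more weight for that class than the other two combined carry for "b".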

Auto-Focus Contrastive Learning for Image Manipulation Detection

Nov 20, 2022

Abstract: Generally, current image manipulation detection models are simply built on manipulation traces. However, we argue that those models achieve sub-optimal detection performance as they tend to: 1) distinguish the manipulation traces from a lot of noisy information within the entire image, and 2) ignore the trace relations among the pixels of each manipulated region and its surroundings. To overcome these limitations, we propose an Auto-Focus Contrastive Learning (AF-CL) network for image manipulation detection. It contains two main ideas, i.e., multi-scale view generation (MSVG) and trace relation modeling (TRM). Specifically, MSVG aims to generate a pair of views, each of which contains the manipulated region and its surroundings at a different scale, while TRM models the trace relations among the pixels of each manipulated region and its surroundings to learn a discriminative representation. After the AF-CL network is trained by minimizing the distance between the representations of corresponding views, it is able to automatically focus on the manipulated region and its surroundings and sufficiently explore their trace relations for accurate manipulation detection. Extensive experiments demonstrate that, compared to state-of-the-art methods, AF-CL provides significant performance improvements, i.e., up to 2.5%, 7.5%, and 0.8% F1 score, on the CASIA, NIST, and Coverage datasets, respectively.

DC-cycleGAN: Bidirectional CT-to-MR Synthesis from Unpaired Data

Nov 02, 2022

Abstract: Magnetic resonance (MR) and computed tomography (CT) images are two typical types of medical images that provide mutually complementary information for accurate clinical diagnosis and treatment. However, obtaining both images may be limited by considerations such as cost, radiation dose, and missing modalities. Recently, medical image synthesis has attracted growing research interest as a way to cope with this limitation. In this paper, we propose a bidirectional learning model, denoted as dual contrast cycleGAN (DC-cycleGAN), to synthesize medical images from unpaired data. Specifically, a dual contrast loss is introduced into the discriminators to indirectly build constraints between MR and CT images by using samples from the source domain as negative samples, forcing the synthetic images to fall far away from the source domain. In addition, cross entropy and the structural similarity index (SSIM) are integrated into the cycleGAN in order to consider both luminance and structure of samples when synthesizing images. The experimental results indicate that DC-cycleGAN is able to produce promising results as compared with other cycleGAN-based medical image synthesis methods such as cycleGAN, RegGAN, DualGAN and NiceGAN. The code will be available at https://github.com/JiayuanWang-JW/DC-cycleGAN.
