Association of genomic subtypes of lower-grade gliomas with shape features automatically extracted by a deep learning algorithm


Jun 09, 2019

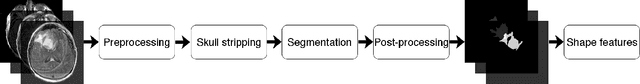

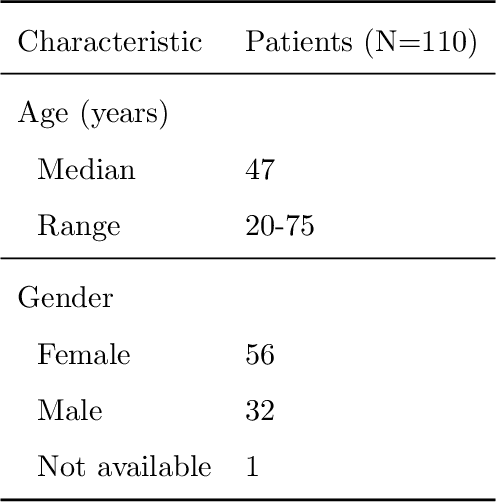

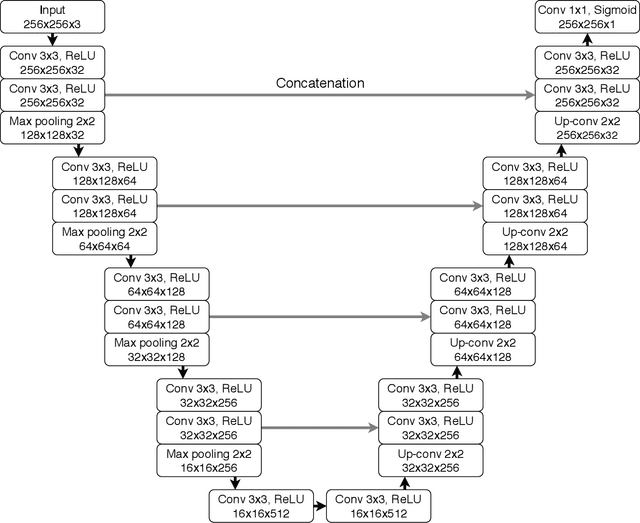

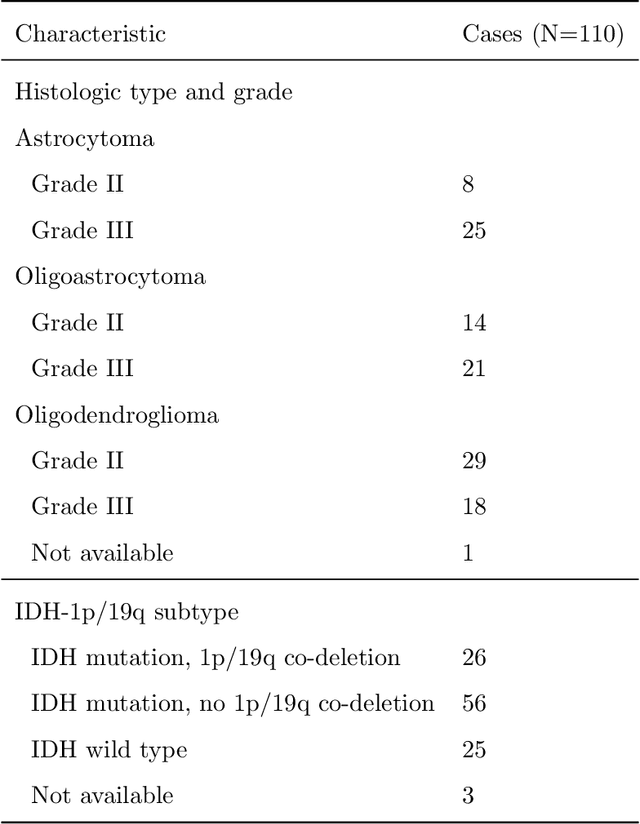

Recent analysis identified distinct genomic subtypes of lower-grade glioma tumors that are associated with shape features. In this study, we propose a fully automatic way to quantify tumor imaging characteristics using deep learning-based segmentation and test whether these characteristics are predictive of tumor genomic subtypes. We used preoperative imaging and genomic data of 110 patients with lower-grade gliomas from 5 institutions, obtained from The Cancer Genome Atlas. Based on automatic deep learning segmentations, we extracted three features that quantify two-dimensional and three-dimensional characteristics of the tumors. Genomic data for the analyzed cohort consisted of previously identified genomic clusters based on IDH mutation and 1p/19q co-deletion, DNA methylation, gene expression, DNA copy number, and microRNA expression. To analyze the relationship between the imaging features and genomic clusters, we conducted the Fisher exact test for 10 hypotheses for each pair of imaging feature and genomic subtype. To account for multiple hypothesis testing, we applied a Bonferroni correction, and p-values lower than 0.005 were considered statistically significant. We found the strongest associations between RNASeq clusters and the bounding ellipsoid volume ratio ($p<0.0002$) and between RNASeq clusters and margin fluctuation ($p<0.005$). In addition, we identified associations between bounding ellipsoid volume ratio and all tested molecular subtypes ($p<0.02$) as well as between angular standard deviation and RNASeq cluster ($p<0.02$). For the automatic tumor segmentation used to generate the quantitative image characteristics, our deep learning algorithm achieved a mean Dice coefficient of 82%, which is comparable to human performance.
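The abstract names three quantities: the Dice coefficient, the Fisher exact test, and the Bonferroni-corrected threshold. The sketch below illustrates each in plain Python; it is not the authors' code. It rests on several assumptions: the function names are hypothetical, the Fisher test is restricted to a 2x2 table for brevity (genomic clusters with more than two groups would need a larger table), and the 0.05 base significance level is inferred from the stated 0.005 threshold over 10 hypotheses rather than given in the text.

```python
from math import comb


def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two flat binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Convention (an assumption here): two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_value = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)  # tolerance for float ties
    )
    return min(1.0, p_value)


def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    return alpha / n_tests


# Assumed base level of 0.05 over 10 hypotheses gives the 0.005 cutoff.
threshold = bonferroni_threshold(0.05, 10)
is_significant = fisher_exact_2x2(3, 1, 1, 3) < threshold
```

With the toy table above, the p-value is far above the corrected threshold, so `is_significant` is `False`; a real analysis would build the table from counts of patients per imaging-feature group and genomic cluster.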
