Armelle Brun

Combining Objective and Subjective Perspectives for Political News Understanding

Aug 20, 2024

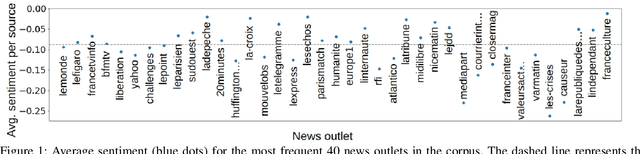

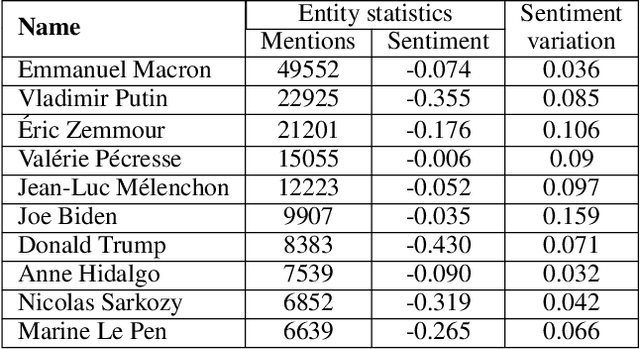

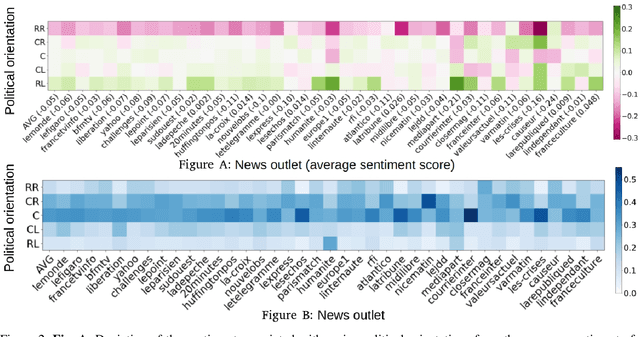

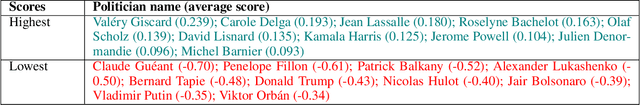

Abstract: Researchers and practitioners interested in computational politics rely on automatic content analysis tools to make sense of the large volume of political texts available on the Web. Such tools should provide objective and subjective aspects at different granularity levels to make the analyses useful in practice. Existing methods produce interesting insights for objective aspects, but they handle subjective aspects poorly, are often restricted to national contexts, and offer limited explainability. We introduce a text analysis framework which integrates both perspectives and provides fine-grained processing of subjective aspects. Information retrieval techniques and knowledge bases complement powerful natural language processing components to allow a flexible aggregation of results at different granularity levels. Importantly, the proposed bottom-up approach facilitates the explainability of the obtained results. We illustrate its functioning with insights on news outlets, political orientations, topics, individual entities, and demographic segments. The approach is instantiated on a large corpus of French news, but is designed to work seamlessly for other languages and countries.

Do Similar Entities have Similar Embeddings?

Dec 16, 2023

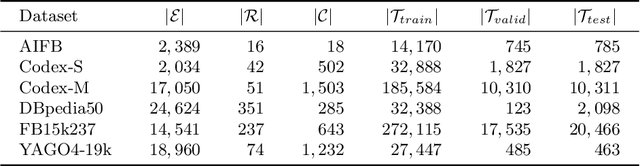

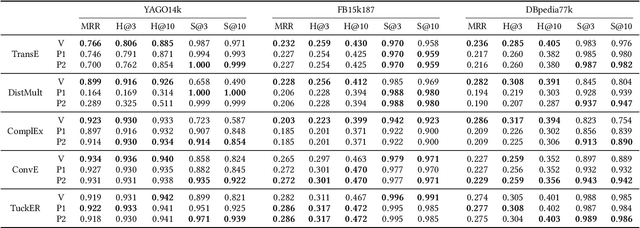

Abstract: Knowledge graph embedding models (KGEMs) developed for link prediction learn vector representations for graph entities, known as embeddings. A common tacit assumption is the KGE entity similarity assumption, which states that these KGEMs retain the graph's structure within their embedding space, i.e., position similar entities close to one another. This desirable property makes KGEMs widely used in downstream tasks such as recommender systems or drug repurposing. Yet, the alignment of graph similarity with embedding space similarity has rarely been formally evaluated. Typically, KGEMs are assessed solely on their link prediction capabilities, using rank-based metrics such as Hits@K or Mean Rank. This paper challenges the prevailing assumption that entity similarity in the graph is inherently mirrored in the embedding space. To this end, we conduct extensive experiments to measure the capability of KGEMs to cluster similar entities together, and investigate the nature of the underlying factors. Moreover, we study whether different KGEMs expose different notions of similarity. Datasets, pre-trained embeddings and code are available at: https://github.com/nicolas-hbt/similar-embeddings.
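
For readers unfamiliar with the rank-based metrics named in this abstract, the following is a minimal sketch of how Hits@K and Mean Rank are commonly computed. It is an illustration only, not code from the paper's repository; the function names and the convention that a higher score means a more plausible candidate are assumptions made here.

```python
from typing import Sequence


def rank_of_truth(scores: Sequence[float], true_index: int) -> int:
    """Return the 1-based rank of the ground-truth candidate.

    Assumption: a higher score means a more plausible candidate.
    The rank is 1 plus the number of candidates scored strictly higher.
    """
    true_score = scores[true_index]
    return 1 + sum(1 for s in scores if s > true_score)


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of test queries whose ground truth is ranked in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


def mean_rank(ranks: Sequence[int]) -> float:
    """Average rank of the ground truth over all test queries (lower is better)."""
    return sum(ranks) / len(ranks)


if __name__ == "__main__":
    # Toy example: three queries with hypothetical ground-truth ranks.
    ranks = [1, 2, 5]
    print(hits_at_k(ranks, k=3))
    print(mean_rank(ranks))
```

Both metrics depend only on where the ground-truth entity lands in the ranking, which is why they say nothing directly about whether similar entities end up close together in the embedding space.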

PyGraft: Configurable Generation of Schemas and Knowledge Graphs at Your Fingertips

Sep 07, 2023

Abstract: Knowledge graphs (KGs) have emerged as a prominent data representation and management paradigm. Being usually underpinned by a schema (e.g., an ontology), KGs capture not only factual information but also contextual knowledge. In some tasks, a few KGs have established themselves as standard benchmarks. However, recent works point out that relying on a limited collection of datasets is not sufficient to assess the generalization capability of an approach. In some data-sensitive fields such as education or medicine, access to public datasets is even more limited. To remedy these issues, we release PyGraft, a Python-based tool that generates highly customized, domain-agnostic schemas and knowledge graphs. The synthesized schemas encompass various RDFS and OWL constructs, while the synthesized KGs emulate the characteristics and scale of real-world KGs. Logical consistency of the generated resources is ultimately ensured by running a description logic (DL) reasoner. By providing a way of generating both a schema and a KG in a single pipeline, PyGraft aims to enable the generation of a more diverse array of KGs for benchmarking novel approaches in areas such as graph-based machine learning (ML), or more generally KG processing. In graph-based ML in particular, this should foster a more holistic evaluation of model performance and generalization capability, thereby going beyond the limited collection of available benchmarks. PyGraft is available at: https://github.com/nicolas-hbt/pygraft.

Schema First! Learn Versatile Knowledge Graph Embeddings by Capturing Semantics with MASCHInE

Jun 06, 2023

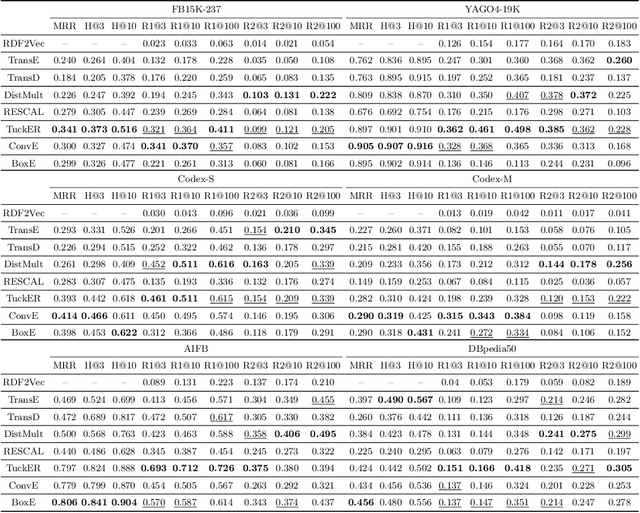

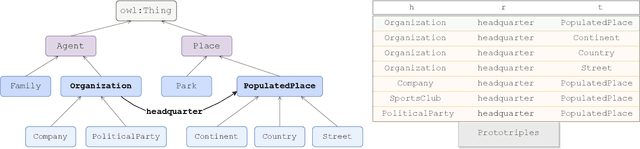

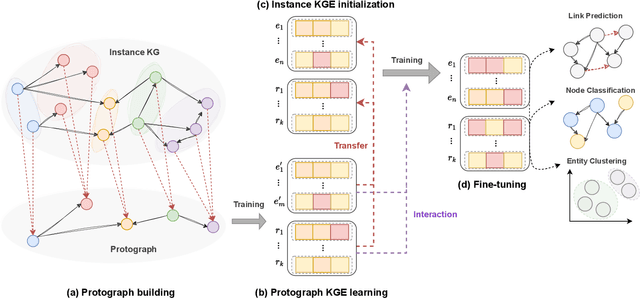

Abstract: Knowledge graph embedding models (KGEMs) have gained considerable traction in recent years. These models learn a vector representation of knowledge graph entities and relations, a.k.a. knowledge graph embeddings (KGEs). Learning versatile KGEs is desirable as it makes them useful for a broad range of tasks. However, KGEMs are usually trained for a specific task, which makes their embeddings task-dependent. In parallel, the widespread assumption that KGEMs actually create a semantic representation of the underlying entities and relations (e.g., project similar entities closer than dissimilar ones) has been challenged. In this work, we design heuristics for generating protographs -- small, modified versions of a KG that leverage schema-based information. The learnt protograph-based embeddings are meant to encapsulate the semantics of a KG, and can be leveraged in learning KGEs that, in turn, also better capture semantics. Extensive experiments on various evaluation benchmarks demonstrate the soundness of this approach, which we call Modular and Agnostic SCHema-based Integration of protograph Embeddings (MASCHInE). In particular, MASCHInE helps produce more versatile KGEs that yield substantially better performance for entity clustering and node classification tasks. For link prediction, using MASCHInE has little impact on rank-based performance but increases the number of semantically valid predictions.

Enhancing Knowledge Graph Embedding Models with Semantic-driven Loss Functions

Mar 09, 2023

Abstract: Knowledge graph embedding models (KGEMs) are used for various tasks related to knowledge graphs (KGs), including link prediction. They are trained with loss functions that are computed considering a batch of scored triples and their corresponding labels. Traditional approaches consider the label of a triple to be either true or false. However, recent works suggest that not all negative triples should be valued equally. In line with this recent assumption, we posit that semantically valid negative triples might be high-quality negative triples. As such, loss functions should treat them differently from semantically invalid negative ones. To this aim, we propose semantic-driven versions of the three main loss functions for link prediction. In particular, we treat the scores of negative triples differently by injecting background knowledge about relation domains and ranges into the loss functions. In an extensive and controlled experimental setting, we show that the proposed loss functions systematically provide satisfying results on three public benchmark KGs underpinned with different schemas, which demonstrates both the generality and superiority of our proposed approach. In fact, the proposed loss functions (1) lead to better MRR and Hits@$10$ values and (2) drive KGEMs towards better semantic awareness. This highlights that semantic information globally improves KGEMs, and thus should be incorporated into loss functions. Since domains and ranges of relations are largely available in schema-defined KGs, our approach is both beneficial and widely usable in practice.
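
To make the idea of treating semantically valid negatives differently more concrete, here is a minimal sketch built on a pairwise hinge loss. It is an assumption-laden illustration, not the paper's formulation: the choice of hinge loss, the `valid_margin_scale` parameter, and all function and variable names are hypothetical and introduced here only for the example.

```python
from typing import Dict, Set, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


def is_semantically_valid(
    triple: Triple,
    entity_types: Dict[str, Set[str]],
    domains: Dict[str, Set[str]],
    ranges: Dict[str, Set[str]],
) -> bool:
    """A triple is treated as semantically valid when the head has a type in
    the relation's domain and the tail has a type in the relation's range."""
    head, relation, tail = triple
    head_ok = bool(entity_types[head] & domains[relation])
    tail_ok = bool(entity_types[tail] & ranges[relation])
    return head_ok and tail_ok


def semantic_hinge_loss(
    pos_score: float,
    neg_score: float,
    neg_is_valid: bool,
    margin: float = 1.0,
    valid_margin_scale: float = 0.5,  # hypothetical knob, not from the paper
) -> float:
    """Pairwise hinge loss with a smaller margin for semantically valid negatives.

    Assumption: higher scores mean more plausible triples. A valid negative is
    pushed less far below the positive than an invalid one.
    """
    effective_margin = margin * valid_margin_scale if neg_is_valid else margin
    return max(0.0, effective_margin + neg_score - pos_score)


if __name__ == "__main__":
    entity_types = {"paris": {"City"}, "france": {"Country"}, "einstein": {"Person"}}
    domains = {"capital_of": {"City"}}
    ranges = {"capital_of": {"Country"}}
    negative = ("paris", "capital_of", "einstein")
    valid = is_semantically_valid(negative, entity_types, domains, ranges)
    print(semantic_hinge_loss(pos_score=2.0, neg_score=1.5, neg_is_valid=valid))
```

The design choice sketched here is that a negative triple respecting the relation's domain and range is penalized less severely than one violating them; other loss functions would need their own adaptation.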

Characterizing Financial Market Coverage using Artificial Intelligence

Feb 07, 2023

Abstract: This paper scrutinizes a database of over 4900 YouTube videos to characterize financial market coverage. Financial market coverage generates a large number of videos. Therefore, watching these videos to derive actionable insights could be challenging and complex. In this paper, we leverage Whisper, a speech-to-text model from OpenAI, to generate a text corpus of market coverage videos from Bloomberg and Yahoo Finance. We employ natural language processing to extract insights regarding language use from the market coverage. Moreover, we examine the prominent presence of trending topics and their evolution over time, and the impacts that some individuals and organizations have on the financial market. Our characterization highlights the dynamics of the financial market coverage and provides valuable insights reflecting broad discussions regarding recent financial events and the world economy.

Sem@$K$: Is my knowledge graph embedding model semantic-aware?

Jan 13, 2023

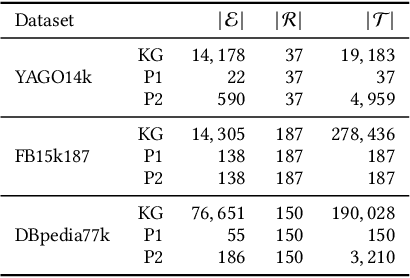

Abstract: Using knowledge graph embedding models (KGEMs) is a popular approach for predicting links in knowledge graphs (KGs). Traditionally, the performance of KGEMs for link prediction is assessed using rank-based metrics, which evaluate their ability to give high scores to ground-truth entities. However, the literature claims that the KGEM evaluation procedure would benefit from adding supplementary dimensions to assess. That is why, in this paper, we extend our previously introduced metric Sem@$K$, which measures the capability of models to predict valid entities w.r.t. domain and range constraints. In particular, we consider a broad range of KGs and take their respective characteristics into account to propose different versions of Sem@$K$. We also perform an extensive study of KGEM semantic awareness. Our experiments show that Sem@$K$ provides a new perspective on KGEM quality. Its joint analysis with rank-based metrics offers different conclusions on the predictive power of models. Regarding Sem@$K$, some KGEMs are inherently better than others, but this semantic superiority is not indicative of their performance w.r.t. rank-based metrics. In this work, we generalize conclusions about the relative performance of KGEMs w.r.t. rank-based and semantic-oriented metrics at the level of families of models. The joint analysis of the aforementioned metrics gives more insight into the peculiarities of each model. This work paves the way for a more comprehensive evaluation of KGEM adequacy for specific downstream tasks.
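
As a rough illustration of what a semantic-oriented metric of this kind measures, the sketch below computes the share of a model's top-K predicted tail entities whose type falls within the relation's range. It is a simplified reading of the abstract, not the paper's definition (which proposes several versions); the function name, the data structures, and the restriction to tail prediction are assumptions made for the example.

```python
from typing import Dict, List, Set


def sem_at_k(
    ranked_candidates: List[str],
    relation: str,
    entity_types: Dict[str, Set[str]],
    ranges: Dict[str, Set[str]],
    k: int,
) -> float:
    """Share of the top-k predicted tails that satisfy the relation's range.

    Assumptions: candidates are sorted from most to least plausible, and an
    entity with no known type counts as invalid.
    """
    top_k = ranked_candidates[:k]
    if not top_k:
        return 0.0
    valid = sum(
        1 for entity in top_k if entity_types.get(entity, set()) & ranges[relation]
    )
    return valid / len(top_k)


if __name__ == "__main__":
    entity_types = {
        "france": {"Country"},
        "germany": {"Country"},
        "einstein": {"Person"},
        "paris": {"City"},
    }
    ranges = {"capital_of": {"Country"}}
    ranking = ["france", "einstein", "germany", "paris"]
    print(sem_at_k(ranking, "capital_of", entity_types, ranges, k=2))
```

Unlike Hits@K, this quantity does not look at where the ground-truth entity is ranked, which is consistent with the abstract's observation that the two kinds of metrics can lead to different conclusions.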

Multi-source Data Mining for e-Learning

Sep 17, 2020

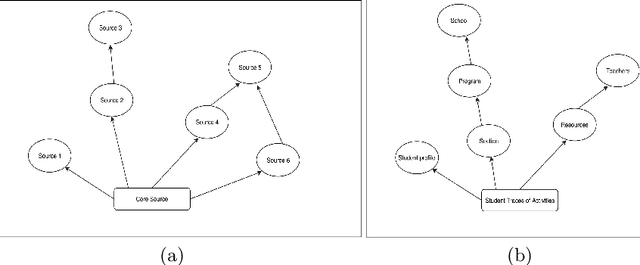

Abstract: Data mining is the task of discovering interesting, unexpected or valuable structures in large datasets and transforming them into an understandable structure for further use. Different approaches in the domain of data mining have been proposed, among which pattern mining is the most important one. Pattern mining involves extracting interesting frequent patterns from data. It has grown to be a topic of high interest and is used for different purposes, for example, recommendations. Some of the most common challenges in this domain include reducing the complexity of the process and avoiding redundancy within the patterns. So far, pattern mining has mainly focused on the mining of a single data source. However, with the increase in the amount of data, in terms of volume, diversity of sources and nature of data, mining multi-source and heterogeneous data has become an emerging challenge in this domain. This challenge is the main focus of our work, in which we propose to mine multi-source data in order to extract interesting frequent patterns.
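
As a point of reference for what extracting frequent patterns means in the simplest, single-source setting, the sketch below counts itemsets that appear in at least `min_support` transactions. It uses brute-force enumeration and is not the multi-source approach proposed in this work; the function name, the `max_size` cap, and the toy e-learning data are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, List, Tuple


def frequent_itemsets(
    transactions: Iterable[List[str]],
    min_support: int,
    max_size: int = 2,
) -> Dict[Tuple[str, ...], int]:
    """Return itemsets (as sorted tuples) occurring in at least min_support
    transactions, considering itemsets of up to max_size items.

    This enumerates every combination, so it only suits small toy datasets.
    """
    counts: Counter = Counter()
    for transaction in transactions:
        items = sorted(set(transaction))  # ignore duplicates within a transaction
        for size in range(1, max_size + 1):
            for itemset in combinations(items, size):
                counts[itemset] += 1
    return {itemset: c for itemset, c in counts.items() if c >= min_support}


if __name__ == "__main__":
    # Hypothetical learner sessions: which resource types were used together.
    sessions = [
        ["video", "quiz"],
        ["video", "forum"],
        ["video", "quiz", "forum"],
        ["quiz"],
    ]
    for itemset, support in sorted(frequent_itemsets(sessions, min_support=2).items()):
        print(itemset, support)
```

Practical pattern miners avoid this exhaustive enumeration, which relates to the complexity and redundancy challenges the abstract mentions.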

Lead2Gold: Towards exploiting the full potential of noisy transcriptions for speech recognition

Oct 16, 2019

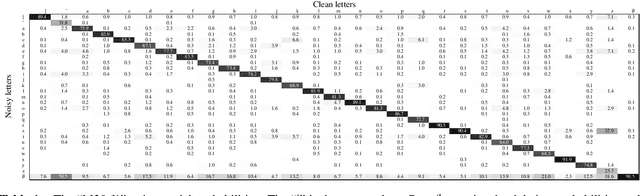

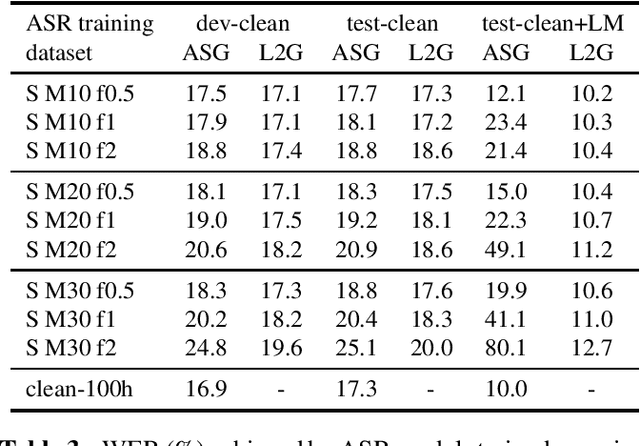

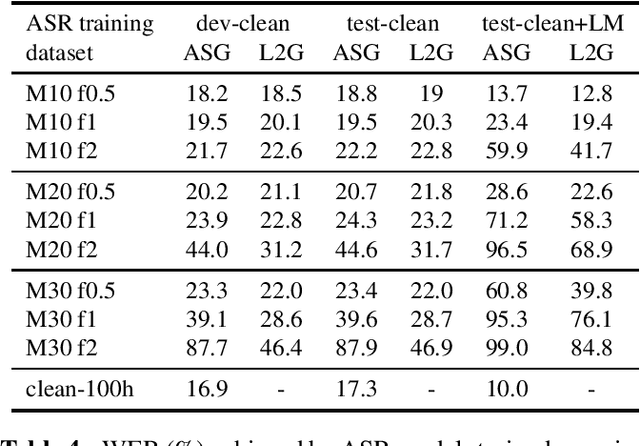

Abstract: The transcriptions used to train an Automatic Speech Recognition (ASR) system may contain errors. Usually, either a quality control stage discards transcriptions with too many errors, or the noisy transcriptions are used as is. We introduce Lead2Gold, a method to train an ASR system that exploits the full potential of noisy transcriptions. Based on a noise model of transcription errors, Lead2Gold searches for better transcriptions of the training data with a beam search that takes this noise model into account. The beam search is differentiable and does not require a forced alignment step, thus the whole system is trained end-to-end. Lead2Gold can be viewed as a new loss function that can be used on top of any sequence-to-sequence deep neural network. We conduct proof-of-concept experiments on noisy transcriptions generated from letter corruptions with different noise levels. We show that Lead2Gold obtains a better ASR accuracy than a competitive baseline which does not account for the (artificially-introduced) transcription noise.
