Antoine Marot

RTE

Machine Learning for Physical Simulation Challenge Results and Retrospective Analysis: Power Grid Use Case

May 02, 2025

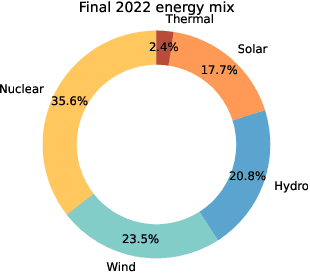

Abstract: This paper addresses the growing computational challenges of power grid simulations, particularly with the increasing integration of renewable energy sources like wind and solar. As grid operators must analyze significantly more scenarios in near real-time to prevent failures and ensure stability, traditional physics-based simulations become computationally impractical. To tackle this, a competition was organized to develop AI-driven methods that accelerate power flow simulations by at least an order of magnitude while maintaining operational reliability. This competition utilized a regional-scale grid model with a 30% renewable energy mix, mirroring the anticipated near-future composition of the French power grid. A key contribution of this work is the use of LIPS (Learning Industrial Physical Systems), a benchmarking framework that evaluates solutions along four critical dimensions: machine learning performance, physical compliance, industrial readiness, and generalization to out-of-distribution scenarios. The paper provides a comprehensive overview of the Machine Learning for Physical Simulation (ML4PhySim) competition, detailing the benchmark suite, analyzing top-performing solutions that outperformed traditional simulation methods, and sharing key organizational insights and best practices for running large-scale AI competitions. Given the promising results achieved, the study aims to inspire further research into more efficient, scalable, and sustainable power network simulation methodologies.

A Conceptual Framework for AI-based Decision Systems in Critical Infrastructures

Apr 21, 2025

Abstract: The interaction between humans and AI in safety-critical systems presents a unique set of challenges that remain only partially addressed by existing frameworks. These challenges stem from the complex interplay of requirements for transparency, trust, and explainability, coupled with the necessity for robust and safe decision-making. A framework that holistically integrates human and AI capabilities while addressing these concerns is needed to bridge the critical gaps in designing, deploying, and maintaining safe and effective systems. This paper proposes a holistic conceptual framework for critical infrastructures by adopting an interdisciplinary approach. It integrates traditionally distinct fields such as mathematics, decision theory, computer science, philosophy, psychology, and cognitive engineering, and draws on specialized engineering domains, particularly energy, mobility, and aeronautics. The flexibility of its adoption is also demonstrated through its instantiation on an existing framework.

RL2Grid: Benchmarking Reinforcement Learning in Power Grid Operations

Mar 29, 2025

Abstract: Reinforcement learning (RL) can transform power grid operations by providing adaptive and scalable controllers essential for grid decarbonization. However, existing methods struggle with the complex dynamics, aleatoric uncertainty, long-horizon goals, and hard physical constraints that occur in real-world systems. This paper presents RL2Grid, a benchmark designed in collaboration with power system operators to accelerate progress in grid control and foster RL maturity. Built on a power simulation framework developed by RTE France, RL2Grid standardizes tasks, state and action spaces, and reward structures within a unified interface for a systematic evaluation and comparison of RL approaches. Moreover, we integrate real control heuristics and safety constraints informed by the operators' expertise to ensure RL2Grid aligns with grid operation requirements. We benchmark popular RL baselines on the grid control tasks represented within RL2Grid, establishing reference performance metrics. Our results and discussion highlight the challenges that power grids pose for RL methods, emphasizing the need for novel algorithms capable of handling real-world physical systems.

A Pioneering Roadmap for ML-Driven Algorithmic Advancements in Electrical Networks

May 28, 2024

Abstract: Advancing the control, operation, and planning tools of electrical networks with ML is not straightforward. 110 experts were surveyed, showing where and how ML algorithms could advance. This paper assesses this survey and research environment. It then develops an innovation roadmap that helps align our research community towards a goal-oriented realisation of the opportunities that AI holds. This paper finds that the R&D environment of system operators (and the surrounding research ecosystem) needs adaptation to enable faster developments with AI while maintaining high testing quality and safety. This roadmap may interest research centre managers in system operators, academics, and labs dedicated to advancing the next generation of tooling for electrical networks.

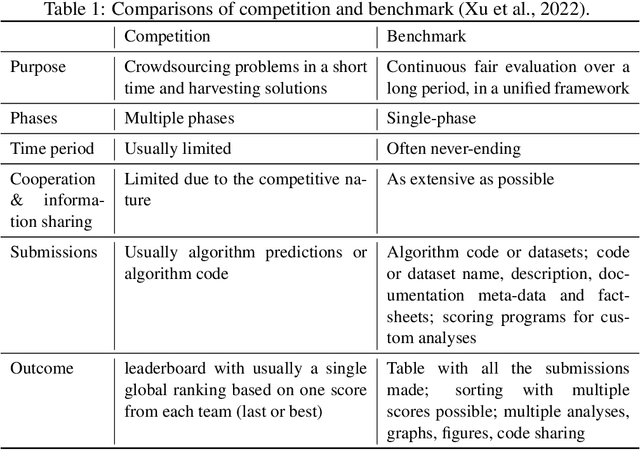

AI Competitions and Benchmarks: towards impactful challenges with post-challenge papers, benchmarks and other dissemination actions

Dec 14, 2023

Abstract: Organising an AI challenge does not end with the final event. The long-lasting impact also needs to be organised. This chapter covers the various activities after the challenge is formally finished. The target audience of different post-challenge activities is identified. The various outputs of the challenge are listed with the means to collect them. The main part of the chapter is a template for a typical post-challenge paper, including possible graphs as well as advice on how to turn the challenge into a long-lasting benchmark.

Managing power grids through topology actions: A comparative study between advanced rule-based and reinforcement learning agents

Apr 17, 2023

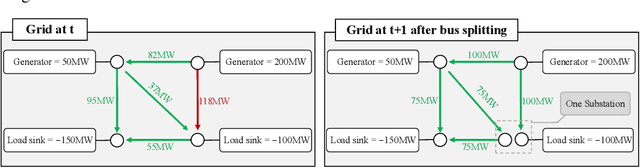

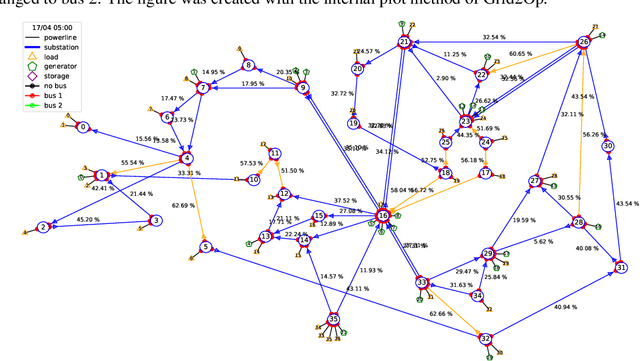

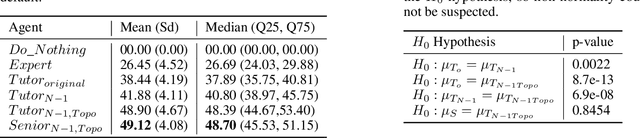

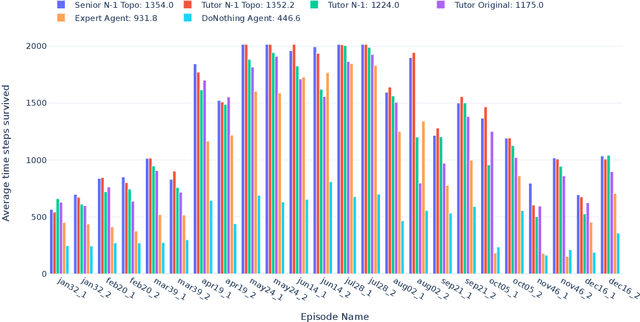

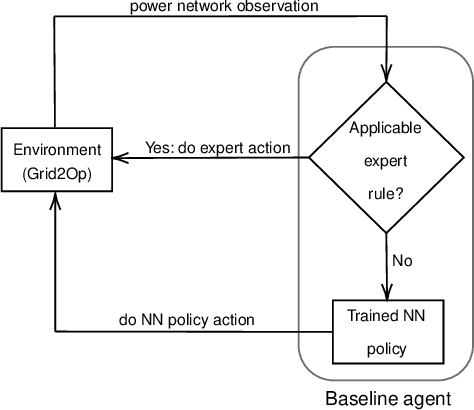

Abstract: The operation of electricity grids has become increasingly complex due to the current upheaval and the increase in renewable energy production. As a consequence, active grid management is reaching its limits with conventional approaches. In the context of the Learning to Run a Power Network challenge, it has been shown that Reinforcement Learning (RL) is an efficient and reliable approach with considerable potential for automatic grid operation. In this article, we analyse the submitted agent from Binbinchen and provide novel strategies to improve the agent, both for the RL and the rule-based approach. The main improvement is an N-1 strategy, where we consider topology actions that keep the grid stable even if one line is disconnected. Moreover, we propose a topology reversion to the original grid, which proved to be beneficial. The improvements are tested against reference approaches on the challenge test sets and are able to increase the performance of the rule-based agent by 27%. In a direct comparison between the rule-based and RL agents, we find similar performance. However, the RL agent has a clear computational advantage. We also analyse the behaviour in an exemplary case in more detail to provide additional insights. Here, we observe that through the N-1 strategy, the actions of the agents become more diversified.

Reinforcement learning for Energies of the future and carbon neutrality: a Challenge Design

Jul 21, 2022

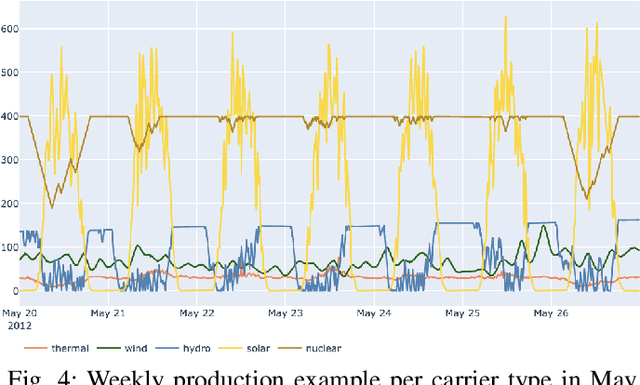

Abstract: Current rapid changes in climate increase the urgency to change energy production and consumption management, to reduce carbon and other greenhouse gas production. In this context, the French electricity network management company RTE (Réseau de Transport d'Électricité) has recently published the results of an extensive study outlining various scenarios for tomorrow's French power management. We propose a challenge that will test the viability of such a scenario. The goal is to control electricity transportation in power networks while pursuing multiple objectives: balancing production and consumption, minimizing energy losses, keeping people and equipment safe, and, in particular, avoiding catastrophic failures. While the importance of the application provides a goal in itself, this challenge also aims to push the state of the art in a branch of Artificial Intelligence (AI) called Reinforcement Learning (RL), which offers new possibilities to tackle control problems. In particular, various aspects of the combination of Deep Learning and RL called Deep Reinforcement Learning remain to be harnessed in this application domain. This challenge belongs to a series started in 2019 under the name "Learning to run a power network" (L2RPN). In this new edition, we introduce new, more realistic scenarios proposed by RTE to reach carbon neutrality by 2050, retiring fossil fuel electricity production, increasing proportions of renewable and nuclear energy, and introducing batteries. Furthermore, we provide a baseline using a state-of-the-art reinforcement learning algorithm to stimulate future participants.

Learning to run a power network with trust

Oct 21, 2021

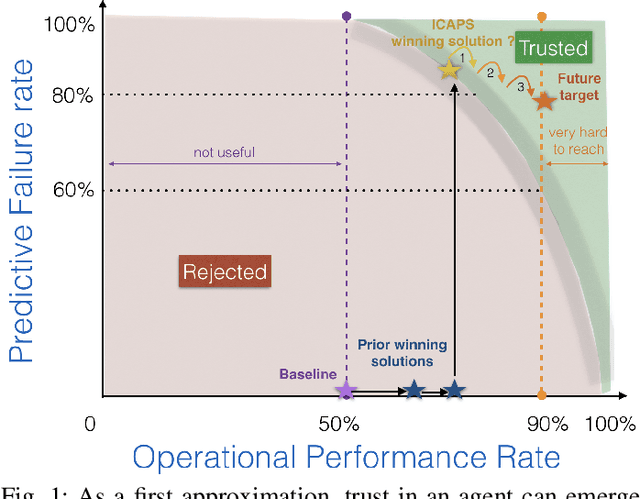

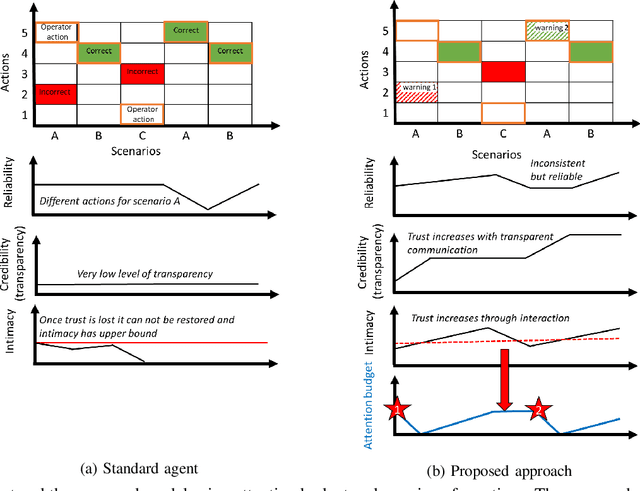

Abstract: Artificial agents are promising for real-time power system operations, particularly for computing remedial actions for congestion management. Currently, these agents are limited to running autonomously. However, autonomous agents will not be deployed any time soon. Operators will still be in charge of taking action in the future. Aiming at designing an assistant for operators, we here consider humans in the loop and propose an original formulation for this problem. We first advance an agent with the ability to send alarms to the operator ahead of time when the proposed actions are of low confidence. We further model the operator's available attention as a budget that decreases when alarms are sent. We present the design and results of our competition "Learning to run a power network with trust", in which we benchmark the ability of submitted agents to send relevant alarms while operating the network to their best.
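
The attention-budget idea in this abstract can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the class name `AttentionBudget`, the helper `maybe_alarm`, the confidence threshold, and all numeric values are hypothetical and are not taken from the paper or the competition rules.

```python
from dataclasses import dataclass


@dataclass
class AttentionBudget:
    """Toy model of an operator's attention as a depletable budget.

    All values are illustrative assumptions, not competition settings.
    """
    level: float = 3.0       # attention currently available (hypothetical)
    alarm_cost: float = 1.0  # attention consumed by one alarm (hypothetical)

    def can_alarm(self) -> bool:
        """Return True if enough budget remains to send one alarm."""
        return self.level >= self.alarm_cost

    def send_alarm(self) -> bool:
        """Spend budget for one alarm; return False if the budget is too low."""
        if not self.can_alarm():
            return False
        self.level -= self.alarm_cost
        return True


def maybe_alarm(budget: AttentionBudget, confidence: float,
                threshold: float = 0.5) -> bool:
    """Raise an alarm only when confidence is low and budget remains."""
    if confidence < threshold:
        return budget.send_alarm()
    return False
```

In this sketch, an agent that alarms too often exhausts the budget and can no longer warn the operator, which is one way to read the trade-off the abstract describes.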

Learning to run a Power Network Challenge: a Retrospective Analysis

Mar 02, 2021

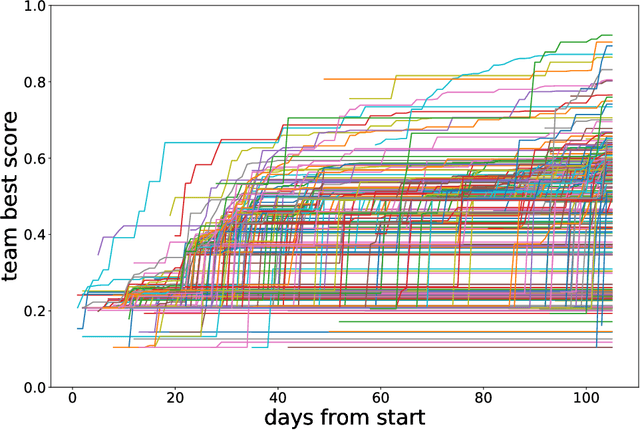

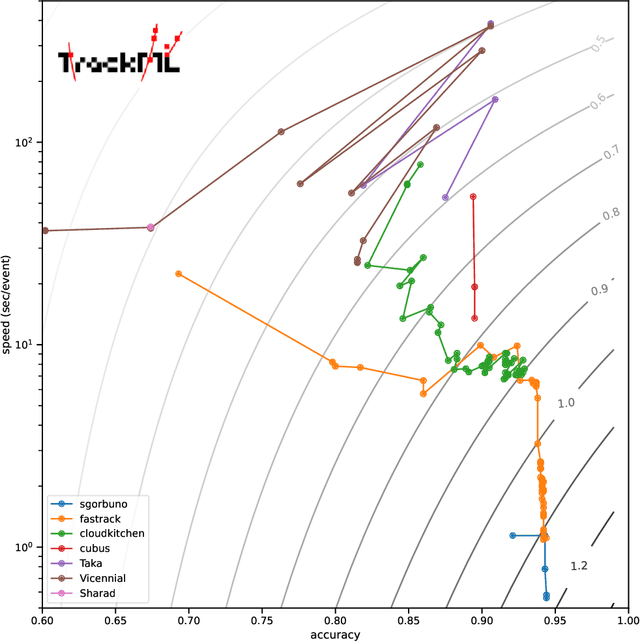

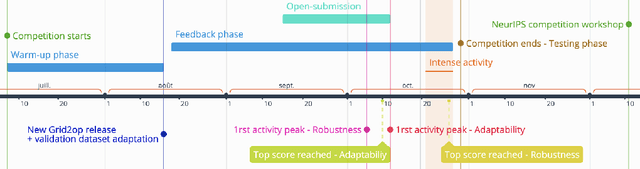

Abstract: Power networks, responsible for transporting electricity across large geographical regions, are complex infrastructures on which modern life critically depends. Variations in demand and production profiles, with increasing renewable energy integration, as well as the high voltage network technology, constitute a real challenge for human operators when optimizing electricity transportation while avoiding blackouts. Motivated to investigate the potential of Artificial Intelligence methods in enabling adaptability in power network operation, we have designed an L2RPN challenge to encourage the development of reinforcement learning solutions to key problems present in the next-generation power networks. The NeurIPS 2020 competition was well received by the international community, attracting over 300 participants worldwide. The main contribution of this challenge is our proposed comprehensive Grid2Op framework, and associated benchmark, which plays realistic sequential network operation scenarios. The framework is open-sourced and easily re-usable to define new environments with its companion GridAlive ecosystem. It relies on existing non-linear physical simulators and lets us create a series of perturbations and challenges that are representative of two important problems: a) the uncertainty resulting from the increased use of unpredictable renewable energy sources, and b) the robustness required with contingent line disconnections. In this paper, we provide details about the competition highlights. We present the benchmark suite and analyse the winning solutions of the challenge, observing one super-human performance demonstration by the best agent. We propose our organizational insights for a successful competition and conclude on open research avenues. We expect our work will foster research to create more sustainable solutions for power network operations.

Adversarial Training for a Continuous Robustness Control Problem in Power Systems

Jan 14, 2021

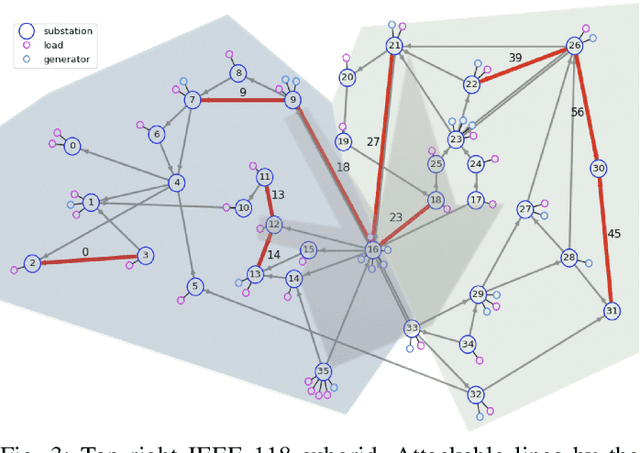

Abstract: We propose a new adversarial training approach for injecting robustness when designing controllers for upcoming cyber-physical power systems. Previous approaches relying heavily on simulations are not able to cope with the rising complexity and are too costly in terms of computation budget when used online. In comparison, our method proves to be computationally efficient online while displaying useful robustness properties. To do so, we model an adversarial framework, propose the implementation of a fixed opponent policy, and test it on an L2RPN (Learning to Run a Power Network) environment. That environment is a synthetic but realistic model of a cyber-physical system accounting for one third of the IEEE 118 grid. Using adversarial testing, we analyze the results of submitted trained agents from the robustness track of the L2RPN competition. We then further assess the performance of those agents with regard to the continuous N-1 problem through tailored evaluation metrics. We discover that some agents trained in an adversarial way demonstrate interesting preventive behaviors in that regard, which we discuss.
