Patrick Panciatici

TAU

Decision-making Oriented Clustering: Application to Pricing and Power Consumption Scheduling

Jun 02, 2021

Abstract: Data clustering is an instrumental tool in the area of energy resource management. One problem with conventional clustering is that it does not take the final use of the clustered data into account, which may lead to a very suboptimal use of energy or computational resources. When clustered data are used by a decision-making entity, it turns out that significant gains can be obtained by tailoring the clustering scheme to the final task performed by the decision-making entity. The key to good final performance is to automatically extract the attributes of the data space that are inherently relevant to the subsequent decision-making entity, and to partition the data space based on these attributes instead of on predefined conventional metrics. For this purpose, we formulate the framework of decision-making oriented clustering and propose an algorithm that provides a decision-based partition of the data space together with good representative decisions. By applying this novel framework and algorithm to a typical problem of real-time pricing and to one of power consumption scheduling, we obtain several insightful analytical results, such as the expression of the best representative price profiles for real-time pricing, and our simulations show a very significant reduction in the number of clusters required to perform power consumption scheduling.

* Published in Applied Energy
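The idea of a decision-based partition can be illustrated with a small sketch. It assumes a Lloyd-style alternation: each data point is assigned to the representative decision that serves it best, then each representative decision is re-optimized over its cluster. This alternation is an illustrative assumption, not necessarily the algorithm of the paper, and the utility `utility(x, g)`, the candidate decision grid and the synthetic states are all hypothetical.

```python
import math
import random


def utility(x, g):
    # Hypothetical task utility u(x; g): a rate-like gain minus a linear cost of the decision x.
    return math.log2(1.0 + g * x) - 0.5 * x


def assign(states, reps):
    # Assignment step: each state joins the representative decision that yields it the highest utility.
    clusters = [[] for _ in reps]
    for g in states:
        best = max(range(len(reps)), key=lambda j: utility(reps[j], g))
        clusters[best].append(g)
    return clusters


def decision_oriented_clustering(states, candidates, k, iters=50, seed=0):
    rng = random.Random(seed)
    reps = rng.sample(candidates, k)  # initial representative decisions
    for _ in range(iters):
        clusters = assign(states, reps)
        # Update step: each representative becomes the candidate decision that maximizes
        # the total utility of its cluster (an empty cluster keeps its current decision).
        new_reps = [
            max(candidates, key=lambda x: sum(utility(x, g) for g in members)) if members else rep
            for rep, members in zip(reps, clusters)
        ]
        if new_reps == reps:
            break
        reps = new_reps
    return reps, assign(states, reps)


# Usage: 200 hypothetical system states and a grid of 50 admissible decisions.
rng = random.Random(1)
states = [rng.uniform(0.5, 10.0) for _ in range(200)]
candidates = [round(0.1 * i, 1) for i in range(1, 51)]
reps, clusters = decision_oriented_clustering(states, candidates, k=3)
```

Because representatives are always drawn from the candidate set, neither step can decrease the total utility. Unlike k-means, points are compared through the utility of a decision rather than through a predefined distance.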

Learning to run a Power Network Challenge: a Retrospective Analysis

Mar 02, 2021

Abstract: Power networks, responsible for transporting electricity across large geographical regions, are complex infrastructures on which modern life critically depends. Variations in demand and production profiles, with increasing renewable energy integration, as well as the high voltage network technology, constitute a real challenge for human operators when optimizing electricity transportation while avoiding blackouts. Motivated to investigate the potential of Artificial Intelligence methods in enabling adaptability in power network operation, we have designed the L2RPN (Learning to Run a Power Network) challenge to encourage the development of reinforcement learning solutions to key problems present in next-generation power networks. The NeurIPS 2020 competition was well received by the international community, attracting over 300 participants worldwide. The main contribution of this challenge is our proposed comprehensive Grid2Op framework and its associated benchmark, which runs realistic scenarios of sequential network operations. The framework is open-source and easily reusable to define new environments with its companion GridAlive ecosystem. It relies on existing non-linear physical simulators and lets us create a series of perturbations and challenges that are representative of two important problems: a) the uncertainty resulting from the increased use of unpredictable renewable energy sources, and b) the robustness required to cope with contingent line disconnections. In this paper, we provide details about the competition highlights. We present the benchmark suite and analyse the winning solutions of the challenge, observing one demonstration of super-human performance by the best agent. We share our organizational insights for a successful competition and conclude with open research avenues. We expect our work to foster research that creates more sustainable solutions for power network operations.

Decision Set Optimization and Energy-Efficient MIMO Communications

Sep 16, 2019

Abstract: Assuming that the number of possible decisions for a transmitter (e.g., the number of possible beamforming vectors) has to be finite and is given, this paper investigates for the first time the problem of determining the best decision set when energy-efficiency maximization is pursued. We propose a framework to find a good (finite) decision set which induces a minimal performance loss w.r.t. the continuous case. We exploit this framework for a scenario of energy-efficient MIMO communications in which transmit power and beamforming vectors have to be adapted jointly to the channel under finite-rate feedback. To determine a good decision set, we propose an algorithm which combines the approach of Invasive Weed Optimization (IWO) and an Evolutionary Algorithm (EA). We provide a numerical analysis which illustrates the benefits of our approach. In particular, for a given performance loss level, the feedback rate can be reduced by 2 when the transmit decision set has been designed properly using our algorithm. The impact on energy efficiency is also seen to be significant.

* 7 pages, 5 figures
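To make the notion of performance loss w.r.t. the continuous case concrete, here is a minimal sketch for a single-antenna link in which the decision is the transmit power only (beamforming is ignored). It assumes energy efficiency is the rate divided by total consumed power, approximates the continuous optimum with a very fine power grid, and improves the finite decision set with a plain (1+1) evolutionary search. That search is a simple stand-in, not the IWO/EA combination proposed in the paper, and the constants `P_MAX` and `P_C` as well as the channel-gain distribution are hypothetical.

```python
import math
import random

P_MAX = 10.0  # hypothetical maximum transmit power
P_C = 1.0     # hypothetical static circuit power


def energy_efficiency(p, g):
    # Assumed definition: achievable rate divided by total consumed power.
    return math.log2(1.0 + g * p) / (p + P_C)


# A very fine power grid stands in for the continuous decision space.
FINE_GRID = [P_MAX * i / 1000 for i in range(1, 1001)]


def relative_loss(decision_set, gains, optimum):
    # Average relative loss of the best decision in the finite set w.r.t. the (near-)continuous optimum.
    total = 0.0
    for g, best in zip(gains, optimum):
        total += 1.0 - max(energy_efficiency(p, g) for p in decision_set) / best
    return total / len(gains)


def evolve_decision_set(gains, size, generations=300, sigma=0.5, seed=0):
    # (1+1) evolutionary search: mutate one decision, keep the mutant if it is no worse.
    rng = random.Random(seed)
    optimum = [max(energy_efficiency(p, g) for p in FINE_GRID) for g in gains]
    current = sorted(rng.uniform(0.01, P_MAX) for _ in range(size))
    current_loss = relative_loss(current, gains, optimum)
    for _ in range(generations):
        child = list(current)
        i = rng.randrange(size)
        child[i] = min(P_MAX, max(0.01, child[i] + rng.gauss(0.0, sigma)))  # stay feasible
        child_loss = relative_loss(child, gains, optimum)
        if child_loss <= current_loss:
            current, current_loss = sorted(child), child_loss
    return current, current_loss


# Usage: hypothetical fading gains and a decision set of four power levels.
rng = random.Random(2)
gains = [rng.expovariate(1.0) + 0.05 for _ in range(100)]
decisions, loss = evolve_decision_set(gains, size=4)
```

Since a mutant is only accepted when it does not increase the loss, the returned set is never worse than the random initial set drawn with the same seed.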

LEAP nets for power grid perturbations

Aug 22, 2019

Abstract: We propose a novel neural network embedding approach to model power transmission grids, in which high voltage lines are disconnected and reconnected with one another from time to time, either accidentally or willfully. We call our architecture LEAP net, for Latent Encoding of Atypical Perturbation. Our method implements a form of transfer learning, making it possible to train on a few source domains and then generalize to new target domains without learning on any example of those domains. We evaluate the viability of this technique for rapidly assessing curative actions that human operators take in emergency situations, using real historical data from the French high voltage power grid.
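A minimal sketch of the kind of structure involved, assuming that a perturbation vector $\tau$ (one entry per line, set to 1 when that line is disconnected) modulates the latent representation $h$ multiplicatively as $h + e(\tau \odot d(h))$, with linear maps $d$ and $e$. This form is an assumption made for illustration; the weights are random and untrained, the sizes are arbitrary, and the class and function names are hypothetical.

```python
import random


def matvec(m, v):
    # Plain matrix-vector product on lists of lists.
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class LeapSketch:
    """Untrained encoder / latent-modulation / decoder with random weights."""

    def __init__(self, n_in, n_latent, n_tau, n_out, seed=0):
        rng = random.Random(seed)
        self.enc = random_matrix(n_latent, n_in, rng)
        self.d = random_matrix(n_tau, n_latent, rng)  # latent -> one coordinate per perturbation
        self.e = random_matrix(n_latent, n_tau, rng)  # back to the latent space
        self.dec = random_matrix(n_out, n_latent, rng)

    def latent(self, x, tau):
        h = [max(0.0, z) for z in matvec(self.enc, x)]  # ReLU encoder of the injections x
        gated = [t * c for t, c in zip(tau, matvec(self.d, h))]  # tau acts multiplicatively
        return [a + b for a, b in zip(h, matvec(self.e, gated))]  # h + e(tau * d(h))

    def forward(self, x, tau):
        return matvec(self.dec, self.latent(x, tau))


net = LeapSketch(n_in=4, n_latent=6, n_tau=3, n_out=2)
x = [1.0, 0.5, -0.2, 0.3]  # hypothetical productions/consumptions
y = net.forward(x, tau=[1, 0, 0])  # tau[k] = 1 when hypothetical line k is disconnected
```

In this sketch, because $d$ and $e$ are linear, the latent effect of two simultaneous disconnections is the sum of their individual effects, which is one structural reason such a network may extrapolate to combinations of perturbations it was not trained on.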

Decision-Oriented Communications: Application to Energy-Efficient Resource Allocation

May 17, 2019

Abstract: In this paper, we introduce the problem of decision-oriented communications, in which the goal of the source is to send the right amount of information for the intended destination to execute a task. More specifically, we restrict our attention to how the source should quantize information so that the destination, knowing only the quantized information, can maximize a utility function representing the task to be executed. For example, for utility functions of the form $u\left(\boldsymbol{x};\ \boldsymbol{g}\right)$, $\boldsymbol{x}$ might represent a decision in terms of using some radio resources and $\boldsymbol{g}$ the system state, which is only observed through its quantized version $Q(\boldsymbol{g})$. Both in the case where the utility function is known and in the case where it is only observed through its realizations, we provide solutions to determine such a quantizer. We show how this approach applies to energy-efficient power allocation. In particular, it is seen that quantizing the state very roughly is perfectly suited to maximizing sum-rate-type functions, whereas energy-efficiency metrics are more sensitive to quantization imperfections.
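The contrast between sum-rate-type and energy-efficiency utilities can be reproduced on a toy single-link example. The sketch assumes a scalar state $g$ (channel gain), a decision $x$ equal to the transmit power chosen from a finite grid, a single rate function standing in for a sum-rate-type utility, and the hypothetical constants `P_MAX` and `P_C`. For each quantization cell, the destination applies the one decision that minimizes the cell's total relative utility loss; this design rule is an assumption, not the paper's quantizer construction.

```python
import math
import random

P_MAX = 10.0
P_C = 1.0  # hypothetical circuit power
POWERS = [P_MAX * i / 200 for i in range(1, 201)]  # finite set of decisions x


def rate(p, g):
    return math.log2(1.0 + g * p)


def energy_efficiency(p, g):
    return rate(p, g) / (p + P_C)


def quantize(g, edges):
    # Q(g): index of the quantization cell containing g, for sorted thresholds `edges`.
    return sum(g >= e for e in edges)


def average_loss(utility, gains, edges):
    best = {g: max(utility(p, g) for p in POWERS) for g in gains}  # perfect knowledge of g
    cells = {}
    for g in gains:
        cells.setdefault(quantize(g, edges), []).append(g)
    loss = 0.0
    for members in cells.values():
        # The destination only sees the cell index, so it applies one decision per cell:
        # the one minimizing the cell's total relative utility loss.
        x = max(POWERS, key=lambda p: sum(utility(p, g) / best[g] for g in members))
        loss += sum(1.0 - utility(x, g) / best[g] for g in members)
    return loss / len(gains)


rng = random.Random(0)
gains = [rng.uniform(0.1, 5.0) for _ in range(200)]
rate_loss = average_loss(rate, gains, edges=[])  # single cell, i.e. zero bits sent
ee_loss_coarse = average_loss(energy_efficiency, gains, edges=[])
ee_loss_fine = average_loss(energy_efficiency, gains, edges=[0.5, 1.0, 2.0, 3.5])
```

Since the rate increases with power whatever $g$ is, full power is optimal in every cell, so even a single-cell quantizer loses nothing. The energy-efficient power depends on $g$, so a single cell incurs a loss, and splitting cells can only reduce it.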

Optimization of computational budget for power system risk assessment

May 03, 2018

Abstract: We address the problem of keeping high voltage power transmission networks secure at all times, namely anticipating thermal limit violations for any single line disconnection (whatever its cause may be) by running slow, but accurate, physical grid simulators. New conceptual frameworks are calling for a probabilistic, risk-based security criterion. However, these approaches are difficult to make computationally tractable. Here, we propose a new method to assess the risk. This method combines machine learning techniques (artificial neural networks) with more standard simulators based on physical laws. More specifically, we train neural networks to estimate the overall dangerousness of a grid state. A classical benchmark problem (the MATPOWER 118-bus test case) is used to show the strengths of the proposed method.
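One plausible way to combine a learned estimator with a physical simulator under a fixed computational budget is sketched below. It assumes the risk is the probability-weighted sum of contingency severities, $R = \sum_c p_c\, s_c$, spends the simulation budget on the contingencies with the largest predicted contribution, and uses the surrogate estimate for the rest. The functions `surrogate` and `simulate` are hypothetical placeholders for the trained neural network and the grid simulator, and the numbers are invented.

```python
def estimated_risk(contingencies, prob, surrogate, simulate, budget):
    # Assumed risk definition: sum over contingencies of probability x severity.
    # Spend the simulation budget where the surrogate predicts the largest risk
    # contribution; elsewhere, trust the (hypothetical) neural-network estimate.
    ranked = sorted(contingencies, key=lambda c: prob[c] * surrogate(c), reverse=True)
    simulated = set(ranked[:budget])
    return sum(prob[c] * (simulate(c) if c in simulated else surrogate(c)) for c in contingencies)


# Usage with three hypothetical line outages.
prob = {"line_1": 0.1, "line_2": 0.2, "line_3": 0.05}
surrogate_severity = {"line_1": 2.0, "line_2": 0.5, "line_3": 1.0}.get  # stand-in for the network
simulated_severity = {"line_1": 3.0, "line_2": 0.4, "line_3": 1.2}.get  # stand-in for the simulator
risk = estimated_risk(list(prob), prob, surrogate_severity, simulated_severity, budget=1)
```

With `budget=0` the estimate is purely learned; with a budget covering every contingency it is purely simulated. Intermediate budgets trade accuracy for computation.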

Anticipating contingencies in power grids using fast neural net screening

May 03, 2018

Abstract: We address the problem of keeping high voltage power transmission networks secure at all times. This requires that the power flowing through every line remain below a certain nominal thermal limit, above which lines might melt, break or cause other damage. Current practices include enforcing the deterministic "N-1" reliability criterion, namely anticipating thermal limit violations for any single line disconnection (whatever its cause may be) by running a slow, but accurate, physical grid simulator. New conceptual frameworks are calling for a probabilistic, risk-based security criterion and need new methods to assess the risk. To tackle this difficult assessment, we address in this paper the problem of rapidly ranking higher-order contingencies, including all pairs of line disconnections, in order to better prioritize simulations. We present a novel method based on neural networks, which ranks "N-1" and "N-2" contingencies in decreasing order of presumed severity. We demonstrate on a classical benchmark problem that the residual risk of contingencies decreases dramatically compared to considering solely all "N-1" cases, at no additional computational cost. We estimate that our method scales up to power grids the size of the French high voltage power grid (over 1000 power lines).
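The screening step can be sketched as follows: enumerate all "N-1" and "N-2" contingencies, sort them by a fast severity estimate, and run the slow simulator only on the top of the list. Here `severity` is a hypothetical stand-in for the trained neural network, `simulate` a placeholder for the physical simulator, and the line loadings are invented for illustration.

```python
from itertools import combinations


def enumerate_contingencies(n_lines):
    # All "N-1" (single line) and "N-2" (pair of lines) disconnections.
    return [(i,) for i in range(n_lines)] + list(combinations(range(n_lines), 2))


def rank_contingencies(n_lines, severity):
    # Decreasing order of presumed severity, as predicted by a fast surrogate.
    return sorted(enumerate_contingencies(n_lines), key=severity, reverse=True)


def screen(n_lines, severity, simulate, budget):
    # Run the slow, accurate simulator only on the top-ranked contingencies.
    return {c: simulate(c) for c in rank_contingencies(n_lines, severity)[:budget]}


# Usage: a hypothetical severity surrogate based on pre-contingency line loadings.
loading = [0.9, 0.4, 0.7, 0.2, 0.95]


def severity(contingency):
    return sum(loading[i] for i in contingency)


ranked = rank_contingencies(len(loading), severity)
```

With $n$ lines there are $n + n(n-1)/2$ candidates: 15 for five lines, but 500,500 for 1000 lines, which is why a cheap ranking matters at the scale of a national grid.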

Guided Machine Learning for power grid segmentation

Mar 30, 2018

Abstract: The segmentation of large-scale power grids into zones is crucial for control room operators managing grid complexity in near real time. In this paper we propose a new two-step method that performs this segmentation automatically while taking into account the real-time context, in order to help operators handle shifting dynamics. Our method relies on a "guided" machine learning approach. As a first step, we define and compute a task-specific "Influence Graph" in a guided manner: we simulate chosen interventions on a grid state, representative of our task of interest (managing active power flows in our case). For visualization and interpretation, we then build a higher-level representation of the grid relevant to this task by applying the graph community detection algorithm Infomap to this Influence Graph. To illustrate our method and demonstrate its practical interest, we apply it to commonly used systems, the IEEE-14 and IEEE-118. We show promising, original and interpretable results, especially on the previously well-studied RTS-96 system for grid segmentation. Finally, we share initial investigations and results on a large-scale system, the French power grid, whose segmentation bore a surprising resemblance to RTE's historical partitioning.
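A toy version of the two steps is sketched below. The `effect(i, j)` function stands in for the simulated interventions (it is hypothetical, as are its values), and community detection uses simple weighted label propagation as a self-contained substitute for Infomap, which optimizes a different, information-theoretic objective.

```python
def influence_graph(n, effect, threshold):
    # Symmetric weighted graph: w_ij = |effect(i, j)| + |effect(j, i)|, where effect(i, j)
    # is the simulated change on element j caused by an intervention on element i.
    graph = {i: {} for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            w = abs(effect(i, j)) + abs(effect(j, i))
            if w >= threshold:
                graph[i][j] = graph[j][i] = w
    return graph


def label_propagation(graph, max_iter=100):
    # Each node repeatedly adopts the label carrying the most edge weight among its
    # neighbours (ties broken towards the smallest label) until no label changes.
    labels = {v: v for v in graph}
    for _ in range(max_iter):
        changed = False
        for v in sorted(graph):
            score = {}
            for u, w in graph[v].items():
                score[labels[u]] = score.get(labels[u], 0.0) + w
            if not score:
                continue
            best = max(sorted(score), key=score.get)
            if best != labels[v]:
                labels[v], changed = best, True
        if not changed:
            break
    return labels


# Usage with a hypothetical effect matrix: two tightly coupled groups of three elements,
# weakly linked through elements 2 and 3.
def effect(i, j):
    if (i < 3) == (j < 3):
        return 1.0
    return 0.1 if {i, j} == {2, 3} else 0.0


labels = label_propagation(influence_graph(6, effect, threshold=0.05))
```

The weak link between elements 2 and 3 survives the threshold but carries too little weight to merge the two groups, so two zones are recovered.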

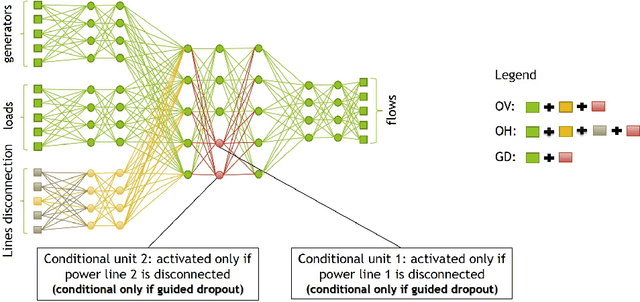

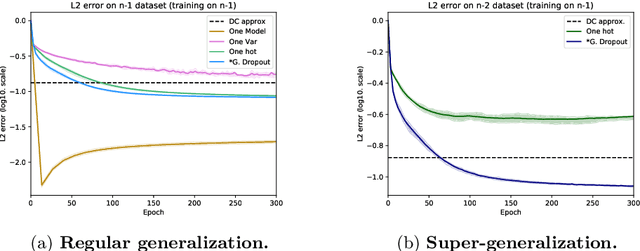

Fast Power system security analysis with Guided Dropout

Jan 30, 2018

Abstract: We propose a new method to efficiently compute load-flows (the steady state of the power grid for given productions, consumptions and grid topology), as a substitute for conventional simulators based on differential equation solvers. We use a deep feed-forward neural network trained with load-flows precomputed by simulation. Our architecture makes it possible to train a network on so-called "n-1" problems, in which load flows are evaluated for every possible line disconnection, and then generalize to "n-2" problems without retraining (a clear advantage because of the combinatorial nature of the problem). To that end, we developed a technique bearing similarity to "dropout", which we named "guided dropout".
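A minimal sketch of topology-conditioned masking in the spirit of guided dropout, under an assumed design: some hidden units are always active, and each line owns a block of units that is switched on only when that line is disconnected, so the mask for an "n-2" case is the union of two "n-1" masks. This layout is an illustrative assumption rather than the exact scheme of the paper; the weights are random and untrained, and the class and parameter names are hypothetical.

```python
import random


class GuidedDropoutSketch:
    """Untrained feed-forward net whose hidden units are masked by the grid topology."""

    def __init__(self, n_in, n_lines, units_per_line, n_always_on, n_out, seed=0):
        rng = random.Random(seed)
        self.n_always_on = n_always_on
        self.units_per_line = units_per_line
        self.n_hidden = n_always_on + n_lines * units_per_line
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(self.n_hidden)]
        self.w2 = [[rng.gauss(0.0, 0.5) for _ in range(self.n_hidden)] for _ in range(n_out)]

    def mask(self, disconnected):
        # Always-on units are kept; each line owns a block of units that is switched on
        # only when that line is disconnected.
        m = [1.0] * self.n_always_on + [0.0] * (self.n_hidden - self.n_always_on)
        for line in disconnected:
            start = self.n_always_on + line * self.units_per_line
            for k in range(start, start + self.units_per_line):
                m[k] = 1.0
        return m

    def forward(self, x, disconnected=()):
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w1]  # ReLU layer
        gated = [h * m for h, m in zip(hidden, self.mask(disconnected))]
        return [sum(w * h for w, h in zip(row, gated)) for row in self.w2]  # linear output


net = GuidedDropoutSketch(n_in=3, n_lines=4, units_per_line=2, n_always_on=5, n_out=2)
x = [0.1, 0.2, 0.3]  # hypothetical injections
y_n1 = net.forward(x, disconnected=(1,))    # an "n-1" case, as seen in training
y_n2 = net.forward(x, disconnected=(1, 2))  # an "n-2" case: the two masks are combined
```

Since the line-specific blocks do not overlap and the output layer is linear, the change in output caused by a pair of disconnections is, in this sketch, the sum of the changes caused by each one alone.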

Introducing machine learning for power system operation support

Sep 27, 2017

Abstract: We address the problem of assisting human dispatchers in operating power grids in today's changing context using machine learning, with the aim of increasing security and reducing costs. Power networks are highly regulated systems, which at all times must meet varying demands for electricity with a complex production system, including conventional power plants, less predictable renewable energies (such as wind or solar power), and the possibility of buying/selling electricity on the international market with more and more actors involved at a European scale. This problem is becoming ever more challenging with an aging network infrastructure. One of the primary goals of dispatchers is to protect equipment (e.g. prevent transmission lines from overheating) with few degrees of freedom: in this paper we consider solely modifications in network topology, i.e. re-configuring the way in which lines, transformers, productions and loads are connected in substations. Using years of historical data collected by the French Transmission System Operator (TSO) "Réseau de Transport d'Électricité" (RTE), we develop novel machine learning techniques (drawing on "deep learning") to mimic human decisions and devise "remedial actions" that prevent any line from violating power flow limits (so-called "thermal limits"). The proposed technique is hybrid. It does not rely purely on machine learning: every action will be tested with actual simulators before being proposed to the dispatchers or implemented on the grid.
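The hybrid loop described above, in which the learned model only proposes and the simulator decides, can be sketched as follows. The scoring function, the simulator callback, the candidate actions and the flow values are all hypothetical placeholders.

```python
def propose_remedial_actions(state, candidates, score, simulate, thermal_limits, max_proposals=3):
    # 1. A learned model (hypothetical `score`) ranks candidate topology changes.
    ranked = sorted(candidates, key=lambda a: score(state, a), reverse=True)
    proposals = []
    for action in ranked:
        # 2. Every candidate is checked with the physical simulator before being proposed.
        flows = simulate(state, action)
        if all(abs(f) <= limit for f, limit in zip(flows, thermal_limits)):
            proposals.append(action)
            if len(proposals) == max_proposals:
                break
    return proposals


# Usage with hypothetical scores and simulated flows (arbitrary units) on two monitored lines.
scores = {"A": 0.9, "B": 0.8, "C": 0.5, "D": 0.1}
flows = {"A": [120.0, 50.0], "B": [90.0, 60.0], "C": [80.0, 110.0], "D": [70.0, 40.0]}
proposals = propose_remedial_actions(
    state=None,
    candidates=list(scores),
    score=lambda s, a: scores[a],
    simulate=lambda s, a: flows[a],
    thermal_limits=[100.0, 100.0],
)
```

Here action "A" is ranked first by the learned score but is rejected because its simulated flow on the first line exceeds the limit, so only "B" and "D" are proposed.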
