Andreas Schulze-Bonhage

SeizeIT2: Wearable Dataset Of Patients With Focal Epilepsy

Feb 03, 2025

Abstract: Advances in miniaturized physiological measuring devices have enabled continuous monitoring of patients with epilepsy outside of specialized environments. The large amounts of data that can be recorded with such devices hold significant potential for developing automated seizure detection frameworks. In this work, we present SeizeIT2, the first open dataset of wearable data recorded in patients with focal epilepsy. The dataset comprises more than 11,000 hours of multimodal data, including behind-the-ear electroencephalography, electrocardiography, electromyography and movement (accelerometer and gyroscope) data. The dataset contains 886 focal seizures recorded from 125 patients across five different European Epileptic Monitoring Centers. We present a suggested training/validation split to support the development of AI methodologies for seizure detection, as well as two benchmark approaches and evaluation metrics. The dataset can be accessed on OpenNeuro and is stored in Brain Imaging Data Structure (BIDS) format.

Multimodal wearable EEG, EMG and accelerometry measurements improve the accuracy of tonic-clonic seizure detection in-hospital

Mar 19, 2024

Abstract: Objective: Most current wearable tonic-clonic seizure (TCS) detection systems are based on extra-cerebral signals, such as electromyography (EMG) or accelerometry (ACC). Although many of these devices show good sensitivity in seizure detection, their false positive rates (FPR) are still relatively high. Wearable EEG may improve performance; however, studies investigating this remain scarce. This paper aims 1) to investigate the possibility of detecting TCSs with a behind-the-ear, two-channel wearable EEG, and 2) to evaluate the added value of wearable EEG to other non-EEG modalities in multimodal TCS detection. Method: We included 27 participants with a total of 44 TCSs from the European multicenter study SeizeIT2. The multimodal wearable detection system Sensor Dot (Byteflies) was used to measure two-channel, behind-the-ear EEG, EMG, electrocardiography (ECG), ACC and gyroscope (GYR). First, we evaluated automatic unimodal detection of TCSs, using performance metrics such as sensitivity, precision, FPR and F1-score. Second, we fused the different modalities and again assessed performance. Algorithm-labeled segments were then provided to a neurologist and a wearable data expert, who reviewed and annotated the true positive TCSs, and discarded false positives (FPs). Results: Wearable EEG outperformed the other modalities in unimodal TCS detection by achieving a sensitivity of 100.0% and an FPR of 10.3/24h (compared to 97.7% sensitivity and 30.9/24h FPR for EMG; 95.5% sensitivity and 13.9/24h FPR for ACC). The combination of wearable EEG and EMG achieved overall the most clinically useful performance in offline TCS detection with a sensitivity of 97.7%, an FPR of 0.4/24h, a precision of 43.0%, and an F1-score of 59.7%. Subsequent visual review of the automated detections resulted in maximal sensitivity and zero FPs.
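The reported F1-score can be checked from the reported precision and sensitivity, since F1 is their harmonic mean. The following is a minimal sketch, not the authors' evaluation code: the function names are hypothetical, and the false-positive count and recording duration in the second call are made-up illustrative numbers, not values from the study.

```python
def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall)."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


def false_positives_per_24h(n_false_positives: int, recorded_hours: float) -> float:
    """Normalize a false-positive count to a rate per 24 hours of recording."""
    return n_false_positives * 24.0 / recorded_hours


# Values reported in the abstract for the EEG + EMG combination.
f1 = f1_score(precision=0.430, sensitivity=0.977)
print(f"F1 = {f1:.3f}")  # about 0.597, consistent with the reported 59.7%

# Hypothetical numbers, for illustration only: 4 false alarms in 240 hours.
fpr = false_positives_per_24h(n_false_positives=4, recorded_hours=240.0)
print(f"FPR = {fpr:.1f}/24h")
```

Because F1 is a harmonic mean, it is pulled toward the lower of the two inputs, which is why a 97.7% sensitivity combined with a 43.0% precision yields an F1-score below 60%.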

Machine-Learning-Based Diagnostics of EEG Pathology

Feb 11, 2020

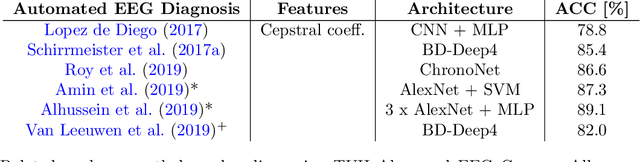

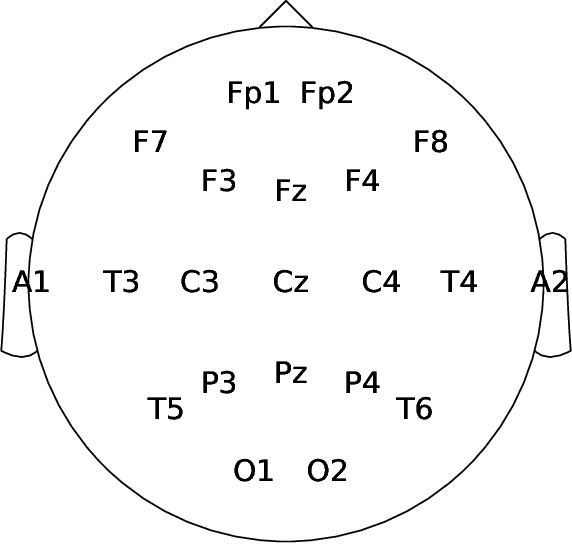

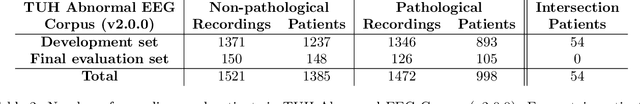

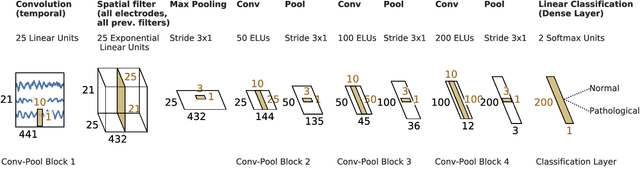

Abstract: Machine learning (ML) methods have the potential to automate clinical EEG analysis. They can be categorized into feature-based approaches (with handcrafted features) and end-to-end approaches (with learned features). Previous studies on EEG pathology decoding have typically analyzed a limited number of features, decoders, or both. For I) a more elaborate feature-based EEG analysis, and II) in-depth comparisons of both approaches, here we first develop a comprehensive feature-based framework, and then compare this framework to state-of-the-art end-to-end methods. To this aim, we apply the proposed feature-based framework and deep neural networks including an EEG-optimized temporal convolutional network (TCN) to the task of pathological versus non-pathological EEG classification. For a robust comparison, we chose the Temple University Hospital (TUH) Abnormal EEG Corpus (v2.0.0), which contains approximately 3000 EEG recordings. The results demonstrate that the proposed feature-based decoding framework can achieve accuracies on the same level as state-of-the-art deep neural networks. We find accuracies across both approaches in an astonishingly narrow range from 81% to 86%. Moreover, visualizations and analyses indicated that both approaches used similar aspects of the data, e.g., delta and theta band power at temporal electrode locations. We argue that the accuracies of current binary EEG pathology decoders could saturate near 90% due to the imperfect inter-rater agreement of the clinical labels, and that such decoders are already clinically useful, such as in areas where clinical EEG experts are rare. We make the proposed feature-based framework available open source and thus offer a new tool for EEG machine learning research.
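To illustrate what a handcrafted feature such as delta or theta band power looks like, here is a minimal sketch using only the Python standard library. It is not the authors' framework: the function name `band_power`, the band limits (0.5 to 4 Hz for delta, 4 to 8 Hz for theta, a common convention that the abstract does not state), and the synthetic test signal are all assumptions made for illustration.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Mean periodogram power of `signal` in the band [f_lo, f_hi) Hz.

    Uses a direct DFT restricted to the bins inside the band, which is
    slow but dependency-free; a real pipeline would use an FFT library.
    """
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        freq = k * fs / n  # centre frequency of DFT bin k
        if f_lo <= freq < f_hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal)
            )
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers) if powers else 0.0


# Synthetic 10 s signal sampled at 50 Hz (assumed values):
# a strong 2 Hz (delta) component plus a weaker 6 Hz (theta) component.
fs = 50
n_samples = 500
signal = [
    2.0 * math.sin(2 * math.pi * 2 * t / fs)
    + 0.5 * math.sin(2 * math.pi * 6 * t / fs)
    for t in range(n_samples)
]

delta = band_power(signal, fs, 0.5, 4.0)
theta = band_power(signal, fs, 4.0, 8.0)
print(f"delta power: {delta:.2f}, theta power: {theta:.2f}")
```

In a feature-based decoder, values like these would be computed per channel and per time window, then passed to a conventional classifier.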

Intracranial Error Detection via Deep Learning

Nov 02, 2018

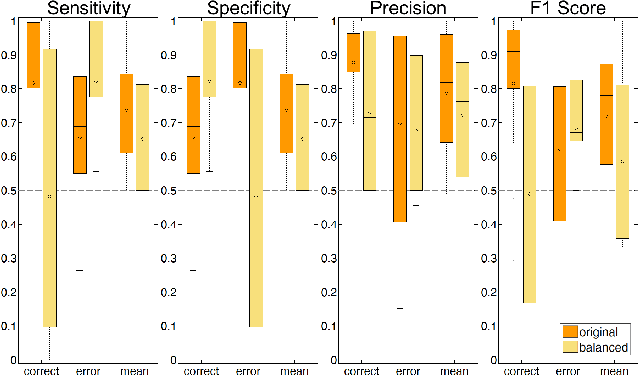

Abstract: Deep learning techniques have revolutionized the field of machine learning and were recently successfully applied to various classification problems in noninvasive electroencephalography (EEG). However, these methods have so far only rarely been evaluated for use in intracranial EEG. We employed convolutional neural networks (CNNs) to classify and characterize the error-related brain response as measured in 24 intracranial EEG recordings. Decoding accuracies of CNNs were significantly higher than those of a regularized linear discriminant analysis. Using time-resolved deep decoding, it was possible to classify errors in various regions in the human brain, and further to decode errors over 200 ms before the actual erroneous button press, e.g., in the precentral gyrus. Moreover, deeper networks performed better than shallower networks in distinguishing correct from error trials in all-channel decoding. In single recordings, up to 100% decoding accuracy was achieved. Visualization of the networks' learned features indicated that multivariate decoding on an ensemble of channels yields related, albeit non-redundant information compared to single-channel decoding. In summary, here we show the usefulness of deep learning for both intracranial error decoding and mapping of the spatio-temporal structure of the human error processing network.

Early Seizure Detection with an Energy-Efficient Convolutional Neural Network on an Implantable Microcontroller

Jun 12, 2018

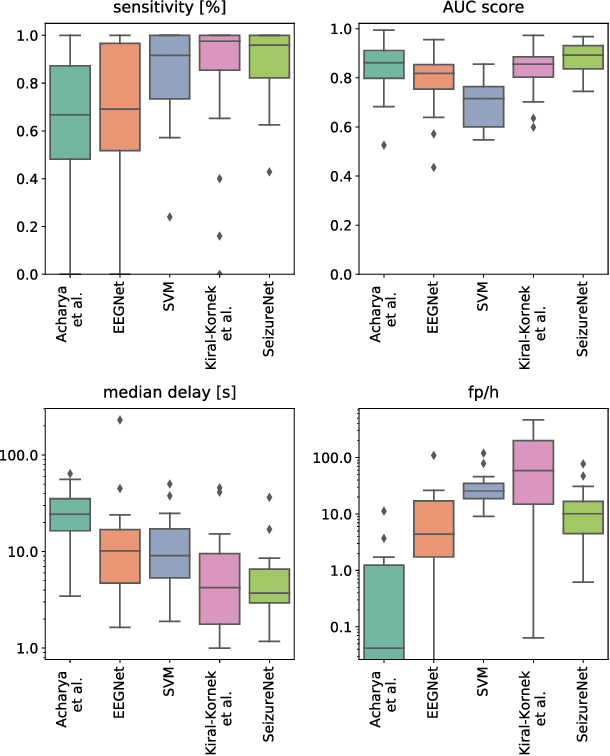

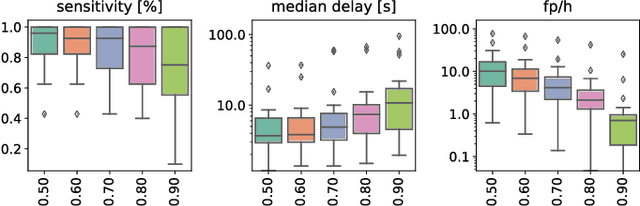

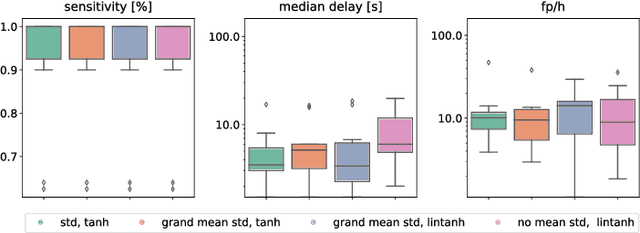

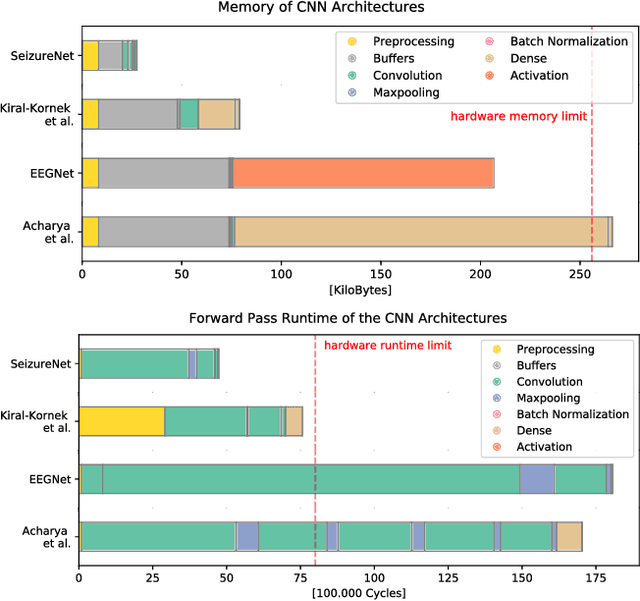

Abstract: Implantable, closed-loop devices for automated early detection and stimulation of epileptic seizures are promising treatment options for patients with severe epilepsy that cannot be treated with traditional means. Most approaches for early seizure detection in the literature are, however, not optimized for implementation on ultra-low power microcontrollers required for long-term implantation. In this paper we present a convolutional neural network for the early detection of seizures from intracranial EEG signals, designed specifically for this purpose. In addition, we investigate approximations to comply with hardware limits while preserving accuracy. We compare our approach to three previously proposed convolutional neural networks and a feature-based SVM classifier with respect to detection accuracy, latency and computational needs. Evaluation is based on a comprehensive database with long-term EEG recordings. The proposed method outperforms the other detectors with a median sensitivity of 0.96, false detection rate of 10.1 per hour and median detection delay of 3.7 seconds, while being the only approach suited to be realized on a low power microcontroller due to its parsimonious use of computational and memory resources.
