Martin Völker

Intracranial Error Detection via Deep Learning

Nov 02, 2018

Abstract: Deep learning techniques have revolutionized the field of machine learning and were recently applied successfully to various classification problems in noninvasive electroencephalography (EEG). However, these methods have so far rarely been evaluated for use in intracranial EEG. We employed convolutional neural networks (CNNs) to classify and characterize the error-related brain response as measured in 24 intracranial EEG recordings. Decoding accuracies of CNNs were significantly higher than those of a regularized linear discriminant analysis. Using time-resolved deep decoding, it was possible to classify errors in various regions of the human brain, and further to decode errors more than 200 ms before the actual erroneous button press, e.g., in the precentral gyrus. Moreover, deeper networks performed better than shallower networks in distinguishing correct from error trials in all-channel decoding. In single recordings, up to 100 % decoding accuracy was achieved. Visualization of the networks' learned features indicated that multivariate decoding on an ensemble of channels yields related, albeit non-redundant, information compared to single-channel decoding. In summary, we show the usefulness of deep learning for both intracranial error decoding and mapping of the spatio-temporal structure of the human error processing network.

A large-scale evaluation framework for EEG deep learning architectures

Jul 25, 2018

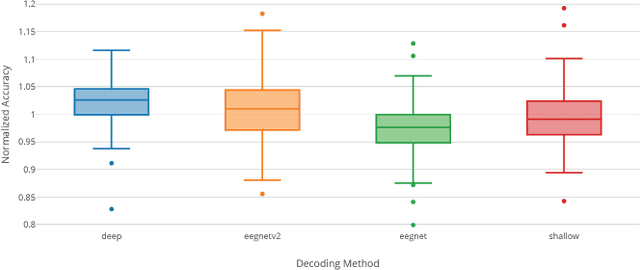

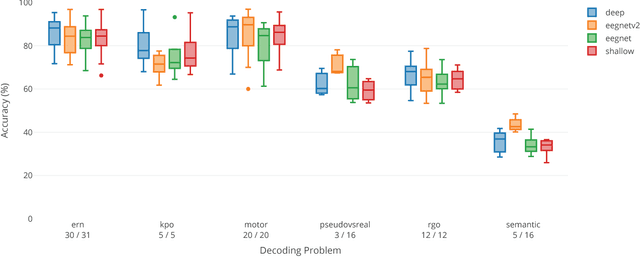

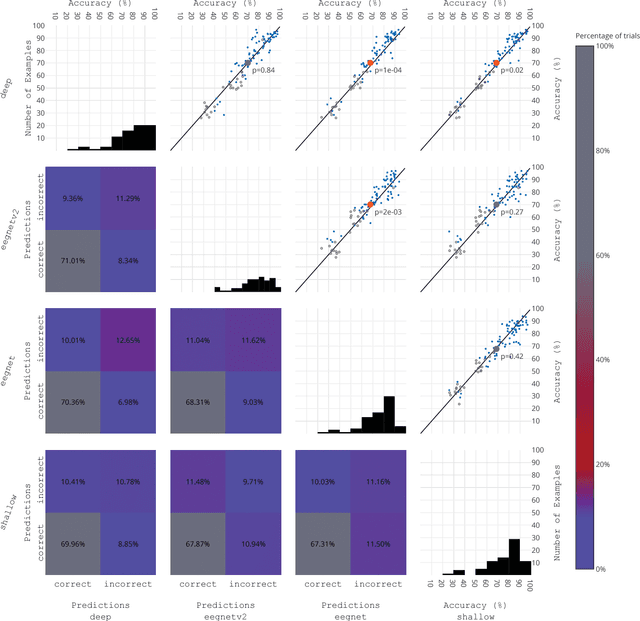

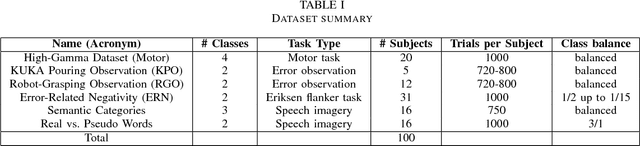

Abstract: EEG is the most common signal source for noninvasive BCI applications. For such applications, the EEG signal needs to be decoded and translated into appropriate actions. A recently emerging EEG decoding approach is deep learning with convolutional or recurrent neural networks (CNNs, RNNs), with many different architectures already published. Here we present a novel framework for the large-scale evaluation of different deep-learning architectures on different EEG datasets. This framework comprises (i) a collection of EEG datasets currently including 100 examples (recording sessions) from six different classification problems, (ii) a collection of different EEG decoding algorithms, and (iii) a wrapper linking the decoders to the data as well as handling structured documentation of all settings, (hyper-)parameters, and statistics, designed to ensure transparency and reproducibility. As an application example, we used our framework to compare three publicly available CNN architectures: the Braindecode Deep4 ConvNet, the Braindecode Shallow ConvNet, and two versions of EEGNet. We also show how our framework can be used to study similarities and differences in the performance of different decoding methods across tasks. We argue that the deep learning EEG framework described here could help to tap the full potential of deep learning for BCI applications.
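
The three-part structure named in the abstract (datasets, decoders, and a wrapper that documents every run) can be illustrated with a minimal sketch. Everything below is hypothetical and is not the published framework: the names `Session`, `RunRecord`, and `evaluate`, the two toy decoders, and the toy data are assumptions chosen only to show how a wrapper might pair every decoder with every recording session while keeping the settings next to the resulting statistics.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical types: a trial is (feature vector, class label).
Trial = Tuple[Sequence[float], int]
Predictor = Callable[[Sequence[float]], int]
Fitter = Callable[[List[Trial], Dict[str, object]], Predictor]


@dataclass
class Session:
    """One recording session with a fixed train/test split (assumed layout)."""
    name: str
    task: str
    train: List[Trial]
    test: List[Trial]


@dataclass
class RunRecord:
    """Structured documentation of one decoder/session run."""
    session: str
    task: str
    decoder: str
    settings: Dict[str, object]
    accuracy: float


def majority_decoder(train: List[Trial], settings: Dict[str, object]) -> Predictor:
    """Baseline: always predict the most frequent training label."""
    labels = [y for _, y in train]
    most_common = max(sorted(set(labels)), key=labels.count)
    return lambda x: most_common


def nearest_centroid_decoder(train: List[Trial], settings: Dict[str, object]) -> Predictor:
    """Toy stand-in for a real decoder: assign the class with the closest mean."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, y in train:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}

    def predict(x: Sequence[float]) -> int:
        return min(
            sorted(centroids),
            key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])),
        )

    return predict


def evaluate(sessions: List[Session], decoders: Dict[str, Fitter],
             settings: Dict[str, object]) -> List[RunRecord]:
    """Wrapper: run every decoder on every session and log settings with results."""
    records = []
    for session in sessions:
        for name, fit in decoders.items():
            predict = fit(session.train, settings)
            hits = sum(predict(x) == y for x, y in session.test)
            records.append(RunRecord(session.name, session.task, name,
                                     dict(settings), hits / len(session.test)))
    return records


# Toy data, invented for illustration only.
toy = Session(
    name="s01",
    task="error_vs_correct",
    train=[([0.0, 0.1], 0), ([0.2, 0.0], 0), ([1.0, 0.9], 1)],
    test=[([0.1, 0.1], 0), ([0.9, 1.0], 1)],
)
records = evaluate(
    [toy],
    {"majority": majority_decoder, "nearest_centroid": nearest_centroid_decoder},
    {"seed": 0},
)
for record in records:
    print(record)
```

Storing a copy of the settings in each record is the design choice that matters here: results can later be grouped by task or decoder without losing track of how each number was produced.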

Acting Thoughts: Towards a Mobile Robotic Service Assistant for Users with Limited Communication Skills

Jun 12, 2018

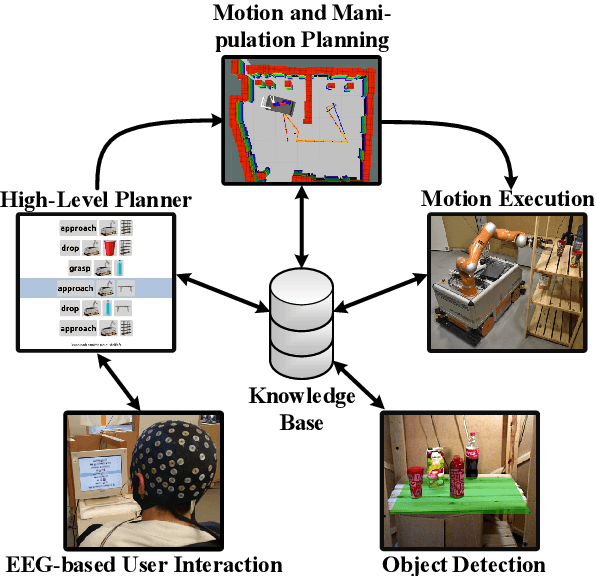

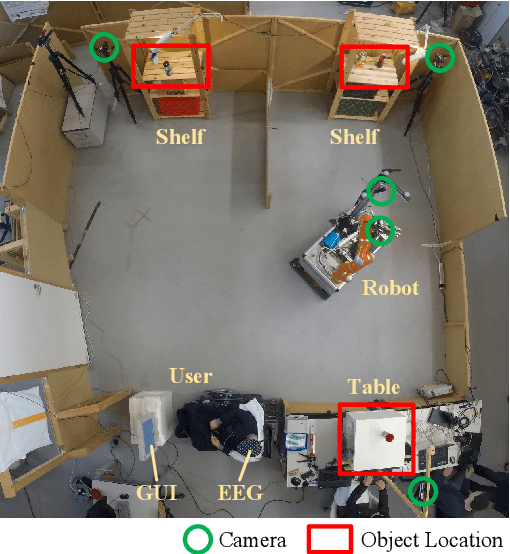

Abstract: As autonomous service robots become more affordable and thus available to the general public, there is a growing need for user-friendly interfaces to control the robotic system. Currently available control modalities typically expect users to be able to express their desire through touch, speech, or gesture commands. While this requirement is fulfilled for the majority of users, paralyzed users may not be able to use such systems. In this paper, we present a novel framework that allows these users to interact with a robotic service assistant in a closed-loop fashion, using only thoughts. The brain-computer interface (BCI) system is composed of several interacting components, i.e., non-invasive neuronal signal recording and decoding, high-level task planning, motion and manipulation planning, as well as environment perception. In various experiments, we demonstrate its applicability and robustness in real-world scenarios, considering fetch-and-carry tasks and tasks involving human-robot interaction. As our results demonstrate, our system is capable of adapting to frequent changes in the environment and reliably completing given tasks within a reasonable amount of time. Combined with high-level planning and autonomous robotic systems, interesting new perspectives open up for non-invasive BCI-based human-robot interactions.

* FB, LDJF, DK, MV and JA contributed equally to the work. Accepted as a conference paper at the European Conference on Mobile Robotics 2017 (ECMR 2017), 6 pages, 3 figures

Deep Transfer Learning for Error Decoding from Non-Invasive EEG

Jan 10, 2018

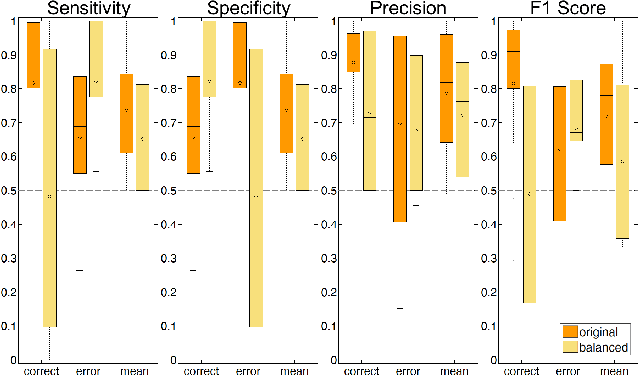

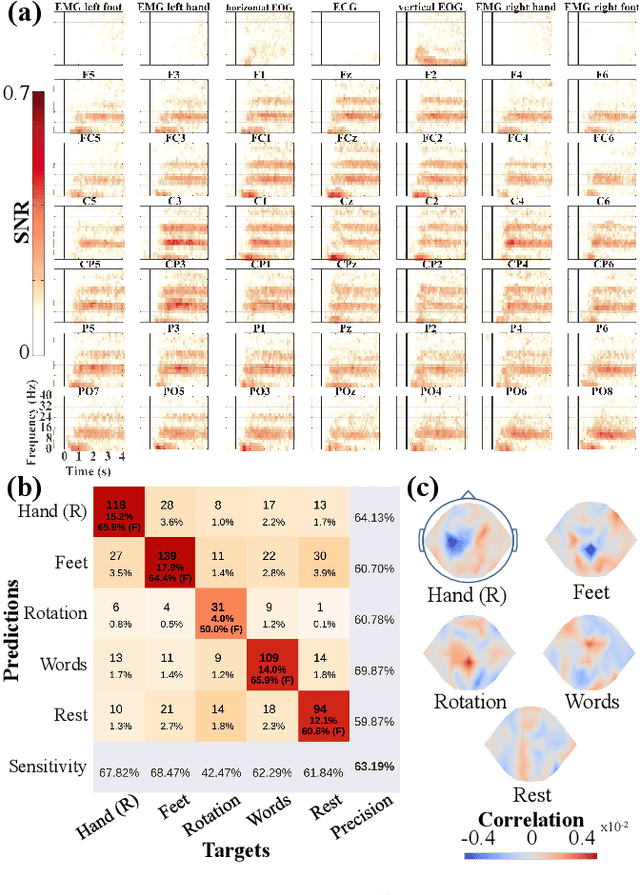

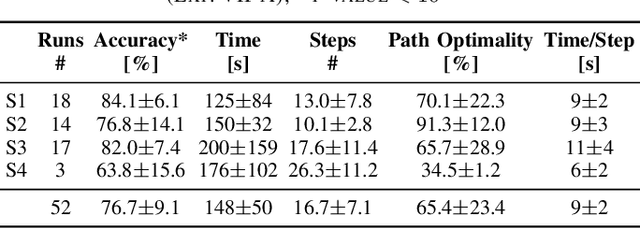

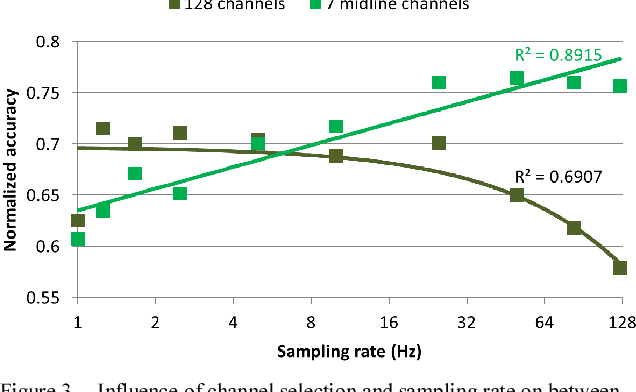

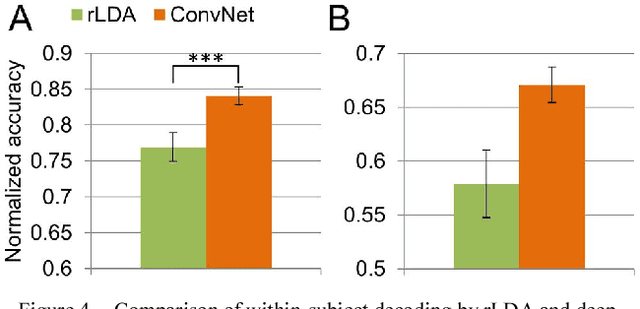

Abstract: We recorded high-density EEG in a flanker task experiment (31 subjects) and an online BCI control paradigm (4 subjects). On these datasets, we evaluated the use of transfer learning for error decoding with deep convolutional neural networks (deep ConvNets). In comparison with a regularized linear discriminant analysis (rLDA) classifier, ConvNets were significantly better in both intra- and inter-subject decoding, achieving an average accuracy of 84.1 % within subject and 81.7 % on unknown subjects (flanker task). Neither method was, however, able to generalize reliably between paradigms. Visualization of the features the ConvNets learned from the data showed plausible patterns of brain activity, revealing both similarities and differences between the different kinds of errors. Our findings indicate that deep learning techniques are useful for inferring information about the correctness of actions in BCI applications, particularly for the transfer of pre-trained classifiers to new recording sessions or subjects.
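
The distinction between within-subject decoding and decoding on unknown subjects comes down to how trials are split into training and test sets. The sketch below illustrates the two split schemes in general terms; it is not the paper's evaluation protocol. The function names, the chronological hold-out of the last trials, and the `test_fraction` default are all assumptions made for illustration.

```python
from typing import Dict, Iterator, List, Tuple


def within_subject_splits(
    trials_per_subject: Dict[str, int], test_fraction: float = 0.2
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (subject, train indices, test indices) per subject.

    Assumption: the last trials of each subject are held out, so the
    decoder is trained and tested on data from the same person.
    """
    for subject, n_trials in trials_per_subject.items():
        n_test = max(1, int(round(n_trials * test_fraction)))
        cut = n_trials - n_test
        yield subject, list(range(cut)), list(range(cut, n_trials))


def leave_one_subject_out(
    subjects: List[str],
) -> Iterator[Tuple[List[str], str]]:
    """Yield (training subjects, held-out subject).

    The held-out subject contributes no training data, which mimics
    applying a pre-trained decoder to an unknown subject.
    """
    for held_out in subjects:
        yield [s for s in subjects if s != held_out], held_out


# Hypothetical subject identifiers and trial counts.
for subject, train_idx, test_idx in within_subject_splits({"s1": 10, "s2": 5}):
    print(subject, len(train_idx), len(test_idx))

for train_subjects, held_out in leave_one_subject_out(["s1", "s2", "s3"]):
    print(train_subjects, "->", held_out)
```

Averaging test accuracy over the first generator gives a within-subject figure, while averaging over the second gives a figure for unknown subjects. Neither split covers transfer between paradigms, which would require training on one experiment and testing on another.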
