Andreas Schuh

Morphology-based non-rigid registration of coronary computed tomography and intravascular images through virtual catheter path optimization

Dec 30, 2022

Abstract: Coronary Computed Tomography Angiography (CCTA) provides information on the presence, extent, and severity of obstructive coronary artery disease. Large-scale clinical studies analyzing CCTA-derived metrics typically require ground-truth validation in the form of high-fidelity 3D intravascular imaging. However, manual rigid alignment of intravascular images to corresponding CCTA images is both time-consuming and user-dependent. Moreover, intravascular modalities suffer from several non-rigid motion-induced distortions arising from distortions in the imaging catheter path. To address these issues, we present a semi-automatic segmentation-based framework for both rigid and non-rigid matching of intravascular images to CCTA images. We formulate the problem in terms of finding the optimal *virtual catheter path* that samples the CCTA data to recapitulate the coronary artery morphology found in the intravascular image. We validate our co-registration framework on a cohort of $n=40$ patients using bifurcation landmarks as ground truth for longitudinal and rotational registration. Our results indicate that our non-rigid registration significantly outperforms other co-registration approaches for luminal bifurcation alignment in both longitudinal (mean mismatch: 3.3 frames) and rotational directions (mean mismatch: 28.6 degrees). By providing a differentiable framework for automatic multi-modal intravascular data fusion, our co-registration modules significantly reduce the manual effort required to conduct large-scale multi-modal clinical studies while also providing a solid foundation for the development of machine learning-based co-registration approaches.

Atlas-ISTN: Joint Segmentation, Registration and Atlas Construction with Image-and-Spatial Transformer Networks

Dec 18, 2020

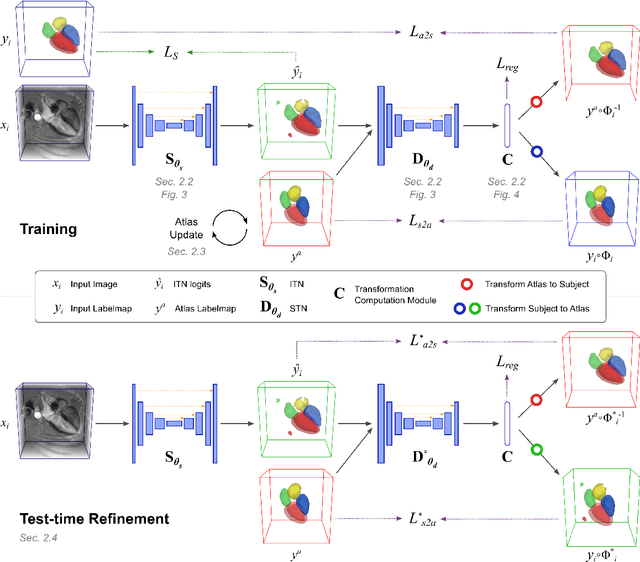

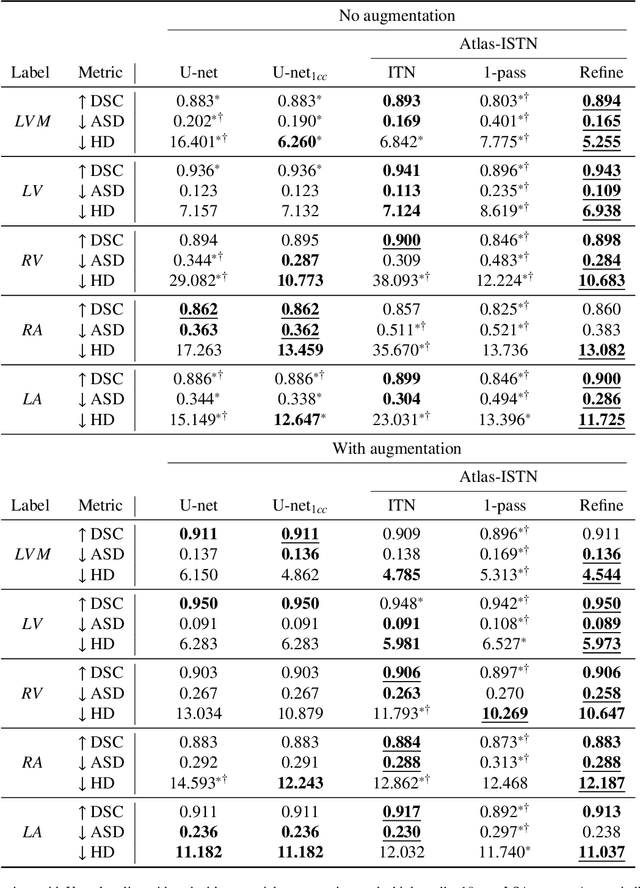

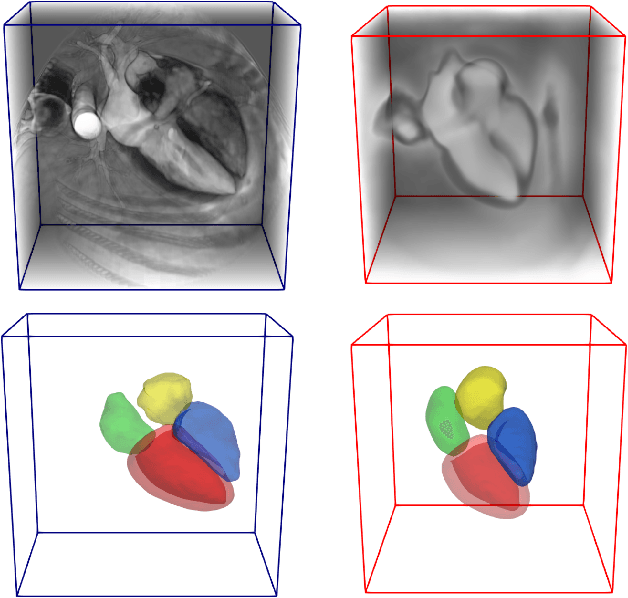

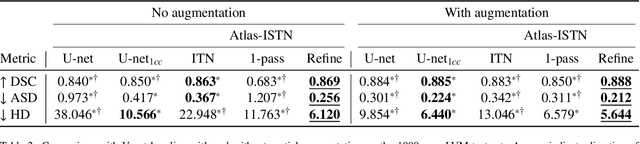

Abstract: Deep learning models for semantic segmentation are able to learn powerful representations for pixel-wise predictions, but are sensitive to noise at test time and do not guarantee a plausible topology. Image registration models, on the other hand, are able to warp known topologies to target images as a means of segmentation, but typically require large amounts of training data and have not been widely benchmarked against pixel-wise segmentation models. We propose Atlas-ISTN, a framework that jointly learns segmentation and registration on 2D and 3D image data, and constructs a population-derived atlas in the process. Atlas-ISTN learns to segment multiple structures of interest and to register the constructed, topologically consistent atlas labelmap to an intermediate pixel-wise segmentation. Additionally, Atlas-ISTN allows for test-time refinement of the model's parameters to optimize the alignment of the atlas labelmap to an intermediate pixel-wise segmentation. This process both mitigates noise in the target image that can result in spurious pixel-wise predictions and improves upon the one-pass prediction of the model. Benefits of the Atlas-ISTN framework are demonstrated qualitatively and quantitatively on 2D synthetic data and 3D cardiac computed tomography and brain magnetic resonance image data, outperforming both segmentation and registration baseline models. Atlas-ISTN also provides inter-subject correspondence of the structures of interest, enabling population-level shape and motion analysis.

Geometric Deep Learning for Post-Menstrual Age Prediction based on the Neonatal White Matter Cortical Surface

Aug 13, 2020

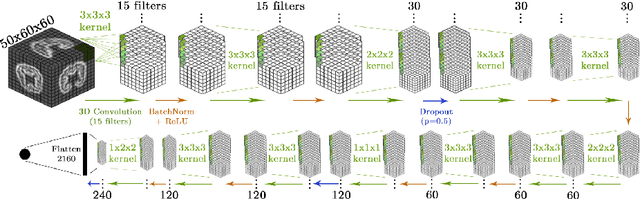

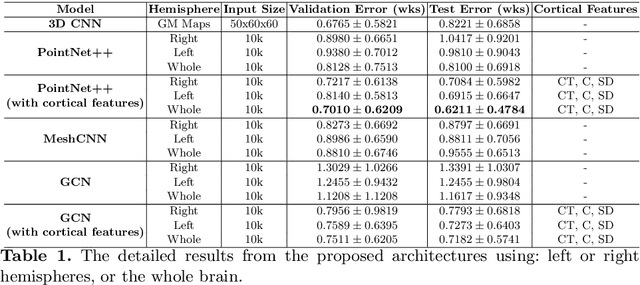

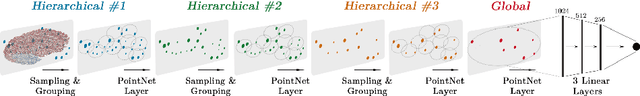

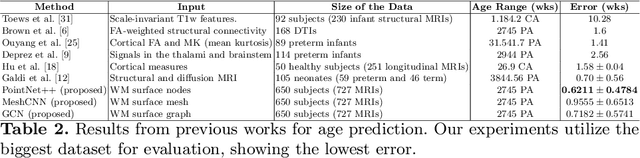

Abstract: Accurate estimation of age in neonates is essential for measuring neurodevelopmental, medical, and growth outcomes. In this paper, we propose a novel approach to predict the post-menstrual age (PA) at scan, using techniques from geometric deep learning, based on the neonatal white matter cortical surface. We utilize and compare multiple specialized neural network architectures that predict the age using different geometric representations of the cortical surface; we compare MeshCNN, PointNet++, GraphCNN, and a volumetric benchmark. The dataset is part of the Developing Human Connectome Project (dHCP), and is a cohort of healthy and premature neonates. We evaluate our approach on 650 subjects (727 scans) with PA ranging from 27 to 45 weeks. Our results show accurate prediction of the estimated PA, with a mean error of less than one week.

Image-and-Spatial Transformer Networks for Structure-Guided Image Registration

Jul 22, 2019

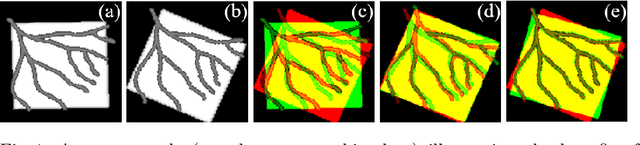

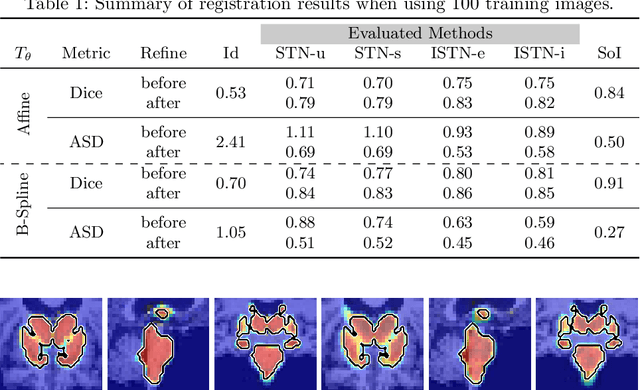

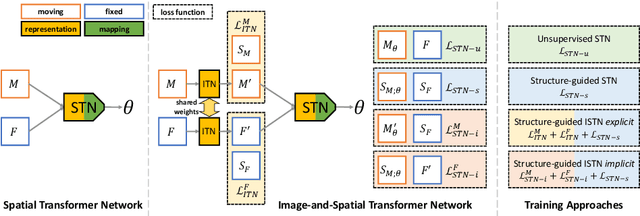

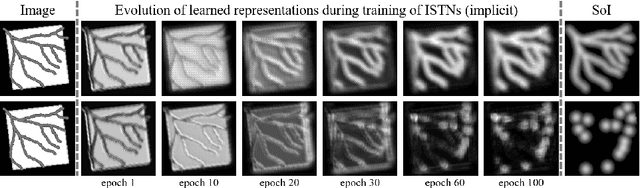

Abstract: Image registration with deep neural networks has become an active field of research and an exciting avenue for a long-standing problem in medical imaging. The goal is to learn a complex function that maps the appearance of input image pairs to parameters of a spatial transformation in order to align corresponding anatomical structures. We argue and show that the current direct, non-iterative approaches are sub-optimal, in particular if we seek accurate alignment of Structures-of-Interest (SoI). Information about SoI is often available at training time, for example, in the form of segmentations or landmarks. We introduce a novel, generic framework, Image-and-Spatial Transformer Networks (ISTNs), to leverage SoI information, allowing us to learn new image representations that are optimised for the downstream registration task. Thanks to these representations, we can employ a test-specific, iterative refinement over the transformation parameters, which yields highly accurate registration even with very limited training data. Performance is demonstrated on pairwise 3D brain registration and illustrative synthetic data.

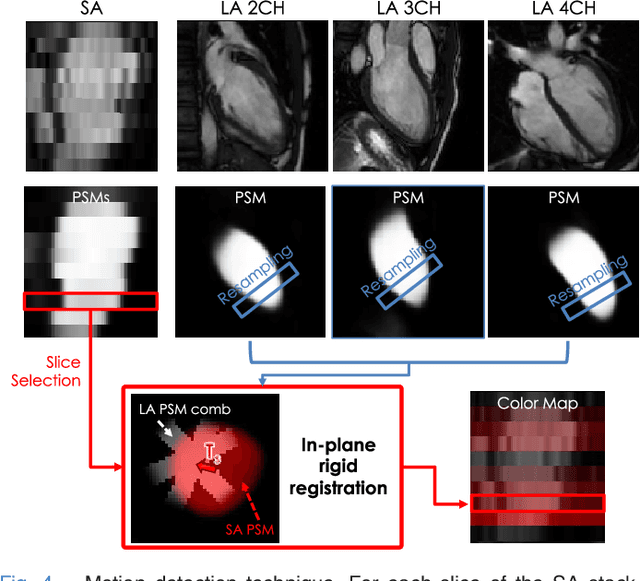

A Comprehensive Approach for Learning-based Fully-Automated Inter-slice Motion Correction for Short-Axis Cine Cardiac MR Image Stacks

Oct 03, 2018

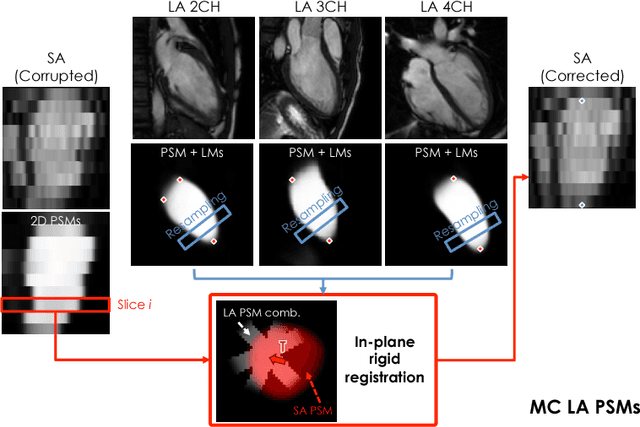

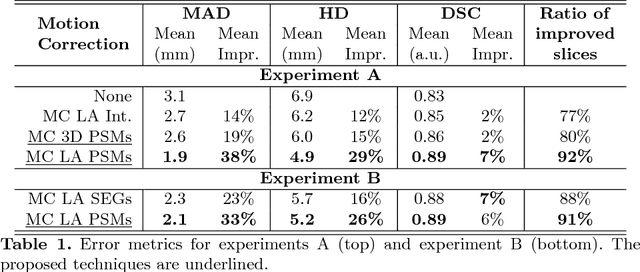

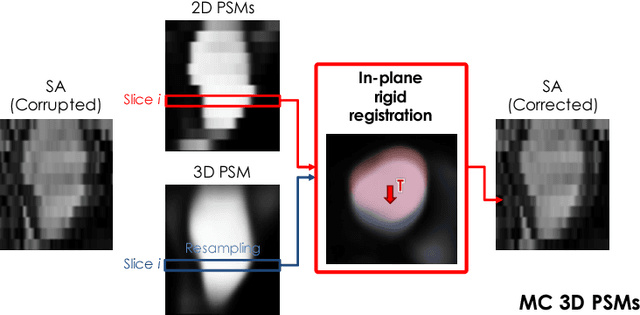

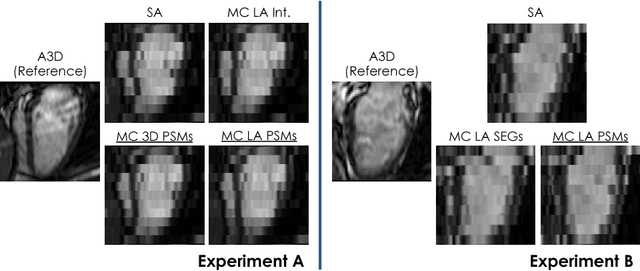

Abstract: In clinical routine, short-axis (SA) cine cardiac MR (CMR) image stacks are acquired during multiple subsequent breath-holds. If the patient cannot consistently hold their breath at the same position, the acquired image stack will be affected by inter-slice respiratory motion and will not correctly represent the cardiac volume, introducing potential errors in the subsequent analyses and visualisations. We propose an approach to automatically correct inter-slice respiratory motion in SA CMR image stacks. Our approach makes use of probabilistic segmentation maps (PSMs) of the left ventricular (LV) cavity generated with decision forests. PSMs are generated for each slice of the SA stack and rigidly registered in-plane to a target PSM. If long-axis (LA) images are available, PSMs are generated for them and combined to create the target PSM; if not, the target PSM is produced from the same stack using a 3D model trained from motion-free stacks. The proposed approach was tested on a dataset of SA stacks acquired from 24 healthy subjects (for which anatomical 3D cardiac images were also available as reference) and compared to two techniques which use LA intensity images and LA segmentations as targets, respectively. The results show the accuracy and robustness of the proposed approach in motion compensation.
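As a rough illustration of registering one slice's PSM in-plane to a target PSM, the sketch below searches integer translations and keeps the one that maximises the overlap between the two probability maps. This is a simplified stand-in, not the paper's method: it assumes translation only (no rotation), integer pixel shifts, a plain sum-of-products similarity, and wrap-around shifting via `np.roll`. The function name `best_inplane_shift` and the parameter `max_shift` are hypothetical.

```python
import numpy as np


def best_inplane_shift(moving, target, max_shift=5):
    """Return the integer (dy, dx) shift of `moving` that best overlaps `target`.

    Assumption: translation-only exhaustive search; the similarity is the
    sum of element-wise products of the two probability maps.
    """
    best_score = -np.inf
    best = (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # np.roll wraps around the image border, which is acceptable
            # here only because the structure sits far from the edges.
            shifted = np.roll(moving, shift=(dy, dx), axis=(0, 1))
            score = float(np.sum(shifted * target))
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best


# Toy example: a rectangular "LV cavity" map and a displaced copy of it.
target_psm = np.zeros((32, 32))
target_psm[10:16, 12:20] = 1.0
moving_psm = np.roll(target_psm, shift=(-2, 3), axis=(0, 1))

shift = best_inplane_shift(moving_psm, target_psm)
```

In this toy case the recovered shift undoes the displacement applied to the copy. A practical implementation would more likely use sub-pixel interpolation, include in-plane rotation, and rely on a gradient-based or multi-resolution optimiser.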

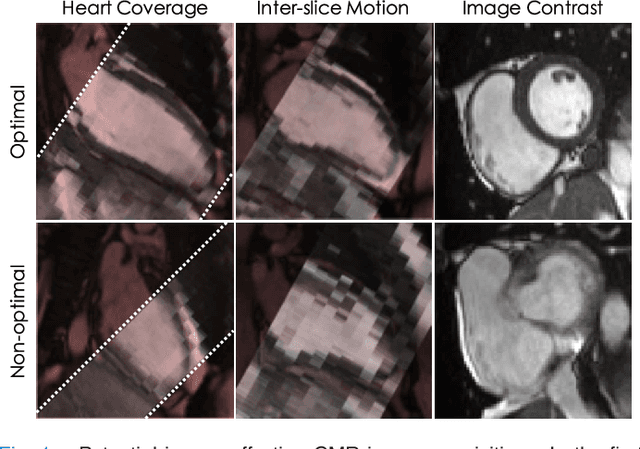

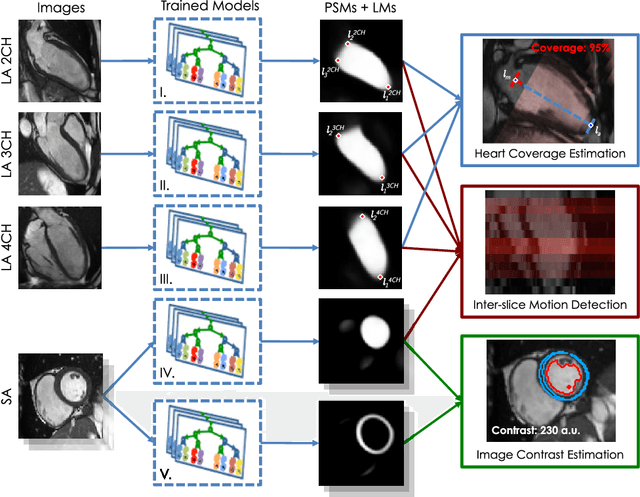

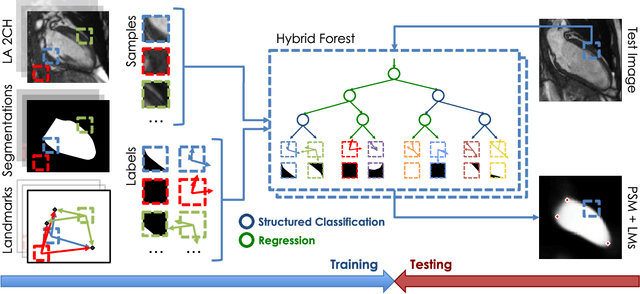

Learning-Based Quality Control for Cardiac MR Images

Sep 15, 2018

Abstract: The effectiveness of a cardiovascular magnetic resonance (CMR) scan depends on the ability of the operator to correctly tune the acquisition parameters to the subject being scanned and on the potential occurrence of imaging artefacts such as cardiac and respiratory motion. In clinical practice, a quality control step is performed by visual assessment of the acquired images; however, this procedure is strongly operator-dependent, cumbersome and sometimes incompatible with the time constraints in clinical settings and large-scale studies. We propose a fast, fully-automated, learning-based quality control pipeline for CMR images, specifically for short-axis image stacks. Our pipeline performs three important quality checks: 1) heart coverage estimation, 2) inter-slice motion detection, 3) image contrast estimation in the cardiac region. The pipeline uses a hybrid decision forest method, integrating both regression and structured classification models, to extract landmarks as well as probabilistic segmentation maps from both long- and short-axis images as a basis to perform the quality checks. The technique was tested on up to 3000 cases from the UK Biobank as well as on 100 cases from the UK Digital Heart Project, and validated against manual annotations and visual inspections performed by expert interpreters. The results show the capability of the proposed pipeline to correctly detect incomplete or corrupted scans (e.g. on UK Biobank, sensitivity and specificity of 88% and 99%, respectively, for heart coverage estimation, and 85% and 95% for motion detection), allowing their exclusion from the analysed dataset or the triggering of a new acquisition.
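For readers unfamiliar with the reported metrics, the following minimal sketch shows how sensitivity and specificity are computed from binary labels. It assumes `1` marks a scan that truly fails a quality check (or is flagged as failing) and `0` a scan that passes; the function name and the toy labels are illustrative only and are not data from the paper.

```python
def sensitivity_specificity(y_true, y_pred):
    """Compute (sensitivity, specificity) for binary labels.

    Assumption: 1 = scan fails the quality check, 0 = scan passes.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # faulty, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # faulty, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # good, accepted
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # good, flagged
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy labels (hypothetical): expert annotation vs. pipeline decision.
expert = [1, 1, 1, 0, 0, 0, 0, 1]
pipeline = [1, 1, 0, 0, 0, 1, 0, 1]
sens, spec = sensitivity_specificity(expert, pipeline)
```

Sensitivity is the fraction of truly faulty scans that the pipeline flags, and specificity is the fraction of acceptable scans that it lets through.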
