Amir Weiss

Learning to Separate RF Signals Under Uncertainty: Detect-Then-Separate vs. Unified Joint Models

Feb 04, 2026

Abstract:The increasingly crowded radio frequency (RF) spectrum forces communication signals to coexist, creating heterogeneous interferers whose structure often departs from Gaussian models. Recovering the interference-contaminated signal of interest in such settings is a central challenge, especially in single-channel RF processing. Existing data-driven methods often assume that the interference type is known, yielding ensembles of specialized models that scale poorly with the number of interferers. We show that detect-then-separate (DTS) strategies admit an analytical justification: within a Gaussian mixture framework, a plug-in maximum a posteriori detector followed by type-conditioned optimal estimation achieves asymptotic minimum mean-square error optimality under a mild temporal-diversity condition. This makes DTS a principled benchmark, but its reliance on multiple type-specific models limits scalability. Motivated by this, we propose a unified joint model (UJM), in which a single deep neural architecture learns to jointly detect and separate when applied directly to the received signal. Using tailored UNet architectures for baseband (complex-valued) RF signals, we compare DTS and UJM on synthetic and recorded interference types, showing that a capacity-matched UJM can match oracle-aided DTS performance across diverse signal-to-interference-and-noise ratios, interference types, and constellation orders, including mismatched training and testing type-uncertainty proportions. These findings highlight UJM as a scalable and practical alternative to DTS, while opening new directions for unified separation under broader regimes.
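The detect-then-separate idea described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes real-valued, zero-mean Gaussian vectors, an additive model y = s + b_k, and known covariances and priors. The function name `detect_then_separate` and all parameter names are hypothetical.

```python
import numpy as np

def detect_then_separate(y, cov_signal, cov_interf, priors):
    """Toy DTS: MAP-detect the interference type, then apply the
    type-conditioned linear MMSE estimator of the signal of interest.

    Assumes y = s + b_k with s ~ N(0, cov_signal) and b_k ~ N(0, cov_interf[k]).
    """
    scores = []
    for cov_k, prior_k in zip(cov_interf, priors):
        cov_y = cov_signal + cov_k                   # covariance of y under type k
        _, logdet = np.linalg.slogdet(cov_y)
        quad = y @ np.linalg.solve(cov_y, y)
        # Log-posterior up to a constant shared by all types
        scores.append(np.log(prior_k) - 0.5 * (logdet + quad))
    k_hat = int(np.argmax(scores))                   # plug-in MAP decision
    # Conditional-mean (here linear MMSE) estimate given the detected type
    s_hat = cov_signal @ np.linalg.solve(cov_signal + cov_interf[k_hat], y)
    return k_hat, s_hat

# Two hypothetical interference types: weak (variance 0.01) and strong (variance 100)
eye = np.eye(2)
cov_interf = [0.01 * eye, 100.0 * eye]
priors = [0.5, 0.5]

k_weak, s_weak = detect_then_separate(np.array([0.5, -0.5]), eye, cov_interf, priors)
k_strong, s_strong = detect_then_separate(np.array([20.0, -20.0]), eye, cov_interf, priors)
print(k_weak, s_weak, k_strong, s_strong)
```

In this toy setting each interference type needs its own estimator, which is the scalability limitation the abstract attributes to DTS.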

Extremum Encoding for Joint Baseband Signal Compression and Time-Delay Estimation for Distributed Systems

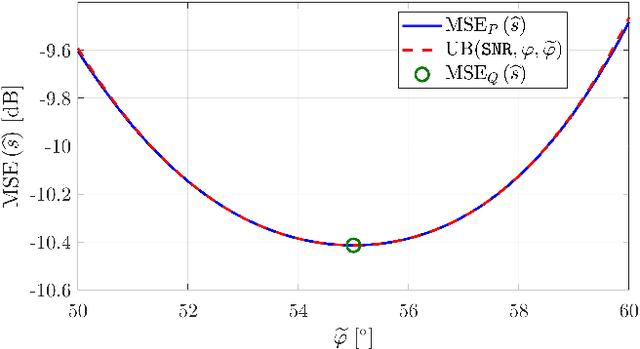

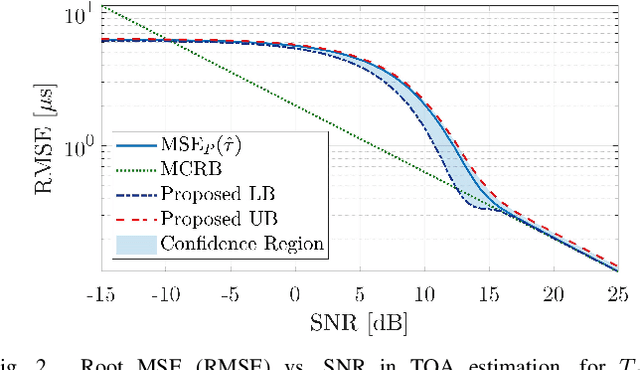

Dec 24, 2024

Abstract:The ubiquitous time-delay estimation (TDE) problem becomes nontrivial when sensors are non-co-located and communication between them is limited. Building on the recently proposed "extremum encoding" compression-estimation scheme, we address the critical extension to complex-valued signals, suitable for radio-frequency (RF) baseband processing. This extension introduces new challenges, e.g., due to unknown phase of the signal of interest and random phase of the noise, rendering a naive application of the original scheme inapplicable and irrelevant. In the face of these challenges, we propose a judiciously adapted, though natural, extension of the scheme, paving its way to RF applications. While our extension leads to a different statistical analysis, including extremes of non-Gaussian distributions, we show that, ultimately, its asymptotic behavior is akin to the original scheme. We derive an exponentially tight upper bound on its error probability, corroborate our results via simulation experiments, and demonstrate the superior performance compared to two benchmark approaches.

Achieving Robustness in Blind Modulo Analog-to-Digital Conversion

Dec 24, 2024

Abstract:The need to digitize signals with intricate spectral characteristics often challenges traditional analog-to-digital converters (ADCs). The recently proposed modulo-ADC architecture offers a promising alternative by leveraging inherent features of the input signals. This approach can dramatically reduce the number of bits required for the conversion while maintaining the desired fidelity. However, the core algorithm of this architecture, which utilizes a prediction filter, functions properly only when the respective prediction error is bounded. In practice, this assumption may not always hold, leading to considerable instability and performance degradation. To address this limitation, we propose an enhanced modulo-unfolding solution without this assumption. We develop a reliable detector to successfully unfold the signals, yielding a robust solution. Consequently, the reinforced system maintains proper operation in scenarios where the original approach fails, while also reducing the quantization noise. We present simulation results that demonstrate the superior performance of our approach in a representative setting.
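To make the bounded-prediction-error condition concrete, here is a minimal sketch of prediction-based modulo unfolding. It illustrates the baseline mechanism only, not the robust detector proposed in the paper. It assumes a fixed, known linear predictor (the taps `[2, -1]` are a hypothetical choice), no quantization, and initial samples that are not wrapped.

```python
import numpy as np

def fold(v, delta):
    """Centered modulo: map v into the interval [-delta/2, delta/2)."""
    return (v + delta / 2) % delta - delta / 2

def unfold(folded, delta, taps):
    """Recover the signal from its folded samples using a linear predictor.

    Correct only while the prediction error stays inside [-delta/2, delta/2)
    and the first len(taps) samples were never wrapped (both assumptions).
    """
    p = len(taps)
    out = np.zeros_like(folded)
    out[:p] = folded[:p]
    for n in range(p, len(folded)):
        pred = np.dot(taps, out[n - p:n][::-1])      # predict from past outputs
        # The folded prediction error equals the true error when it is bounded
        out[n] = pred + fold(folded[n] - pred, delta)
    return out

delta = 1.0
t = np.arange(400)
x = 3.0 * np.sin(2 * np.pi * 0.005 * t)              # slowly varying, exceeds delta/2
folded = fold(x, delta)                              # what the modulo front end outputs
recovered = unfold(folded, delta, taps=np.array([2.0, -1.0]))
print(np.max(np.abs(recovered - x)))
```

If the input varied fast enough for the prediction error to leave the interval, a single wrong unwrap would propagate through the recursion, which is the failure mode the abstract describes.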

RF Challenge: The Data-Driven Radio Frequency Signal Separation Challenge

Sep 13, 2024

Abstract:This paper addresses the critical problem of interference rejection in radio-frequency (RF) signals using a novel, data-driven approach that leverages state-of-the-art AI models. Traditionally, interference rejection algorithms are manually tailored to specific types of interference. This work introduces a more scalable data-driven solution and contains the following contributions. First, we present an insightful signal model that serves as a foundation for developing and analyzing interference rejection algorithms. Second, we introduce the RF Challenge, a publicly available dataset featuring diverse RF signals along with code templates, which facilitates data-driven analysis of RF signal problems. Third, we propose novel AI-based rejection algorithms, specifically architectures like UNet and WaveNet, and evaluate their performance across eight different signal mixture types. These models demonstrate superior performance, exceeding traditional methods like matched filtering and linear minimum mean square error estimation by up to two orders of magnitude in bit-error rate. Fourth, we summarize the results from an open competition hosted at the 2024 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2024) based on the RF Challenge, highlighting the significant potential for continued advancements in this area. Our findings underscore the promise of deep learning algorithms in mitigating interference, offering a strong foundation for future research.

A Joint Data Compression and Time-Delay Estimation Method For Distributed Systems via Extremum Encoding

Apr 14, 2024

Abstract:Motivated by the proliferation of mobile devices, we consider a basic form of the ubiquitous problem of time-delay estimation (TDE), but with communication constraints between two non-co-located sensors. In this setting, when joint processing of the received signals is not possible, a compression technique that is tailored to TDE is desirable. For our basic TDE formulation, we develop such a joint compression-estimation strategy based on the notion of what we term "extremum encoding", whereby we send the index of the maximum of a finite-length time-series from one sensor to another. Subsequent joint processing of the encoded message with locally observed data gives rise to our proposed time-delay "maximum-index"-based estimator. We derive an exponentially tight upper bound on its error probability, establishing its consistency with respect to the number of transmitted bits. We further validate our analysis via simulations, and comment on potential extensions and generalizations of the basic methodology.
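The extremum-encoding idea can be sketched as follows. The abstract states only that the index of the maximum is transmitted and then processed jointly with the locally observed data, so the decoding rule below (pick the candidate delay whose shifted index holds the largest local sample) is an assumption, as are the real-valued signal model, the non-negative integer delay bounded by `max_delay`, and the function names.

```python
import numpy as np

def encode_max_index(x):
    """Sensor 1: compress the whole block into the index of its maximum."""
    return int(np.argmax(x))

def estimate_delay(max_index, y, max_delay):
    """Sensor 2: choose the candidate delay d that maximizes y[max_index + d].

    Assumed decoding rule; requires len(y) > max_index + max_delay.
    """
    candidates = np.arange(max_delay + 1)
    window = y[max_index + candidates]
    return int(candidates[np.argmax(window)])

rng = np.random.default_rng(0)
n, max_delay, true_delay = 4096, 16, 7

# Common source; sensor 2 sees it delayed by true_delay relative to sensor 1
s = rng.standard_normal(n + max_delay)
x = s[true_delay:true_delay + n] + 0.1 * rng.standard_normal(n)
y = s + 0.1 * rng.standard_normal(n + max_delay)

j = encode_max_index(x)                              # the only message sent
bits = int(np.ceil(np.log2(n)))                      # cost of sending one index
print(f"sent index {j} using {bits} bits, estimated delay "
      f"{estimate_delay(j, y, max_delay)} (true delay {true_delay})")
```

The message costs about log2(n) bits regardless of the sample precision, which is why the error probability can be studied as a function of the number of transmitted bits.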

Score-based Source Separation with Applications to Digital Communication Signals

Jun 26, 2023

Abstract:We propose a new method for separating superimposed sources using diffusion-based generative models. Our method relies only on separately trained statistical priors of independent sources to establish a new objective function guided by maximum a posteriori estimation with an $\alpha$-posterior, across multiple levels of Gaussian smoothing. Motivated by applications in radio-frequency (RF) systems, we are interested in sources with underlying discrete nature and the recovery of encoded bits from a signal of interest, as measured by the bit error rate (BER). Experimental results with RF mixtures demonstrate that our method results in a BER reduction of 95% over classical and existing learning-based methods. Our analysis demonstrates that our proposed method yields solutions that asymptotically approach the modes of an underlying discrete distribution. Furthermore, our method can be viewed as a multi-source extension to the recently proposed score distillation sampling scheme, shedding additional light on its use beyond conditional sampling.

Online Segmented Recursive Least-Squares for Multipath Doppler Tracking

May 30, 2023

Abstract:Underwater communication signals typically suffer from distortion due to motion-induced Doppler. Especially in shallow water environments, recovering the signal is challenging due to the time-varying Doppler effects distorting each path differently. However, conventional Doppler estimation algorithms typically model uniform Doppler across all paths and often fail to provide robust Doppler tracking in multipath environments. In this paper, we propose a dynamic programming-inspired method, called online segmented recursive least-squares (OSRLS), to sequentially estimate the time-varying non-uniform Doppler across different multipath arrivals. By approximating the non-linear time distortion as a piece-wise-linear Markov model, we formulate the problem in a dynamic programming framework known as segmented least-squares (SLS). In order to circumvent an ill-conditioned formulation, perturbations are added to the Doppler model during the linearization process. The successful operation of the algorithm is demonstrated in a simulation on a synthetic channel with time-varying non-uniform Doppler.

Towards Robust Data-Driven Underwater Acoustic Localization: A Deep CNN Solution with Performance Guarantees for Model Mismatch

May 29, 2023

Abstract:Key challenges in developing underwater acoustic localization methods are related to the combined effects of high reverberation in intricate environments. To address such challenges, recent studies have shown that with a properly designed architecture, neural networks can lead to unprecedented localization capabilities and enhanced accuracy. However, the robustness of such methods to environmental mismatch is typically hard to characterize, and is usually assessed only empirically. In this work, we consider the recently proposed data-driven method [19] based on a deep convolutional neural network, and demonstrate that it can learn to localize in complex and mismatched environments. To explain this robustness, we provide an upper bound on the localization mean squared error (MSE) in the "true" environment, in terms of the MSE in a "presumed" environment and an additional penalty term related to the environmental discrepancy. Our theoretical results are corroborated via simulation results in a rich, highly reverberant, and mismatched channel.

A Bilateral Bound on the Mean-Square Error for Estimation in Model Mismatch

May 14, 2023

Abstract:A bilateral (i.e., upper and lower) bound on the mean-square error under a general model mismatch is developed. The bound, which is derived from the variational representation of the chi-square divergence, is applicable in the Bayesian and non-Bayesian frameworks to biased and unbiased estimators. Unlike other classical MSE bounds that depend only on the model, our bound is also estimator-dependent. Thus, it is applicable as a tool for characterizing the MSE of a specific estimator. The proposed bounding technique has a variety of applications, one of which is a tool for proving the consistency of estimators for a class of models. Furthermore, it provides insight as to why certain estimators work well under general model mismatch conditions.

On Neural Architectures for Deep Learning-based Source Separation of Co-Channel OFDM Signals

Mar 15, 2023

Abstract:We study the single-channel source separation problem involving orthogonal frequency-division multiplexing (OFDM) signals, which are ubiquitous in many modern-day digital communication systems. Related efforts have been pursued in monaural source separation, where state-of-the-art neural architectures have been adopted to train an end-to-end separator for audio signals (as 1-dimensional time series). In this work, through a prototype problem based on the OFDM source model, we assess -- and question -- the efficacy of using audio-oriented neural architectures in separating signals based on features pertinent to communication waveforms. Perhaps surprisingly, we demonstrate that in some configurations, where perfect separation is theoretically attainable, these audio-oriented neural architectures perform poorly in separating co-channel OFDM waveforms. Yet, we propose critical domain-informed modifications to the network parameterization, based on insights from OFDM structures, that can confer about 30 dB improvement in performance.
