A Bilateral Bound on the Mean-Square Error for Estimation in Model Mismatch

Paper and Code

May 14, 2023

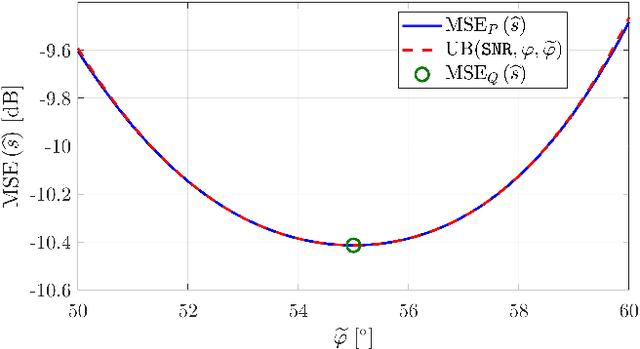

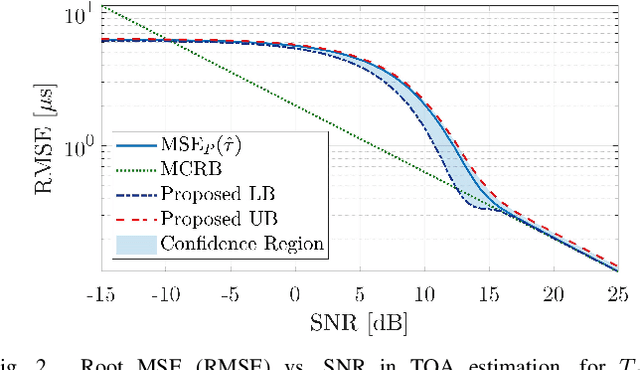

A bilateral (i.e., upper and lower) bound on the mean-square error (MSE) under a general model mismatch is developed. The bound, which is derived from the variational representation of the chi-square divergence, applies in both the Bayesian and non-Bayesian frameworks, to biased and unbiased estimators alike. Unlike classical MSE bounds, which depend only on the model, our bound is also estimator-dependent and can therefore be used to characterize the MSE of a specific estimator. The proposed bounding technique has a variety of applications, one of which is proving the consistency of estimators for a class of models. Furthermore, it provides insight into why certain estimators work well under general model mismatch conditions.
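The abstract does not state the bound itself, so the following is only a minimal sketch of the standard inequality behind the variational representation of the chi-square divergence, namely (E_P[g] - E_Q[g])^2 <= chi^2(P||Q) * Var_Q(g), which yields a two-sided interval for E_P[g]. It is not the paper's bound. The distributions `p` and `q`, the function values `g`, and the helper `mean_shift_bounds` are all hypothetical and chosen for illustration.

```python
def chi_square_divergence(p, q):
    """chi^2(P||Q) = sum_x (p(x) - q(x))^2 / q(x), for discrete P, Q with q(x) > 0."""
    return sum((pi - qi) ** 2 / qi for pi, qi in zip(p, q))


def mean(values, probs):
    """Expectation of a function given by `values` under the pmf `probs`."""
    return sum(v * w for v, w in zip(values, probs))


def variance(values, probs):
    """Variance of a function given by `values` under the pmf `probs`."""
    m = mean(values, probs)
    return sum(w * (v - m) ** 2 for v, w in zip(values, probs))


def mean_shift_bounds(g, p, q):
    """Two-sided bound on E_P[g], computed from moments of g under Q only.

    Uses (E_P[g] - E_Q[g])^2 <= chi^2(P||Q) * Var_Q(g).
    """
    radius = (chi_square_divergence(p, q) * variance(g, q)) ** 0.5
    center = mean(g, q)
    return center - radius, center + radius


# Hypothetical four-point example (values are assumptions, not from the paper).
q = [0.25, 0.25, 0.25, 0.25]  # one model
p = [0.40, 0.30, 0.20, 0.10]  # the mismatched model
g = [0.0, 1.0, 4.0, 9.0]      # e.g. a squared error evaluated at each outcome

lower, upper = mean_shift_bounds(g, p, q)
true_value = mean(g, p)
print(f"{lower:.3f} <= {true_value:.3f} <= {upper:.3f}")
```

Because the interval depends on the particular function `g` (here standing in for an estimator's squared error), it is estimator-dependent in the sense the abstract describes. The inequality is tight when `g` is the likelihood ratio p(x)/q(x).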
