Alejandro Lancho

Learning to Separate RF Signals Under Uncertainty: Detect-Then-Separate vs. Unified Joint Models

Feb 04, 2026

Abstract:The increasingly crowded radio frequency (RF) spectrum forces communication signals to coexist, creating heterogeneous interferers whose structure often departs from Gaussian models. Recovering the interference-contaminated signal of interest in such settings is a central challenge, especially in single-channel RF processing. Existing data-driven methods often assume that the interference type is known, yielding ensembles of specialized models that scale poorly with the number of interferers. We show that detect-then-separate (DTS) strategies admit an analytical justification: within a Gaussian mixture framework, a plug-in maximum a posteriori detector followed by type-conditioned optimal estimation achieves asymptotic minimum mean-square error optimality under a mild temporal-diversity condition. This makes DTS a principled benchmark, but its reliance on multiple type-specific models limits scalability. Motivated by this, we propose a unified joint model (UJM), in which a single deep neural architecture learns to jointly detect and separate when applied directly to the received signal. Using tailored UNet architectures for baseband (complex-valued) RF signals, we compare DTS and UJM on synthetic and recorded interference types, showing that a capacity-matched UJM can match oracle-aided DTS performance across diverse signal-to-interference-and-noise ratios, interference types, and constellation orders, including mismatched training and testing type-uncertainty proportions. These findings highlight UJM as a scalable and practical alternative to DTS, while opening new directions for unified separation under broader regimes.

A Foundation Model for Patient Behavior Monitoring and Suicide Detection

Mar 19, 2025

Abstract:Foundation models (FMs) have achieved remarkable success across various domains, yet their adoption in healthcare remains limited. While significant advances have been made in medical imaging, genetic biomarkers, and time series from electronic health records, the potential of FMs for patient behavior monitoring through wearable devices remains underexplored. These datasets are inherently heterogeneous, multisource, and often exhibit high rates of missing data, posing unique challenges. This paper introduces a novel FM based on a modified vector quantized variational autoencoder (VQ-VAE), specifically designed to process real-world data from wearable devices. We demonstrate that our pretrained FM, trained on a broad cohort of psychiatric patients, performs downstream tasks via its latent representation without fine-tuning on a held-out cohort of suicidal patients. To illustrate this, we develop a probabilistic change-point detection algorithm for suicide detection and demonstrate the FM's effectiveness in predicting emotional states. Our results show that the discrete latent structure of the VQ-VAE outperforms a state-of-the-art Informer architecture in unsupervised suicide detection, while matching its performance in supervised emotion prediction when the latent dimensionality is increased, though at the cost of reduced unsupervised accuracy. This trade-off highlights the need for future FMs to integrate hybrid discrete-continuous structures for balanced performance across tasks.

RF Challenge: The Data-Driven Radio Frequency Signal Separation Challenge

Sep 13, 2024

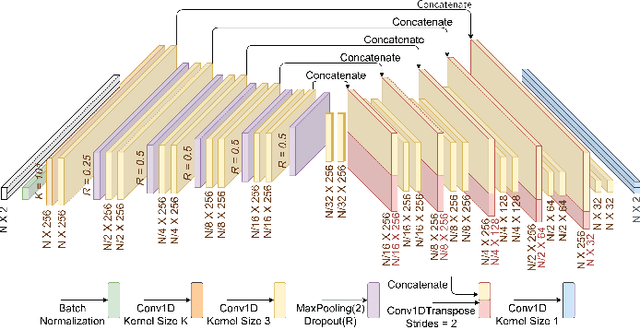

Abstract:This paper addresses the critical problem of interference rejection in radio-frequency (RF) signals using a novel, data-driven approach that leverages state-of-the-art AI models. Traditionally, interference rejection algorithms are manually tailored to specific types of interference. This work introduces a more scalable data-driven solution and contains the following contributions. First, we present an insightful signal model that serves as a foundation for developing and analyzing interference rejection algorithms. Second, we introduce the RF Challenge, a publicly available dataset featuring diverse RF signals along with code templates, which facilitates data-driven analysis of RF signal problems. Third, we propose novel AI-based rejection algorithms, specifically architectures like UNet and WaveNet, and evaluate their performance across eight different signal mixture types. These models demonstrate superior performance, exceeding traditional methods like matched filtering and linear minimum mean square error estimation by up to two orders of magnitude in bit-error rate. Fourth, we summarize the results from an open competition hosted at the 2024 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2024) based on the RF Challenge, highlighting the significant potential for continued advancements in this area. Our findings underscore the promise of deep learning algorithms in mitigating interference, offering a strong foundation for future research.

Score-based Source Separation with Applications to Digital Communication Signals

Jun 26, 2023

Abstract:We propose a new method for separating superimposed sources using diffusion-based generative models. Our method relies only on separately trained statistical priors of independent sources to establish a new objective function guided by maximum a posteriori estimation with an $\alpha$-posterior, across multiple levels of Gaussian smoothing. Motivated by applications in radio-frequency (RF) systems, we are interested in sources with underlying discrete nature and the recovery of encoded bits from a signal of interest, as measured by the bit error rate (BER). Experimental results with RF mixtures demonstrate that our method results in a BER reduction of 95% over classical and existing learning-based methods. Our analysis demonstrates that our proposed method yields solutions that asymptotically approach the modes of an underlying discrete distribution. Furthermore, our method can be viewed as a multi-source extension to the recently proposed score distillation sampling scheme, shedding additional light on its use beyond conditional sampling.

A Bilateral Bound on the Mean-Square Error for Estimation in Model Mismatch

May 14, 2023

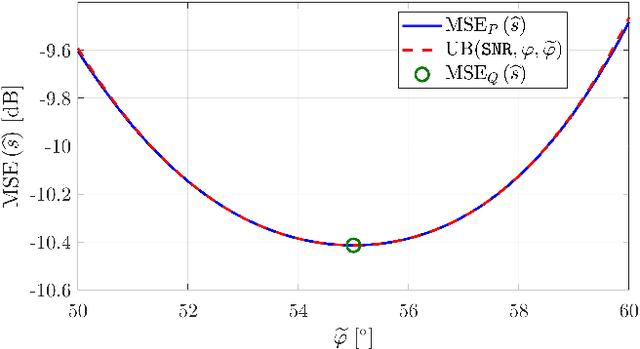

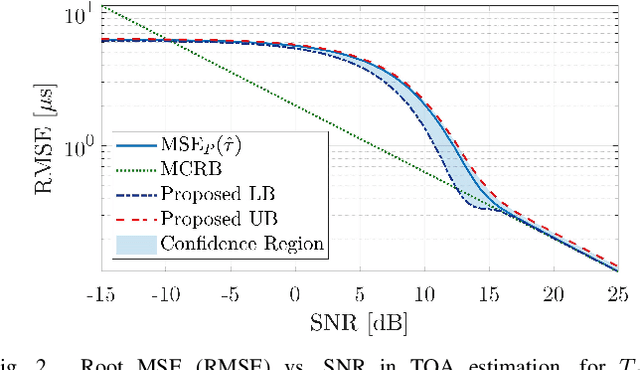

Abstract:A bilateral (i.e., upper and lower) bound on the mean-square error under a general model mismatch is developed. The bound, which is derived from the variational representation of the chi-square divergence, is applicable in the Bayesian and non-Bayesian frameworks to biased and unbiased estimators. Unlike other classical MSE bounds that depend only on the model, our bound is also estimator-dependent. Thus, it is applicable as a tool for characterizing the MSE of a specific estimator. The proposed bounding technique has a variety of applications, one of which is a tool for proving the consistency of estimators for a class of models. Furthermore, it provides insight as to why certain estimators work well under general model mismatch conditions.

On Neural Architectures for Deep Learning-based Source Separation of Co-Channel OFDM Signals

Mar 15, 2023

Abstract:We study the single-channel source separation problem involving orthogonal frequency-division multiplexing (OFDM) signals, which are ubiquitous in many modern-day digital communication systems. Related efforts have been pursued in monaural source separation, where state-of-the-art neural architectures have been adopted to train an end-to-end separator for audio signals (as 1-dimensional time series). In this work, through a prototype problem based on the OFDM source model, we assess -- and question -- the efficacy of using audio-oriented neural architectures in separating signals based on features pertinent to communication waveforms. Perhaps surprisingly, we demonstrate that in some configurations, where perfect separation is theoretically attainable, these audio-oriented neural architectures perform poorly in separating co-channel OFDM waveforms. Yet, we propose critical domain-informed modifications to the network parameterization, based on insights from OFDM structures, that can confer about 30 dB improvement in performance.

Data-Driven Blind Synchronization and Interference Rejection for Digital Communication Signals

Sep 11, 2022

Abstract:We study the potential of data-driven deep learning methods for separation of two communication signals from an observation of their mixture. In particular, we assume knowledge on the generation process of one of the signals, dubbed signal of interest (SOI), and no knowledge on the generation process of the second signal, referred to as interference. This form of the single-channel source separation problem is also referred to as interference rejection. We show that capturing high-resolution temporal structures (nonstationarities), which enables accurate synchronization to both the SOI and the interference, leads to substantial performance gains. With this key insight, we propose a domain-informed neural network (NN) design that is able to improve upon both "off-the-shelf" NNs and classical detection and interference rejection methods, as demonstrated in our simulations. Our findings highlight the key role communication-specific domain knowledge plays in the development of data-driven approaches that hold the promise of unprecedented gains.

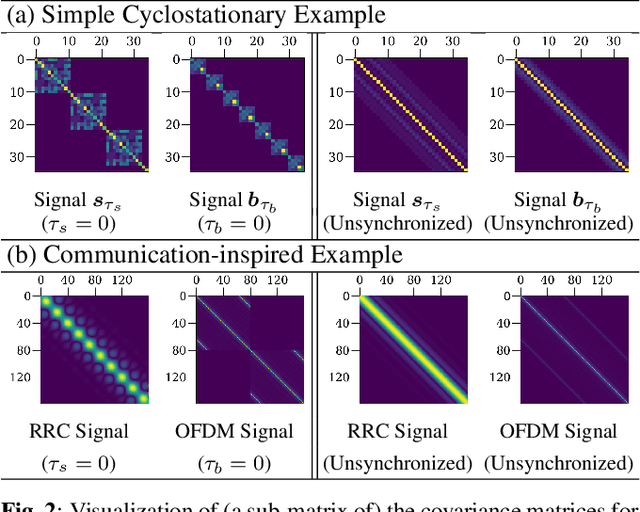

Exploiting Temporal Structures of Cyclostationary Signals for Data-Driven Single-Channel Source Separation

Aug 22, 2022

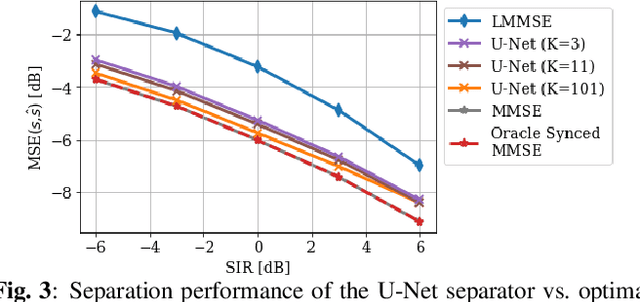

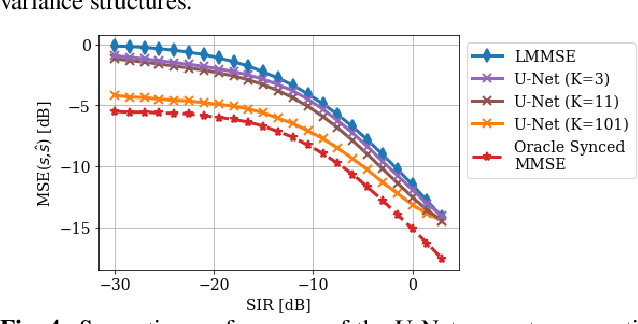

Abstract:We study the problem of single-channel source separation (SCSS), and focus on cyclostationary signals, which are particularly suitable in a variety of application domains. Unlike classical SCSS approaches, we consider a setting where only examples of the sources are available rather than their models, inspiring a data-driven approach. For source models with underlying cyclostationary Gaussian constituents, we establish a lower bound on the attainable mean squared error (MSE) for any separation method, model-based or data-driven. Our analysis further reveals the operation for optimal separation and the associated implementation challenges. As a computationally attractive alternative, we propose a deep learning approach using a U-Net architecture, which is competitive with the minimum MSE estimator. We demonstrate in simulation that, with suitable domain-informed architectural choices, our U-Net method can approach the optimal performance with substantially reduced computational burden.

Cell-Free Massive MIMO for URLLC: A Finite-Blocklength Analysis

Jul 02, 2022

Abstract:We present a general framework for the characterization of the packet error probability achievable in cell-free Massive multiple-input multiple-output (MIMO) architectures deployed to support ultra-reliable low-latency (URLLC) traffic. The framework is general and encompasses both centralized and distributed cell-free architectures, arbitrary fading channels and channel estimation algorithms at both network and user-equipment (UE) sides, as well as arbitrary combining and precoding schemes. The framework is used to perform numerical experiments that clearly show the superiority of cell-free architectures compared to cellular architectures in supporting URLLC traffic in uplink and downlink. Also, they provide the following novel insights into the optimal design of cell-free architectures for URLLC: i) minimum mean square error (MMSE) spatial processing must be used to achieve the URLLC targets; ii) for a given total number of antennas per coverage area, centralized cell-free solutions involving single-antenna access points (APs) offer the best performance in the uplink, thereby highlighting the importance of reducing the average distance between APs and UEs in the URLLC regime; iii) this observation applies also to the downlink, provided that the APs transmit precoded pilots to allow the UEs to estimate accurately the precoded channel.

Cell-free Massive MIMO with Short Packets

Jul 22, 2021

Abstract:In this paper, we adapt to cell-free Massive MIMO (multiple-input multiple-output) the finite-blocklength framework introduced by Östman et al. (2020) for the characterization of the packet error probability achievable with Massive MIMO, in the ultra-reliable low-latency communications (URLLC) regime. The framework considered in this paper encompasses a cell-free architecture with imperfect channel-state information, and arbitrary linear signal processing performed at a central-processing unit connected to the access points via fronthaul links. By means of numerical simulations, we show that, to achieve the high reliability requirements in URLLC, MMSE signal processing must be used. Comparisons are also made with both small-cell and Massive MIMO cellular networks. Both require a much larger number of antennas to achieve comparable performance to cell-free Massive MIMO.
