Amaury Lendasse

CIS

Extreme AutoML: Analysis of Classification, Regression, and NLP Performance

Dec 09, 2024

Abstract:Utilizing machine learning techniques has always required choosing hyperparameters. This is true whether one uses a classical technique such as a KNN or very modern neural networks such as Deep Learning. Though in many applications hyperparameters are chosen by hand, automated methods have become increasingly common. These automated methods have become collectively known as automated machine learning, or AutoML. Several automated selection algorithms have shown similar or improved performance over state-of-the-art methods. This breakthrough has led to the development of cloud-based services like Google AutoML, which is based on Deep Learning and is widely considered to be the industry leader in AutoML services. Extreme Learning Machines (ELMs) use a fundamentally different type of neural architecture, producing better results at a significantly discounted computational cost. We benchmark the Extreme AutoML technology against Google's AutoML using several popular classification data sets from the University of California at Irvine's (UCI) repository, and several other data sets, observing significant advantages for Extreme AutoML in accuracy, Jaccard Indices, the variance of Jaccard Indices across classes (i.e. class variance) and training times.
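The abstract above names per-class Jaccard indices and their variance across classes as evaluation metrics. As a rough illustration only, the sketch below computes both for a toy label set. The function name `per_class_jaccard`, the example labels, and the choice of population variance (rather than sample variance) are assumptions made for this sketch and are not taken from the paper.

```python
from statistics import pvariance


def per_class_jaccard(y_true, y_pred):
    """Return {class: |true ∩ pred| / |true ∪ pred|}, computed over sample indices."""
    scores = {}
    for c in sorted(set(y_true) | set(y_pred)):
        true_idx = {i for i, y in enumerate(y_true) if y == c}
        pred_idx = {i for i, y in enumerate(y_pred) if y == c}
        # The union is never empty because c occurs in at least one label list.
        scores[c] = len(true_idx & pred_idx) / len(true_idx | pred_idx)
    return scores


# Hypothetical toy labels, used only to exercise the function.
y_true = ["a", "a", "b", "b", "c", "c"]
y_pred = ["a", "b", "b", "b", "c", "a"]

scores = per_class_jaccard(y_true, y_pred)
# Assumption: "class variance" is taken here as the population variance of the scores.
class_variance = pvariance(list(scores.values()))
print(scores, class_variance)
```

A low class variance means the classifier performs about equally well on every class, which plain accuracy can hide when classes are imbalanced.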

Mislabel Detection of Finnish Publication Ranks

Dec 19, 2019

Abstract:The paper proposes to analyze a data set of Finnish ranks of academic publication channels with an Extreme Learning Machine (ELM). The purpose is to introduce and test a recently proposed ELM-based mislabel detection approach with a rich set of features characterizing a publication channel. We will compare the architecture, accuracy, and, especially, the set of detected mislabels of the ELM-based approach to the corresponding results in the reference paper.

Per-sample Prediction Intervals for Extreme Learning Machines

Dec 19, 2019

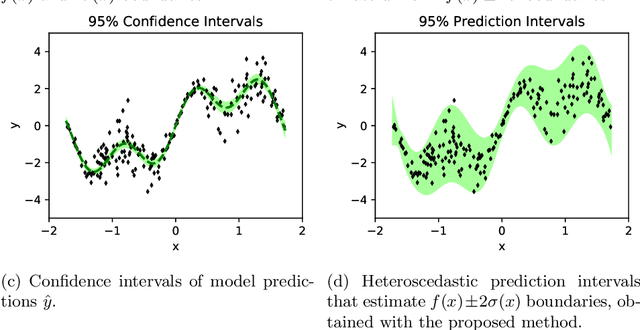

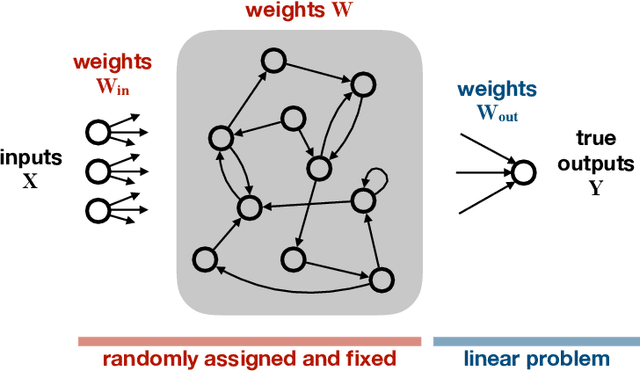

Abstract:Prediction intervals in supervised Machine Learning bound the region where the true outputs of new samples may fall. They are necessary in the task of separating reliable predictions of a trained model from near random guesses, minimizing the rate of False Positives, and other problem-specific tasks in applied Machine Learning. Many real problems have heteroscedastic stochastic outputs, which explains the need for input-dependent prediction intervals. This paper proposes to estimate the input-dependent prediction intervals by a separate Extreme Learning Machine model, using the variance of its predictions as a correction term accounting for the model uncertainty. The variance is estimated from the model's linear output layer with a weighted Jackknife method. The methodology is very fast, robust to heteroscedastic outputs, and handles both extremely large datasets and insufficient amounts of training data.

Extreme Learning Tree

Dec 19, 2019

Abstract:The paper proposes a new variant of a decision tree, called an Extreme Learning Tree. It consists of an extremely random tree with non-linear data transformation, and a linear observer that provides predictions based on the leaf index where the data samples fall. The proposed method outperforms linear models on a benchmark dataset, and may be a building block for a future variant of Random Forest.
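The structure described above (a random non-linear transformation, a random tree, and a linear observer on the leaf index) can be sketched roughly as follows. This is a simplified illustration, not the authors' algorithm: it assumes an oblivious tree (one random feature and threshold per level, shared by all nodes at that level), a random `tanh` projection as the non-linear transformation, and ridge regression as the linear observer. All function names and parameter values are hypothetical.

```python
import numpy as np


def leaf_one_hot(H, feats, thresholds):
    """Map each sample to a leaf index and return one-hot leaf indicators."""
    bits = (H[:, feats] > thresholds).astype(int)   # one split decision per level
    leaf = bits @ (2 ** np.arange(len(feats)))      # binary code -> leaf index
    return np.eye(2 ** len(feats))[leaf]


def fit_tree_observer(X, y, depth=4, n_hidden=8, ridge=1e-3, seed=0):
    rng = np.random.default_rng(seed)
    # Assumed non-linear transformation: random tanh projection of the inputs.
    W = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    H = np.tanh(X @ W + b)
    # Random splits: a random feature and a random threshold within its range.
    feats = rng.integers(0, n_hidden, size=depth)
    thresholds = rng.uniform(H[:, feats].min(axis=0), H[:, feats].max(axis=0))
    # Linear observer: ridge regression on the one-hot leaf indicators.
    Z = leaf_one_hot(H, feats, thresholds)
    beta = np.linalg.solve(Z.T @ Z + ridge * np.eye(Z.shape[1]), Z.T @ y)
    return W, b, feats, thresholds, beta


def predict_tree_observer(model, X):
    W, b, feats, thresholds, beta = model
    H = np.tanh(X @ W + b)
    return leaf_one_hot(H, feats, thresholds) @ beta


# Toy regression problem, used only to exercise the sketch.
X = np.linspace(-3, 3, 200).reshape(-1, 1)
y = np.sin(X).ravel()
model = fit_tree_observer(X, y)
mse = float(np.mean((predict_tree_observer(model, X) - y) ** 2))
print(mse, float(np.var(y)))
```

No split is optimized against the targets; only the observer's output weights are fitted, which keeps training to a single linear solve.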

Spiking Networks for Improved Cognitive Abilities of Edge Computing Devices

Dec 19, 2019

Abstract:This concept paper highlights a recently opened opportunity for large scale analytical algorithms to be trained directly on edge devices. Such an approach is a response to the arising need to process data generated by a natural person (a human being), also known as personal data. Spiking Neural networks are the core method behind it: suitable for low-latency, energy-constrained hardware, enabling local training or re-training, while not taking advantage of scalability available in the Cloud.

A Web Page Classifier Library Based on Random Image Content Analysis Using Deep Learning

Dec 18, 2019

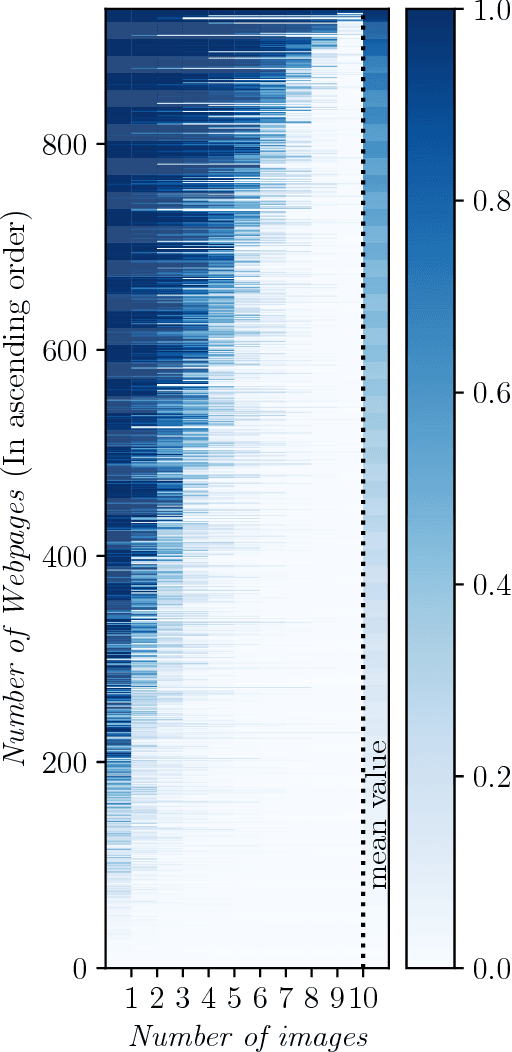

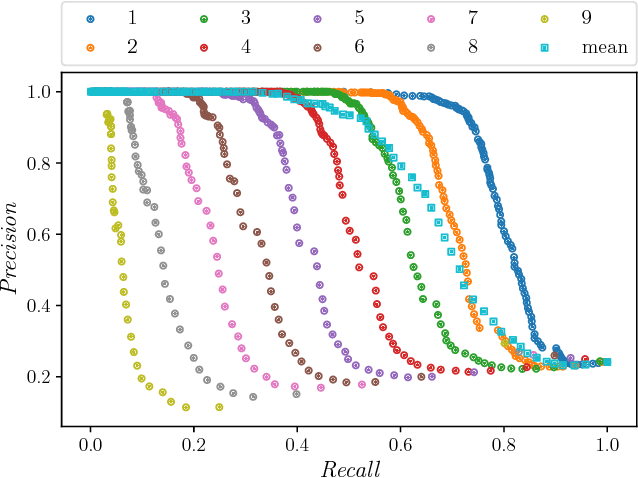

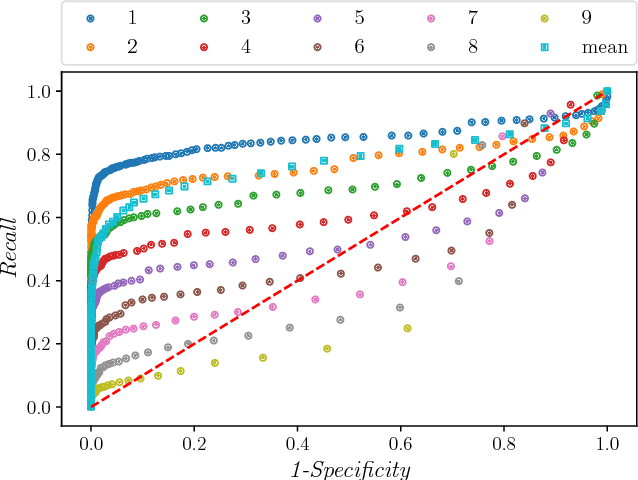

Abstract:In this paper, we present a methodology and the corresponding Python library for the classification of webpages. Our method retrieves a fixed number of images from a given webpage, and based on them classifies the webpage into a set of established classes with a given probability. The library trains a random forest model built upon the features extracted from images by a pre-trained deep network. The implementation is tested by recognizing weapon class webpages in a curated list of 3859 websites. The results show that the best method of classifying a webpage into the studied classes is to assign the class according to the maximum probability of any image belonging to this (weapon) class being above the threshold, across all the retrieved images. Further research explores the possibilities for the developed methodology to also apply in image classification for healthcare applications.
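The decision rule described above (assign the class when the maximum per-image probability across the retrieved images exceeds a threshold) can be illustrated with a few lines of plain Python. This is a minimal sketch, not the library's API: the function name `classify_page`, the threshold values, and the example probabilities are all hypothetical, and the per-image probabilities are assumed to come from an already trained image classifier.

```python
def classify_page(image_probs, threshold=0.5):
    """Flag a page when the highest per-image class probability exceeds the threshold.

    image_probs: per-image probabilities of the target class, one per retrieved image.
    Returns (is_target_class, best_probability).
    """
    if not image_probs:
        # Assumption: a page with no retrievable images is treated as negative.
        return False, 0.0
    best = max(image_probs)
    return best > threshold, best


# Hypothetical per-image probabilities for one page.
is_weapon_page, score = classify_page([0.05, 0.12, 0.91, 0.30], threshold=0.8)
print(is_weapon_page, score)
```

Taking the maximum rather than the mean means a single confident image is enough to flag the page, so the threshold alone controls the trade-off between missed pages and false alarms.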

Incremental ELMVIS for unsupervised learning

Dec 18, 2019

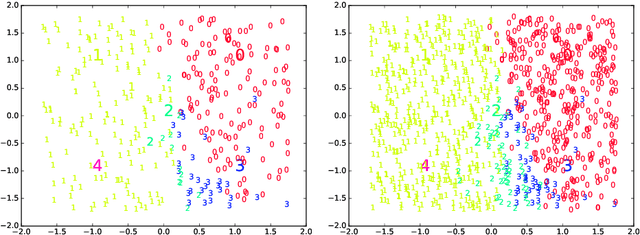

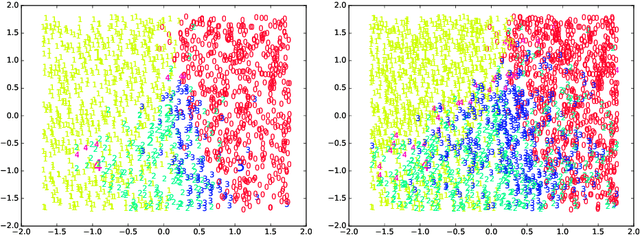

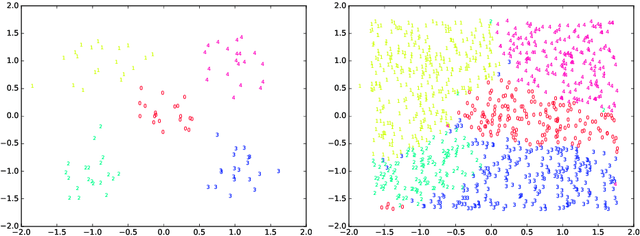

Abstract:An incremental version of the ELMVIS+ method is proposed in this paper. It iteratively selects a few best fitting data samples from a large pool, and adds them to the model. The method keeps the high speed of ELMVIS+ while allowing for much larger possible sample pools due to lower memory requirements. The extension is useful for reaching a better local optimum with greedy optimization of ELMVIS, and the data structure can be specified in semi-supervised optimization. The major new application of incremental ELMVIS is not visualization, but general dataset processing. The method is capable of learning dependencies from non-organized unsupervised data -- either reconstructing a shuffled dataset, or learning dependencies in complex high-dimensional space. The results are interesting and promising, although there is room for improvement.

Deep Spectral Descriptors: Learning the point-wise correspondence metric via Siamese deep neural networks

Jun 25, 2018

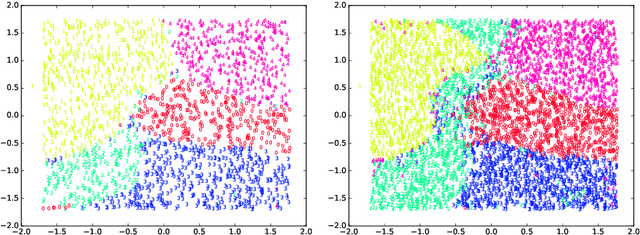

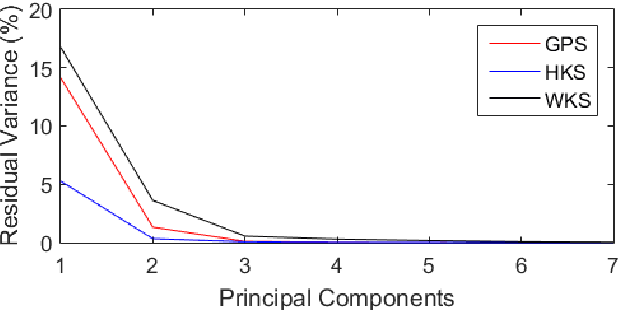

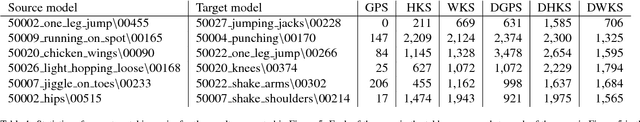

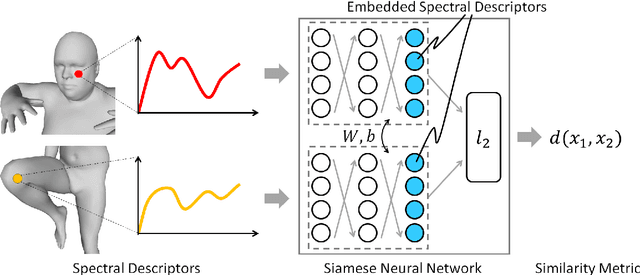

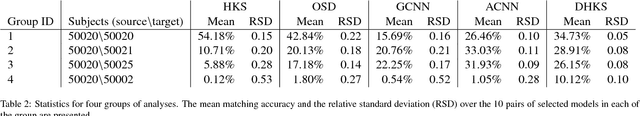

Abstract:A robust and informative local shape descriptor plays an important role in mesh registration. In this regard, spectral descriptors that are based on the spectrum of the Laplace-Beltrami operator have gained a spotlight among researchers over the last decade due to their desirable properties, such as isometry invariance. Despite this, spectral descriptors often fail to give a correct similarity measure for non-isometric cases where the metric distortion between the models is large. Hence, they are in general not suitable for registration problems, except for the special cases when the models are near-isometric. In this paper, we investigate a way to develop shape descriptors for non-isometric registration tasks by embedding the spectral shape descriptors into a different metric space where the Euclidean distance between the elements directly indicates the geometric dissimilarity. We design and train a Siamese deep neural network to find such an embedding, where the embedded descriptors are promoted to rearrange based on the geometric similarity. We found that our approach can significantly enhance the performance of the conventional spectral descriptors for non-isometric registration tasks, and outperforms recent state-of-the-art methods reported in the literature.

HSR: L1/2 Regularized Sparse Representation for Fast Face Recognition using Hierarchical Feature Selection

Sep 23, 2014

Abstract:In this paper, we propose a novel method for fast face recognition called L1/2 Regularized Sparse Representation using Hierarchical Feature Selection (HSR). By employing hierarchical feature selection, we can compress the scale and dimension of the global dictionary, which directly reduces the computational cost of the sparse representation that our approach is rooted in. The feature selection consists of Gabor wavelets and an Extreme Learning Machine Auto-Encoder (ELM-AE), applied hierarchically. In the Gabor wavelet part, local features are extracted at multiple scales and orientations to form a Gabor-feature based image, which in turn improves the recognition rate. In addition, for occluded face images, the scale of the Gabor-feature based global dictionary can be compressed accordingly because redundancies exist in the Gabor-feature based occlusion dictionary. In the ELM-AE part, the dimension of the Gabor-feature based global dictionary can be compressed because high-dimensional face images can be rapidly represented by low-dimensional features. By introducing L1/2 regularization, our approach can produce a sparser and more robust representation compared to regularized Sparse Representation based Classification (SRC), which also helps reduce the computational cost of the sparse representation. In comparison with related work such as SRC and Gabor-feature based SRC (GSRC), experimental results on a variety of face databases demonstrate the great advantage of our method in computational cost. Moreover, we also achieve a comparable or even better recognition rate.

RMSE-ELM: Recursive Model based Selective Ensemble of Extreme Learning Machines for Robustness Improvement

Sep 23, 2014

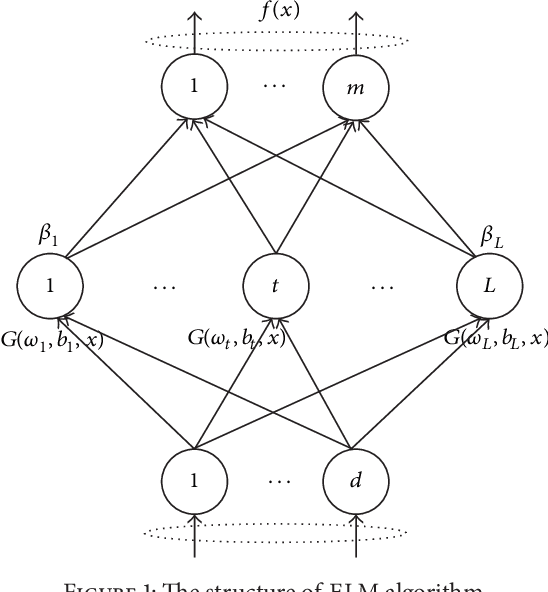

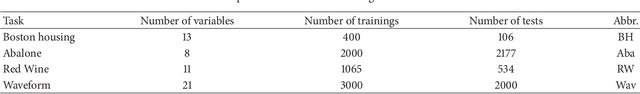

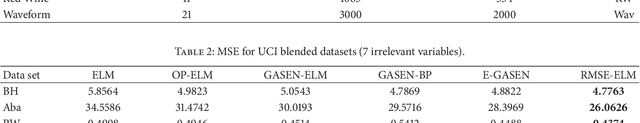

Abstract:Extreme learning machine (ELM), as an emerging branch of shallow networks, has shown excellent generalization and fast learning speed. However, for blended data, the robustness of ELM is weak because the weights and biases of its hidden nodes are set randomly. Moreover, noisy data exert a negative effect. To solve this problem, a new framework called RMSE-ELM is proposed in this paper. It is a two-layer recursive model. In the first layer, the framework trains many ELMs in different groups concurrently, then employs selective ensemble to pick out an optimal set of ELMs in each group, which can be merged into a large group of ELMs called the candidate pool. In the second layer, selective ensemble is recursively used on the candidate pool to acquire the final ensemble. In the experiments, we apply UCI blended datasets to confirm the robustness of our new approach in two key aspects (mean square error and standard deviation). The space complexity of our method is increased to some degree, but the results have shown that RMSE-ELM significantly improves robustness with slightly more computational time compared with representative methods (ELM, OP-ELM, GASEN-ELM, GASEN-BP and E-GASEN). It is a potential framework for solving the robustness issue of ELM for high-dimensional blended data in the future.
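For readers unfamiliar with the base model, the sketch below shows a plain single ELM of the kind the abstract above refers to: hidden-layer weights and biases are drawn at random and left untrained, and only the linear output weights are fitted by least squares. It does not implement the RMSE-ELM selective ensemble. The function names, the `tanh` activation, the hidden-layer size, and the toy data are assumptions made for this illustration.

```python
import numpy as np


def train_elm(X, y, n_hidden=50, seed=0):
    """Fit a basic ELM: random hidden layer, least-squares output weights."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))   # random input weights, never trained
    b = rng.normal(size=n_hidden)                 # random biases, never trained
    H = np.tanh(X @ W + b)                        # hidden-layer activations
    beta, *_ = np.linalg.lstsq(H, y, rcond=None)  # only the output layer is fitted
    return W, b, beta


def predict_elm(model, X):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta


# Toy regression problem, used only to exercise the sketch.
X = np.linspace(-3, 3, 200).reshape(-1, 1)
y = np.sin(X).ravel()
model = train_elm(X, y)
mse = float(np.mean((predict_elm(model, X) - y) ** 2))
print(mse)
```

Because the hidden layer depends on the random seed, two ELMs trained on the same data can behave differently, which is the source of variability that ensemble approaches such as the one described above aim to reduce.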
