Alireza Sedghi

Deep Information Theoretic Registration

Dec 31, 2018

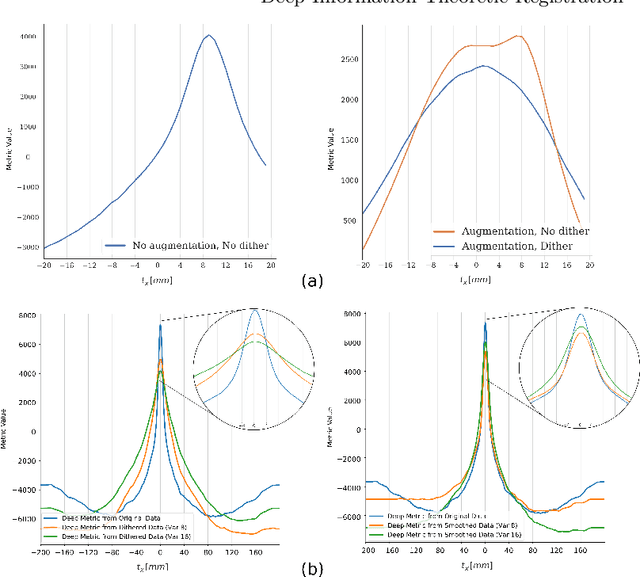

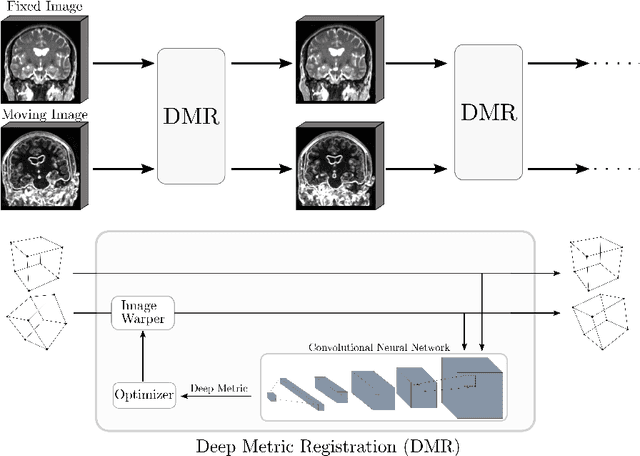

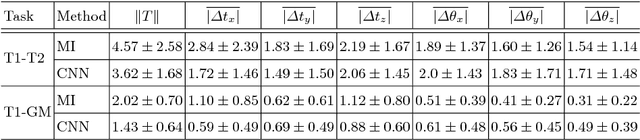

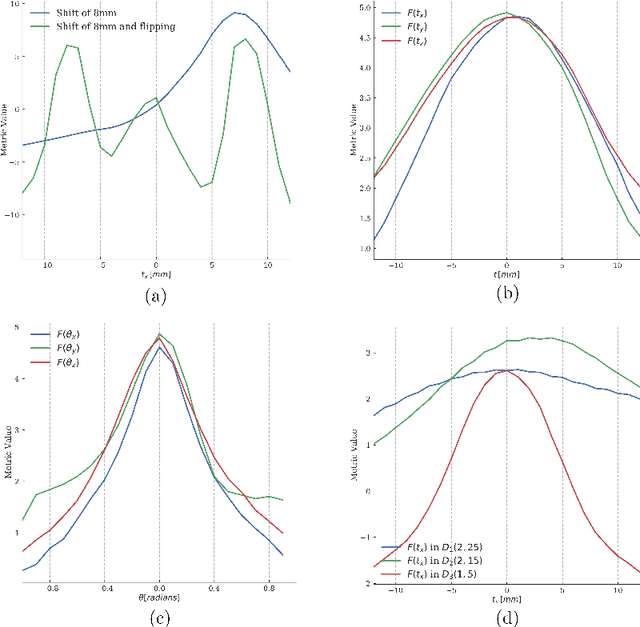

Abstract: This paper establishes an information theoretic framework for deep metric based image registration techniques. We show an exact equivalence between maximum profile likelihood and minimization of joint entropy, an important early information theoretic registration method. We further derive deep classifier-based metrics that can be used with iterated maximum likelihood to achieve Deep Information Theoretic Registration on patches rather than pixels. This alleviates a major shortcoming of previous information theoretic registration approaches, namely the implicit pixel-wise independence assumptions. Our proposed approach does not require well-registered training data; this brings previous fully supervised deep metric registration approaches to the realm of weak supervision. We evaluate our approach on several image registration tasks and show significantly better performance compared to mutual information, specifically when images have substantially different contrasts. This work enables general-purpose registration in applications where current methods are not successful.
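For readers unfamiliar with the classical metrics this abstract refers to, the following is a minimal sketch of pixel-wise joint entropy and mutual information computed from a joint histogram. It is not the deep, patch-based metric proposed in the paper; the function names and the toy 1D "images" are illustrative assumptions only.

```python
import math
from collections import Counter

def entropy(counts, total):
    """Shannon entropy (in nats) of an empirical distribution given by counts."""
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)

def joint_entropy(a, b):
    """Joint entropy of paired discrete intensities a[i], b[i]."""
    pairs = Counter(zip(a, b))  # joint histogram over intensity pairs
    return entropy(pairs.values(), len(a))

def mutual_information(a, b):
    """I(A; B) = H(A) + H(B) - H(A, B), estimated pixel-wise."""
    n = len(a)
    h_a = entropy(Counter(a).values(), n)
    h_b = entropy(Counter(b).values(), n)
    return h_a + h_b - joint_entropy(a, b)

# Toy 1D "images" (hypothetical data): same structure, different contrast.
fixed = [0, 0, 1, 1, 2, 2, 3, 3]
moving_aligned = [5, 5, 7, 7, 9, 9, 4, 4]
# Circular shift by one pixel simulates a misalignment.
moving_shifted = moving_aligned[1:] + moving_aligned[:1]

print(joint_entropy(fixed, moving_aligned), joint_entropy(fixed, moving_shifted))
print(mutual_information(fixed, moving_aligned), mutual_information(fixed, moving_shifted))
```

In this toy case the aligned pair has the lower joint entropy and the higher mutual information, even though the intensity mappings differ. Note that each pixel is treated independently here, which is the pixel-wise independence assumption the abstract identifies as a shortcoming.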

Semi-Supervised Deep Metrics for Image Registration

Apr 04, 2018

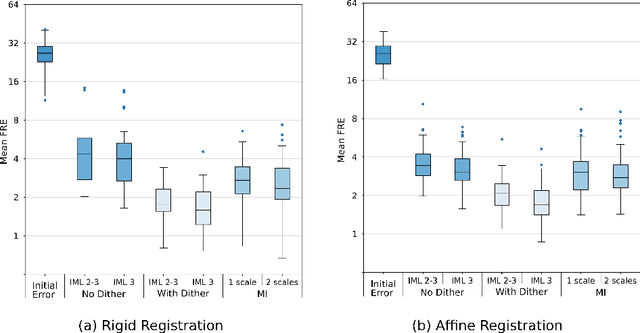

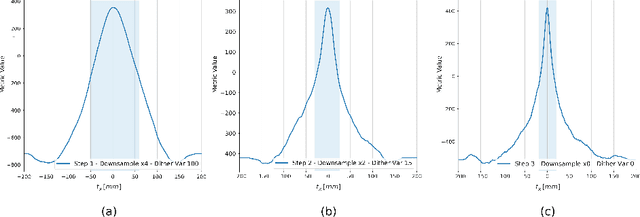

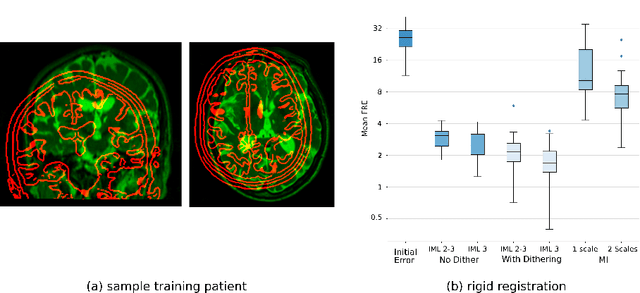

Abstract: Deep metrics have been shown to be effective as similarity measures in multi-modal image registration; however, the metrics are currently constructed from aligned image pairs in the training data. In this paper, we propose a strategy for learning such metrics from roughly aligned training data. Symmetrizing the data corrects bias in the metric that results from misalignment in the data (at the expense of increased variance), while random perturbations to the data, i.e. dithering, ensure that the metric has a single mode and is amenable to registration by optimization. Evaluation is performed on the task of registration on separate unseen test image pairs. The results demonstrate the feasibility of learning a useful deep metric from substantially misaligned training data; in some cases the results are significantly better than those obtained with Mutual Information. Data augmentation via dithering is, therefore, an effective strategy for discharging the need for well-aligned training data; this brings deep metric registration from the realm of supervised to semi-supervised machine learning.

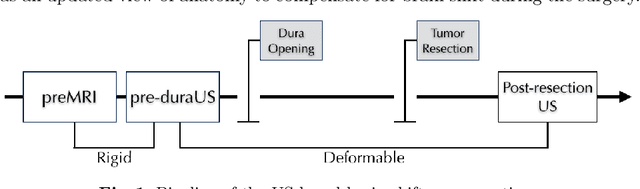

A Feature-Driven Active Framework for Ultrasound-Based Brain Shift Compensation

Mar 20, 2018

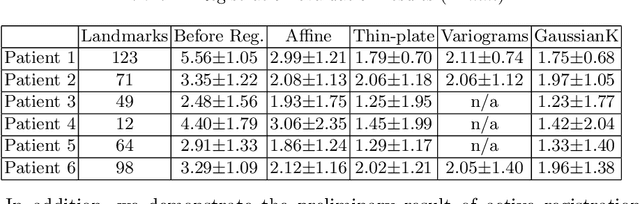

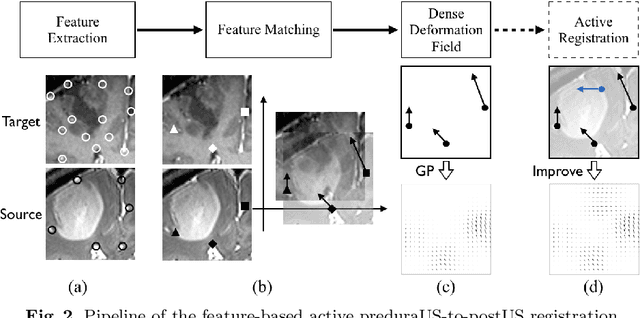

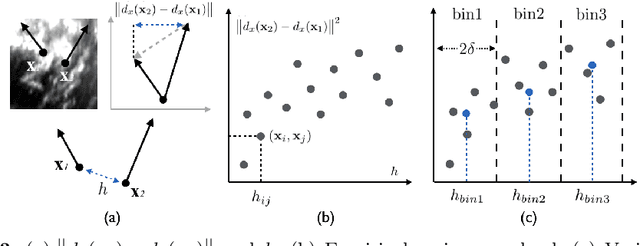

Abstract: A reliable Ultrasound (US)-to-US registration method to compensate for brain shift would substantially improve Image-Guided Neurological Surgery. Developing such a registration method is very challenging, due to factors such as missing correspondence in images, the complexity of brain pathology and the demand for fast computation. We propose a novel feature-driven active framework. Here, landmarks and their displacement are first estimated from a pair of US images using corresponding local image features. Subsequently, a Gaussian Process (GP) model is used to interpolate a dense deformation field from the sparse landmarks. Kernels of the GP are estimated by using variograms and a discrete grid search method. If necessary, the user can actively add new landmarks based on the image context and visualization of the uncertainty measure provided by the GP to further improve the result. We retrospectively demonstrate our registration framework as a robust and accurate brain shift compensation solution on clinical data acquired during neurosurgery.
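The GP interpolation step described above can be illustrated with a small sketch: given sparse landmark positions and their displacements, a zero-mean GP yields a displacement estimate and an uncertainty at any query point. Everything specific here is an assumption made for illustration: the squared-exponential kernel, the fixed `length_scale` and `noise` values, the 1D setting, and the function names. The paper estimates its kernels from variograms and a grid search, which this sketch does not attempt.

```python
import math

def rbf(x1, x2, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel (an assumed choice, not the paper's)."""
    return variance * math.exp(-0.5 * ((x1 - x2) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def gp_predict(xs, ds, query, length_scale=1.0, noise=1e-6):
    """Posterior mean and variance of the displacement at `query`,
    given landmark positions `xs` and displacements `ds`."""
    K = [[rbf(a, b, length_scale) + (noise if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    k_star = [rbf(query, a, length_scale) for a in xs]
    alpha = solve(K, ds)       # K^{-1} d
    weights = solve(K, k_star)  # K^{-1} k_*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = rbf(query, query, length_scale) - sum(
        k * w for k, w in zip(k_star, weights))
    return mean, max(var, 0.0)

# Hypothetical 1D landmarks (positions) and their estimated displacements.
landmarks = [0.0, 2.0, 5.0]
displacements = [1.0, 0.5, -0.3]

for q in (2.0, 3.5, 10.0):
    mean, var = gp_predict(landmarks, displacements, q)
    print(f"x={q}: displacement={mean:.3f}, variance={var:.3f}")
```

The variance is near zero at a landmark and grows toward the prior variance far from all landmarks. This is the kind of uncertainty measure that could guide a user in choosing where an additional landmark would help most.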
