Deep Information Theoretic Registration

Paper and Code

Dec 31, 2018

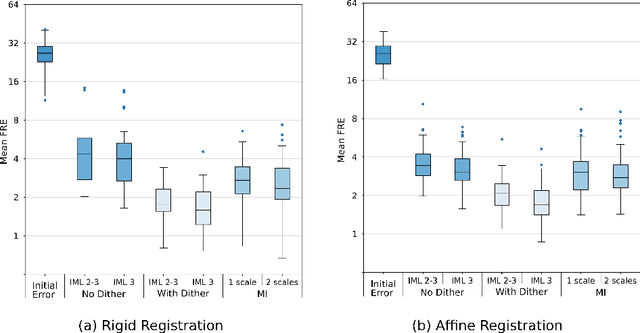

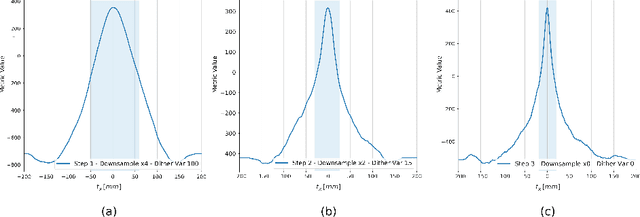

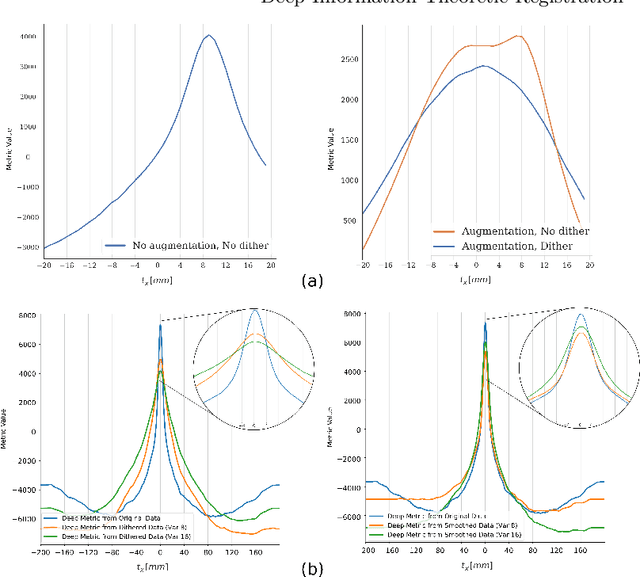

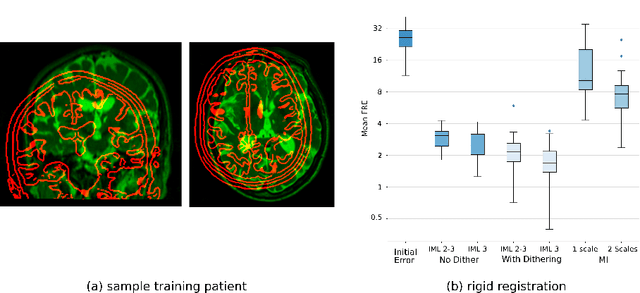

This paper establishes an information theoretic framework for deep metric based image registration techniques. We show an exact equivalence between maximum profile likelihood and minimization of joint entropy, an important early information theoretic registration method. We further derive deep classifier-based metrics that can be used with iterated maximum likelihood to achieve Deep Information Theoretic Registration on patches rather than pixels. This alleviates a major shortcoming of previous information theoretic registration approaches, namely the implicit pixel-wise independence assumptions. Our proposed approach does not require well-registered training data; this brings previous fully supervised deep metric registration approaches to the realm of weak supervision. We evaluate our approach on several image registration tasks and show significantly better performance compared to mutual information, specifically when images have substantially different contrasts. This work enables general-purpose registration in applications where current methods are not successful.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge