Ali Safa

Real-Time Human-Robot Interaction Intent Detection Using RGB-based Pose and Emotion Cues with Cross-Camera Model Generalization

Dec 18, 2025

Abstract: Service robots in public spaces require real-time understanding of human behavioral intentions for natural interaction. We present a practical multimodal framework for frame-accurate human-robot interaction intent detection that fuses camera-invariant 2D skeletal pose and facial emotion features extracted from monocular RGB video. Unlike prior methods requiring RGB-D sensors or GPU acceleration, our approach runs on resource-constrained embedded hardware (Raspberry Pi 5, CPU-only). To address the severe class imbalance in natural human-robot interaction datasets, we introduce a novel approach to synthesize temporally coherent pose-emotion-label sequences for data re-balancing called MINT-RVAE (Multimodal Recurrent Variational Autoencoder for Intent Sequence Generation). Comprehensive offline evaluations under cross-subject and cross-scene protocols demonstrate strong generalization performance, achieving frame- and sequence-level AUROC of 0.95. Crucially, we validate real-world generalization through cross-camera evaluation on the MIRA robot head, which employs a different onboard RGB sensor and operates in uncontrolled environments not represented in the training data. Despite this domain shift, the deployed system achieves 91% accuracy and 100% recall across 32 live interaction trials. The close correspondence between offline and deployed performance confirms the cross-sensor and cross-environment robustness of the proposed multimodal approach, highlighting its suitability for ubiquitous multimedia-enabled social robots.

MINT-RVAE: Multi-Cues Intention Prediction of Human-Robot Interaction using Human Pose and Emotion Information from RGB-only Camera Data

Sep 26, 2025

Abstract: Efficiently detecting human intent to interact with ubiquitous robots is crucial for effective human-robot interaction (HRI) and collaboration. Over the past decade, deep learning has gained traction in this field, with most existing approaches relying on multimodal inputs, such as RGB combined with depth (RGB-D), to classify time-sequence windows of sensory data as interactive or non-interactive. In contrast, we propose a novel RGB-only pipeline for predicting human interaction intent with frame-level precision, enabling faster robot responses and improved service quality. A key challenge in intent prediction is the class imbalance inherent in real-world HRI datasets, which can hinder the model's training and generalization. To address this, we introduce MINT-RVAE, a synthetic sequence generation method, along with new loss functions and training strategies that enhance generalization on out-of-sample data. Our approach achieves state-of-the-art performance (AUROC: 0.95), outperforming prior works (AUROC: 0.90-0.912), while requiring only RGB input and supporting precise frame onset prediction. Finally, to support future research, we openly release our new dataset with frame-level labeling of human interaction intent.

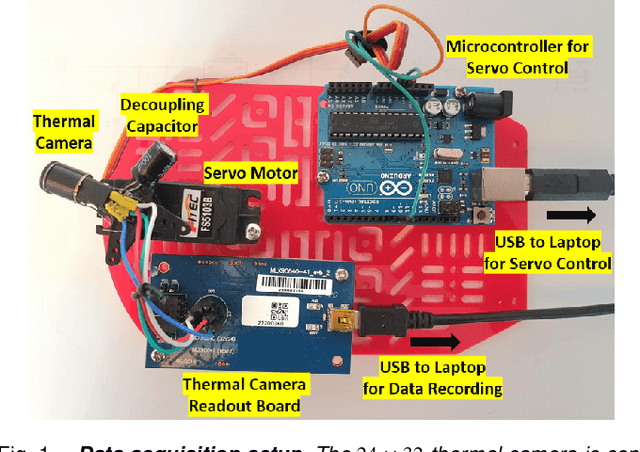

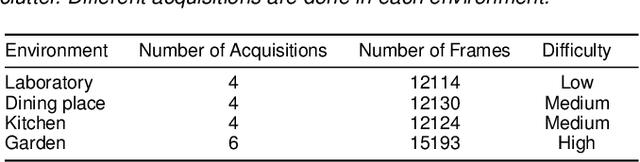

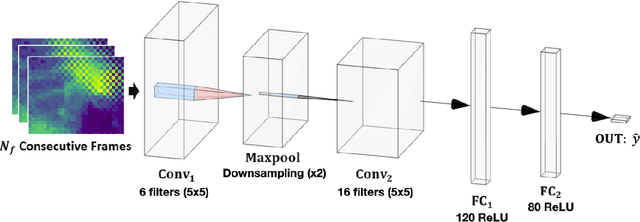

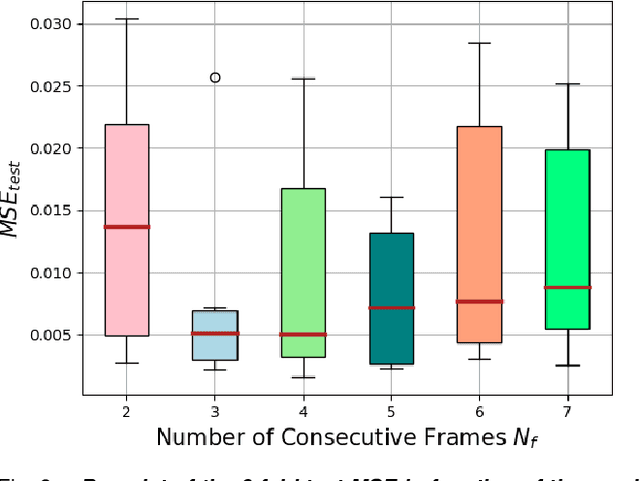

Deep Fusion of Ultra-Low-Resolution Thermal Camera and Gyroscope Data for Lighting-Robust and Compute-Efficient Rotational Odometry

Jun 14, 2025

Abstract: Accurate rotational odometry is crucial for autonomous robotic systems, particularly for small, power-constrained platforms such as drones and mobile robots. This study introduces thermal-gyro fusion, a novel sensor fusion approach that integrates ultra-low-resolution thermal imaging with gyroscope readings for rotational odometry. Unlike RGB cameras, thermal imaging is invariant to lighting conditions and, when fused with gyroscopic data, mitigates drift, a common limitation of inertial sensors. We first develop a multimodal data acquisition system to collect synchronized thermal and gyroscope data, along with rotational speed labels, across diverse environments. Subsequently, we design and train a lightweight Convolutional Neural Network (CNN) that fuses both modalities for rotational speed estimation. Our analysis demonstrates that thermal-gyro fusion enables a significant reduction in thermal camera resolution without significantly compromising accuracy, thereby improving computational efficiency and memory utilization. These advantages make our approach well-suited for real-time deployment in resource-constrained robotic systems. Finally, to facilitate further research, we publicly release our dataset as supplementary material.

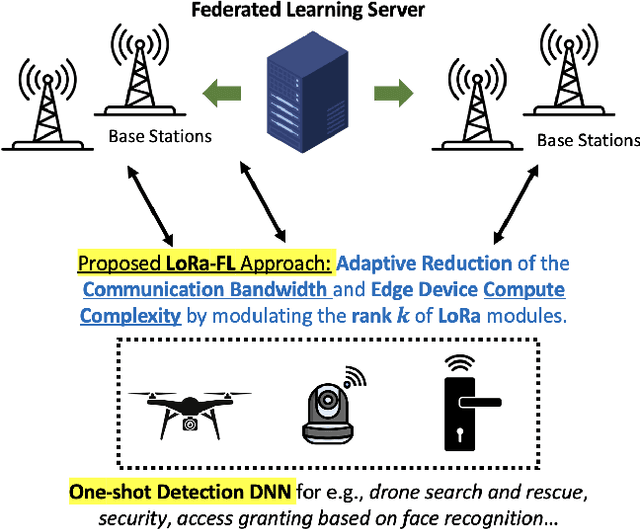

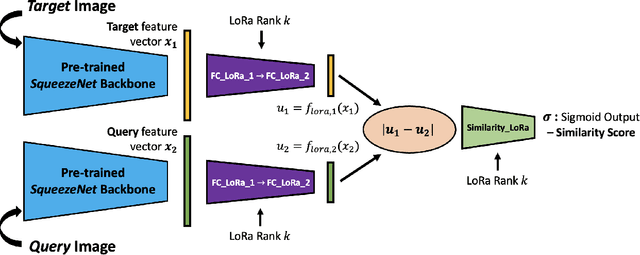

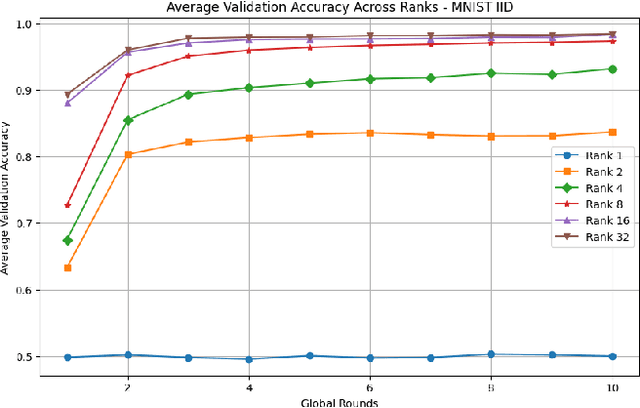

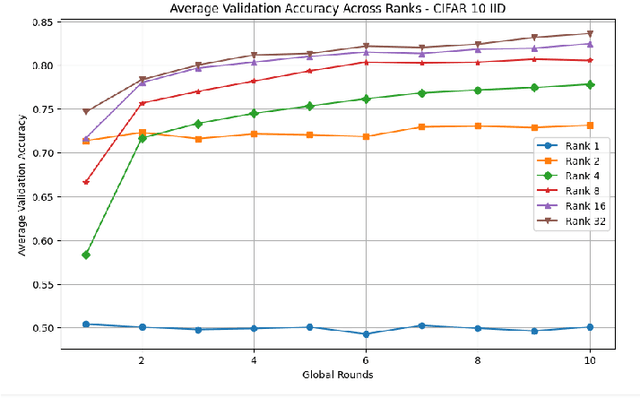

Federated Learning of Low-Rank One-Shot Image Detection Models in Edge Devices with Scalable Accuracy and Compute Complexity

Apr 23, 2025

Abstract: This paper introduces a novel federated learning framework termed LoRa-FL designed for training low-rank one-shot image detection models deployed on edge devices. By incorporating low-rank adaptation techniques into one-shot detection architectures, our method significantly reduces both computational and communication overhead while maintaining scalable accuracy. The proposed framework leverages federated learning to collaboratively train lightweight image recognition models, enabling rapid adaptation and efficient deployment across heterogeneous, resource-constrained devices. Experimental evaluations on the MNIST and CIFAR10 benchmark datasets, in both independent-and-identically-distributed (IID) and non-IID settings, demonstrate that our approach achieves competitive detection performance while significantly reducing communication bandwidth and compute complexity. This makes it a promising solution for adaptively reducing communication and compute overheads without sacrificing model accuracy.

Benchmarking Online Object Trackers for Underwater Robot Position Locking Applications

Feb 23, 2025

Abstract: Autonomously controlling the position of Remotely Operated underwater Vehicles (ROVs) is of crucial importance for a wide range of underwater engineering applications, such as the inspection and maintenance of underwater industrial structures. Consequently, vision-based underwater robot navigation and control has recently gained increasing attention as a way to counter the numerous challenges faced in underwater conditions, such as lighting variability, turbidity, camera image distortions (due to bubbles), and ROV positional disturbances (due to underwater currents). In this paper, we propose (to the best of our knowledge) a first rigorous unified benchmarking of more than seven Machine Learning (ML)-based one-shot object tracking algorithms for vision-based position locking of ROV platforms. We propose a position-locking system that processes images of an object of interest in front of which the ROV must be kept stable. Then, our proposed system uses the output of different object tracking algorithms to automatically correct the position of the ROV against external disturbances. We conducted numerous real-world experiments using a BlueROV2 platform within an indoor pool and provided clear demonstrations of the strengths and weaknesses of each tracking approach. Finally, to help alleviate the scarcity of underwater ROV data, we release our acquired database as open-source with the hope of benefiting future research.

Co-Design of a Robot Controller Board and Indoor Positioning System for IoT-Enabled Applications

Jan 02, 2025

Abstract: This paper describes the development of a cost-effective yet precise indoor robot navigation system composed of a custom robot controller board and an indoor positioning system. First, the proposed robot controller board has been specially designed for emerging IoT-based robot applications and is capable of driving two 6-Amp motor channels. The controller board also embeds an on-board micro-controller with Wi-Fi connectivity, enabling robot-to-server communications for IoT applications. Then, working together with the robot controller board, the proposed positioning system detects the robot's location using a down-looking webcam and uses the robot's position on the webcam images to estimate the real-world position of the robot in the environment. The positioning system can then send commands via Wi-Fi to the robot in order to steer it to any arbitrary location in the environment. Our experiments show that the proposed system reaches a navigation error smaller than or equal to 0.125 meters while being more than two orders of magnitude more cost-effective than off-the-shelf motion capture (MOCAP) positioning systems.

Rotational Odometry using Ultra Low Resolution Thermal Cameras

Nov 02, 2024

Abstract: This letter provides what is, to the best of our knowledge, a first study on the applicability of ultra-low-resolution thermal cameras for providing rotational odometry measurements to navigational devices such as rovers and drones. Our use of an ultra-low-resolution thermal camera instead of other modalities such as an RGB camera is motivated by its robustness to lighting conditions, while being one order of magnitude less expensive than higher-resolution thermal cameras. After setting up a custom data acquisition system and acquiring thermal camera data together with its associated rotational speed label, we train a small 4-layer Convolutional Neural Network (CNN) for regressing the rotational speed from the thermal data. Experiments and ablation studies are conducted to determine the impact of thermal camera resolution and the number of successive frames on the CNN estimation precision. Finally, our novel dataset for the study of low-resolution thermal odometry is openly released with the hope of benefiting future research.

Automating the Design of Multi-band Microstrip Antennas via Uniform Cross-Entropy Optimization

Oct 03, 2024

Abstract: Automating the design of microstrip antennas has been an active area of research for the past decade. By leveraging machine learning techniques such as Genetic Algorithms (GAs) or, more recently, Deep Neural Networks (DNNs), a number of works have demonstrated the possibility of producing non-trivial antenna geometries that can be efficient in terms of area utilization or be used in complex multi-frequency-band scenarios. However, both GAs and DNNs are notoriously compute-expensive, often requiring hour-long run times in order to produce new antenna geometries. In this paper, we propose to explore the novel use of Cross-Entropy optimization as a Monte-Carlo sampling technique for optimizing the geometry of patch antennas given a target $S_{11}$ scattering parameter curve that a user wants to obtain. We compare our proposed Uniform Cross-Entropy (UCE) method against other popular Monte-Carlo optimization techniques such as Gaussian Processes, Forest optimization and baseline random search approaches. We demonstrate that the proposed UCE technique outperforms the competing methods while still having a reasonable compute complexity, taking around 16 minutes to converge. Finally, our code is released as open-source with the hope of being useful to future research.
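For readers unfamiliar with cross-entropy optimization, the following is a minimal sketch of the general idea with a uniform sampling distribution: draw candidates uniformly inside a box, keep the lowest-cost "elite" fraction, and shrink the box to the range spanned by the elite. It is not the paper's UCE implementation, whose details are not given in the abstract. The function names, hyperparameters, and the toy quadratic cost (standing in for a mismatch against a target $S_{11}$ curve, which would require an antenna simulator) are all assumptions made for illustration.

```python
import random


def toy_cost(x):
    # Hypothetical stand-in for an antenna cost such as S11 mismatch:
    # squared distance to an arbitrary target parameter vector.
    target = [1.5, -0.5, 3.0]
    return sum((a - b) ** 2 for a, b in zip(x, target))


def uniform_cross_entropy_minimize(cost, lower, upper, n_samples=200,
                                   n_elite=20, n_iters=40, seed=0):
    """Cross-entropy-style search with a uniform sampling distribution."""
    rng = random.Random(seed)
    lo, hi = list(lower), list(upper)
    best_x, best_cost = None, float("inf")
    for _ in range(n_iters):
        # Sample candidates uniformly inside the current box.
        samples = [[rng.uniform(l, h) for l, h in zip(lo, hi)]
                   for _ in range(n_samples)]
        # Keep the lowest-cost candidates as the elite set.
        samples.sort(key=cost)
        elite = samples[:n_elite]
        elite_cost = cost(elite[0])
        if elite_cost < best_cost:
            best_x, best_cost = elite[0], elite_cost
        # Refit the uniform distribution: shrink the box to the elite range.
        dims = range(len(lo))
        lo = [min(x[d] for x in elite) for d in dims]
        hi = [max(x[d] for x in elite) for d in dims]
    return best_x, best_cost


if __name__ == "__main__":
    x, c = uniform_cross_entropy_minimize(toy_cost, [-5.0] * 3, [5.0] * 3)
    print("best parameters:", x, "cost:", c)
```

In a real antenna design loop, `toy_cost` would be replaced by a call to an electromagnetic simulator, which is where nearly all of the run time would be spent.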

Continual Learning in Bio-plausible Spiking Neural Networks with Hebbian and Spike Timing Dependent Plasticity: A Survey and Perspective

Jul 24, 2024

Abstract: Recently, the use of bio-plausible learning techniques such as Hebbian and Spike-Timing-Dependent Plasticity (STDP) has drawn significant attention for the design of compute-efficient AI systems that can continuously learn on-line at the edge. A key differentiating factor of this emerging class of neuromorphic continual learning systems lies in the fact that learning must be carried out using a data stream received in its natural order, as opposed to conventional gradient-based offline training where a static training dataset is assumed available a priori and randomly shuffled to make the training set independent and identically distributed (i.i.d.). In contrast, the emerging class of neuromorphic continual learning systems covered in this survey must learn to integrate new information on the fly in a non-i.i.d. manner, which makes these systems subject to catastrophic forgetting. In order to build the next generation of neuromorphic AI systems that can continuously learn at the edge, a growing number of research groups are studying the use of bio-plausible Hebbian neural network architectures and Spiking Neural Networks (SNNs) equipped with STDP learning. However, since this research field is still emerging, there is a need for a holistic view of the different approaches proposed in the literature so far. To this end, this survey covers a number of recent works in the field of neuromorphic continual learning; provides background theory to help interested researchers quickly learn the key concepts; and discusses important future research questions in light of the different works covered in this paper. It is hoped that this survey will contribute towards future research in the field of neuromorphic continual learning.

Towards Chip-in-the-loop Spiking Neural Network Training via Metropolis-Hastings Sampling

Feb 09, 2024

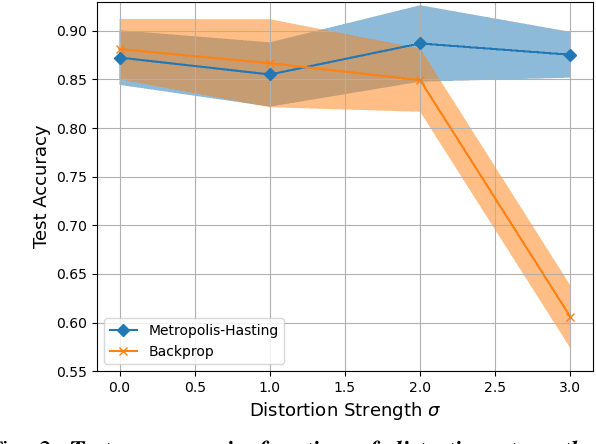

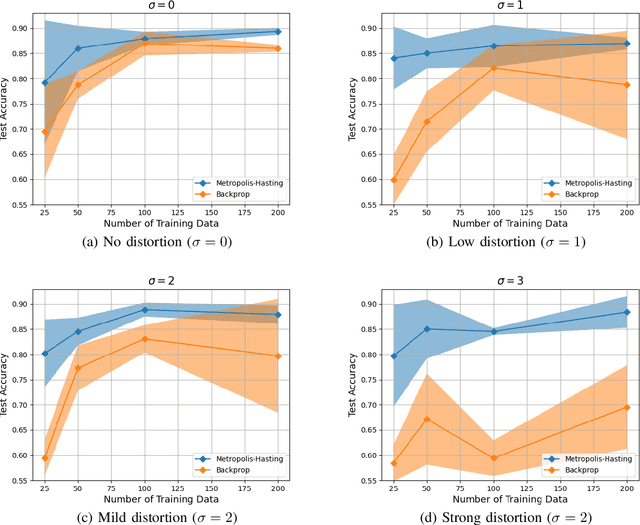

Abstract: This paper studies the use of Metropolis-Hastings sampling for training Spiking Neural Network (SNN) hardware subject to strong unknown non-idealities, and compares the proposed approach to the backpropagation of error (backprop) algorithm with surrogate gradients, which is widely used to train SNNs in the literature. Simulations are conducted within a chip-in-the-loop training context, where an SNN subject to unknown distortion must be trained to detect cancer from measurements, within a biomedical application context. Our results show that the proposed approach outperforms backprop by up to $27\%$ higher accuracy when subject to strong hardware non-idealities. Furthermore, our results also show that the proposed approach outperforms backprop in terms of SNN generalization, needing $>10 \times$ less training data for achieving effective accuracy. These findings make the proposed training approach well-suited for SNN implementations in analog subthreshold circuits and other emerging technologies where unknown hardware non-idealities can jeopardize backprop.
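To illustrate why a sampling-based trainer can cope with unknown non-idealities, here is a minimal sketch of a random-walk Metropolis sampler (the symmetric-proposal special case of Metropolis-Hastings) that only ever queries a black-box forward pass and needs no gradients. It is not the paper's method or its SNN model: the single tanh unit with a hidden gain and offset, the target density proportional to `exp(-loss / temperature)`, and every name and hyperparameter below are assumptions chosen for illustration.

```python
import math
import random


def black_box_forward(weights, x):
    # Hypothetical stand-in for hardware with a non-ideality the trainer
    # cannot see: a hidden gain (0.7) and offset (0.1) before the nonlinearity.
    s = sum(w * xi for w, xi in zip(weights, x))
    return math.tanh(0.7 * s + 0.1)


def loss(weights, data):
    # Mean squared error measured only through black-box outputs.
    return sum((black_box_forward(weights, x) - y) ** 2
               for x, y in data) / len(data)


def make_toy_data(true_weights, n_points=50, seed=1):
    # Toy dataset whose labels come from the same distorted device.
    rng = random.Random(seed)
    data = []
    for _ in range(n_points):
        x = [rng.uniform(-1.0, 1.0) for _ in true_weights]
        data.append((x, black_box_forward(true_weights, x)))
    return data


def metropolis_hastings_train(data, n_weights, n_steps=2000, step_size=0.1,
                              temperature=0.01, seed=0):
    """Random-walk Metropolis over weights, targeting exp(-loss / temperature)."""
    rng = random.Random(seed)
    current = [0.0] * n_weights
    current_loss = loss(current, data)
    best, best_loss = list(current), current_loss
    for _ in range(n_steps):
        # Symmetric Gaussian proposal around the current weights.
        proposal = [w + rng.gauss(0.0, step_size) for w in current]
        proposal_loss = loss(proposal, data)
        # Always accept improvements; accept worse proposals with
        # probability exp(-(increase in loss) / temperature).
        log_ratio = -(proposal_loss - current_loss) / temperature
        if rng.random() < math.exp(min(0.0, log_ratio)):
            current, current_loss = proposal, proposal_loss
            if current_loss < best_loss:
                best, best_loss = list(current), current_loss
    return best, best_loss


if __name__ == "__main__":
    data = make_toy_data([1.0, -2.0])
    weights, final_loss = metropolis_hastings_train(data, n_weights=2)
    print("weights:", weights, "loss:", final_loss)
```

Because the sampler only compares loss values measured through `black_box_forward`, the hidden gain and offset never need to be modeled, whereas a gradient computed from an idealized model of the device would be mismatched with what the device actually does.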
