Aiman Erbad

IntAgent: NWDAF-Based Intent LLM Agent Towards Advanced Next Generation Networks

Jan 19, 2026

Abstract:Intent-based networks (IBNs) are gaining prominence as an innovative technology that automates network operations through high-level request statements, defining what the network should achieve. In this work, we introduce IntAgent, an intelligent intent LLM agent that integrates NWDAF analytics and tools to fulfill the network operator's intents. Unlike previous approaches, we develop an intent tools engine directly within the NWDAF analytics engine, allowing our agent to utilize live network analytics to inform its reasoning and tool selection. We offer an enriched, 3GPP-compliant data source that enhances the dynamic, context-aware fulfillment of network operator goals, along with an MCP tools server for scheduling, monitoring, and analytics tools. We demonstrate the efficacy of our framework through two practical use cases: ML-based traffic prediction and scheduled policy enforcement, which validate IntAgent's ability to autonomously fulfill complex network intents.

Balancing Decentralized Trust and Physical Evidence: A Blockchain-Physical Layer Co-Design for Real-Time 3D Prioritization in Disaster Zones

Dec 23, 2025

Abstract:During disaster response, making rapid and well-informed decisions about which areas require immediate attention can save lives. However, current coordination models often struggle with unreliable data, intentional misinformation, and the breakdown of critical communication infrastructure. A decentralized, vote-based blockchain model offers a compelling substrate for achieving this real-time, trusted coordination. This article explores a blockchain-driven approach to rapidly update a dynamic 3D crisis map based on inputs from users and local sensors. Each node submits a timestamped and geotagged vote to a public ledger, enabling agencies to visualize needs as they emerge. However, ensuring the physical authenticity of these claims demands more than cryptography alone. We propose a dual-layer architecture where mobile UAV verifiers perform physical-layer attestation and issue independent location flags to the blockchain. This dual-signature mechanism fuses immutable digital records with sensory-grounded trust. We analyze core technical and human-centric challenges, ranging from spoofing and vote ambiguity to verifier compromise and connectivity loss, and outline layered mitigation strategies and future research directions. As a concrete instantiation, we present a UAV mapping scheme leveraging modulated retro-reflector (MRR) sensors and 3D-aware LoS placement to maximize verifiability under urban occlusion, offering a path toward resilient, trust-anchored crisis coordination.

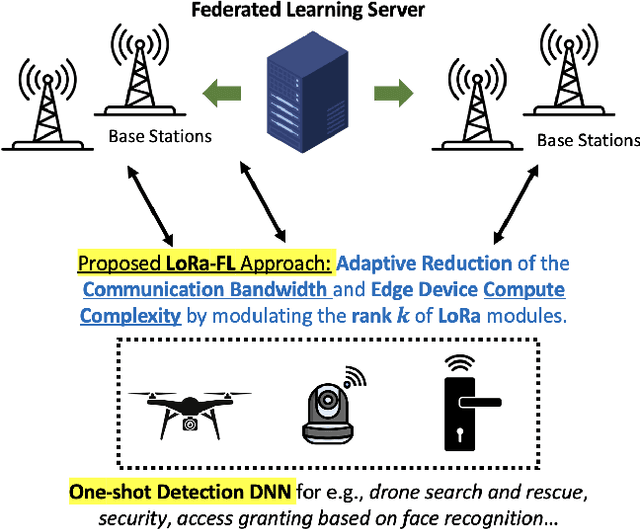

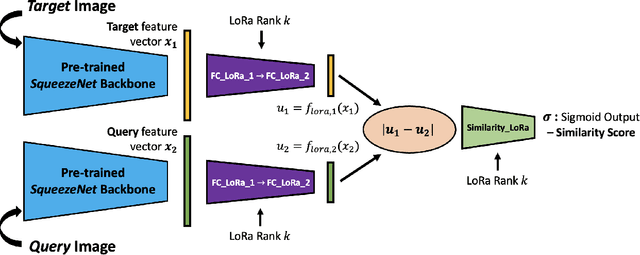

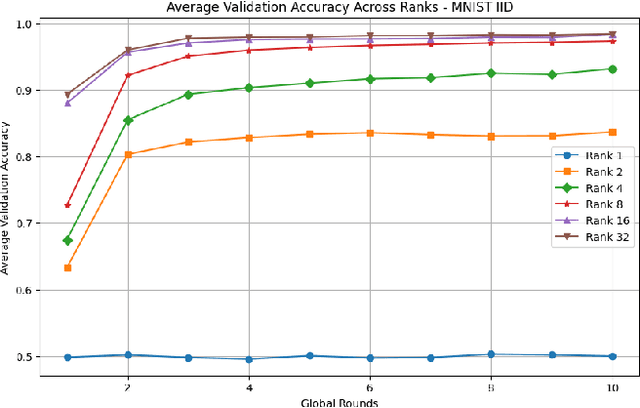

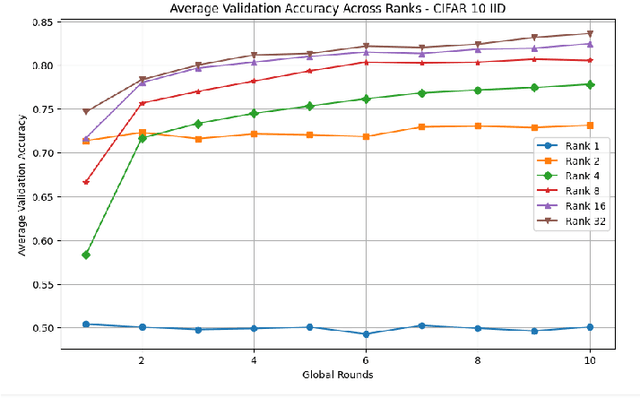

Federated Learning of Low-Rank One-Shot Image Detection Models in Edge Devices with Scalable Accuracy and Compute Complexity

Apr 23, 2025

Abstract:This paper introduces a novel federated learning framework termed LoRa-FL designed for training low-rank one-shot image detection models deployed on edge devices. By incorporating low-rank adaptation techniques into one-shot detection architectures, our method significantly reduces both computational and communication overhead while maintaining scalable accuracy. The proposed framework leverages federated learning to collaboratively train lightweight image recognition models, enabling rapid adaptation and efficient deployment across heterogeneous, resource-constrained devices. Experimental evaluations on the MNIST and CIFAR10 benchmark datasets, in both independent-and-identically-distributed (IID) and non-IID settings, demonstrate that our approach achieves competitive detection performance while significantly reducing communication bandwidth and compute complexity. This makes it a promising solution for adaptively reducing communication and compute overheads without sacrificing model accuracy.
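
As a rough illustration of why a low-rank update can cut communication in federated training, the sketch below counts the values a client would upload per round for a dense layer update versus an update factorized as two rank-r matrices. The layer shape, the rank, and all function names are illustrative assumptions and are not taken from the paper.

```python
def dense_update_size(rows: int, cols: int) -> int:
    """Number of values in a full rows x cols weight update."""
    return rows * cols


def low_rank_update_size(rows: int, cols: int, rank: int) -> int:
    """Number of values when the update is factorized as A (rows x rank) @ B (rank x cols)."""
    return rank * (rows + cols)


def compression_ratio(rows: int, cols: int, rank: int) -> float:
    """How many times smaller the low-rank upload is than the dense one."""
    return dense_update_size(rows, cols) / low_rank_update_size(rows, cols, rank)


if __name__ == "__main__":
    # Hypothetical 512 x 512 layer with a rank-8 update.
    print(dense_update_size(512, 512))        # 262144
    print(low_rank_update_size(512, 512, 8))  # 8192
    print(compression_ratio(512, 512, 8))     # 32.0
```

The saving grows as the rank shrinks relative to the layer dimensions, which is one way a rank parameter could act as a knob trading accuracy against bandwidth and compute.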

Multi-Agent DRL for Queue-Aware Task Offloading in Hierarchical MEC-Enabled Air-Ground Networks

Mar 05, 2025

Abstract:Mobile edge computing (MEC)-enabled air-ground networks are a key component of 6G, employing aerial base stations (ABSs) such as unmanned aerial vehicles (UAVs) and high-altitude platform stations (HAPS) to provide dynamic services to ground IoT devices (IoTDs). These IoTDs support real-time applications (e.g., multimedia and Metaverse services) that demand high computational resources and strict quality of service (QoS) guarantees in terms of latency and task queue management. Given their limited energy and processing capabilities, IoTDs rely on UAVs and HAPS to offload tasks for distributed processing, forming a multi-tier MEC system. This paper tackles the overall energy minimization problem in MEC-enabled air-ground integrated networks (MAGIN) by jointly optimizing UAV trajectories, computing resource allocation, and queue-aware task offloading decisions. The optimization is challenging due to the nonconvex, nonlinear nature of this hierarchical system, which renders traditional methods ineffective. We reformulate the problem as a multi-agent Markov decision process (MDP) with continuous action spaces and heterogeneous agents, and propose a novel variant of multi-agent proximal policy optimization with a Beta distribution (MAPPO-BD) to solve it. Extensive simulations show that MAPPO-BD outperforms baseline schemes, achieving superior energy savings and efficient resource management in MAGIN while meeting queue delay and edge computing constraints.
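
A Beta distribution has bounded support, so a policy that samples from it can produce continuous actions that stay inside their limits without clipping. The following minimal sketch shows only that sampling and rescaling step, using Python's standard library; the shape parameters, the action bounds, and the function names are hypothetical, and this is not the MAPPO-BD algorithm itself.

```python
import random


def sample_bounded_action(alpha: float, beta: float, low: float, high: float,
                          rng: random.Random) -> float:
    """Draw x ~ Beta(alpha, beta) on [0, 1] and rescale it to [low, high]."""
    x = rng.betavariate(alpha, beta)
    return low + (high - low) * x


def beta_mean_action(alpha: float, beta: float, low: float, high: float) -> float:
    """Mean of the rescaled distribution: low + (high - low) * alpha / (alpha + beta)."""
    return low + (high - low) * alpha / (alpha + beta)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical action: fraction of a task to offload, bounded in [0, 1].
    actions = [sample_bounded_action(2.0, 5.0, 0.0, 1.0, rng) for _ in range(5)]
    print(actions)
    print(beta_mean_action(2.0, 5.0, 0.0, 1.0))  # 2 / 7
```

In a learned policy, a network would output `alpha` and `beta` per action dimension; here they are fixed constants to keep the example self-contained.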

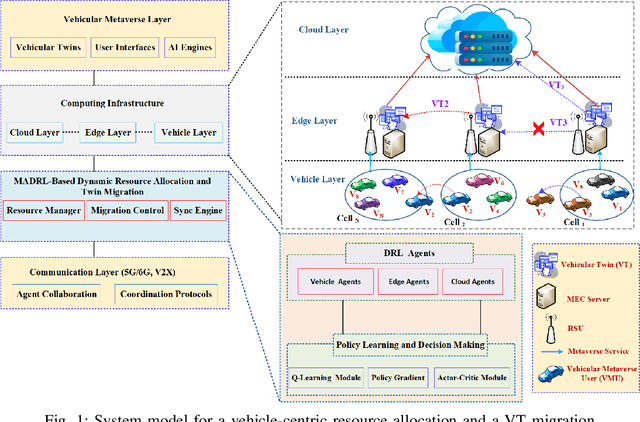

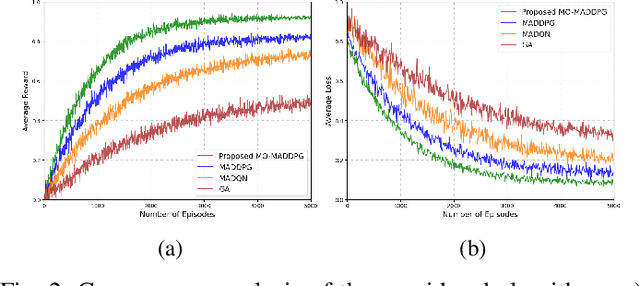

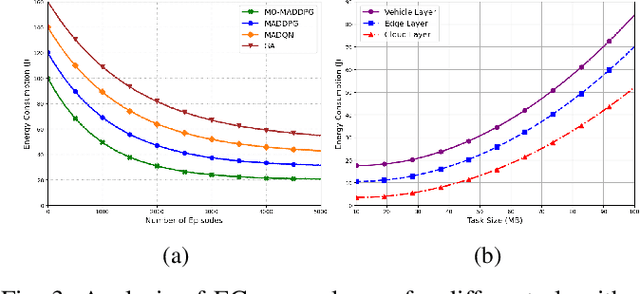

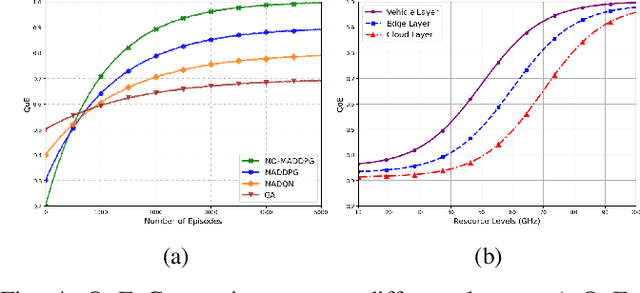

A Multi-Agent DRL-Based Framework for Optimal Resource Allocation and Twin Migration in the Multi-Tier Vehicular Metaverse

Feb 26, 2025

Abstract:Although the multi-tier vehicular Metaverse promises to transform vehicles into essential nodes within an interconnected digital ecosystem through efficient resource allocation and seamless vehicular twin (VT) migration, this can hardly be achieved by existing techniques operating in a highly dynamic vehicular environment, since they struggle to balance multi-objective optimization problems such as latency reduction, resource utilization, and user experience (UX). To address these challenges, we introduce a novel multi-tier resource allocation and VT migration framework that integrates Graph Convolutional Networks (GCNs), a hierarchical Stackelberg game-based incentive mechanism, and Multi-Agent Deep Reinforcement Learning (MADRL). The GCN-based model captures both spatial and temporal dependencies within the vehicular network; the Stackelberg game-based incentive mechanism fosters cooperation between vehicles and infrastructure; and the MADRL algorithm jointly optimizes resource allocation and VT migration in real time. By modeling this dynamic and multi-tier vehicular Metaverse as a Markov Decision Process (MDP), we develop a MADRL-based algorithm dubbed the Multi-Objective Multi-Agent Deep Deterministic Policy Gradient (MO-MADDPG), which can effectively balance the various conflicting objectives. Extensive simulations validate the effectiveness of this algorithm, which is demonstrated to enhance scalability, reliability, and efficiency while considerably improving latency, resource utilization, migration cost, and overall UX by 12.8%, 9.7%, 14.2%, and 16.1%, respectively.

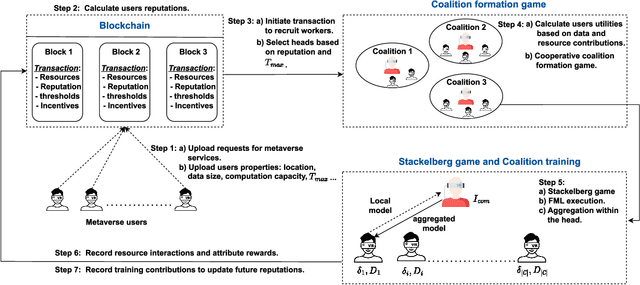

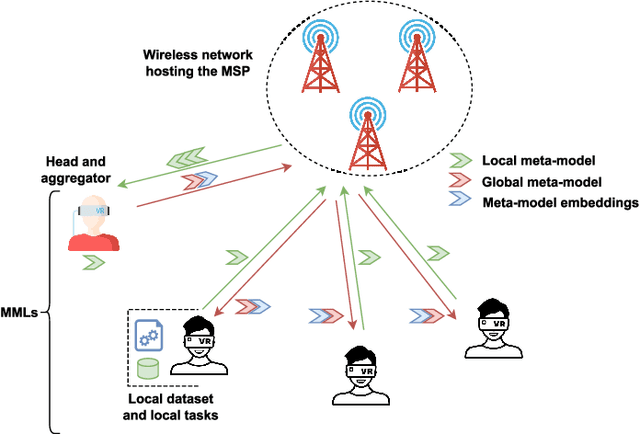

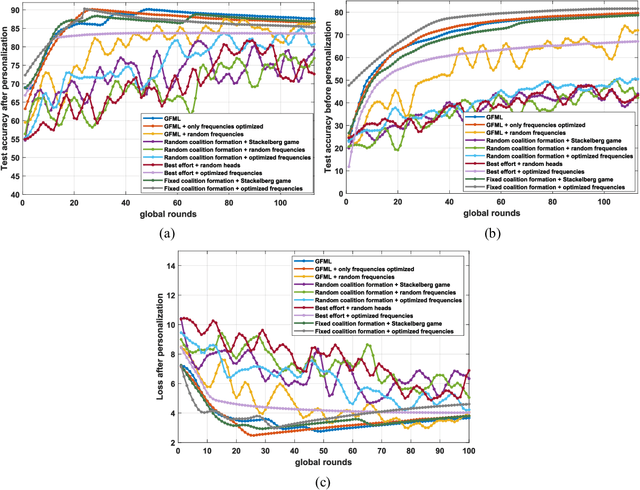

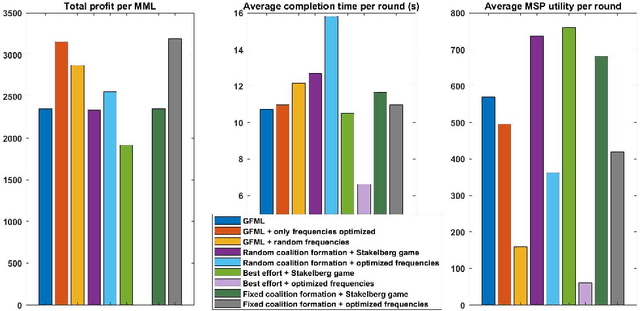

A Blockchain-based Reliable Federated Meta-learning for Metaverse: A Dual Game Framework

Aug 07, 2024

Abstract:The metaverse, envisioned as the next digital frontier for avatar-based virtual interaction, involves high-performance models. In this dynamic environment, users' tasks frequently shift, requiring fast model personalization despite limited data. This evolution consumes extensive resources and requires vast data volumes. To address this, meta-learning emerges as an invaluable tool for metaverse users, with federated meta-learning (FML) offering even more tailored solutions owing to its adaptive capabilities. However, the metaverse is characterized by user heterogeneity with diverse data structures, varied tasks, and uneven sample sizes, potentially undermining global training outcomes due to statistical differences. Given this, an urgent need arises for smart coalition formation that accounts for these disparities. This paper introduces a dual game-theoretic framework for metaverse services involving meta-learners as workers to manage FML. A blockchain-based cooperative coalition formation game is crafted, grounded on a reputation metric, user similarity, and incentives. We also introduce a novel reputation system based on users' historical contributions and potential contributions to present tasks, leveraging correlations between past and new tasks. Finally, a Stackelberg game-based incentive mechanism is presented to attract reliable workers to participate in meta-learning, minimizing users' energy costs, increasing payoffs, boosting FML efficacy, and improving metaverse utility. Results show that our dual game framework outperforms best-effort, random, and non-uniform clustering schemes, improving training performance by up to 10%, cutting completion times by as much as 30%, enhancing metaverse utility by more than 25%, and offering up to a 5% boost in training efficiency over non-blockchain systems, effectively countering misbehaving users.

* Accepted in IEEE Internet of Things Journal

Multi-UAV Multi-RIS QoS-Aware Aerial Communication Systems using DRL and PSO

Jun 16, 2024

Abstract:Recently, Unmanned Aerial Vehicles (UAVs) have attracted the attention of researchers in academia and industry for providing wireless services to ground users in diverse scenarios such as festivals, large sporting events, and natural and man-made disasters, owing to their versatility and maneuverability. However, the limited resources of UAVs (e.g., energy budget and different service requirements) can pose challenges for adopting UAVs for such applications. Our system model considers a UAV swarm that navigates an area, providing wireless communication to ground users with RIS support to improve the coverage of the UAVs. In this work, we introduce an optimization model with the aim of maximizing the throughput and UAV coverage through optimal path planning of UAVs and multi-RIS phase configurations. The formulated optimization is challenging to solve using standard linear programming techniques, limiting its applicability in real-time decision-making. Therefore, we introduce a two-step solution using deep reinforcement learning and particle swarm optimization. We conduct extensive simulations and compare our approach to two competitive solutions presented in the recent literature. Our simulation results demonstrate that our adopted approach is 20% better than the brute-force approach and 30% better than the baseline solution in terms of QoS.
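
For readers unfamiliar with particle swarm optimization, the sketch below is a basic global-best PSO applied to a toy objective. It is a generic textbook variant under assumed hyperparameters (inertia and acceleration coefficients), not the paper's two-step method, and the abstract does not specify which sub-problem PSO is applied to; the function name and defaults are hypothetical.

```python
import random


def pso_minimize(objective, dim, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize objective over a box using a basic global-best PSO."""
    rng = random.Random(seed)
    low, high = bounds
    pos = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]                 # per-particle best positions
    best_val = [objective(p) for p in pos]         # per-particle best values
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]     # swarm-wide best

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Keep particles inside the search box.
                pos[i][d] = min(high, max(low, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


if __name__ == "__main__":
    # Toy objective: squared distance from the origin in 2D.
    sphere = lambda x: sum(v * v for v in x)
    print(pso_minimize(sphere, dim=2, bounds=(-5.0, 5.0)))
```

Each particle is pulled toward its own best position and the swarm's best position, so no gradient of the objective is needed, which is why PSO is often paired with non-convex configuration problems.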

LEMDA: A Novel Feature Engineering Method for Intrusion Detection in IoT Systems

Apr 20, 2024

Abstract:Intrusion detection systems (IDS) for the Internet of Things (IoT) systems can use AI-based models to ensure secure communications. IoT systems tend to have many connected devices producing massive amounts of data with high dimensionality, which requires complex models. Complex models have notorious problems such as overfitting, low interpretability, and high computational complexity. Adding model complexity penalty (i.e., regularization) can ease overfitting, but it barely helps interpretability and computational efficiency. Feature engineering can solve these issues; hence, it has become critical for IDS in large-scale IoT systems to reduce the size and dimensionality of data, resulting in less complex models with excellent performance, smaller data storage, and fast detection. This paper proposes a new feature engineering method called LEMDA (Light feature Engineering based on the Mean Decrease in Accuracy). LEMDA applies exponential decay and an optional sensitivity factor to select and create the most informative features. The proposed method has been evaluated and compared to other feature engineering methods using three IoT datasets and four AI/ML models. The results show that LEMDA improves the F1 score performance of all the IDS models by an average of 34% and reduces the average training and detection times in most cases.
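
The mean decrease in accuracy that LEMDA builds on can be estimated by permuting one feature at a time and measuring how much a fitted model's accuracy drops. The sketch below shows only this generic permutation step on toy data; it does not implement LEMDA's exponential decay or sensitivity factor, whose details the abstract does not give, and the model, data, and function names are hypothetical.

```python
import random


def accuracy(predict, X, y):
    """Fraction of rows where predict(row) equals the label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def mean_decrease_in_accuracy(predict, X, y, n_repeats=10, seed=0):
    """Permutation importance: average accuracy drop when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with the shuffled value in position j.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    rng = random.Random(1)
    # Toy data: the label depends only on feature 0; feature 1 is noise.
    X = [[rng.random(), rng.random()] for _ in range(200)]
    y = [int(row[0] > 0.5) for row in X]
    predict = lambda row: int(row[0] > 0.5)   # hypothetical fitted model
    print(mean_decrease_in_accuracy(predict, X, y))
```

Features whose shuffling barely changes accuracy are candidates for removal, which is the intuition behind using this score to shrink high-dimensional IoT data.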

Meta Reinforcement Learning for Strategic IoT Deployments Coverage in Disaster-Response UAV Swarms

Jan 20, 2024

Abstract:In the past decade, Unmanned Aerial Vehicles (UAVs) have attracted the attention of researchers in academia and industry for their potential use in critical emergency applications, such as providing wireless services to ground users and collecting data from areas affected by disasters, due to their advantages in terms of maneuverability and movement flexibility. The UAVs' limited resources, energy budget, and strict mission completion time have posed challenges in adopting UAVs for these applications. Our system model considers a UAV swarm that navigates an area collecting data from ground IoT devices, focusing on providing better service for strategic locations and allowing UAVs to join and leave the swarm (e.g., for recharging) dynamically. In this work, we introduce an optimization model that aims to minimize the total energy consumption and provide optimal path planning for the UAVs under the constraints of minimum completion time and transmit power. The formulated optimization is NP-hard, making it unsuitable for real-time decision-making. Therefore, we introduce a lightweight meta-reinforcement learning solution that can also cope with sudden changes in the environment through fast convergence. We conduct extensive simulations and compare our approach to three state-of-the-art learning models. Our simulation results show that our approach outperforms the three state-of-the-art algorithms in providing coverage to strategic locations with fast convergence.

Intelligent DRL-Based Adaptive Region of Interest for Delay-sensitive Telemedicine Applications

Oct 08, 2023

Abstract:Telemedicine applications have recently attracted substantial interest, especially after the COVID-19 pandemic. Remote experience will help people get their complex surgery done or transfer knowledge to local surgeons, without the need to travel abroad. Even with breakthrough improvements in internet speeds, the delay in video streaming is still a hurdle in telemedicine applications. This necessitates image compression and region of interest (ROI) techniques to reduce the data size and transmission needs. This paper proposes a Deep Reinforcement Learning (DRL) model that intelligently adapts the ROI size and non-ROI quality depending on the estimated throughput. The delay and the structural similarity index measure (SSIM) are used to assess the DRL model. The comparison findings and the practical application reveal that DRL is capable of reducing the delay by 13% while keeping the overall quality in an acceptable range. Since the latency has been significantly reduced, these findings are a valuable enhancement to telemedicine applications.
