Georges Gielen

AnaFlow: Agentic LLM-based Workflow for Reasoning-Driven Explainable and Sample-Efficient Analog Circuit Sizing

Nov 05, 2025

Abstract:Analog/mixed-signal circuits are key for interfacing electronics with the physical world. Their design, however, remains a largely handcrafted process, resulting in long and error-prone design cycles. While the recent rise of AI-based reinforcement learning and generative AI has created new techniques to automate this task, the need for many time-consuming simulations is a critical bottleneck hindering the overall efficiency. Furthermore, the lack of explainability of the resulting design solutions hampers widespread adoption of the tools. To address these issues, a novel agentic AI framework for sample-efficient and explainable analog circuit sizing is presented. It employs a multi-agent workflow where specialized Large Language Model (LLM)-based agents collaborate to interpret the circuit topology, to understand the design goals, and to iteratively refine the circuit's design parameters towards the target goals with human-interpretable reasoning. The adaptive simulation strategy creates an intelligent control that yields a high sample efficiency. The AnaFlow framework is demonstrated for two circuits of varying complexity and is able to complete the sizing task fully automatically, unlike pure Bayesian optimization and reinforcement learning approaches. The system learns from its optimization history to avoid past mistakes and to accelerate convergence. The inherent explainability makes this a powerful tool for analog design space exploration and a new paradigm in analog EDA, where AI agents serve as transparent design assistants.

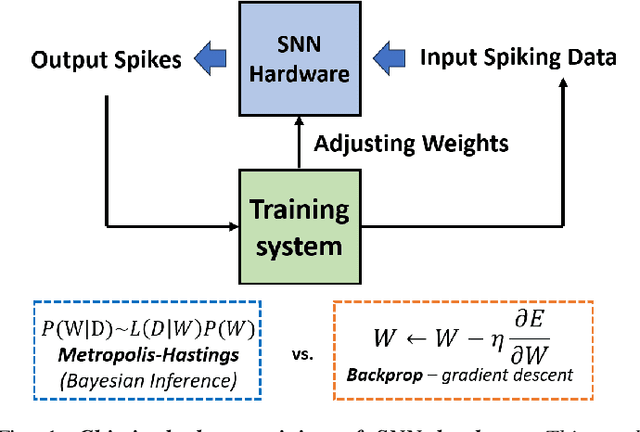

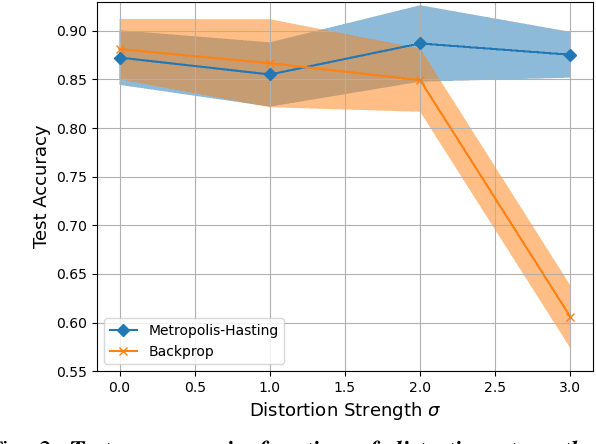

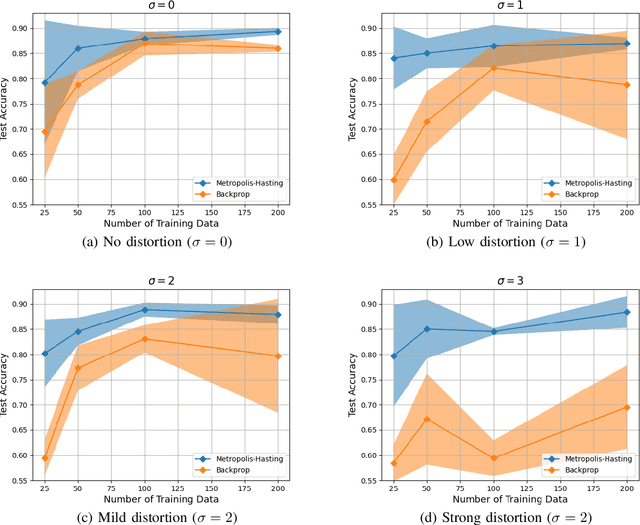

Towards Chip-in-the-loop Spiking Neural Network Training via Metropolis-Hastings Sampling

Feb 09, 2024

Abstract:This paper studies the use of Metropolis-Hastings sampling for training Spiking Neural Network (SNN) hardware subject to strong unknown non-idealities, and compares the proposed approach to the backpropagation of error (backprop) algorithm with surrogate gradients, which is widely used to train SNNs in the literature. Simulations are conducted within a chip-in-the-loop training context, where an SNN subject to unknown distortion must be trained to detect cancer from measurements, within a biomedical application context. Our results show that the proposed approach strongly outperforms backprop, achieving up to $27\%$ higher accuracy when subject to strong hardware non-idealities. Furthermore, our results also show that the proposed approach outperforms backprop in terms of SNN generalization, needing $>10 \times$ less training data for achieving effective accuracy. These findings make the proposed training approach well-suited for SNN implementations in analog subthreshold circuits and other emerging technologies where unknown hardware non-idealities can jeopardize backprop.
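The abstract does not spell out the sampler, so the following is only a minimal sketch of generic random-walk Metropolis-Hastings used as a gradient-free trainer. It illustrates why such a scheme suits chip-in-the-loop training: the loop only needs loss measurements, never gradients through the unknown distortion. The function `distorted_device_loss`, the target values, the step size, and the temperature are all hypothetical stand-ins, not the paper's setup.

```python
import math
import random


def metropolis_hastings_train(measure_loss, weights, steps=2000,
                              step_size=0.1, temperature=0.05, seed=0):
    """Random-walk Metropolis-Hastings over weights (generic sketch).

    measure_loss is treated as a black box, e.g. a measurement on hardware.
    The loss plays the role of an energy: lower loss = higher probability.
    """
    rng = random.Random(seed)
    current = list(weights)
    current_loss = measure_loss(current)
    best, best_loss = list(current), current_loss
    for _ in range(steps):
        # Symmetric Gaussian proposal around the current weights.
        proposal = [w + rng.gauss(0.0, step_size) for w in current]
        proposal_loss = measure_loss(proposal)
        delta = proposal_loss - current_loss
        # Always accept improvements; accept worse moves with
        # probability exp(-delta / temperature).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_loss = proposal, proposal_loss
            if current_loss < best_loss:
                best, best_loss = list(current), current_loss
    return best, best_loss


def distorted_device_loss(w):
    """Hypothetical 'chip': an unknown distortion sits between weights and output."""
    outputs = [math.tanh(1.7 * x - 0.3) for x in w]
    targets = [0.5, -0.2]
    return sum((o - t) ** 2 for o, t in zip(outputs, targets))


if __name__ == "__main__":
    start = [0.0, 0.0]
    best, best_loss = metropolis_hastings_train(distorted_device_loss, start)
    print("initial loss:", distorted_device_loss(start))
    print("best loss:   ", best_loss)
```

The occasional acceptance of worse proposals is what distinguishes this from plain hill climbing; the temperature controls how often that happens.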

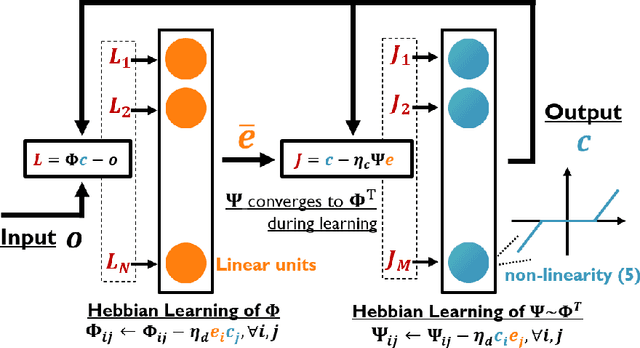

Active Inference in Hebbian Learning Networks

Jun 22, 2023

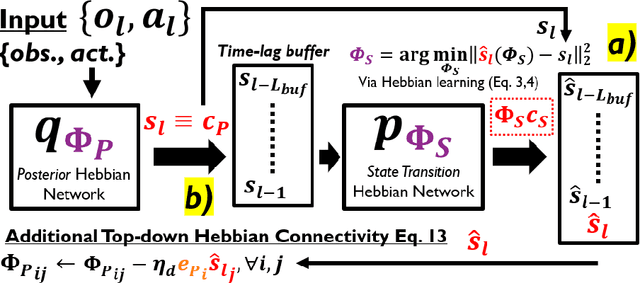

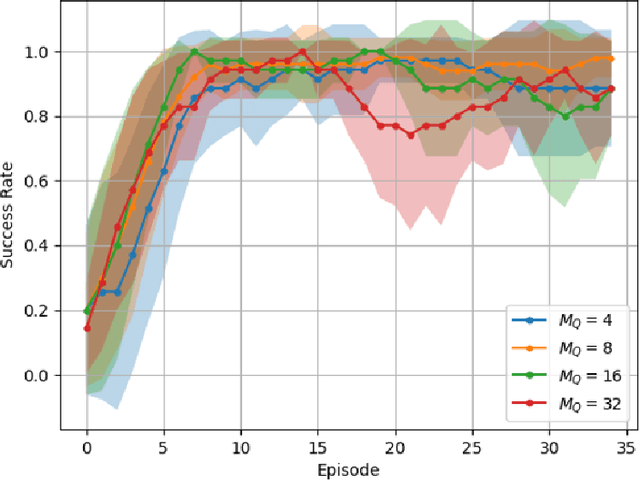

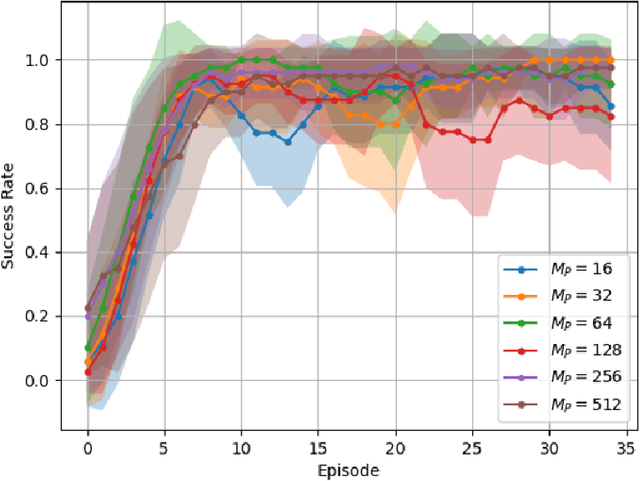

Abstract:This work studies how brain-inspired neural ensembles equipped with local Hebbian plasticity can perform active inference (AIF) in order to control dynamical agents. A generative model capturing the environment dynamics is learned by a network composed of two distinct Hebbian ensembles: a posterior network, which infers latent states given the observations, and a state transition network, which predicts the next expected latent state given current state-action pairs. Experimental studies are conducted using the Mountain Car environment from the OpenAI gym suite, to study the effect of the various Hebbian network parameters on the task performance. It is shown that the proposed Hebbian AIF approach outperforms the use of Q-learning, while not requiring the replay buffer that typical reinforcement learning systems rely on. These results motivate further investigations of Hebbian learning for the design of AIF networks that can learn environment dynamics without the need for revisiting past buffered experiences.

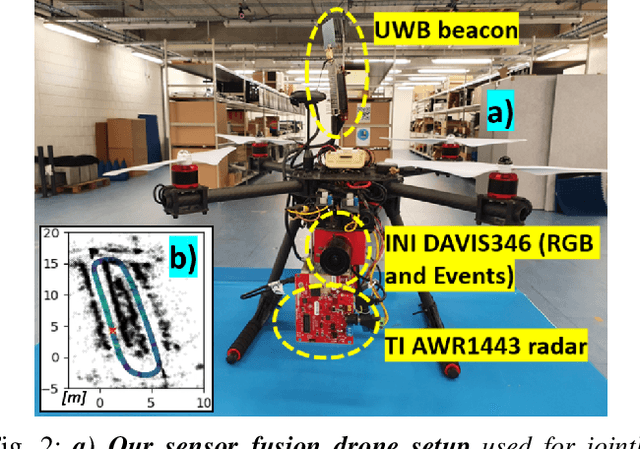

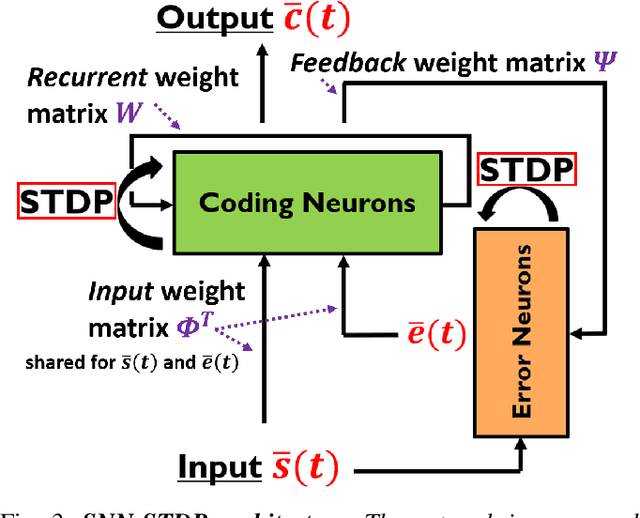

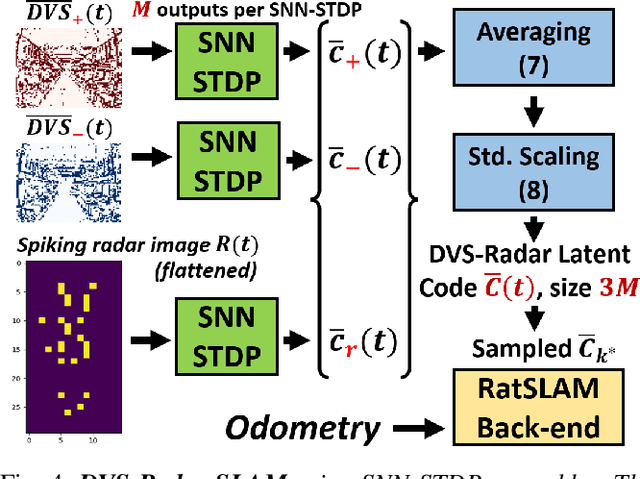

Fusing Event-based Camera and Radar for SLAM Using Spiking Neural Networks with Continual STDP Learning

Oct 09, 2022

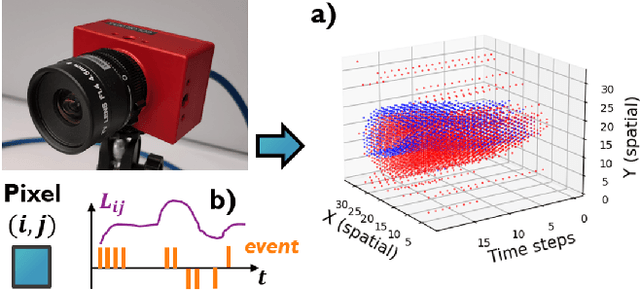

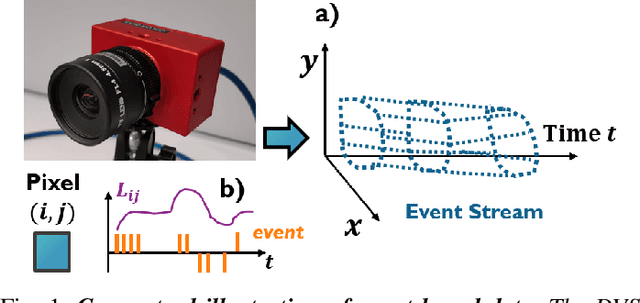

Abstract:This work proposes a first-of-its-kind SLAM architecture fusing an event-based camera and a Frequency Modulated Continuous Wave (FMCW) radar for drone navigation. Each sensor is processed by a bio-inspired Spiking Neural Network (SNN) with continual Spike-Timing-Dependent Plasticity (STDP) learning, as observed in the brain. In contrast to most learning-based SLAM systems, which a) require the acquisition of a representative dataset of the environment in which navigation must be performed and b) require an offline training phase, our method does not require any offline training phase, but rather the SNN continuously learns features from the input data on the fly via STDP. At the same time, the SNN outputs are used as feature descriptors for loop closure detection and map correction. We conduct numerous experiments to benchmark our system against state-of-the-art RGB methods and we demonstrate the robustness of our DVS-Radar SLAM approach under strong lighting variations.

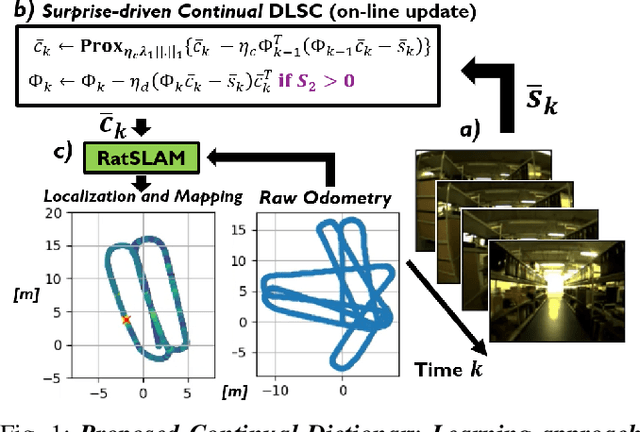

Learning to SLAM on the Fly in Unknown Environments: A Continual Learning Approach for Drones in Visually Ambiguous Scenes

Aug 27, 2022

Abstract:Learning to safely navigate in unknown environments is an important task for autonomous drones used in surveillance and rescue operations. In recent years, a number of learning-based Simultaneous Localisation and Mapping (SLAM) systems relying on deep neural networks (DNNs) have been proposed for applications where conventional feature descriptors do not perform well. However, such learning-based SLAM systems rely on DNN feature encoders trained offline in typical deep learning settings. This makes them less suited for drones deployed in environments unseen during training, where continual adaptation is paramount. In this paper, we present a new method for learning to SLAM on the fly in unknown environments, by modulating a low-complexity Dictionary Learning and Sparse Coding (DLSC) pipeline with a newly proposed Quadratic Bayesian Surprise (QBS) factor. We experimentally validate our approach with data collected by a drone in a challenging warehouse scenario, where the high number of ambiguous scenes makes visual disambiguation hard.

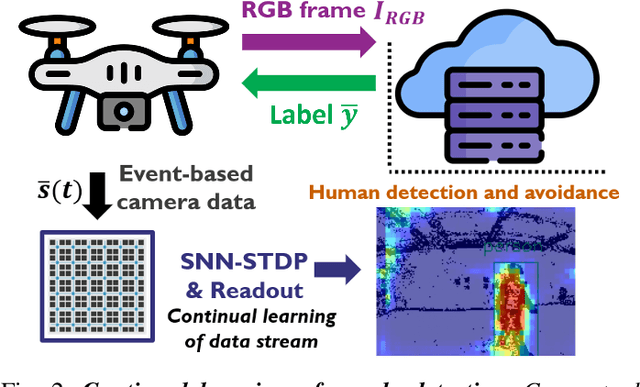

Continuously Learning to Detect People on the Fly: A Bio-inspired Visual System for Drones

Feb 20, 2022

Abstract:This paper demonstrates for the first time that a biologically-plausible spiking neural network (SNN) equipped with Spike-Timing-Dependent Plasticity (STDP) can continuously learn to detect walking people on the fly using retina-inspired, event-based cameras. Our pipeline works as follows. First, a short sequence of event data ($<2$ minutes), capturing a walking human by a flying drone, is forwarded to a convolutional SNN-STDP system which also receives teacher spiking signals from a readout (forming a semi-supervised system). Then, STDP adaptation is stopped and the learned system is assessed on testing sequences. We conduct several experiments to study the effect of key parameters in our system and to compare it against conventionally-trained CNNs. We show that our system reaches a higher peak $F_1$ score (+19%) compared to CNNs with event-based camera frames, while enabling on-line adaptation.
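For readers unfamiliar with STDP, the sketch below shows the textbook pair-based form of the rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened otherwise, with an exponential dependence on the timing gap. This is not taken from the paper, whose exact learning rule the abstract does not give; the amplitudes and time constants are illustrative assumptions.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    All four parameters are illustrative assumptions, not values from the paper.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight and clip it to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta_w(t_pre, t_post)))


if __name__ == "__main__":
    print(stdp_delta_w(10.0, 15.0))  # positive: causal pairing
    print(stdp_delta_w(15.0, 10.0))  # negative: anti-causal pairing
```

Because the update depends only on the spike times of the two neurons a synapse connects, learning stays local, which is what makes on-the-fly adaptation without an offline training phase plausible.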

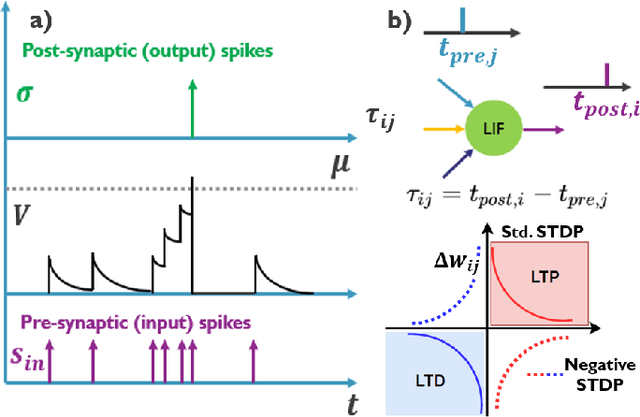

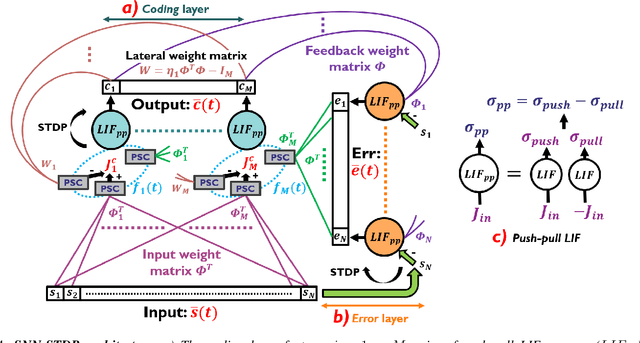

Learning Event-based Spatio-Temporal Feature Descriptors via Local Synaptic Plasticity: A Biologically-realistic Perspective of Computer Vision

Nov 04, 2021

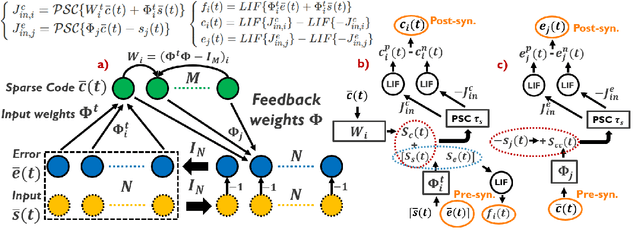

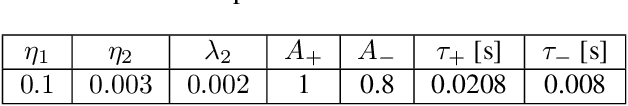

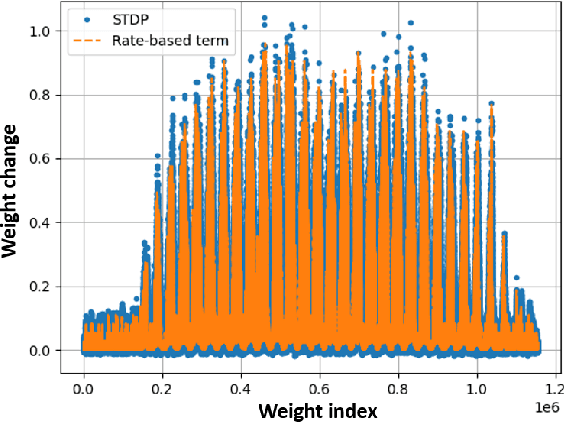

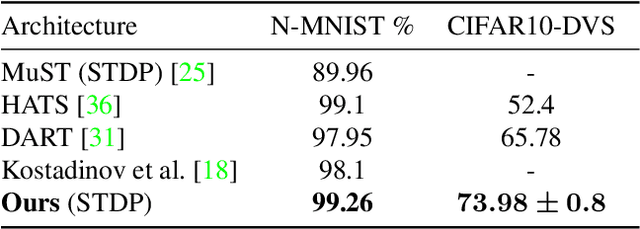

Abstract:We present an optimization-based theory describing spiking cortical ensembles equipped with Spike-Timing-Dependent Plasticity (STDP) learning, as empirically observed in the visual cortex. Using our methods, we build a class of fully-connected, convolutional and action-based feature descriptors for event-based cameras that we respectively assess on N-MNIST, the challenging CIFAR10-DVS and the IBM DVS128 gesture dataset. We report significant accuracy improvements compared to conventional state-of-the-art event-based feature descriptors (+8% on CIFAR10-DVS). We also report large improvements in accuracy compared to state-of-the-art STDP-based systems (+10% on N-MNIST, +7.74% on IBM DVS128 Gesture). In addition to ultra-low-power learning in neuromorphic edge devices, our work helps pave the way towards a biologically-realistic, optimization-based theory of cortical vision.

A Low-Complexity Radar Detector Outperforming OS-CFAR for Indoor Drone Obstacle Avoidance

Jul 15, 2021

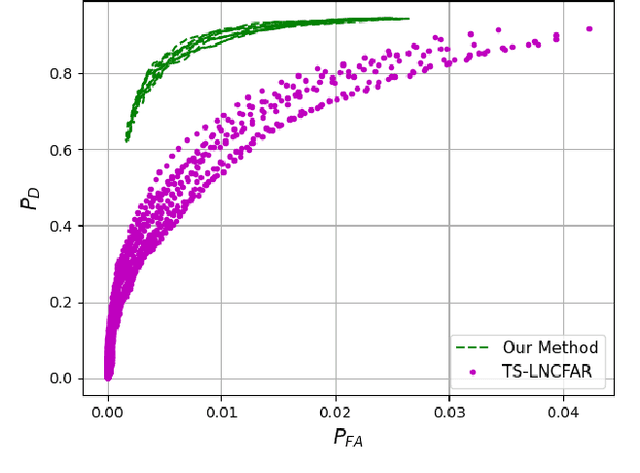

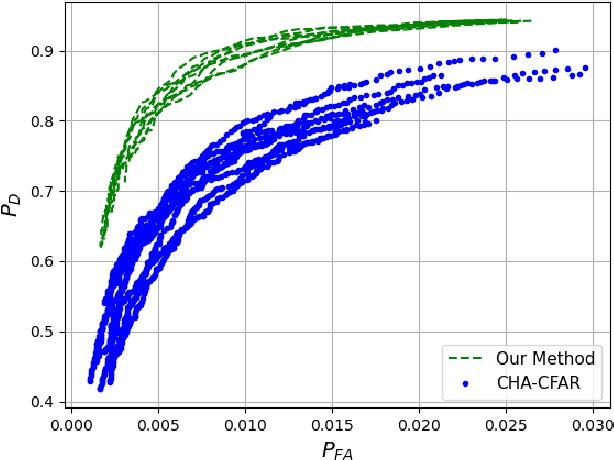

Abstract:As radar sensors are being miniaturized, there is a growing interest in using them in indoor sensing applications such as indoor drone obstacle avoidance. In those novel scenarios, radars must perform well in dense scenes with a large number of neighboring scatterers. Central to radar performance is the detection algorithm used to separate targets from the background noise and clutter. Traditionally, most radar systems use conventional CFAR detectors, but their performance degrades in indoor scenarios with many reflectors. Inspired by the advances in non-linear target detection, we propose a novel high-performance, yet low-complexity target detector and we experimentally validate our algorithm on a dataset acquired using a radar mounted on a drone. We experimentally show that our proposed algorithm drastically outperforms OS-CFAR (the standard detector used in automotive systems) for our specific task of indoor drone navigation, with more than 19% higher probability of detection for a given probability of false alarm. We also benchmark our proposed detector against a number of recently proposed multi-target CFAR detectors and show an improvement of 16% in probability of detection compared to CHA-CFAR, with even larger improvements compared to both OR-CFAR and TS-LNCFAR in our particular indoor scenario. To the best of our knowledge, this work improves the state of the art for high-performance yet low-complexity radar detection in critical indoor sensing applications.
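As context for the baseline named above, here is a minimal one-dimensional sketch of the standard OS-CFAR (ordered-statistic CFAR) detector, not of the proposed detector, which the abstract does not describe. For each cell under test, the surrounding training cells are sorted and the k-th smallest value serves as the noise estimate. The window sizes, rank, and threshold multiplier are illustrative assumptions and are not calibrated to any false-alarm probability.

```python
def os_cfar(power, num_train=8, num_guard=2, rank=12, scale=3.0):
    """Return indices of cells whose power exceeds the OS-CFAR threshold.

    power     : list of non-negative power values along one dimension
    num_train : training cells on EACH side of the cell under test
    num_guard : guard cells on EACH side, excluded from the noise estimate
    rank      : 1-based order statistic taken from the sorted training cells
    scale     : threshold multiplier (illustrative, not tied to a Pfa)
    """
    if not 1 <= rank <= 2 * num_train:
        raise ValueError("rank must lie in 1..2*num_train")
    detections = []
    half = num_train + num_guard
    # Edge cells without a full window are skipped in this sketch.
    for i in range(half, len(power) - half):
        left = power[i - half : i - num_guard]
        right = power[i + num_guard + 1 : i + half + 1]
        window = sorted(left + right)
        noise = window[rank - 1]  # k-th smallest training cell
        if power[i] > scale * noise:
            detections.append(i)
    return detections


if __name__ == "__main__":
    # Flat noise floor with two closely spaced targets.
    profile = [1.0] * 40
    profile[15] = 20.0
    profile[18] = 20.0
    print(os_cfar(profile))  # [15, 18]
```

Taking an order statistic rather than the mean keeps a neighboring target inside the training window from inflating the noise estimate, but the estimate still degrades once interfering targets occupy more than `2 * num_train - rank` training cells, which is the dense-scene regime the abstract refers to.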
