Matthias Hartmann

A Method for Optimizing Connections in Differentiable Logic Gate Networks

Jul 08, 2025

Abstract: We introduce a novel method for partially optimizing the connections in Deep Differentiable Logic Gate Networks (LGNs). Our training method uses a probability distribution over a subset of candidate connections per gate input and selects the connection with the highest merit, after which the gate types are selected. We show that connection-optimized LGNs outperform standard fixed-connection LGNs on the Yin-Yang, MNIST and Fashion-MNIST benchmarks while requiring only a fraction of the logic gates. When all connections are trained, we demonstrate that 8000 simple logic gates suffice to achieve over 98% accuracy on MNIST. Additionally, our network uses 24 times fewer gates than standard fully connected LGNs while performing better on MNIST. As such, our work shows a pathway towards fully trainable Boolean logic.
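
The abstract describes learning, per gate input, a distribution over a subset of candidate connections in addition to the gate type. The following is a minimal, forward-only sketch of what such a gate could look like. It uses the real-valued relaxations of the 16 two-input Boolean functions commonly used in differentiable LGNs, mixes the candidate wires with a softmax during training, and keeps only the most probable wire and gate type at inference. The class `SoftGate`, the mixture-over-candidates formulation and the argmax discretization are hypothetical assumptions for illustration. The abstract does not say how the paper scores connection merit, and the sketch omits gradients and the training loop.

```python
import math

# Real-valued relaxations of the 16 two-input Boolean functions for inputs in
# [0, 1]; on binary inputs they reproduce the exact truth tables.
GATES = [
    lambda a, b: 0.0,                      # FALSE
    lambda a, b: a * b,                    # AND
    lambda a, b: a - a * b,                # A AND NOT B
    lambda a, b: a,                        # A
    lambda a, b: b - a * b,                # NOT A AND B
    lambda a, b: b,                        # B
    lambda a, b: a + b - 2 * a * b,        # XOR
    lambda a, b: a + b - a * b,            # OR
    lambda a, b: 1 - (a + b - a * b),      # NOR
    lambda a, b: 1 - (a + b - 2 * a * b),  # XNOR
    lambda a, b: 1 - b,                    # NOT B
    lambda a, b: 1 - b + a * b,            # A OR NOT B
    lambda a, b: 1 - a,                    # NOT A
    lambda a, b: 1 - a + a * b,            # NOT A OR B
    lambda a, b: 1 - a * b,                # NAND
    lambda a, b: 1.0,                      # TRUE
]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


class SoftGate:
    """Hypothetical gate with learnable connections and a learnable gate type.

    cand_a / cand_b list the previous-layer indices each input may connect to.
    """

    def __init__(self, cand_a, cand_b):
        self.cand_a, self.cand_b = list(cand_a), list(cand_b)
        self.conn_logits_a = [0.0] * len(self.cand_a)
        self.conn_logits_b = [0.0] * len(self.cand_b)
        self.gate_logits = [0.0] * len(GATES)

    @staticmethod
    def _input(x, cand, logits, hard):
        if hard:  # inference: keep only the most probable connection
            return x[cand[argmax(logits)]]
        # training: expected input value under the connection distribution
        return sum(p * x[i] for p, i in zip(softmax(logits), cand))

    def forward(self, x, hard=False):
        a = self._input(x, self.cand_a, self.conn_logits_a, hard)
        b = self._input(x, self.cand_b, self.conn_logits_b, hard)
        if hard:  # inference: a single discrete gate type
            return GATES[argmax(self.gate_logits)](a, b)
        # training: expected gate output under the gate-type distribution
        return sum(p * g(a, b) for p, g in zip(softmax(self.gate_logits), GATES))


gate = SoftGate(cand_a=[0, 1, 2], cand_b=[1, 3])
gate.conn_logits_a = [0.1, 3.0, -1.0]  # favors x[1]
gate.conn_logits_b = [-2.0, 2.0]       # favors x[3]
gate.gate_logits[6] = 5.0              # favors XOR
x = [0.0, 1.0, 1.0, 0.0]
print(gate.forward(x, hard=True))      # XOR(x[1], x[3]) = XOR(1, 0), prints 1.0
print(gate.forward(x))                 # soft value that training would optimize
```

Restricting each input to a small candidate list keeps the number of connection parameters per gate small compared with a distribution over every wire in the previous layer, which is one plausible reason to optimize only a subset.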

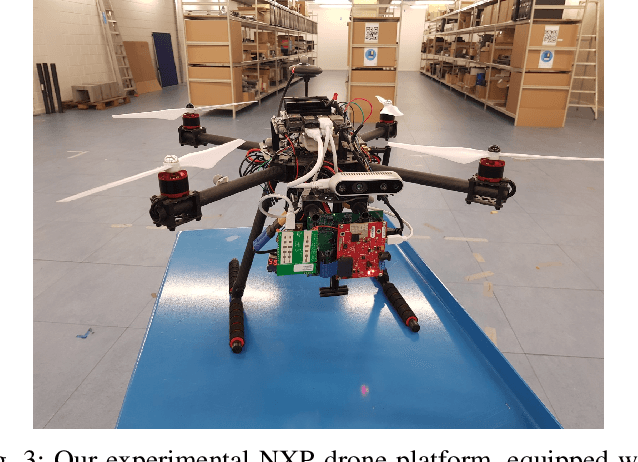

FMCW Radar Sensing for Indoor Drones Using Learned Representations

Jan 06, 2023

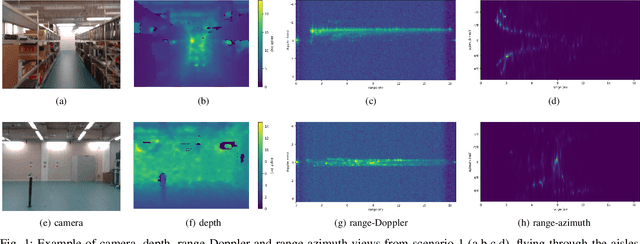

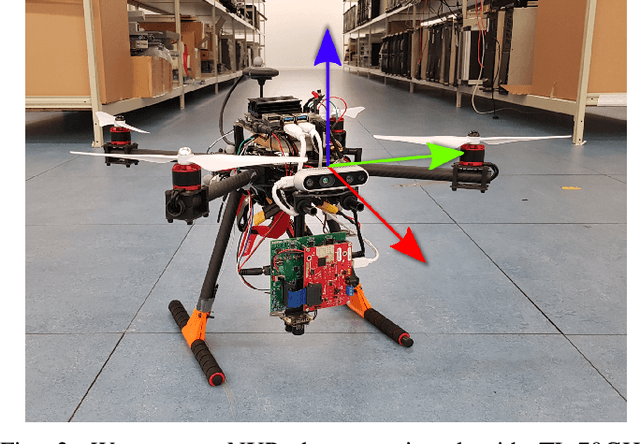

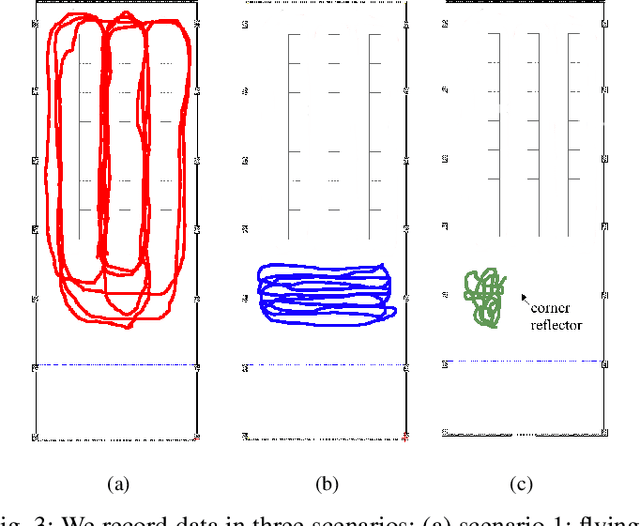

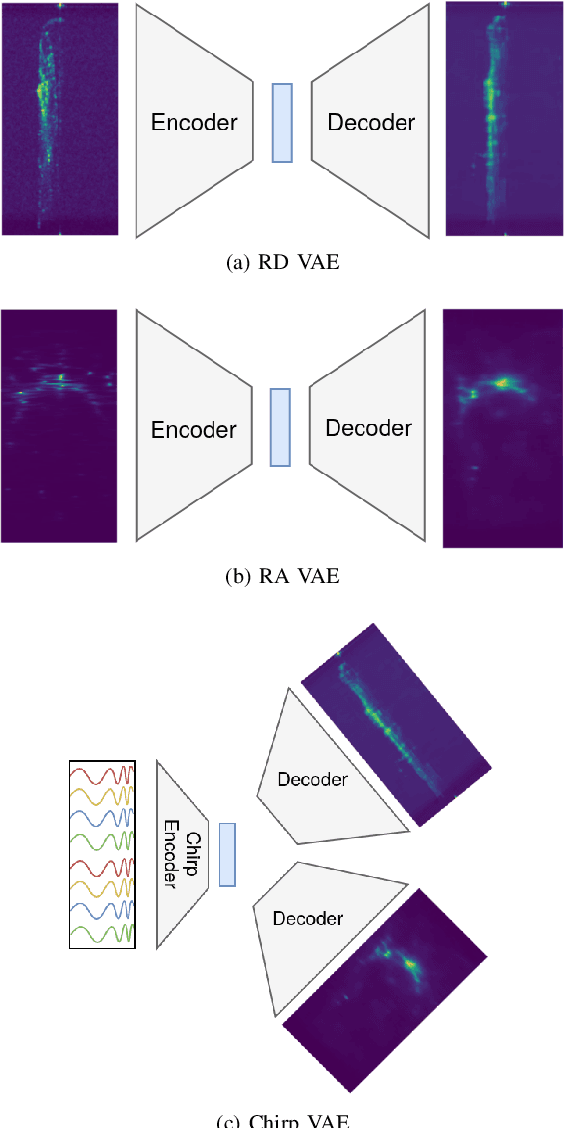

Abstract: Frequency-modulated continuous-wave (FMCW) radar is a promising sensor technology for indoor drones, as it provides range, angle and Doppler-velocity information about obstacles in the environment. Recently, deep learning approaches have been proposed for processing FMCW data, outperforming traditional detection techniques on range-Doppler or range-azimuth maps. However, these techniques come at a cost: for each new task, a deep neural network has to be trained on high-dimensional input data, stressing both data bandwidth and processing budget. In this paper, we investigate unsupervised learning techniques that generate low-dimensional representations from FMCW radar data, and evaluate to what extent these representations can be reused for multiple downstream tasks. To this end, we introduce a novel dataset of raw radar ADC data, recorded with a radar mounted on a drone flying in an indoor environment, together with ground-truth detection targets. Using real radar data, we show that with our learned representations we match the performance of conventional radar processing techniques, and that our model can be trained on different input modalities, such as the raw ADC samples of only two consecutively transmitted chirps.
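
The abstract contrasts learned representations with conventional processing of range-Doppler maps. As background on that baseline, here is a minimal sketch of how a range-Doppler map is formed from raw ADC samples: a DFT over the samples of each chirp resolves range (beat frequency f_b = 2RS/c for chirp slope S), and a DFT across chirps resolves velocity (phase step 4πvT_c/λ per chirp). The single point-target signal model and all radar parameters (60 GHz carrier, 30 MHz/µs slope, 10 MS/s, 32 samples, 16 chirps, 50 µs chirp period) are hypothetical illustrations, not the paper's dataset or pipeline, and the naive DFT stands in for an FFT.

```python
import cmath
import math

C = 3e8  # speed of light in m/s


def dft(x):
    """Naive O(n^2) DFT, standing in for the FFT a real pipeline would use."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def simulate_beat_signal(r, v, *, f0, slope, fs, n_samples, n_chirps, t_chirp):
    """Ideal complex beat signal of one point target at range r (m), velocity v (m/s).

    Toy model: no noise, no range migration, constant initial phase dropped.
    """
    wavelength = C / f0
    f_beat = 2 * r * slope / C                     # range -> beat frequency (fast time)
    dphi = 4 * math.pi * v * t_chirp / wavelength  # velocity -> phase step per chirp
    return [[cmath.exp(1j * (2 * math.pi * f_beat * n / fs + dphi * m))
             for n in range(n_samples)]
            for m in range(n_chirps)]


def range_doppler_map(adc):
    """|RD| indexed [doppler_bin][range_bin]: range DFT per chirp, then Doppler DFT."""
    range_profiles = [dft(chirp) for chirp in adc]          # [chirp][range_bin]
    n_chirps, n_bins = len(range_profiles), len(range_profiles[0])
    doppler = [dft([range_profiles[m][k] for m in range(n_chirps)])
               for k in range(n_bins)]                      # [range_bin][doppler_bin]
    return [[abs(doppler[k][d]) for k in range(n_bins)] for d in range(n_chirps)]


def peak(rd):
    """(doppler_bin, range_bin) of the strongest cell."""
    cells = ((d, k) for d in range(len(rd)) for k in range(len(rd[0])))
    return max(cells, key=lambda dk: rd[dk[0]][dk[1]])


params = dict(f0=60e9, slope=30e12, fs=10e6, n_samples=32, n_chirps=16, t_chirp=50e-6)
rd = range_doppler_map(simulate_beat_signal(6.25, 6.25, **params))
d_bin, r_bin = peak(rd)
range_res = C * params["fs"] / (2 * params["slope"] * params["n_samples"])   # m per bin
vel_res = (C / params["f0"]) / (2 * params["n_chirps"] * params["t_chirp"])  # m/s per bin
print(f"{r_bin * range_res:.2f} m, {d_bin * vel_res:.2f} m/s")  # prints 6.25 m, 6.25 m/s
```

The resulting map has n_chirps × n_samples cells per frame, which illustrates the high-dimensional input the abstract refers to. In this unsigned indexing, Doppler bins above n_chirps/2 correspond to negative velocities.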

A Low-Complexity Radar Detector Outperforming OS-CFAR for Indoor Drone Obstacle Avoidance

Jul 15, 2021

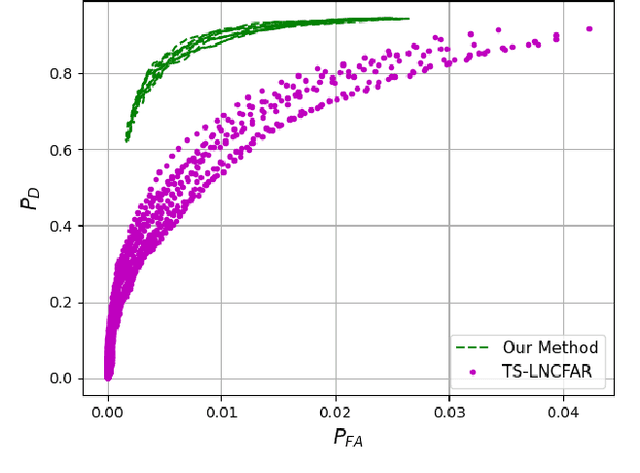

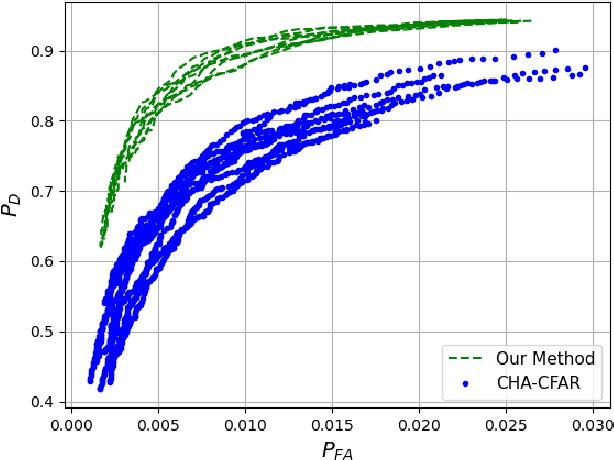

Abstract: As radar sensors are being miniaturized, there is growing interest in using them in indoor sensing applications such as indoor drone obstacle avoidance. In these novel scenarios, radars must perform well in dense scenes with a large number of neighboring scatterers. Central to radar performance is the detection algorithm used to separate targets from background noise and clutter. Traditionally, most radar systems use conventional CFAR detectors, but their performance degrades in indoor scenarios with many reflectors. Inspired by advances in non-linear target detection, we propose a novel high-performance, yet low-complexity target detector and experimentally validate it on a dataset acquired with a radar mounted on a drone. We show that our proposed algorithm drastically outperforms OS-CFAR (the standard detector used in automotive systems) for our specific task of indoor drone navigation, with more than 19% higher probability of detection for a given probability of false alarm. We also benchmark our detector against a number of recently proposed multi-target CFAR detectors and show a 16% improvement in probability of detection compared to CHA-CFAR, with even larger improvements compared to both OR-CFAR and TS-LNCFAR in our particular indoor scenario. To the best of our knowledge, this work improves the state of the art for high-performance yet low-complexity radar detection in critical indoor sensing applications.
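
For reference, the OS-CFAR baseline named in the abstract can be sketched as a textbook 1-D detector over a list of power values. This is not the authors' proposed detector, which the abstract does not specify. For each cell under test, OS-CFAR sorts the surrounding training cells (excluding guard cells) and scales the k-th smallest value into a threshold; using a rank statistic instead of a mean makes it less sensitive to a few strong neighboring targets, which matters in dense scenes. The parameter values (8 training and 2 guard cells per side, k = 12, scale factor 4.0) and the choice to skip edge cells are hypothetical; in practice the scale factor is derived from the desired probability of false alarm.

```python
def os_cfar(power, n_train=8, n_guard=2, k=12, alpha=4.0):
    """Return indices of cells whose power exceeds the OS-CFAR threshold.

    All default parameter values are hypothetical illustrations.
    """
    detections = []
    for i, cell in enumerate(power):
        lo = i - n_guard - n_train
        hi = i + n_guard + n_train
        if lo < 0 or hi >= len(power):
            continue  # simplification: skip cells without a full reference window
        # Training cells on both sides, excluding guard cells and the cell under test.
        reference = power[lo:i - n_guard] + power[i + n_guard + 1:hi + 1]
        # Ordered statistic: the k-th smallest reference value (k is 1-based).
        noise_estimate = sorted(reference)[k - 1]
        if cell > alpha * noise_estimate:
            detections.append(i)
    return detections


power = [1.0] * 64   # flat noise floor (hypothetical)
power[20] = 50.0     # two strong, closely spaced targets
power[25] = 50.0
print(os_cfar(power))  # prints [20, 25]
```

Each target falls inside the other's reference window, yet both are detected because the 12th smallest of the 16 reference values still comes from the noise floor.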

Towards bio-inspired unsupervised representation learning for indoor aerial navigation

Jun 17, 2021

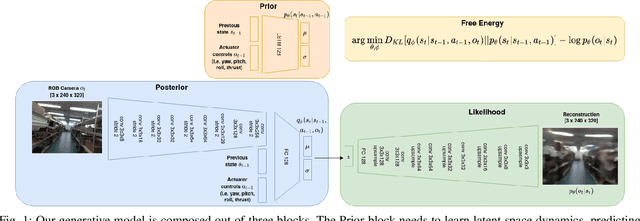

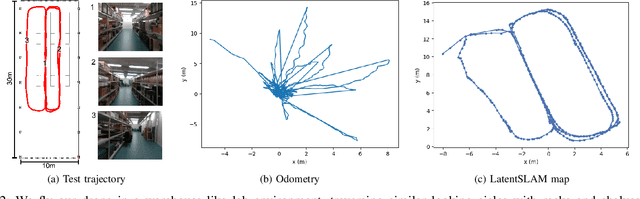

Abstract: Aerial navigation in GPS-denied indoor environments is still an open challenge. Drones can perceive the environment from a richer set of viewpoints, while having more stringent compute and energy constraints than other autonomous platforms. To tackle this problem, this research presents a biologically inspired deep-learning algorithm for simultaneous localization and mapping (SLAM) and its application in a drone navigation system. We propose an unsupervised representation learning method that yields low-dimensional latent state descriptors, mitigates sensitivity to perceptual aliasing, and runs on power-efficient embedded hardware. The algorithm is evaluated on a dataset collected in an indoor warehouse environment, and initial results show the feasibility of robust indoor aerial navigation.
