Bart Dhoedt

Zero-shot Structure Learning and Planning for Autonomous Robot Navigation using Active Inference

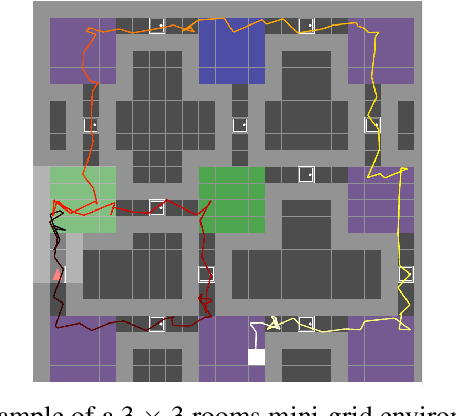

Oct 10, 2025

Abstract:Autonomous navigation in unfamiliar environments requires robots to simultaneously explore, localise, and plan under uncertainty, without relying on predefined maps or extensive training. We present a biologically inspired, Active Inference-based framework, Active Inference MAPping and Planning (AIMAPP). This model unifies mapping, localisation, and decision-making within a single generative model. Inspired by hippocampal navigation, it uses topological reasoning, place-cell encoding, and episodic memory to guide behaviour. The agent builds and updates a sparse topological map online, learns state transitions dynamically, and plans actions by minimising Expected Free Energy. This allows it to balance goal-directed and exploratory behaviours. We implemented a ROS-compatible navigation system that is sensor- and robot-agnostic, capable of integrating with diverse hardware configurations. It operates in a fully self-supervised manner, is resilient to drift, and supports both exploration and goal-directed navigation without any pre-training. We demonstrate robust performance in large-scale real and simulated environments against state-of-the-art planning models, highlighting the system's adaptability to ambiguous observations, environmental changes, and sensor noise. The model offers a biologically inspired, modular solution to scalable, self-supervised navigation in unstructured settings. AIMAPP is available at https://github.com/decide-ugent/AIMAPP.

Bio-Inspired Topological Autonomous Navigation with Active Inference in Robotics

Aug 10, 2025

Abstract:Achieving fully autonomous exploration and navigation remains a critical challenge in robotics, requiring integrated solutions for localisation, mapping, decision-making and motion planning. Existing approaches either rely on strict navigation rules lacking adaptability or on pre-training, which requires large datasets. These AI methods are often computationally intensive or based on static assumptions, limiting their adaptability in dynamic or unknown environments. This paper introduces a bio-inspired agent based on the Active Inference Framework (AIF), which unifies mapping, localisation, and adaptive decision-making for autonomous navigation, including exploration and goal-reaching. Our model creates and updates a topological map of the environment in real time, planning goal-directed trajectories to explore or reach objectives without requiring pre-training. Key contributions include a probabilistic reasoning framework for interpretable navigation, robust adaptability to dynamic changes, and a modular ROS2 architecture compatible with existing navigation systems. Our method was tested in simulated and real-world environments. The agent successfully explores large-scale simulated environments and adapts to dynamic obstacles and drift, proving to be comparable to other exploration strategies such as Gbplanner, FAEL and Frontiers. This method offers a scalable and transparent approach for navigating complex, unstructured environments.

Navigation and Exploration with Active Inference: from Biology to Industry

Aug 10, 2025

Abstract:By building and updating internal cognitive maps, animals exhibit extraordinary navigation abilities in complex, dynamic environments. Inspired by these biological mechanisms, we present a real-time robotic navigation system grounded in the Active Inference Framework (AIF). Our model incrementally constructs a topological map, infers the agent's location, and plans actions by minimising expected uncertainty and fulfilling perceptual goals without any prior training. We integrate the model into the ROS2 ecosystem and validate its adaptability and efficiency across both 2D and 3D environments (simulated and real-world), demonstrating competitive performance with traditional and state-of-the-art exploration approaches while offering a biologically inspired navigation approach.

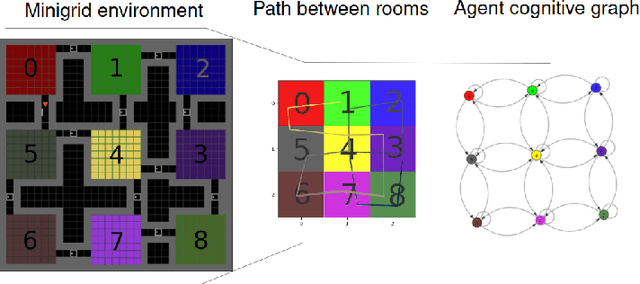

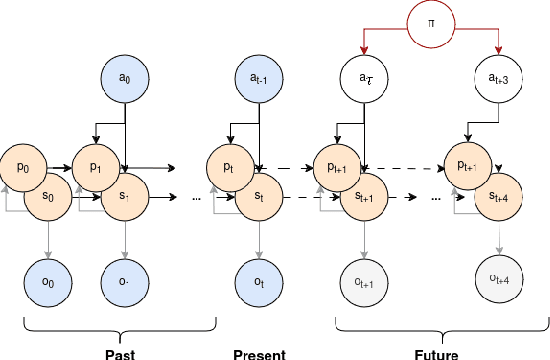

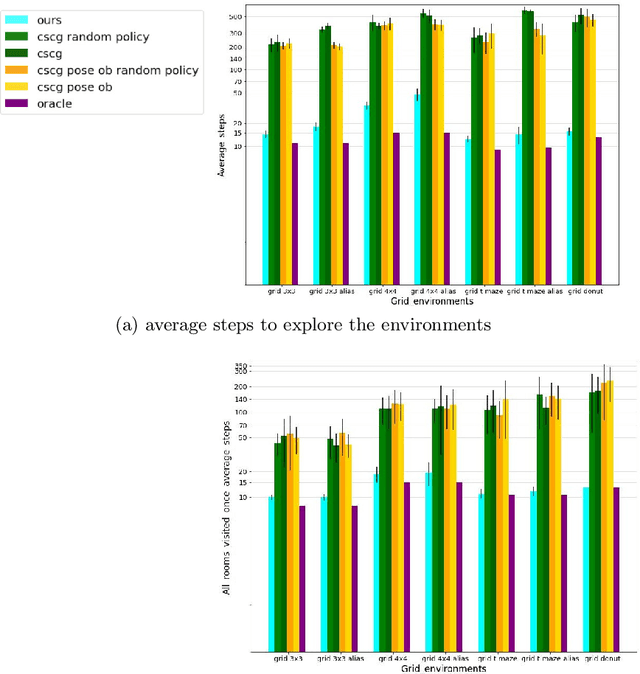

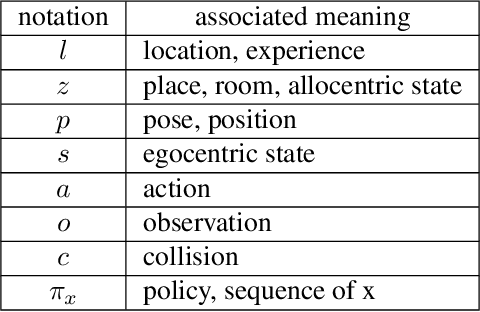

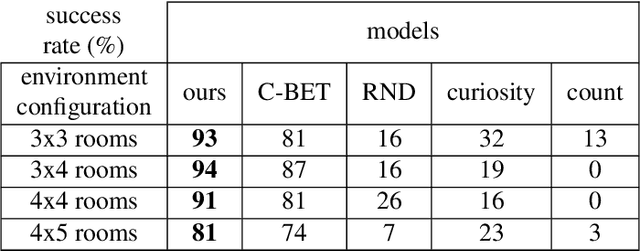

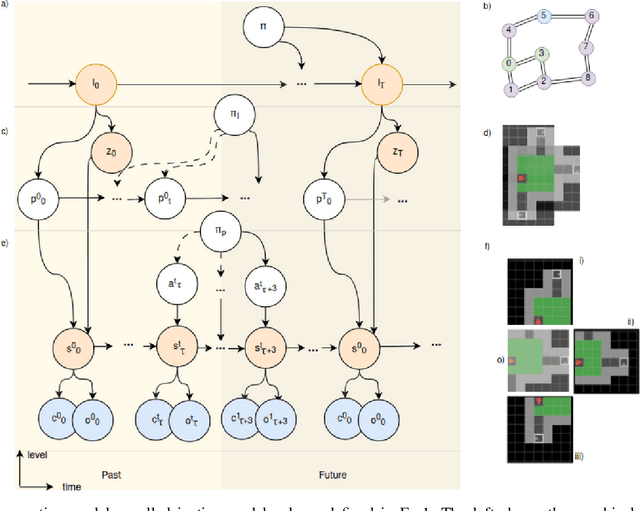

Learning Dynamic Cognitive Map with Autonomous Navigation

Nov 13, 2024

Abstract:Inspired by animal navigation strategies, we introduce a novel computational model to navigate and map a space rooted in biologically inspired principles. Animals exhibit extraordinary navigation prowess, harnessing memory, imagination, and strategic decision-making to traverse complex and aliased environments adeptly. Our model aims to replicate these capabilities by incorporating a dynamically expanding cognitive map over predicted poses within an Active Inference framework, enhancing our agent's generative model plasticity to novelty and environmental changes. Through structure learning and active inference navigation, our model demonstrates efficient exploration and exploitation, dynamically expanding its model capacity in response to anticipated novel un-visited locations and updating the map given new evidence contradicting previous beliefs. Comparative analyses in mini-grid environments with the Clone-Structured Cognitive Graph model (CSCG), which shares similar objectives, highlight our model's ability to rapidly learn environmental structures within a single episode, with minimal navigation overlap. Our model achieves this without prior knowledge of observation and world dimensions, underscoring its robustness and efficacy in navigating intricate environments.

Representing Positional Information in Generative World Models for Object Manipulation

Sep 19, 2024

Abstract:Object manipulation capabilities are essential skills that set apart embodied agents engaging with the world, especially in the realm of robotics. The ability to predict outcomes of interactions with objects is paramount in this setting. While model-based control methods have started to be employed for tackling manipulation tasks, they have faced challenges in accurately manipulating objects. Analyzing this limitation, we trace the underperformance to the way current world models represent crucial positional information, especially about the target's goal specification for object positioning tasks. We introduce a general approach that empowers world model-based agents to effectively solve object-positioning tasks. We propose two variants of this approach for generative world models: position-conditioned (PCP) and latent-conditioned (LCP) policy learning. In particular, LCP employs object-centric latent representations that explicitly capture object positional information for goal specification. This naturally leads to the emergence of multimodal capabilities, enabling the specification of goals through spatial coordinates or a visual goal. Our methods are rigorously evaluated across several manipulation environments, showing favorable performance compared to current model-based control approaches.

Exploring and Learning Structure: Active Inference Approach in Navigational Agents

Aug 12, 2024

Abstract:Drawing inspiration from animal navigation strategies, we introduce a novel computational model for navigation and mapping, rooted in biologically inspired principles. Animals exhibit remarkable navigation abilities by efficiently using memory, imagination, and strategic decision-making to navigate complex and aliased environments. Building on these insights, we integrate traditional cognitive mapping approaches with an Active Inference Framework (AIF) to learn an environment structure in a few steps. Through the incorporation of topological mapping for long-term memory and AIF for navigation planning and structure learning, our model can dynamically apprehend environmental structures and expand its internal map with predicted beliefs during exploration. Comparative experiments with the Clone-Structured Cognitive Graph (CSCG) model highlight our model's ability to rapidly learn environmental structures in a single episode, with minimal navigation overlap. This is achieved without prior knowledge of the dimensions of the environment or the type of observations, showcasing its robustness and effectiveness in navigating ambiguous environments.

Multimodal foundation world models for generalist embodied agents

Jun 26, 2024

Abstract:Learning generalist embodied agents, able to solve multitudes of tasks in different domains, is a long-standing problem. Reinforcement learning (RL) is hard to scale up as it requires a complex reward design for each task. In contrast, language can specify tasks in a more natural way. Current foundation vision-language models (VLMs) generally require fine-tuning or other adaptations to be functional, due to the significant domain gap. However, the lack of multimodal data in such domains represents an obstacle toward developing foundation models for embodied applications. In this work, we overcome these problems by presenting multimodal foundation world models, able to connect and align the representation of foundation VLMs with the latent space of generative world models for RL, without any language annotations. The resulting agent learning framework, GenRL, allows one to specify tasks through vision and/or language prompts, ground them in the embodied domain's dynamics, and learn the corresponding behaviors in imagination. As assessed through large-scale multi-task benchmarking, GenRL exhibits strong multi-task generalization performance in several locomotion and manipulation domains. Furthermore, by introducing a data-free RL strategy, it lays the groundwork for foundation model-based RL for generalist embodied agents.

Spatial and Temporal Hierarchy for Autonomous Navigation using Active Inference in Minigrid Environment

Dec 08, 2023

Abstract:Robust evidence suggests that humans explore their environment using a combination of topological landmarks and coarse-grained path-integration. This approach relies on identifiable environmental features (topological landmarks) in tandem with estimations of distance and direction (coarse-grained path-integration) to construct cognitive maps of the surroundings. This cognitive map is believed to exhibit a hierarchical structure, allowing efficient planning when solving complex navigation tasks. Inspired by this human behaviour, this paper presents a scalable hierarchical active inference model for autonomous navigation, exploration, and goal-oriented behaviour. The model uses visual observation and motion perception to combine curiosity-driven exploration with goal-oriented behaviour. Motion is planned using different levels of reasoning, i.e. from context to place to motion. This allows for efficient navigation in new spaces and rapid progress toward a target. By incorporating these human navigational strategies and their hierarchical representation of the environment, this model proposes a new solution for autonomous navigation and exploration. The approach is validated through simulations in a mini-grid environment.

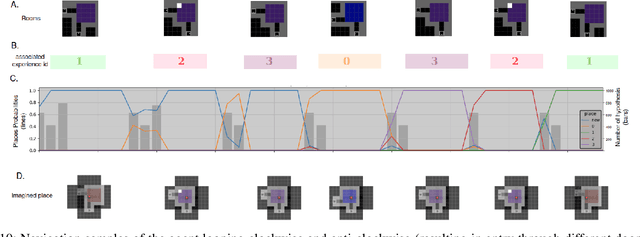

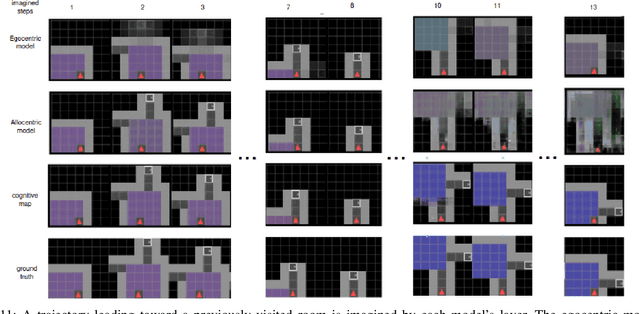

Learning Spatial and Temporal Hierarchies: Hierarchical Active Inference for navigation in Multi-Room Maze Environments

Sep 18, 2023

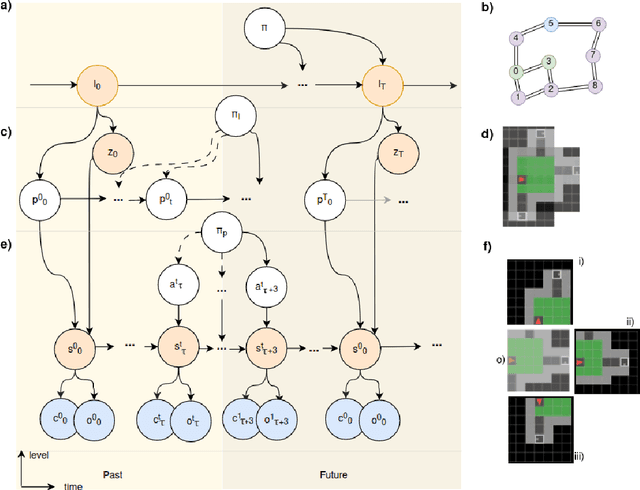

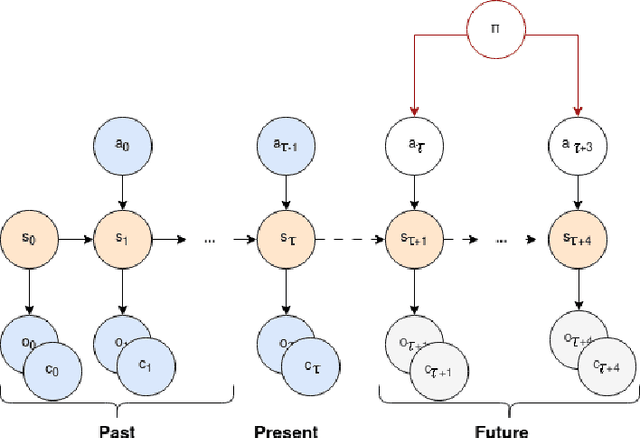

Abstract:Cognitive maps play a crucial role in facilitating flexible behaviour by representing spatial and conceptual relationships within an environment. The ability to learn and infer the underlying structure of the environment is crucial for effective exploration and navigation. This paper introduces a hierarchical active inference model addressing the challenge of inferring structure in the world from pixel-based observations. We propose a three-layer hierarchical model consisting of a cognitive map, an allocentric, and an egocentric world model, combining curiosity-driven exploration with goal-oriented behaviour at the different levels of reasoning from context to place to motion. This allows for efficient exploration and goal-directed search in room-structured mini-grid environments.

Learning to Navigate from Scratch using World Models and Curiosity: the Good, the Bad, and the Ugly

Aug 30, 2023

Abstract:Learning to navigate unknown environments from scratch is a challenging problem. This work presents a system that integrates world models with curiosity-driven exploration for autonomous navigation in new environments. We evaluate performance through simulations and real-world experiments of varying scales and complexities. In simulated environments, the approach rapidly and comprehensively explores the surroundings. Real-world scenarios introduce additional challenges. Despite demonstrating promise in a small controlled environment, we acknowledge that larger and dynamic environments can pose challenges for the current system. Our analysis emphasizes the significance of developing adaptable and robust world models that can handle environmental changes to prevent repetitive exploration of the same areas.
