Daria de Tinguy

Bio-Inspired Topological Autonomous Navigation with Active Inference in Robotics

Aug 10, 2025

Abstract:Achieving fully autonomous exploration and navigation remains a critical challenge in robotics, requiring integrated solutions for localisation, mapping, decision-making and motion planning. Existing approaches either rely on strict navigation rules lacking adaptability or on pre-training, which requires large datasets. These AI methods are often computationally intensive or based on static assumptions, limiting their adaptability in dynamic or unknown environments. This paper introduces a bio-inspired agent based on the Active Inference Framework (AIF), which unifies mapping, localisation, and adaptive decision-making for autonomous navigation, including exploration and goal-reaching. Our model creates and updates a topological map of the environment in real time, planning goal-directed trajectories to explore or reach objectives without requiring pre-training. Key contributions include a probabilistic reasoning framework for interpretable navigation, robust adaptability to dynamic changes, and a modular ROS2 architecture compatible with existing navigation systems. Our method was tested in simulated and real-world environments. The agent successfully explores large-scale simulated environments and adapts to dynamic obstacles and drift, proving to be comparable to other exploration strategies such as Gbplanner, FAEL and Frontiers. This method offers a scalable and transparent approach for navigating complex, unstructured environments.

Navigation and Exploration with Active Inference: from Biology to Industry

Aug 10, 2025

Abstract:By building and updating internal cognitive maps, animals exhibit extraordinary navigation abilities in complex, dynamic environments. Inspired by these biological mechanisms, we present a real-time robotic navigation system grounded in the Active Inference Framework (AIF). Our model incrementally constructs a topological map, infers the agent's location, and plans actions by minimising expected uncertainty and fulfilling perceptual goals without any prior training. Integrated into the ROS2 ecosystem, we validate its adaptability and efficiency across both 2D and 3D environments (simulated and real-world), demonstrating competitive performance with traditional and state-of-the-art exploration approaches while offering a biologically inspired navigation approach.

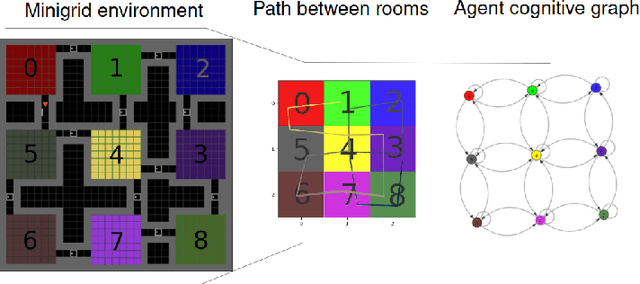

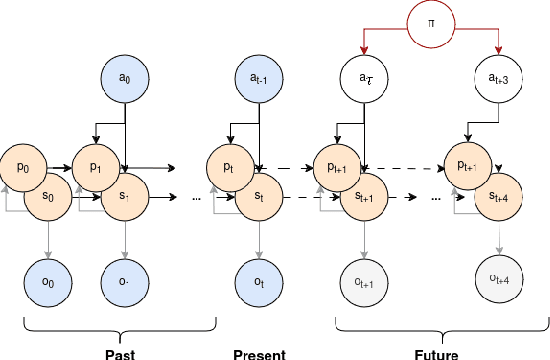

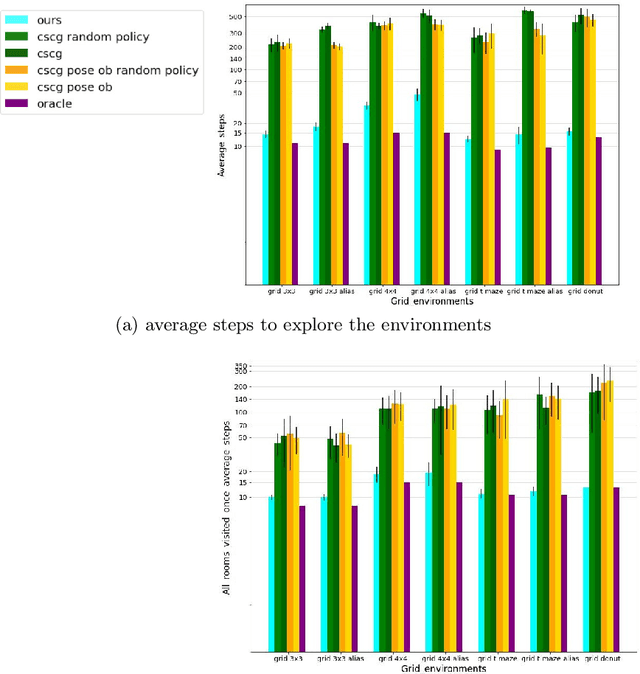

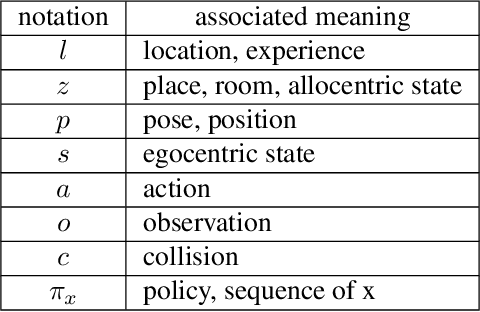

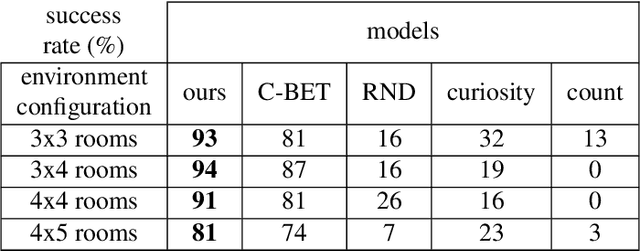

Learning Dynamic Cognitive Map with Autonomous Navigation

Nov 13, 2024

Abstract:Inspired by animal navigation strategies, we introduce a novel computational model to navigate and map a space rooted in biologically inspired principles. Animals exhibit extraordinary navigation prowess, harnessing memory, imagination, and strategic decision-making to traverse complex and aliased environments adeptly. Our model aims to replicate these capabilities by incorporating a dynamically expanding cognitive map over predicted poses within an Active Inference framework, enhancing our agent's generative model plasticity to novelty and environmental changes. Through structure learning and active inference navigation, our model demonstrates efficient exploration and exploitation, dynamically expanding its model capacity in response to anticipated novel un-visited locations and updating the map given new evidence contradicting previous beliefs. Comparative analyses in mini-grid environments with the Clone-Structured Cognitive Graph model (CSCG), which shares similar objectives, highlight our model's ability to rapidly learn environmental structures within a single episode, with minimal navigation overlap. Our model achieves this without prior knowledge of observation and world dimensions, underscoring its robustness and efficacy in navigating intricate environments.

Exploring and Learning Structure: Active Inference Approach in Navigational Agents

Aug 12, 2024

Abstract:Drawing inspiration from animal navigation strategies, we introduce a novel computational model for navigation and mapping, rooted in biologically inspired principles. Animals exhibit remarkable navigation abilities by efficiently using memory, imagination, and strategic decision-making to navigate complex and aliased environments. Building on these insights, we integrate traditional cognitive mapping approaches with an Active Inference Framework (AIF) to learn an environment structure in a few steps. Through the incorporation of topological mapping for long-term memory and AIF for navigation planning and structure learning, our model can dynamically apprehend environmental structures and expand its internal map with predicted beliefs during exploration. Comparative experiments with the Clone-Structured Cognitive Graph (CSCG) model highlight our model's ability to rapidly learn environmental structures in a single episode, with minimal navigation overlap. This is achieved without prior knowledge of the dimensions of the environment or the type of observations, showcasing its robustness and effectiveness in navigating ambiguous environments.

Spatial and Temporal Hierarchy for Autonomous Navigation using Active Inference in Minigrid Environment

Dec 08, 2023

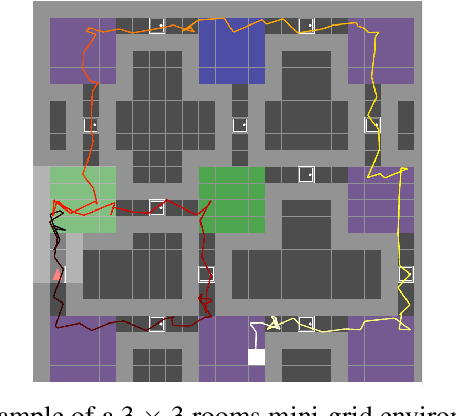

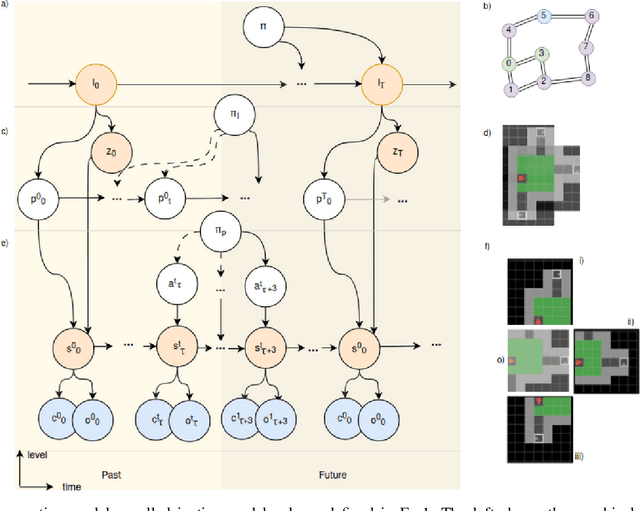

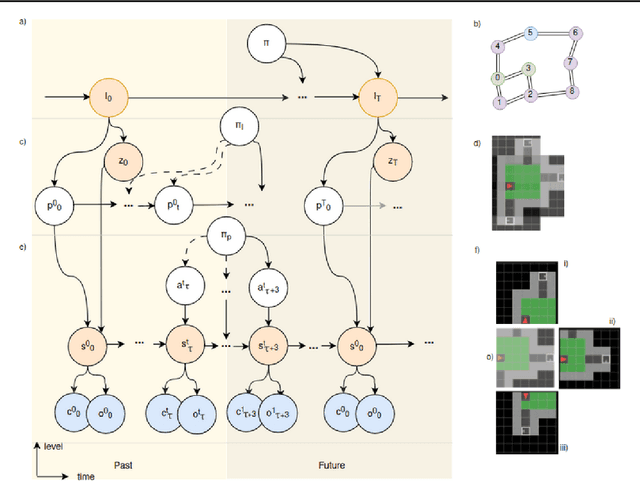

Abstract:Robust evidence suggests that humans explore their environment using a combination of topological landmarks and coarse-grained path-integration. This approach relies on identifiable environmental features (topological landmarks) in tandem with estimations of distance and direction (coarse-grained path-integration) to construct cognitive maps of the surroundings. This cognitive map is believed to exhibit a hierarchical structure, allowing efficient planning when solving complex navigation tasks. Inspired by human behaviour, this paper presents a scalable hierarchical active inference model for autonomous navigation, exploration, and goal-oriented behaviour. The model uses visual observation and motion perception to combine curiosity-driven exploration with goal-oriented behaviour. Motion is planned using different levels of reasoning, i.e. from context to place to motion. This allows for efficient navigation in new spaces and rapid progress toward a target. By incorporating these human navigational strategies and their hierarchical representation of the environment, this model proposes a new solution for autonomous navigation and exploration. The approach is validated through simulations in a mini-grid environment.

Learning Spatial and Temporal Hierarchies: Hierarchical Active Inference for navigation in Multi-Room Maze Environments

Sep 18, 2023

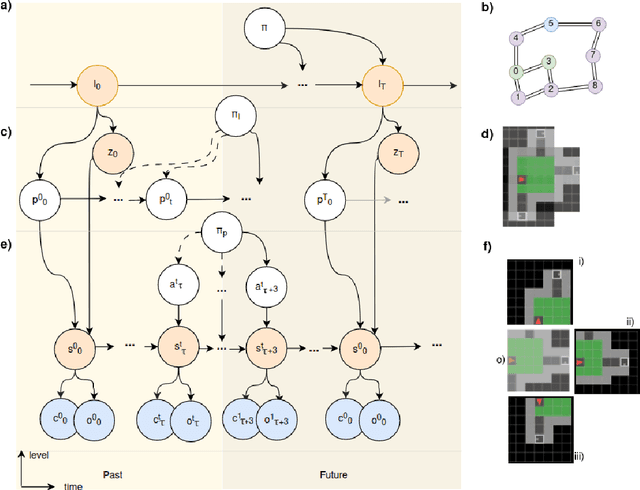

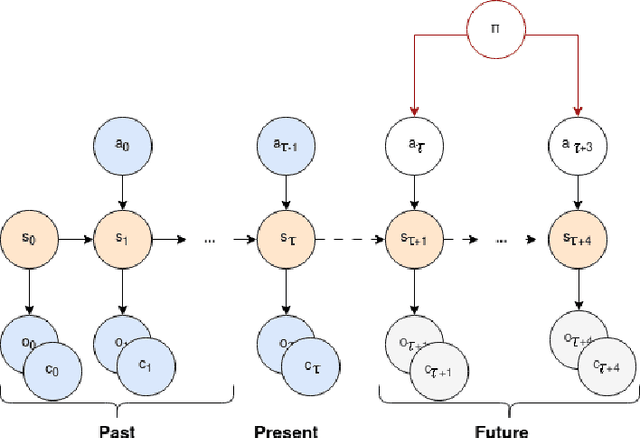

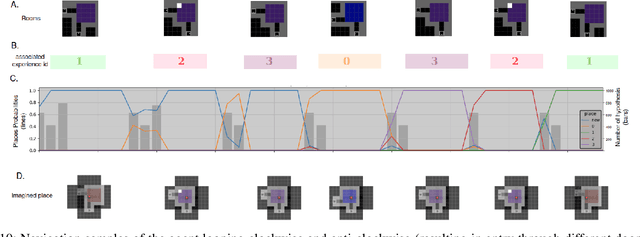

Abstract:Cognitive maps play a crucial role in facilitating flexible behaviour by representing spatial and conceptual relationships within an environment. The ability to learn and infer the underlying structure of the environment is crucial for effective exploration and navigation. This paper introduces a hierarchical active inference model addressing the challenge of inferring structure in the world from pixel-based observations. We propose a three-layer hierarchical model consisting of a cognitive map, an allocentric, and an egocentric world model, combining curiosity-driven exploration with goal-oriented behaviour at the different levels of reasoning from context to place to motion. This allows for efficient exploration and goal-directed search in room-structured mini-grid environments.

Learning to Navigate from Scratch using World Models and Curiosity: the Good, the Bad, and the Ugly

Aug 30, 2023

Abstract:Learning to navigate unknown environments from scratch is a challenging problem. This work presents a system that integrates world models with curiosity-driven exploration for autonomous navigation in new environments. We evaluate performance through simulations and real-world experiments of varying scales and complexities. In simulated environments, the approach rapidly and comprehensively explores the surroundings. Real-world scenarios introduce additional challenges. Despite demonstrating promise in a small controlled environment, we acknowledge that larger and dynamic environments can pose challenges for the current system. Our analysis emphasizes the significance of developing adaptable and robust world models that can handle environmental changes to prevent repetitive exploration of the same areas.

Inferring Hierarchical Structure in Multi-Room Maze Environments

Jun 23, 2023

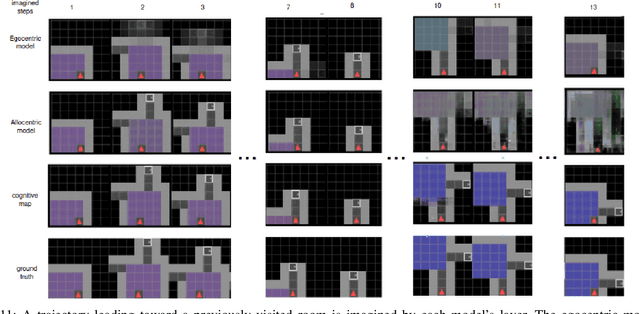

Abstract:Cognitive maps play a crucial role in facilitating flexible behaviour by representing spatial and conceptual relationships within an environment. The ability to learn and infer the underlying structure of the environment is crucial for effective exploration and navigation. This paper introduces a hierarchical active inference model addressing the challenge of inferring structure in the world from pixel-based observations. We propose a three-layer hierarchical model consisting of a cognitive map, an allocentric, and an egocentric world model, combining curiosity-driven exploration with goal-oriented behaviour at the different levels of reasoning from context to place to motion. This allows for efficient exploration and goal-directed search in room-structured mini-grid environments.

Home Run: Finding Your Way Home by Imagining Trajectories

Aug 19, 2022

Abstract:When studying unconstrained behaviour and allowing mice to leave their cage to navigate a complex labyrinth, the mice exhibit foraging behaviour in the labyrinth searching for rewards, returning to their home cage now and then, e.g. to drink. Surprisingly, when executing such a "home run", the mice do not follow the exact reverse path; in fact, the entry path and home path have very little overlap. Recent work proposed a hierarchical active inference model for navigation, where the low level model makes inferences about hidden states and poses that explain sensory inputs, whereas the high level model makes inferences about moving between locations, effectively building a map of the environment. However, using this "map" for planning only allows the agent to find trajectories that it previously explored, far from the observed mice's behaviour. In this paper, we explore ways of incorporating before-unvisited paths in the planning algorithm, by using the low level generative model to imagine potential, yet undiscovered paths. We demonstrate a proof of concept in a grid-world environment, showing how an agent can accurately predict a new, shorter path in the map leading to its starting point, using a generative model learnt from pixel-based observations.
