Samira Sebt

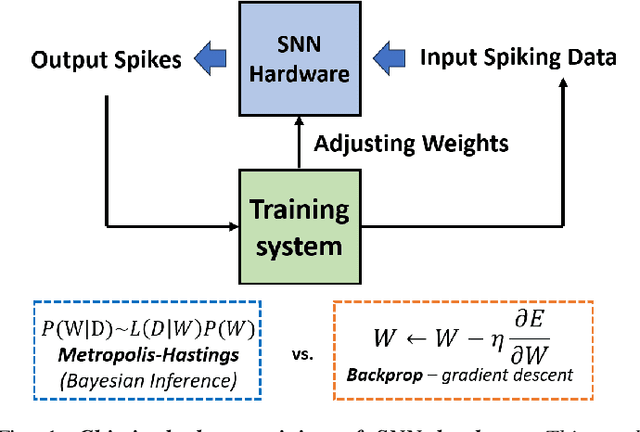

Towards Chip-in-the-loop Spiking Neural Network Training via Metropolis-Hastings Sampling

Feb 09, 2024

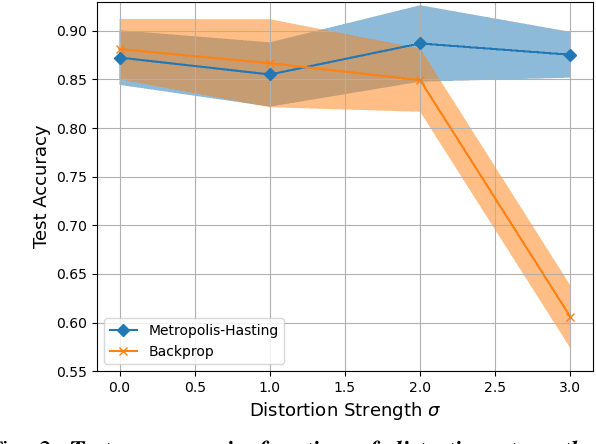

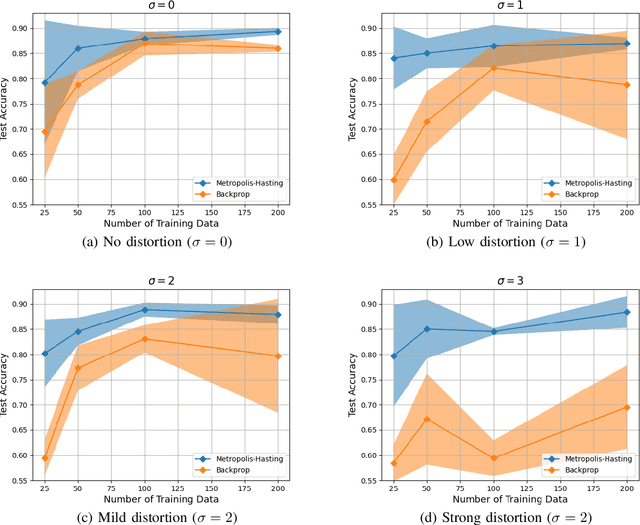

Abstract: This paper studies the use of Metropolis-Hastings sampling for training Spiking Neural Network (SNN) hardware subject to strong unknown non-idealities, and compares it with backpropagation of error (backprop) using surrogate gradients, an approach widely used to train SNNs in the literature. Simulations are conducted in a chip-in-the-loop training setting for a biomedical application, where an SNN subject to unknown distortion must be trained to detect cancer from measurements. Our results show that the proposed approach outperforms backprop by up to $27\%$ in accuracy under strong hardware non-idealities. Our results also show that the proposed approach generalizes better than backprop, needing more than $10 \times$ less training data to reach effective accuracy. These findings make the proposed training approach well-suited for SNN implementations in analog subthreshold circuits and other emerging technologies where unknown hardware non-idealities can jeopardize backprop.
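To make the idea concrete, here is a minimal sketch of random-walk Metropolis-Hastings training against a black-box device. It is not the paper's implementation: the toy `chip_forward` model, its `tanh` distortion, the error-rate loss, the `temperature` and `step_size` values, and the synthetic dataset are all assumptions chosen for illustration. The point it shows is that the sampler only needs forward evaluations of the hardware, so the distortion never has to be known or differentiated.

```python
import math
import random


def chip_forward(weights, x):
    """Hypothetical black-box 'chip': a single thresholded unit whose
    programmed weights are distorted in a way the trainer never sees."""
    distorted = [math.tanh(1.5 * w) + 0.1 for w in weights]  # assumed non-ideality
    drive = sum(d * xi for d, xi in zip(distorted, x))
    return 1 if drive > 0.0 else 0  # spike (1) or no spike (0)


def error_rate(weights, data):
    """Fraction of misclassified samples, measured through the chip."""
    wrong = sum(1 for x, y in data if chip_forward(weights, x) != y)
    return wrong / len(data)


def metropolis_hastings_train(data, n_weights, steps=2000, step_size=0.3,
                              temperature=0.05, seed=0):
    """Random-walk Metropolis-Hastings over the weights.

    The target density is assumed proportional to exp(-loss / temperature).
    The Gaussian proposal is symmetric, so the acceptance probability
    reduces to min(1, exp(-(loss_new - loss_old) / temperature)).
    """
    rng = random.Random(seed)
    current = [rng.gauss(0.0, 1.0) for _ in range(n_weights)]
    current_loss = error_rate(current, data)
    best, best_loss = list(current), current_loss

    for _ in range(steps):
        proposal = [w + rng.gauss(0.0, step_size) for w in current]
        proposal_loss = error_rate(proposal, data)  # one forward pass on the chip
        delta = proposal_loss - current_loss
        accept_prob = math.exp(min(0.0, -delta / temperature))
        if rng.random() < accept_prob:
            current, current_loss = proposal, proposal_loss
            if current_loss < best_loss:
                best, best_loss = list(current), current_loss
    return best, best_loss


def make_toy_data(n=200, seed=1):
    """Synthetic linearly separable data; the last input is a constant bias."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), 1.0]
        y = 1 if x[0] + 0.5 * x[1] > 0.1 else 0
        data.append((x, y))
    return data


if __name__ == "__main__":
    data = make_toy_data()
    best, best_loss = metropolis_hastings_train(data, n_weights=3)
    print("best error rate:", best_loss)
```

Because the loss here is a non-differentiable error count taken through the distorted device, a gradient-based trainer would need a surrogate model of the chip, whereas the sampler above uses only measured outputs.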
