Continuously Learning to Detect People on the Fly: A Bio-inspired Visual System for Drones

Feb 20, 2022

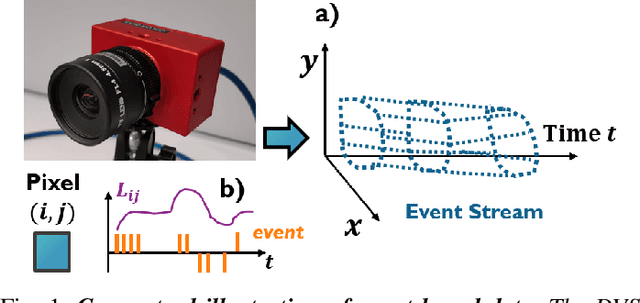

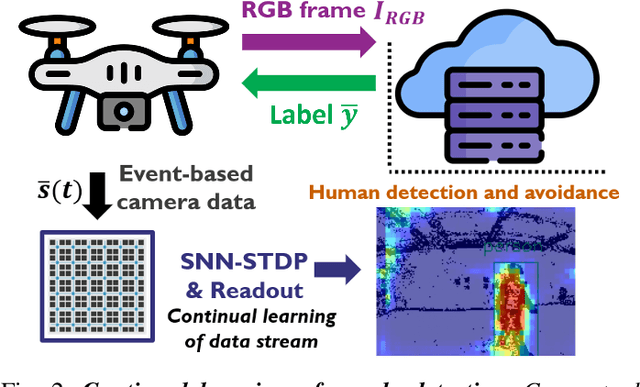

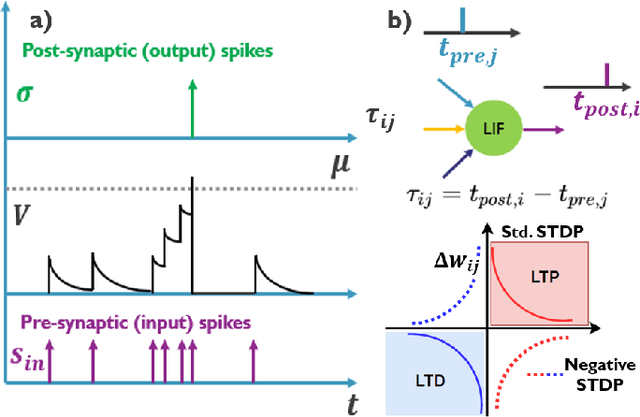

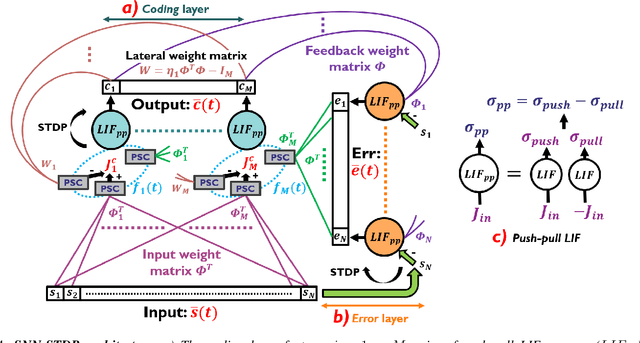

This paper demonstrates for the first time that a biologically plausible spiking neural network (SNN) equipped with Spike-Timing-Dependent Plasticity (STDP) can continuously learn to detect walking people on the fly using retina-inspired, event-based cameras. Our pipeline works as follows. First, a short sequence of event data ($<2$ minutes) of a walking human, captured from a flying drone, is fed to a convolutional SNN-STDP system, which also receives teacher spiking signals from a readout (forming a semi-supervised system). Then, STDP adaptation is stopped and the learned system is evaluated on test sequences. We conduct several experiments to study the effect of key parameters of our system and to compare it against conventionally trained CNNs. We show that our system reaches a higher peak $F_1$ score (+19%) than CNNs fed event-based camera frames, while enabling online adaptation.
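To make the two stages of the pipeline concrete, here is a minimal sketch, assuming a generic textbook pair-based STDP rule rather than the exact rule used in the paper. The function names (`stdp_update`, `f1_score`), the `plastic` flag, and all parameter values (`a_plus`, `a_minus`, `tau`) are illustrative assumptions, and the teacher spiking signal from the readout is not modeled. The sketch shows how a single synaptic weight might change with spike timing during learning, how adaptation can be switched off for testing, and how an $F_1$ score is computed from detection counts.

```python
import math


def stdp_update(w, dt, plastic=True, a_plus=0.01, a_minus=0.012,
                tau=20.0, w_min=0.0, w_max=1.0):
    """Generic pair-based STDP update for one synapse (illustrative only).

    dt is t_post - t_pre in milliseconds. All constants are assumed
    values for illustration, not parameters taken from the paper.
    """
    if not plastic:
        # Adaptation stopped (test phase): the weight stays frozen.
        return w
    if dt >= 0:
        # Pre-synaptic spike precedes post-synaptic spike: potentiation.
        dw = a_plus * math.exp(-dt / tau)
    else:
        # Post-synaptic spike precedes pre-synaptic spike: depression.
        dw = -a_minus * math.exp(dt / tau)
    # Keep the weight inside its allowed range.
    return min(w_max, max(w_min, w + dw))


def f1_score(tp, fp, fn):
    """F1 score from true positives, false positives, false negatives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    w = 0.5
    w = stdp_update(w, dt=5.0)                  # learning phase: weight grows
    w = stdp_update(w, dt=5.0, plastic=False)   # test phase: weight unchanged
    print(w, f1_score(tp=8, fp=2, fn=2))
```

Freezing the weights through a flag mirrors the abstract's description of stopping STDP adaptation before assessment; a full system would apply such an update across convolutional weight kernels rather than to a single scalar.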
