Ali Mollahosseini

TweetNERD -- End to End Entity Linking Benchmark for Tweets

Oct 14, 2022

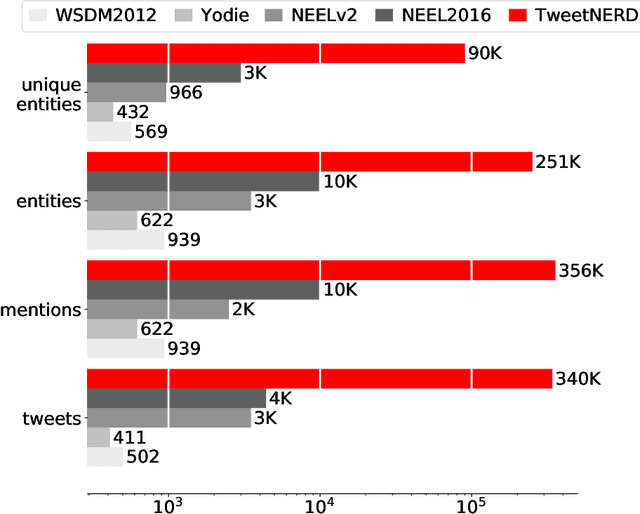

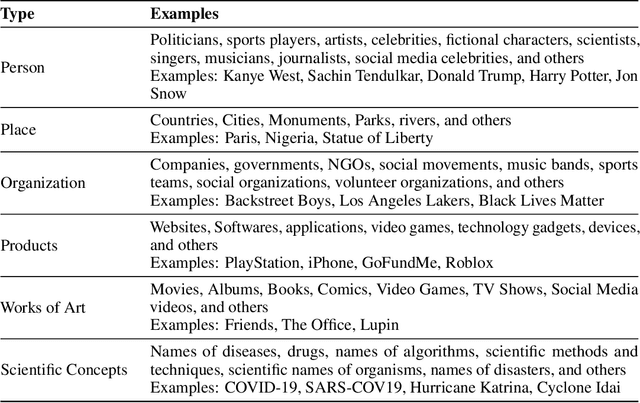

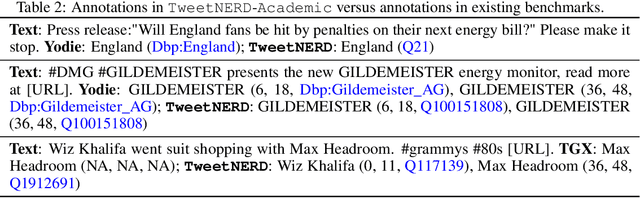

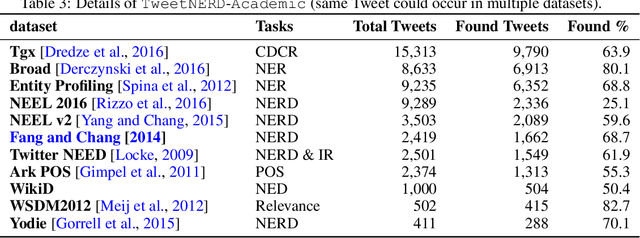

Abstract: Named Entity Recognition and Disambiguation (NERD) systems are foundational for information retrieval, question answering, event detection, and other natural language processing (NLP) applications. We introduce TweetNERD, a dataset of 340K+ Tweets spanning 2010-2021, for benchmarking NERD systems on Tweets. It is the largest and most temporally diverse open-source benchmark dataset for NERD on Tweets and can be used to facilitate research in this area. We describe the evaluation setup with TweetNERD for three NERD tasks: Named Entity Recognition (NER), Entity Linking with True Spans (EL), and End to End Entity Linking (End2End); and we report the performance of existing publicly available methods on specific TweetNERD splits. TweetNERD is available at https://doi.org/10.5281/zenodo.6617192 under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. More details are available at https://github.com/twitter-research/TweetNERD.

CBAG: An Efficient Genetic Algorithm for the Graph Burning Problem

Aug 06, 2022

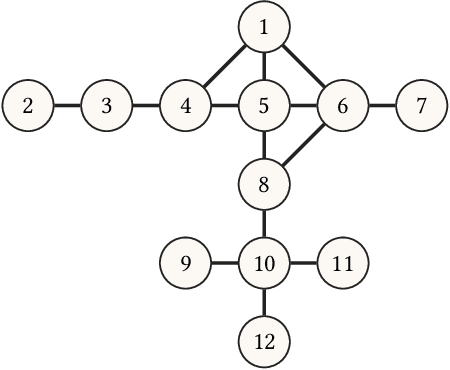

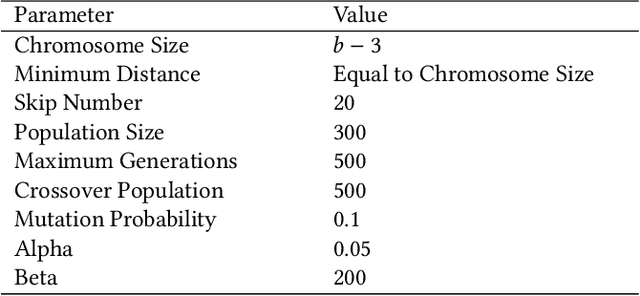

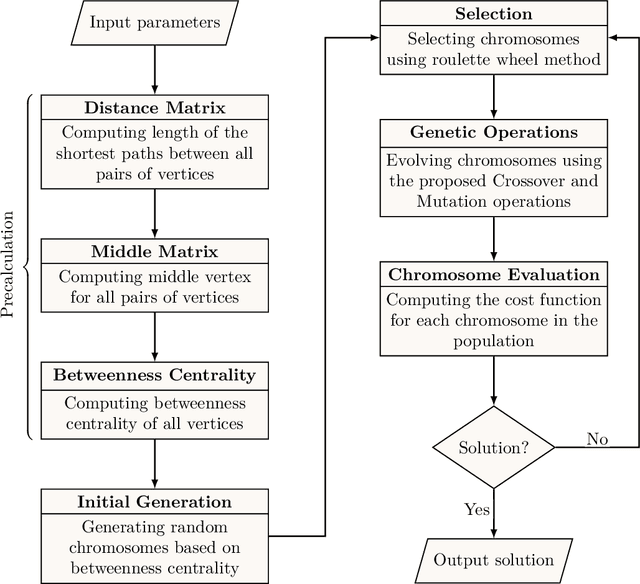

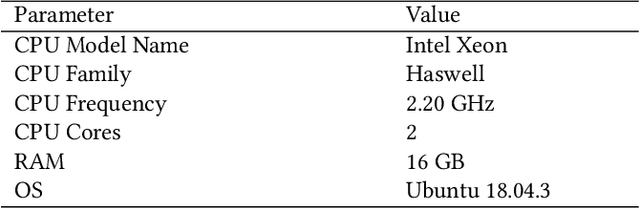

Abstract: Information spread is an intriguing topic in network science, which investigates how information, influence, or contagion propagates through networks. Graph burning is a simplified deterministic model of how information spreads within networks. Because the problem is NP-complete, it is computationally difficult to solve using exact algorithms. Accordingly, a number of heuristics and approximation algorithms have been proposed in the literature for the graph burning problem. In this paper, we propose an efficient genetic algorithm called Centrality BAsed Genetic-algorithm (CBAG) for solving the graph burning problem. Considering the unique characteristics of the graph burning problem, we introduce novel genetic operators, a chromosome representation, and an evaluation method. In the proposed algorithm, the well-known betweenness centrality is used as the backbone of our chromosome initialization procedure. The proposed algorithm is implemented and compared with previous heuristics and approximation algorithms on 15 benchmark graphs of different sizes. The results show that the proposed algorithm achieves better performance than the previous state-of-the-art heuristics. The complete source code is available online and can be used to find optimal or near-optimal solutions for the graph burning problem.
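To make the graph burning model concrete, the following is a minimal sketch of how a candidate burning sequence could be checked. It is not taken from the CBAG source code: the function name `burns_graph` and the adjacency-dictionary input are assumptions made for illustration. It uses the standard covering view of the problem: with k sources, the i-th source (1-indexed) has k - i rounds to spread, so the sequence burns the graph when every vertex lies within distance k - i of some source i.

```python
from collections import deque


def burns_graph(adjacency, sequence):
    """Return True if `sequence` covers every vertex of the graph.

    `adjacency` maps each vertex to an iterable of its neighbours
    (hypothetical input format). The i-th source (1-indexed) spreads
    for len(sequence) - i rounds, one hop per round.
    """
    k = len(sequence)
    burned = set()
    for i, source in enumerate(sequence, start=1):
        radius = k - i
        # Breadth-first search from the source, limited to `radius` hops.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            burned.add(v)
            if dist[v] == radius:
                continue  # fire from this source cannot spread further
            for w in adjacency[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
    return len(burned) == len(adjacency)


# Path graph 0 - 1 - 2 - 3
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
print(burns_graph(path, [1, 3]))  # two sources are enough for this path
```

A genetic algorithm for this problem would need some fitness check of this kind for each candidate sequence; how CBAG actually evaluates chromosomes is described in the paper, not here.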

A Pilot Study on Using an Intelligent Life-like Robot as a Companion for Elderly Individuals with Dementia and Depression

Dec 07, 2017

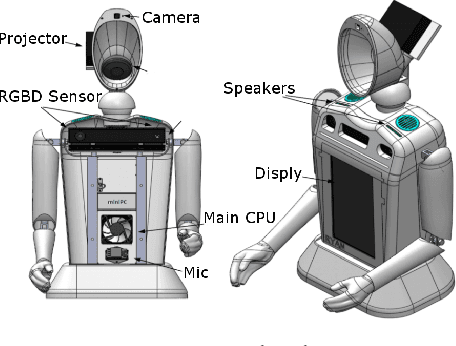

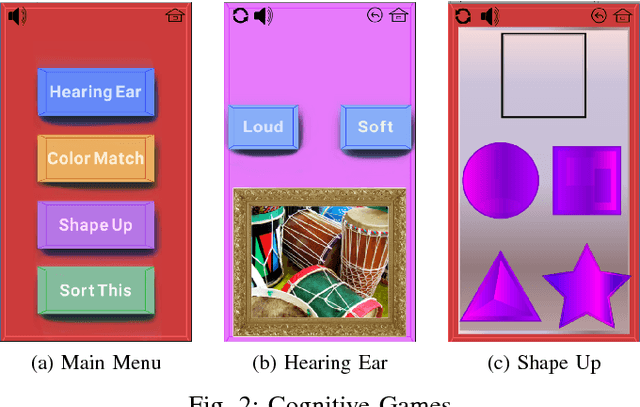

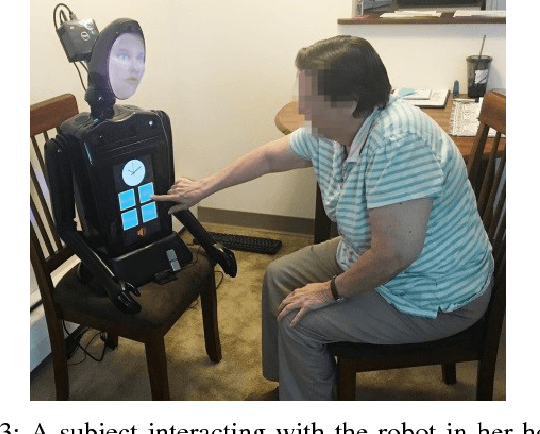

Abstract: This paper presents the design, development, methodology, and results of a pilot study on using an intelligent, emotive, and perceptive social robot (aka Companionbot) to improve the quality of life of elderly people with dementia and/or depression. Ryan, the Companionbot prototyped in this project, is a rear-projected life-like conversational robot. Ryan is equipped with features that can (1) interpret and respond to users' emotions through facial expressions and spoken language, (2) proactively engage in conversations with users, and (3) remind them about their daily life schedules (e.g., taking their medicine on time). Ryan also engages users in cognitive games and reminiscence activities. We conducted a pilot study with six elderly individuals with moderate dementia and/or depression living in a senior living facility in Denver. Each individual had 24/7 access to a Ryan in his/her room for a period of 4-6 weeks. Our observations of these individuals, interviews with them and their caregivers, and analyses of their interactions during this period revealed that they established rapport with the robot and greatly valued and enjoyed having a Companionbot in their room.

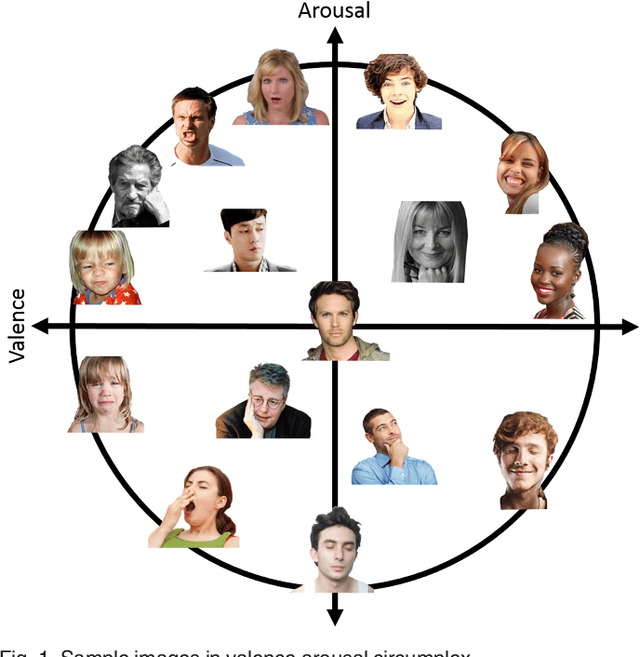

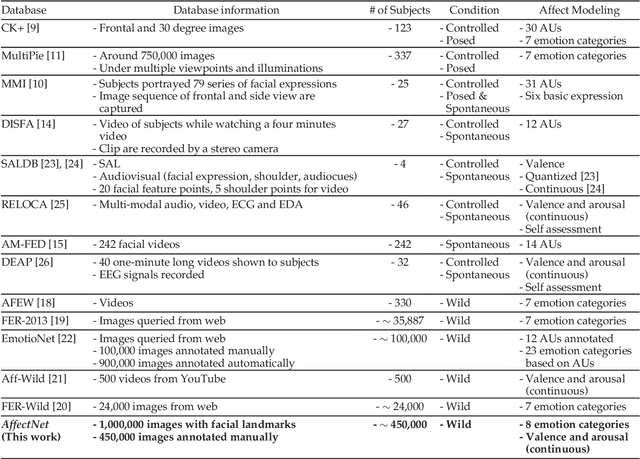

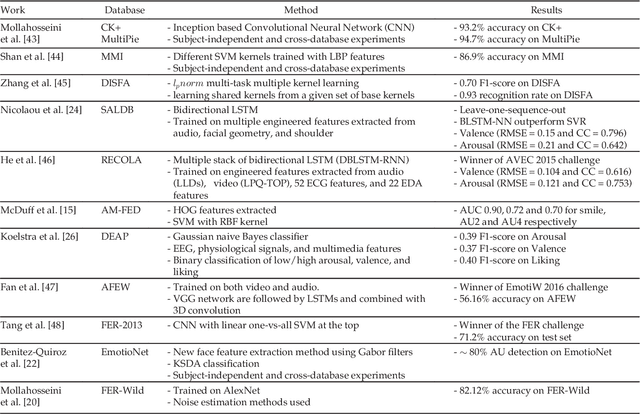

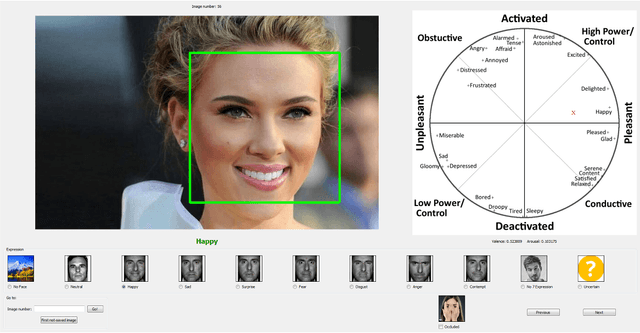

AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild

Oct 09, 2017

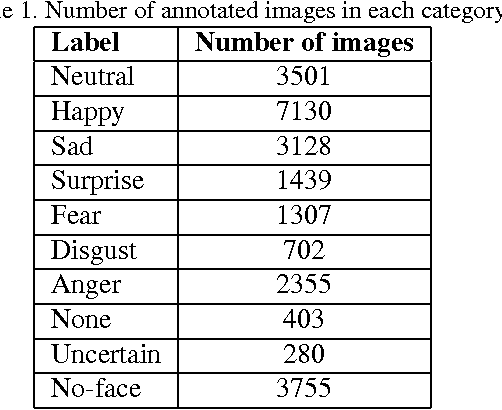

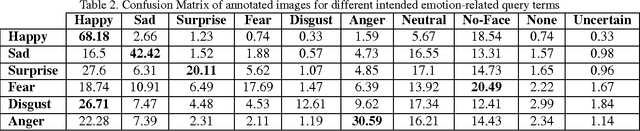

Abstract: Automated affective computing in the wild is a challenging problem in computer vision. Existing annotated databases of facial expressions in the wild are small and mostly cover discrete emotions (aka the categorical model). Very few annotated facial databases exist for affective computing in the continuous dimensional model (e.g., valence and arousal). To meet this need, we collected, annotated, and prepared for public distribution a new database of facial emotions in the wild (called AffectNet). AffectNet contains more than 1,000,000 facial images collected from the Internet by querying three major search engines using 1250 emotion-related keywords in six different languages. About half of the retrieved images were manually annotated for the presence of seven discrete facial expressions and the intensity of valence and arousal. AffectNet is by far the largest database of facial expression, valence, and arousal in the wild, enabling research in automated facial expression recognition in two different emotion models. Two baseline deep neural networks are used to classify images in the categorical model and to predict the intensity of valence and arousal. Various evaluation metrics show that our deep neural network baselines can perform better than conventional machine learning methods and off-the-shelf facial expression recognition systems.

Facial Expression Recognition from World Wild Web

Jan 05, 2017

Abstract: Recognizing facial expressions in a wild setting has remained a challenging task in computer vision. The World Wide Web is a good source of facial images, most of which are captured in uncontrolled conditions. In fact, the Internet is a World Wild Web of facial images with expressions. This paper presents the results of a new study on collecting, annotating, and analyzing wild facial expressions from the web. Three search engines were queried using 1250 emotion-related keywords in six different languages, and the retrieved images were mapped by two annotators to six basic expressions and neutral. Deep neural networks and noise modeling were used in three different training scenarios to find how accurately facial expressions can be recognized when trained on noisy images collected from the web using query terms (e.g., happy face, laughing man). The results of our experiments show that deep neural networks can recognize wild facial expressions with an accuracy of 82.12%.

ExpressionBot: An Emotive Lifelike Robotic Face for Face-to-Face Communication

Nov 20, 2015

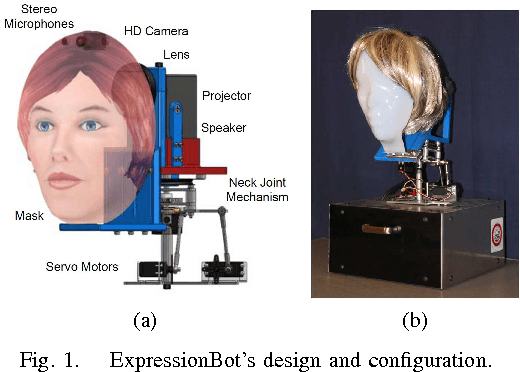

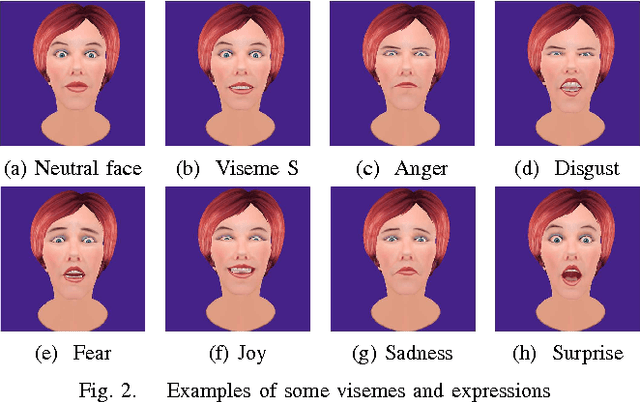

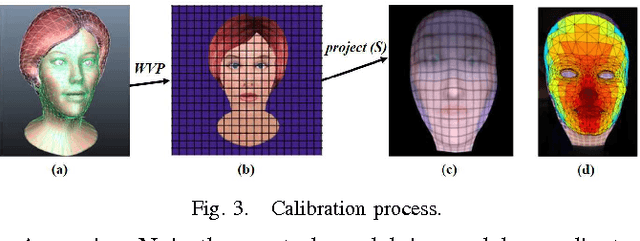

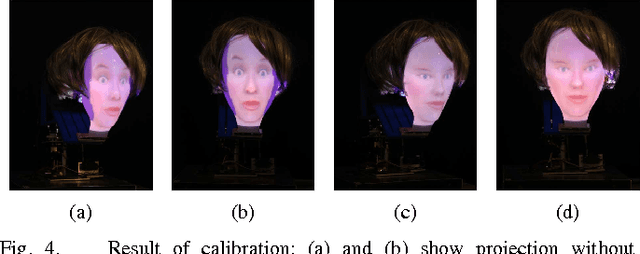

Abstract: This article proposes an emotive lifelike robotic face, called ExpressionBot, that is designed to support verbal and non-verbal communication between the robot and humans, with the goal of closely modeling the dynamics of natural face-to-face communication. The proposed robotic head consists of two major components: 1) a hardware component that contains a small projector, a fish-eye lens, a custom-designed mask and a neck system with 3 degrees of freedom; 2) a facial animation system, projected onto the robotic mask, that is capable of presenting facial expressions, realistic eye movement, and accurate visual speech. We present three studies that compare Human-Robot Interaction with Human-Computer Interaction with a screen-based model of the avatar. The studies indicate that the robotic face is well accepted by users, with some advantages in recognition of facial expression and mutual eye gaze contact.

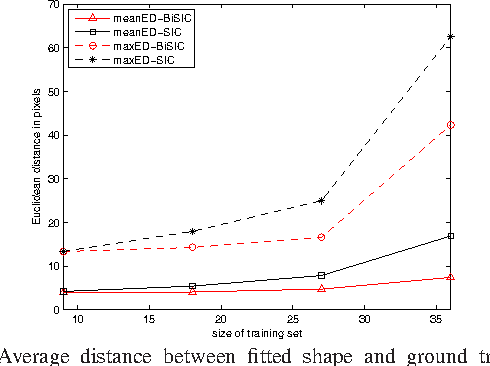

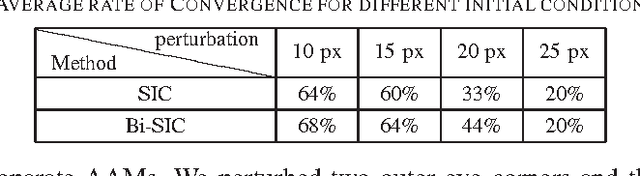

Bidirectional Warping of Active Appearance Model

Nov 20, 2015

Abstract: The Active Appearance Model (AAM) is a commonly used method for facial image analysis, with applications in face identification and facial expression recognition. This paper proposes a new approach to AAM fitting based on image alignment, called bidirectional warping. Previous approaches warp either the input image or the appearance template. We propose to warp both the input image, using an incremental update by an affine transformation, and the appearance template, using an inverse compositional approach. Our experimental results on the Multi-PIE face database show that the bidirectional approach outperforms state-of-the-art inverse compositional fitting approaches in extracting landmark points of faces with shape and pose variations.

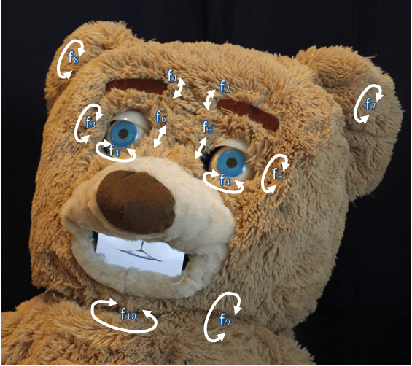

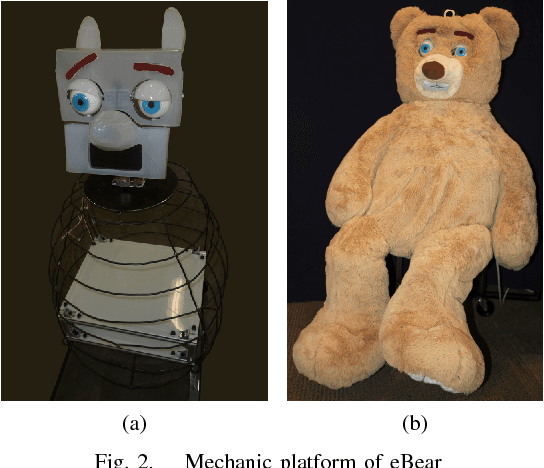

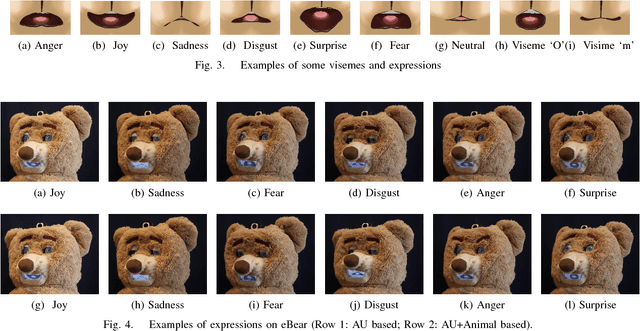

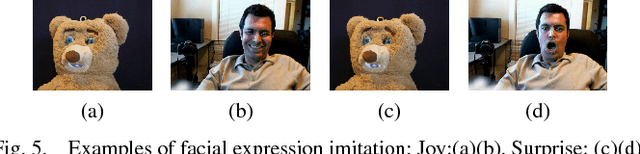

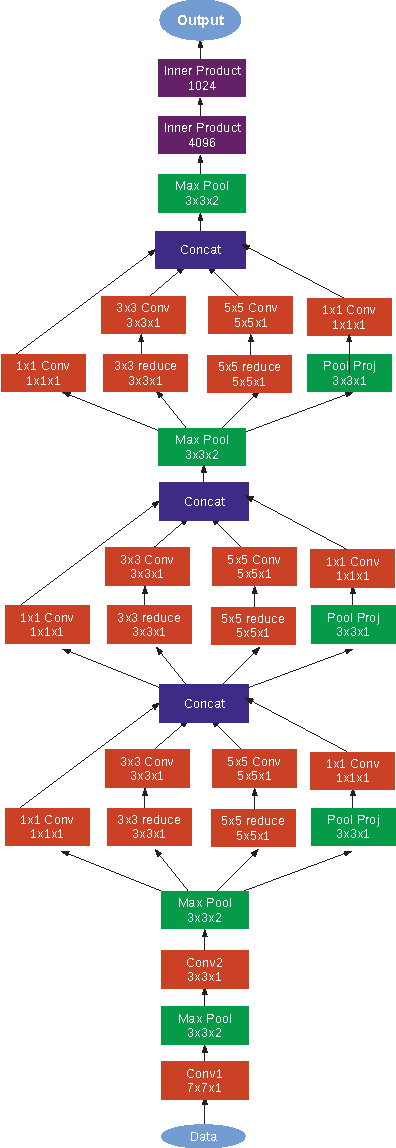

eBear: An Expressive Bear-Like Robot

Nov 20, 2015

Abstract: This paper presents an anthropomorphic robotic bear for the exploration of human-robot interaction, including verbal and non-verbal communication. The robot is implemented with a hybrid face composed of a mechanical faceplate with 10 DOFs and an LCD-display-equipped mouth. The facial emotions of the bear are designed based on the description of the Facial Action Coding System as well as some animal-like gestures described by Darwin. The mouth movements are realized by synthesizing emotions with speech. User acceptance investigations have been conducted to evaluate the likability of the facial behaviors exhibited by the eBear. Multiple Kernel Learning is proposed to fuse different features for recognizing users' facial expressions. Our experimental results show that the developed bear-like robot can perceive basic facial expressions and provide emotive conveyance towards human beings.

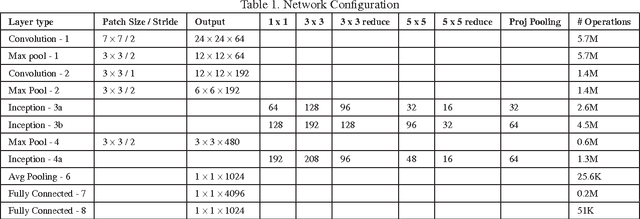

Going Deeper in Facial Expression Recognition using Deep Neural Networks

Nov 12, 2015

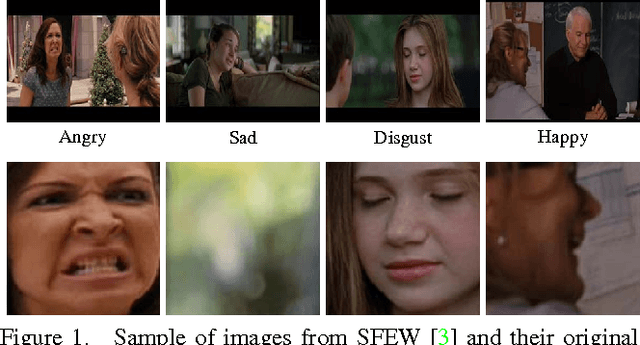

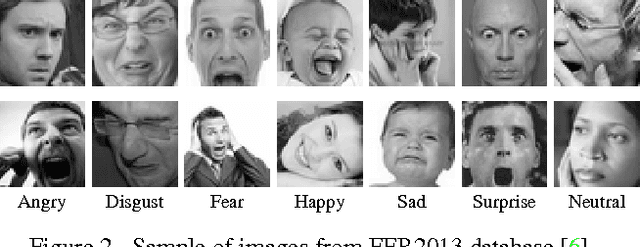

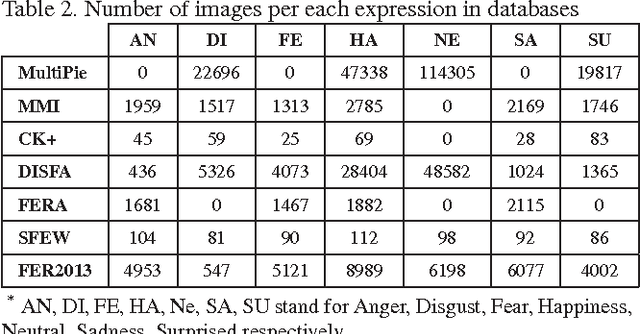

Abstract: Automated Facial Expression Recognition (FER) has remained a challenging and interesting problem. Despite efforts made in developing various methods for FER, existing approaches traditionally lack generalizability when applied to unseen images or those that are captured in wild settings. Most of the existing approaches are based on engineered features (e.g., HOG, LBPH, and Gabor) where the classifier's hyperparameters are tuned to give the best recognition accuracies across a single database or a small collection of similar databases. Nevertheless, the results are not significant when they are applied to novel data. This paper proposes a deep neural network architecture to address the FER problem across multiple well-known standard face datasets. Specifically, our network consists of two convolutional layers, each followed by max pooling, and then four Inception layers. The network is a single-component architecture that takes registered facial images as input and classifies them into one of the six basic expressions or the neutral expression. We conducted comprehensive experiments on seven publicly available facial expression databases, viz. MultiPIE, MMI, CK+, DISFA, FERA, SFEW, and FER2013. The results of the proposed architecture are comparable to or better than the state-of-the-art methods, and better than traditional convolutional neural networks in both accuracy and training time.

* To appear in IEEE Winter Conference on Applications of Computer Vision (WACV), 2016 {Accepted in first round submission}

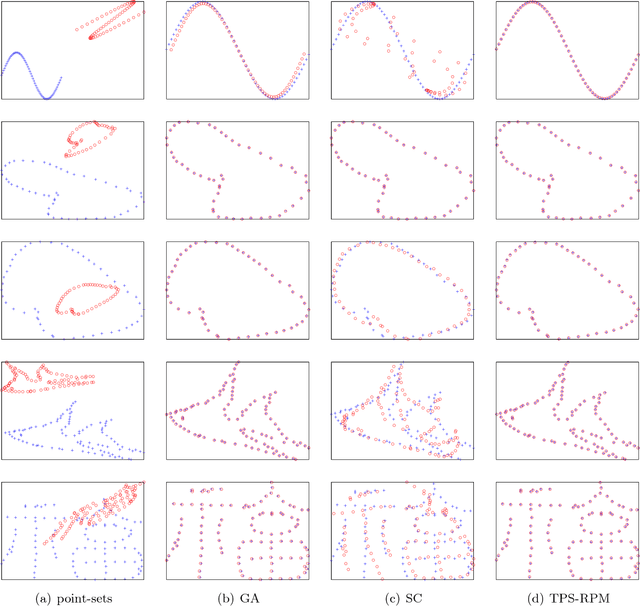

Affine Image Registration Transformation Estimation Using a Real Coded Genetic Algorithm with SBX

Apr 10, 2012

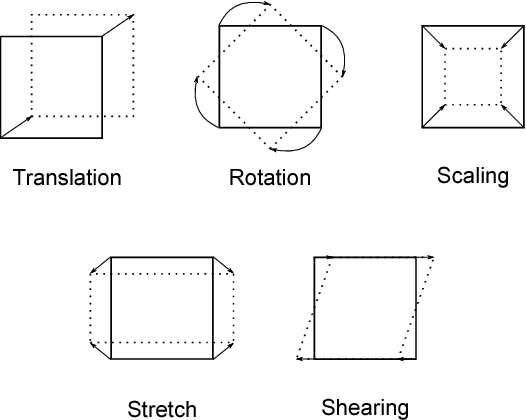

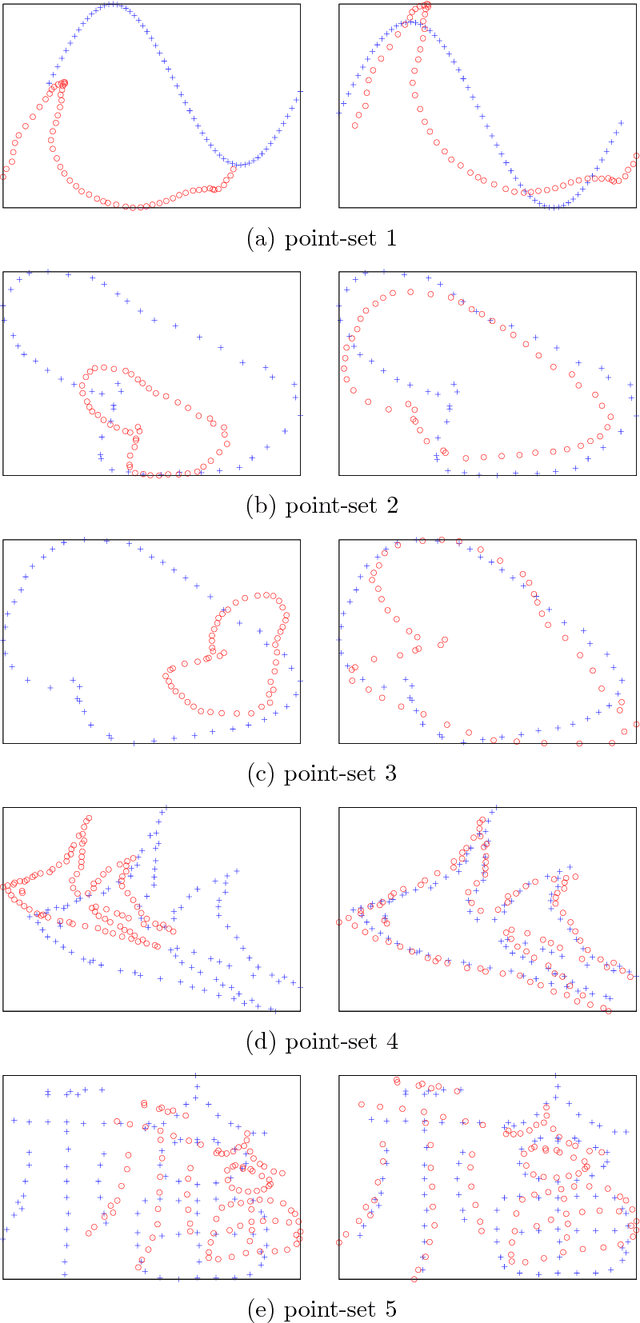

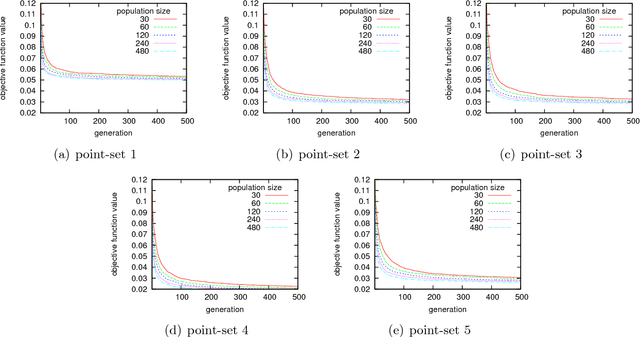

Abstract: This paper describes the application of a real coded genetic algorithm (GA) to align two or more 2-D images by means of image registration. The proposed search strategy is a transformation parameters-based approach involving the affine transform. The real coded GA uses Simulated Binary Crossover (SBX), a parent-centric recombination operator that has been shown to deliver good performance in many optimization problems in the continuous domain. In addition, we propose a new technique for matching points between warped and static images by using a randomized ordering when visiting the points during the matching procedure. This new technique makes the evaluation of the objective function somewhat noisy, but GAs and other population-based search algorithms have been shown to cope well with noisy fitness evaluations. The results obtained are competitive with those obtained by state-of-the-art classical methods in image registration, confirming the usefulness of the proposed noisy objective function and the suitability of SBX as a recombination operator for this type of problem.
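As an illustration of the recombination step, here is a minimal sketch of Simulated Binary Crossover in its common textbook form, without variable bounds. It is not the authors' implementation: the function name `sbx_crossover`, the distribution index `eta=2.0`, and the encoding of an affine transform as six real parameters are assumptions made for this example.

```python
import random


def sbx_crossover(parent1, parent2, eta=2.0, rng=random):
    """Simulated Binary Crossover on two real-valued parameter vectors.

    `eta` is the distribution index: larger values keep the children
    closer to the parents. Bounds handling is omitted in this sketch.
    """
    child1, child2 = [], []
    for x1, x2 in zip(parent1, parent2):
        u = rng.random()  # uniform in [0, 1)
        if u <= 0.5:
            beta = (2.0 * u) ** (1.0 / (eta + 1.0))
        else:
            beta = (1.0 / (2.0 * (1.0 - u))) ** (1.0 / (eta + 1.0))
        # Children are placed symmetrically around the parents' midpoint.
        child1.append(0.5 * ((1.0 + beta) * x1 + (1.0 - beta) * x2))
        child2.append(0.5 * ((1.0 - beta) * x1 + (1.0 + beta) * x2))
    return child1, child2


# Hypothetical affine parameter vectors [a, b, tx, c, d, ty]
p1 = [1.0, 0.0, 5.0, 0.0, 1.0, -3.0]
p2 = [0.9, 0.1, 4.0, -0.1, 1.1, -2.0]
c1, c2 = sbx_crossover(p1, p2, rng=random.Random(0))
```

Because the two children are symmetric about the parents' midpoint, each pair of child values sums to the same total as the corresponding parent values, which is a quick sanity check for an implementation.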
