eBear: An Expressive Bear-Like Robot

Nov 20, 2015

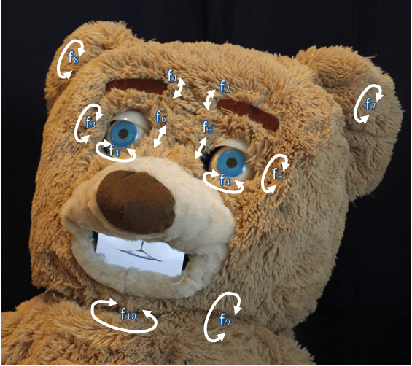

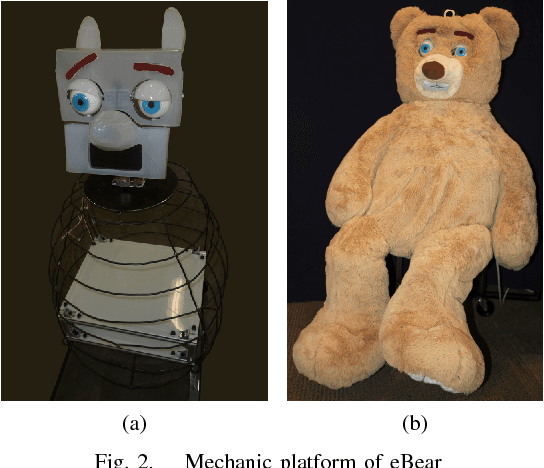

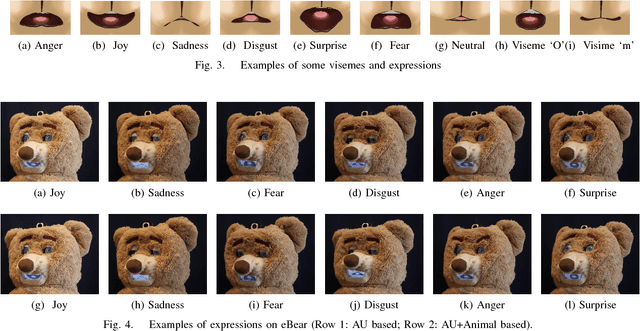

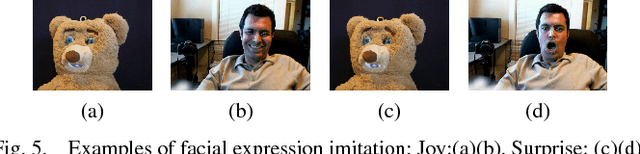

This paper presents an anthropomorphic robotic bear for exploring human-robot interaction, including verbal and non-verbal communication. The robot has a hybrid face composed of a mechanical faceplate with 10 degrees of freedom (DOFs) and a mouth equipped with an LCD display. The bear's facial expressions of emotion are designed based on the Facial Action Coding System (FACS), as well as on several animal-like gestures described by Darwin. The mouth movements are generated by synthesizing emotions together with speech. User acceptance studies were conducted to evaluate the likability of the facial behaviors exhibited by the eBear. Multiple Kernel Learning (MKL) is proposed to fuse different features for recognizing users' facial expressions. Our experimental results show that the developed bear-like robot can perceive basic facial expressions and convey emotions to humans.
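The abstract says MKL is used to fuse different features for expression recognition. In general, MKL fuses feature types at the kernel level: each feature view m gets its own base kernel k_m, and the classifier uses a weighted combination k = Σ β_m k_m with β_m ≥ 0. The sketch below illustrates only that fusion idea under loudly stated assumptions. It is not the paper's method. The two feature views, RBF base kernels, and toy data are hypothetical. The weights come from a kernel-target alignment heuristic rather than a full MKL optimizer, and a simple kernel-sum classifier stands in for an SVM. Labels are binary (one expression vs. the rest) for brevity.

```python
import math

# Hypothetical sketch of kernel-level feature fusion in the spirit of MKL.
# Assumptions (not from the paper): RBF base kernels, one kernel per feature
# view, weights from a kernel-target alignment heuristic, and a simple
# kernel-sum classifier in place of an SVM.


def rbf_kernel(a, b, gamma):
    """Gaussian RBF kernel k(a, b) = exp(-gamma * ||a - b||^2)."""
    sq_dist = sum((x - z) ** 2 for x, z in zip(a, b))
    return math.exp(-gamma * sq_dist)


def gram(xs, gamma):
    """Gram matrix K[i][j] = k(xs[i], xs[j]) for a single feature view."""
    return [[rbf_kernel(a, b, gamma) for b in xs] for a in xs]


def frobenius(A, B):
    """Frobenius inner product <A, B>_F = sum_ij A[i][j] * B[i][j]."""
    return sum(a * b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))


def alignment(K, y):
    """Kernel-target alignment between K and the ideal kernel y y^T, y in {-1, +1}."""
    Y = [[yi * yj for yj in y] for yi in y]
    return frobenius(K, Y) / math.sqrt(frobenius(K, K) * frobenius(Y, Y))


def alignment_weights(kernels, y):
    """Nonnegative kernel weights proportional to alignment, normalized to sum to 1."""
    raw = [max(alignment(K, y), 0.0) for K in kernels]
    total = sum(raw)
    if total == 0.0:
        return [1.0 / len(kernels)] * len(kernels)  # no informative view: use uniform weights
    return [r / total for r in raw]


def combined_kernel(x_views, z_views, betas, gammas):
    """Fused kernel k(x, z) = sum_m beta_m * k_m(x^(m), z^(m)) over feature views m."""
    return sum(
        beta * rbf_kernel(xv, zv, gamma)
        for xv, zv, beta, gamma in zip(x_views, z_views, betas, gammas)
    )


def predict(x_views, train, y, betas, gammas):
    """Kernel-sum classifier: sign of sum_i y_i * k(x, x_i) under the fused kernel."""
    score = sum(yi * combined_kernel(x_views, xi, betas, gammas) for xi, yi in zip(train, y))
    return 1 if score >= 0 else -1


# Hypothetical toy data: each sample is (view_0, view_1). View 0 separates the
# two classes; view 1 is deliberately uninformative. +1 = target expression.
train = [
    ([1.0, 0.9], [0.2]),
    ([0.9, 1.1], [0.8]),
    ([-1.0, -0.8], [0.3]),
    ([-0.9, -1.1], [0.7]),
]
y = [1, 1, -1, -1]
gammas = [1.0, 1.0]

kernels = [gram([sample[m] for sample in train], gammas[m]) for m in range(len(gammas))]
betas = alignment_weights(kernels, y)

print(betas)  # the informative view 0 receives almost all of the weight
print(predict(([0.8, 1.0], [0.5]), train, y, betas, gammas))  # → 1
```

Standard MKL formulations instead optimize the weights β jointly with an SVM trained on the fused kernel. Which formulation, features, and kernels the eBear system actually uses is not stated in this abstract.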
