Stephen Conyers

ExpressionBot: An Emotive Lifelike Robotic Face for Face-to-Face Communication

Nov 20, 2015

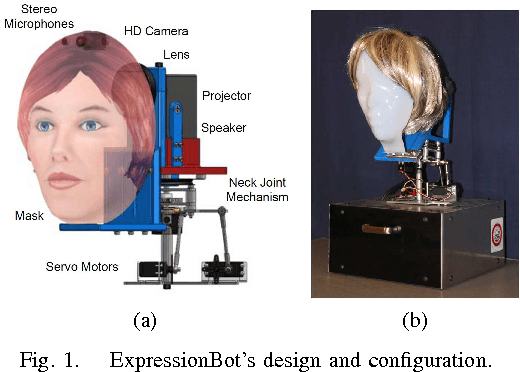

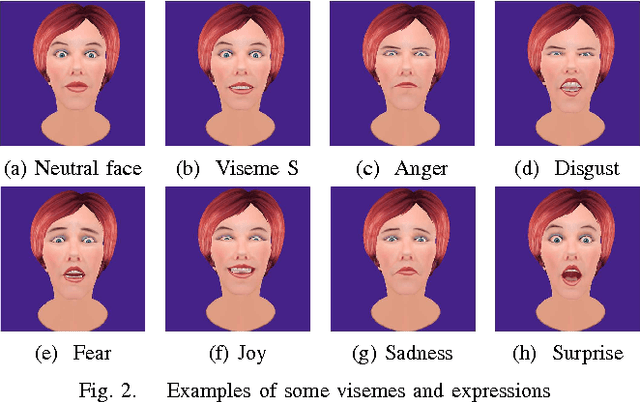

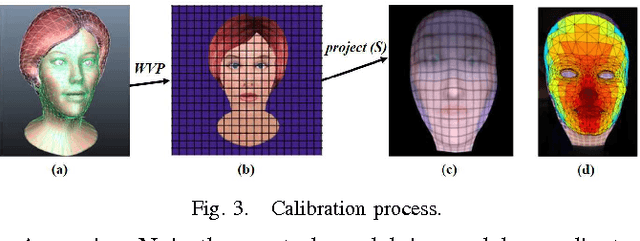

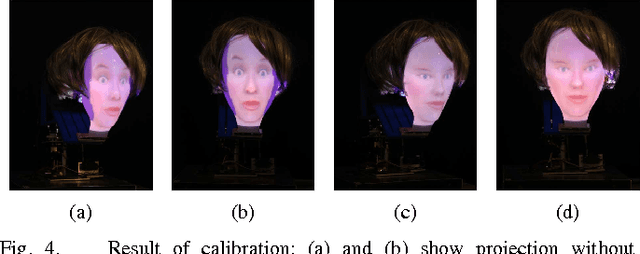

Abstract: This article proposes an emotive, lifelike robotic face, called ExpressionBot, designed to support verbal and non-verbal communication between the robot and humans, with the goal of closely modeling the dynamics of natural face-to-face communication. The proposed robotic head consists of two major components: 1) a hardware component comprising a small projector, a fish-eye lens, a custom-designed mask, and a neck system with 3 degrees of freedom; and 2) a facial animation system, projected onto the robotic mask, that can present facial expressions, realistic eye movement, and accurate visual speech. We present three studies that compare human-robot interaction using the robotic face with human-computer interaction using a screen-based model of the avatar. The studies indicate that the robotic face is well accepted by users, with some advantages in the recognition of facial expressions and in mutual eye gaze contact.
