Hojjat Abdollahi

Artificial Emotional Intelligence in Socially Assistive Robots for Older Adults: A Pilot Study

Jan 26, 2022

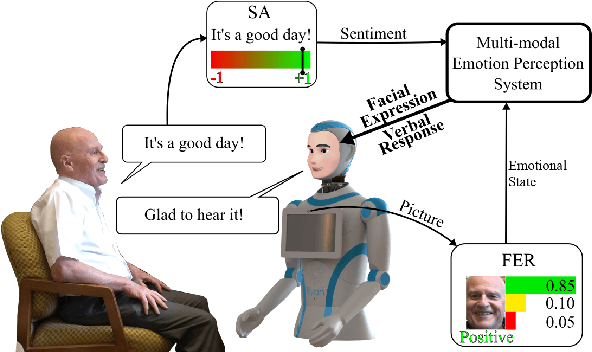

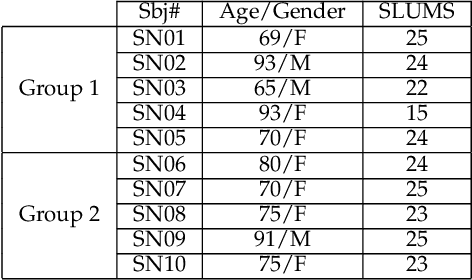

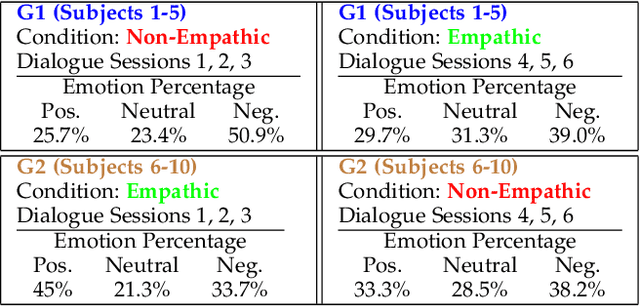

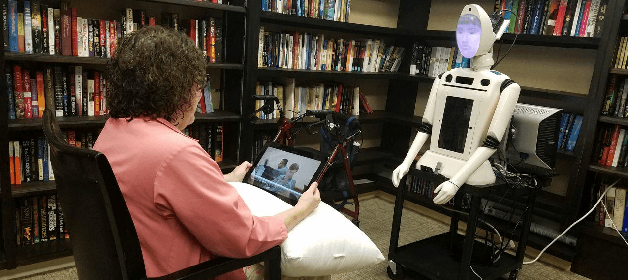

Abstract: This paper presents our recent research on integrating artificial emotional intelligence into a social robot (Ryan) and studies the robot's effectiveness in engaging older adults. Ryan is a socially assistive robot designed to provide companionship for older adults with depression and dementia through conversation. We used two versions of Ryan in our study: empathic and non-empathic. The empathic Ryan uses a multimodal emotion recognition algorithm and a multimodal emotion expression system. Drawing on different input modalities, i.e., facial expression and speech sentiment, the empathic Ryan detects the user's emotional state and uses an affective dialogue manager to generate a response. The non-empathic Ryan, on the other hand, lacks facial expressions and uses scripted dialogues that do not take the user's emotional state into account. We studied both versions of Ryan with 10 older adults living in a senior care facility. The statistically significant improvement in the users' self-reported face-scale mood scores indicates an overall positive effect of interacting with both the empathic and the non-empathic versions of Ryan. However, the number of words spoken by users and the exit survey analysis suggest that users perceive the empathic Ryan as more engaging and likable.
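
The abstract does not specify how the facial-expression and speech-sentiment estimates are combined. As a minimal sketch, assuming each modality outputs a probability distribution over the same (hypothetical) emotion labels and that fusion is a simple weighted average, late fusion followed by a rule-based choice of response style could look like the code below. The names `fuse_emotions`, `choose_response_style`, `EMOTIONS`, and the `face_weight` parameter are illustrative only and are not part of Ryan's actual software.

```python
"""Hypothetical late-fusion sketch for multimodal emotion recognition.

Assumptions (not from the paper): both modalities output a probability
distribution over the same emotion labels, and fusion is a weighted average.
"""
from typing import Dict

# Hypothetical label set; the paper's actual emotion categories may differ.
EMOTIONS = ("negative", "neutral", "positive")


def fuse_emotions(face_probs: Dict[str, float],
                  speech_probs: Dict[str, float],
                  face_weight: float = 0.5) -> Dict[str, float]:
    """Weighted average of two per-modality distributions, renormalized to sum to 1."""
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be in [0, 1]")
    fused = {
        e: face_weight * face_probs.get(e, 0.0)
        + (1.0 - face_weight) * speech_probs.get(e, 0.0)
        for e in EMOTIONS
    }
    total = sum(fused.values())
    if total == 0.0:
        raise ValueError("both modalities assign zero probability to every label")
    return {e: p / total for e, p in fused.items()}


def choose_response_style(fused: Dict[str, float]) -> str:
    """Hypothetical dialogue rule: pick a response style from the dominant emotion."""
    dominant = max(fused, key=fused.get)
    styles = {"negative": "comforting", "neutral": "engaging", "positive": "celebratory"}
    return styles[dominant]


if __name__ == "__main__":
    face = {"negative": 0.6, "neutral": 0.3, "positive": 0.1}    # e.g., from a face model
    speech = {"negative": 0.2, "neutral": 0.5, "positive": 0.3}  # e.g., from sentiment analysis
    fused = fuse_emotions(face, speech, face_weight=0.6)
    print(fused, choose_response_style(fused))
```

Weighting the face channel more heavily (0.6 in the example) is an arbitrary choice; a real system would tune or learn this weight.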

Deep Active Shape Model for Face Alignment and Pose Estimation in Mobile Environment

Mar 11, 2021

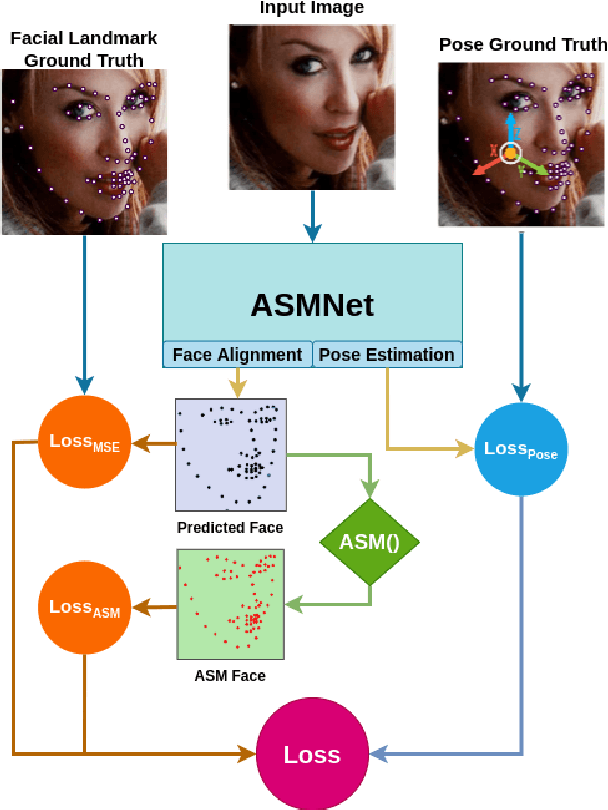

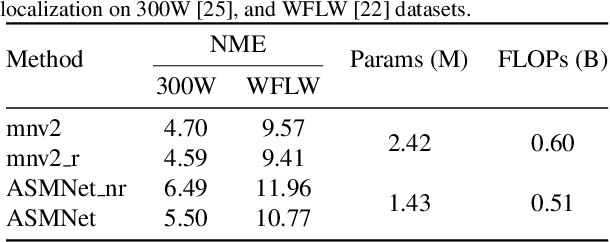

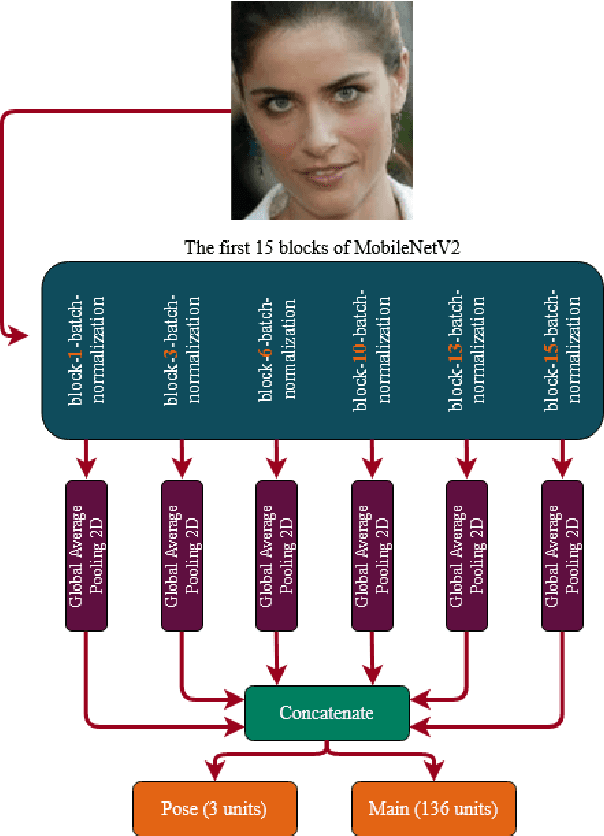

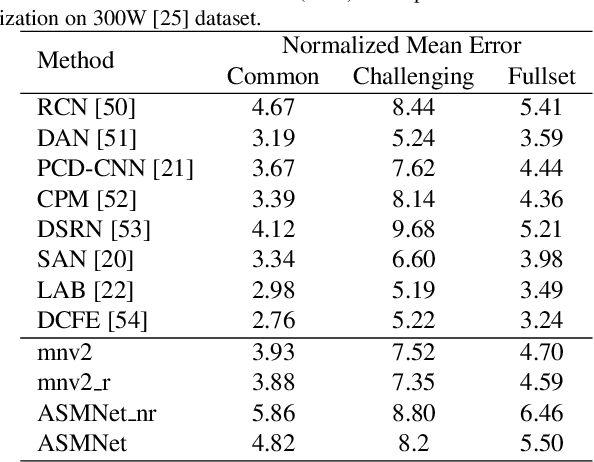

Abstract: The Active Shape Model (ASM) is a statistical model of object shapes that represents a target structure. ASM can guide machine learning algorithms to fit a set of points representing an object (e.g., a face) onto an image. This paper presents a lightweight Convolutional Neural Network (CNN) architecture with an ASM-assisted loss function for face alignment and head pose estimation in the wild. We first use ASM to guide the network toward learning a smoother distribution of the facial landmark points. Then, during training, inspired by transfer learning, we gradually harden the regression problem and lead the network toward learning the original landmark point distribution. Our loss function defines multiple tasks, responsible for detecting facial landmark points as well as estimating face pose. Learning multiple correlated tasks simultaneously builds synergy and improves the performance of the individual tasks. We compare the performance of our proposed CNN, ASMNet, with MobileNetV2 (which is about twice the size of ASMNet) on both face alignment and pose estimation. Experimental results on challenging datasets show that, with the proposed ASM-assisted loss function, ASMNet performs comparably to MobileNetV2 on face alignment and much better than MobileNetV2 on face pose estimation. Overall, ASMNet achieves acceptable performance for facial landmark detection and pose estimation while having significantly fewer parameters and floating-point operations than many proposed CNN-based models.
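
One way to read the ASM-assisted training described in this abstract: build a PCA shape model from the training landmarks, project each ground-truth shape onto its first k principal modes to obtain a smoother target, and increase k during training until the target equals the original landmarks. The NumPy sketch below follows that reading. The linear schedule, the starting number of modes, the `pose_weight` term, and all function names are assumptions for illustration, not ASMNet's published implementation.

```python
import numpy as np


def fit_shape_model(shapes: np.ndarray):
    """Fit a PCA shape model to flattened landmarks of shape (N, 2L).

    Returns the mean shape and all eigenvectors, ordered by decreasing variance.
    """
    mean = shapes.mean(axis=0)
    centered = shapes - mean
    cov = centered.T @ centered / max(len(shapes) - 1, 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return mean, eigvecs[:, order]


def asm_project(shape: np.ndarray, mean: np.ndarray, eigvecs: np.ndarray, k: int) -> np.ndarray:
    """Project one shape (2L,) or a batch (B, 2L) onto the first k shape modes."""
    basis = eigvecs[:, :k]
    return mean + (shape - mean) @ basis @ basis.T


def num_components(epoch: int, total_epochs: int, dim: int, start: int = 2) -> int:
    """Hypothetical linear schedule: `start` modes at epoch 0, all `dim` modes at the end."""
    frac = min(epoch / max(total_epochs - 1, 1), 1.0)
    return int(round(start + frac * (dim - start)))


def multitask_loss(pred_lm, target_lm, pred_pose, target_pose,
                   mean, eigvecs, epoch, total_epochs, pose_weight=1.0) -> float:
    """MSE against the ASM-smoothed landmarks plus a weighted pose MSE term."""
    k = num_components(epoch, total_epochs, target_lm.shape[-1])
    smoothed = asm_project(target_lm, mean, eigvecs, k)
    landmark_loss = np.mean((pred_lm - smoothed) ** 2)
    pose_loss = np.mean((pred_pose - target_pose) ** 2)
    return float(landmark_loss + pose_weight * pose_loss)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    train_shapes = rng.normal(size=(100, 10))  # toy data: 100 shapes, 5 landmarks each
    mean, modes = fit_shape_model(train_shapes)
    for epoch in (0, 5, 9):
        print(epoch, num_components(epoch, 10, 10))
```

Once all modes are kept, the projection returns the original landmarks exactly, so the final epochs train on the unsmoothed targets.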

Delivering Cognitive Behavioral Therapy Using A Conversational Social Robot

Sep 14, 2019

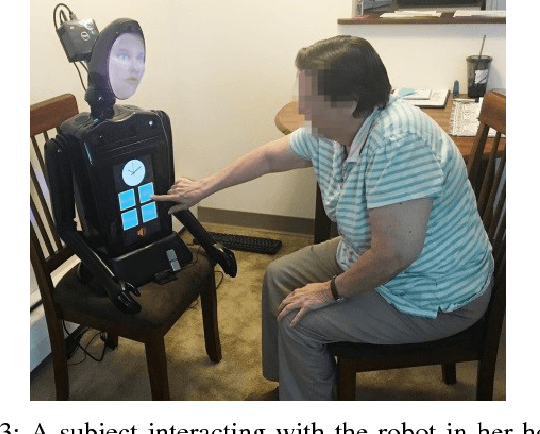

Abstract: Social robots are becoming an integral part of our daily lives due to their ability to provide companionship and entertainment. A subfield of robotics, Socially Assistive Robotics (SAR), is particularly suitable for extending these benefits into healthcare settings because of its unique ability to provide cognitive, social, and emotional support. This paper presents our recent research on developing SAR by evaluating the ability of a life-like conversational social robot, called Ryan, to administer internet-delivered cognitive behavioral therapy (iCBT) to older adults with depression. For Ryan to administer the therapy, we developed a dialogue-management system called Program-R. Using an accredited CBT manual for the treatment of depression, we created seven hour-long iCBT dialogues and integrated them into Program-R using Artificial Intelligence Markup Language (AIML). To assess the effectiveness of robot-based iCBT and how much users liked our approach, we conducted an HRI study with a cohort of elderly people with mild-to-moderate depression over a period of four weeks. Quantitative analyses of participants' spoken responses (e.g., word count and sentiment analysis), face-scale mood scores, and exit surveys strongly support the notion that robot-based iCBT is a viable alternative to traditional human-delivered therapy.
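
Program-R itself is not described in detail here. To illustrate the general idea behind AIML, where each category pairs an input pattern containing `*` wildcards with a response template, here is a minimal Python sketch of a pattern matcher. It is not Program-R or a full AIML interpreter: the category contents are hypothetical, `{star}` stands in for AIML's `<star/>` tag, and categories are checked in list order rather than by AIML's wildcard-priority rules.

```python
import re
from typing import List, Optional, Tuple

# Each category pairs an AIML-style pattern (uppercase words, "*" wildcard)
# with a response template; "{star}" plays the role of AIML's <star/> tag.
Category = Tuple[str, str]

CATEGORIES: List[Category] = [  # hypothetical example content
    ("I FEEL *", "What do you think is making you feel {star}?"),
    ("HELLO", "Hello! Shall we start today's session?"),
    ("*", "Can you tell me more about that?"),  # catch-all, checked last
]


def normalize(text: str) -> str:
    """Uppercase the input and replace punctuation with single spaces."""
    return " ".join(re.sub(r"[^\w\s]", " ", text).upper().split())


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Compile an AIML-style pattern; each "*" matches one or more characters."""
    parts = [r"(.+)" if token == "*" else re.escape(token) for token in pattern.split()]
    return re.compile("^" + " ".join(parts) + "$")


def respond(user_text: str, categories: List[Category] = CATEGORIES) -> Optional[str]:
    """Return the template of the first matching category, filling in the wildcard."""
    text = normalize(user_text)
    for pattern, template in categories:
        match = pattern_to_regex(pattern).match(text)
        if match:
            star = match.group(1).lower() if match.groups() else ""
            return template.format(star=star)
    return None


if __name__ == "__main__":
    print(respond("I feel tired today."))
```

A therapy dialogue would chain many such categories per session; real AIML adds features such as `<that>` context and topic scoping that this sketch omits.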

A Pilot Study on Using an Intelligent Life-like Robot as a Companion for Elderly Individuals with Dementia and Depression

Dec 07, 2017

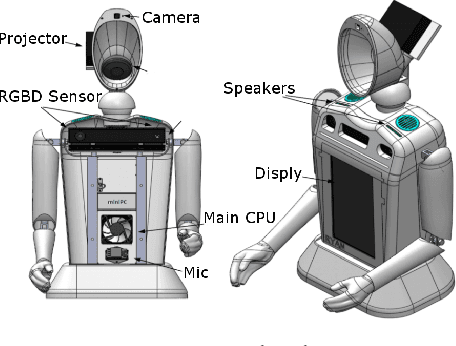

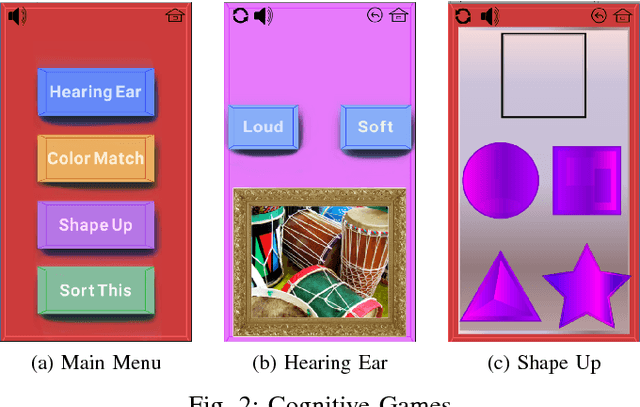

Abstract: This paper presents the design, development, methodology, and results of a pilot study on using an intelligent, emotive, and perceptive social robot (aka Companionbot) to improve the quality of life of elderly people with dementia and/or depression. Ryan, the Companionbot prototyped in this project, is a rear-projected, life-like conversational robot. Ryan is equipped with features that allow it to (1) interpret and respond to users' emotions through facial expressions and spoken language, (2) proactively engage users in conversation, and (3) remind them of their daily schedules (e.g., taking their medicine on time). Ryan also engages users in cognitive games and reminiscence activities. We conducted a pilot study with six elderly individuals with moderate dementia and/or depression living in a senior living facility in Denver. Each individual had 24/7 access to a Ryan in his/her room for a period of 4-6 weeks. Our observations of these individuals, interviews with them and their caregivers, and analyses of their interactions during this period revealed that they established rapport with the robot and greatly valued and enjoyed having a Companionbot in their room.

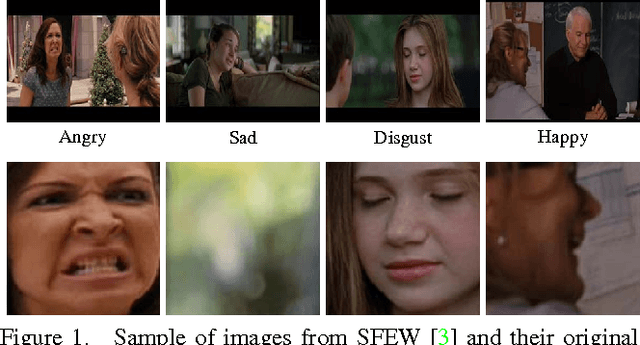

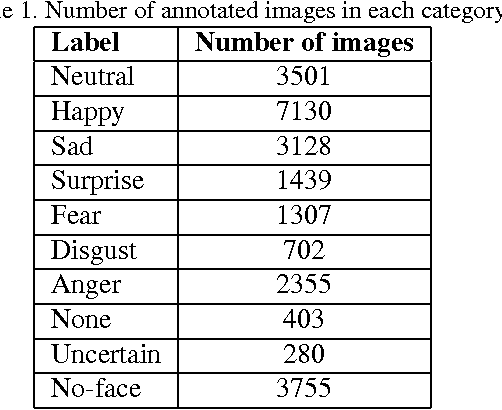

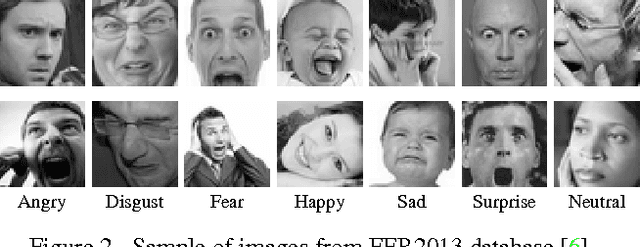

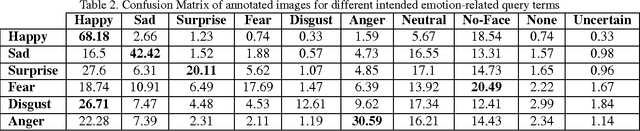

Facial Expression Recognition from World Wild Web

Jan 05, 2017

Abstract: Recognizing facial expressions in the wild has remained a challenging task in computer vision. The World Wide Web is a good source of facial images, most of which are captured in uncontrolled conditions. In fact, the Internet is a World Wild Web of facial images with expressions. This paper presents the results of a new study on collecting, annotating, and analyzing wild facial expressions from the web. Three search engines were queried using 1250 emotion-related keywords in six different languages, and two annotators mapped the retrieved images to the six basic expressions and neutral. Deep neural networks and noise modeling were used in three different training scenarios to determine how accurately facial expressions can be recognized when the networks are trained on noisy images collected from the web using query terms (e.g., happy face, laughing man). The results of our experiments show that deep neural networks can recognize wild facial expressions with an accuracy of 82.12%.
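
The abstract does not say which noise model was used. One common way to train on noisy web labels is to describe label noise with a transition matrix T, where T[i, j] is the probability that an image whose true expression is i receives the observed label j, and to fit the network's clean-label predictions through T. The NumPy sketch below works under that assumption; the seven classes mirror the six basic expressions plus neutral, while `symmetric_transition`, its noise rate, and the other function names are hypothetical.

```python
import numpy as np

NUM_CLASSES = 7  # six basic expressions + neutral


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def symmetric_transition(num_classes: int, noise_rate: float) -> np.ndarray:
    """Hypothetical noise model: a label is kept with probability 1 - noise_rate,
    otherwise flipped uniformly to one of the other classes."""
    t = np.full((num_classes, num_classes), noise_rate / (num_classes - 1))
    np.fill_diagonal(t, 1.0 - noise_rate)
    return t


def noisy_label_nll(logits: np.ndarray, noisy_labels: np.ndarray,
                    transition: np.ndarray) -> float:
    """Mean negative log-likelihood of the observed (noisy) labels.

    transition[i, j] = P(observed label j | true label i); rows sum to 1.
    """
    clean_probs = softmax(logits)           # model's estimate of P(true label | image)
    noisy_probs = clean_probs @ transition  # implied P(observed label | image)
    picked = noisy_probs[np.arange(len(noisy_labels)), noisy_labels]
    return float(-np.mean(np.log(np.clip(picked, 1e-12, None))))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    logits = rng.normal(size=(4, NUM_CLASSES))
    labels = np.array([0, 3, 6, 2])
    print(noisy_label_nll(logits, labels, symmetric_transition(NUM_CLASSES, 0.3)))
```

With the identity matrix as T, this loss reduces to ordinary cross-entropy. At test time the clean-label predictions `softmax(logits)` are used directly.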
