Ahmad Sajedi

Data-to-Model Distillation: Data-Efficient Learning Framework

Nov 19, 2024

Abstract:Dataset distillation aims to distill the knowledge of a large-scale real dataset into small yet informative synthetic data such that a model trained on it performs as well as a model trained on the full dataset. Despite recent progress, existing dataset distillation methods often struggle with computational efficiency, scalability to complex high-resolution datasets, and generalizability to deep architectures. These approaches typically require retraining when the distillation ratio changes, as knowledge is embedded in raw pixels. In this paper, we propose a novel framework called Data-to-Model Distillation (D2M) to distill the real dataset's knowledge into the learnable parameters of a pre-trained generative model by aligning rich representations extracted from real and generated images. The learned generative model can then produce informative training images for different distillation ratios and deep architectures. Extensive experiments on 15 datasets of varying resolutions show D2M's superior performance, re-distillation efficiency, and cross-architecture generalizability. Our method effectively scales up to high-resolution 128x128 ImageNet-1K. Furthermore, we verify D2M's practical benefits for downstream applications in neural architecture search.

Emphasizing Discriminative Features for Dataset Distillation in Complex Scenarios

Oct 22, 2024

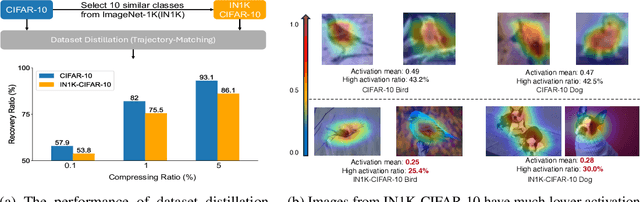

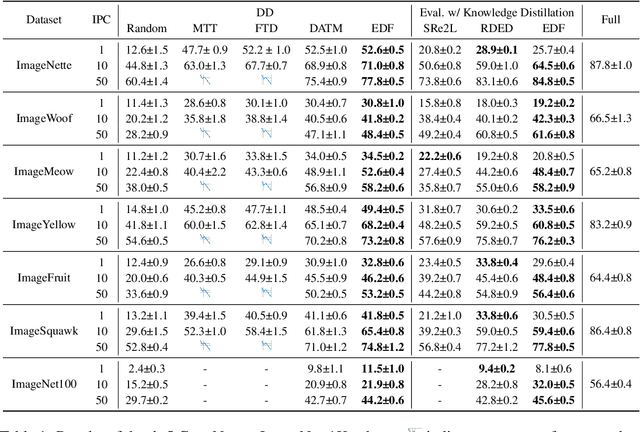

Abstract:Dataset distillation has demonstrated strong performance on simple datasets like CIFAR, MNIST, and TinyImageNet but struggles to achieve similar results in more complex scenarios. In this paper, we propose EDF (Emphasizing Discriminative Features), a dataset distillation method that enhances key discriminative regions in synthetic images using Grad-CAM activation maps. Our approach is inspired by a key observation: in simple datasets, high-activation areas typically occupy most of the image, whereas in complex scenarios, the size of these areas is much smaller. Unlike previous methods that treat all pixels equally when synthesizing images, EDF uses Grad-CAM activation maps to enhance high-activation areas. From a supervision perspective, we downplay supervision signals that have lower losses, as they contain common patterns. Additionally, to help the DD community better explore complex scenarios, we build the Complex Dataset Distillation (Comp-DD) benchmark by meticulously selecting sixteen subsets, eight easy and eight hard, from ImageNet-1K. In particular, EDF consistently outperforms SOTA results in complex scenarios, such as ImageNet-1K subsets. Hopefully, more researchers will be inspired and encouraged to improve the practicality and efficacy of DD. Our code and benchmark will be made public at https://github.com/NUS-HPC-AI-Lab/EDF.

GSTAM: Efficient Graph Distillation with Structural Attention-Matching

Aug 29, 2024

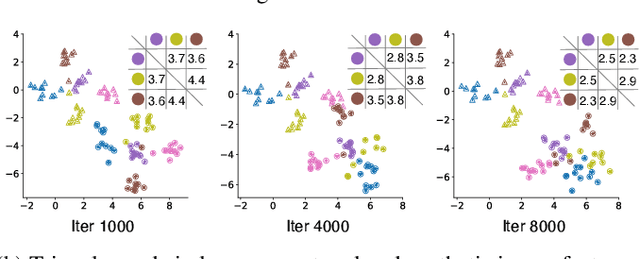

Abstract:Graph distillation has emerged as a solution for reducing large graph datasets to smaller, more manageable, and informative ones. Existing methods primarily target node classification, involve computationally intensive processes, and fail to capture the true distribution of the full graph dataset. To address these issues, we introduce Graph Distillation with Structural Attention Matching (GSTAM), a novel method for condensing graph classification datasets. GSTAM leverages the attention maps of GNNs to distill structural information from the original dataset into synthetic graphs. The structural attention-matching mechanism exploits the areas of the input graph that GNNs prioritize for classification, effectively distilling such information into the synthetic graphs and improving overall distillation performance. Comprehensive experiments demonstrate GSTAM's superiority over existing methods, achieving 0.45% to 6.5% better performance in extreme condensation ratios, highlighting its potential use in advancing distillation for graph classification tasks (Code available at https://github.com/arashrasti96/GSTAM).

ATOM: Attention Mixer for Efficient Dataset Distillation

May 02, 2024

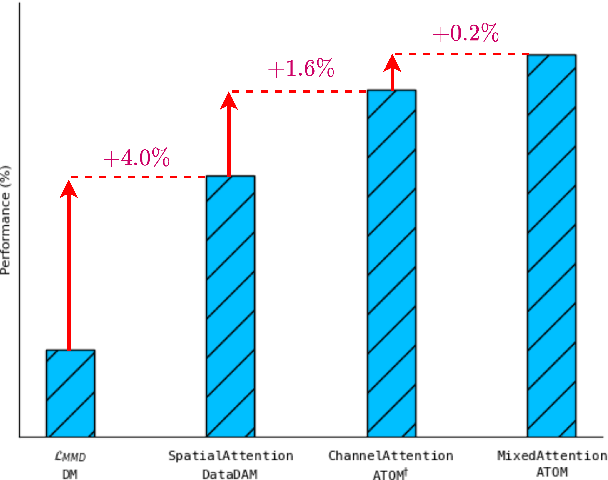

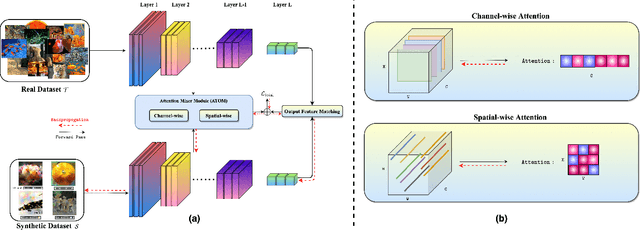

Abstract:Recent works in dataset distillation seek to minimize training expenses by generating a condensed synthetic dataset that encapsulates the information present in a larger real dataset. These approaches ultimately aim to attain test accuracy levels akin to those achieved by models trained on the entirety of the original dataset. Previous studies in feature and distribution matching have achieved significant results without incurring the costs of bi-level optimization in the distillation process. Despite their convincing efficiency, many of these methods suffer from marginal downstream performance improvements, limited distillation of contextual information, and subpar cross-architecture generalization. To address these challenges in dataset distillation, we propose the ATtentiOn Mixer (ATOM) module to efficiently distill large datasets using a mixture of channel and spatial-wise attention in the feature matching process. Spatial-wise attention helps guide the learning process based on consistent localization of classes in their respective images, allowing for distillation from a broader receptive field. Meanwhile, channel-wise attention captures the contextual information associated with the class itself, thus making the synthetic image more informative for training. By integrating both types of attention, our ATOM module demonstrates superior performance across various computer vision datasets, including CIFAR10/100 and TinyImagenet. Notably, our method significantly improves performance in scenarios with a low number of images per class, thereby enhancing its potential. Furthermore, we maintain the improvement in cross-architectures and applications such as neural architecture search.
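The mixture of channel-wise and spatial-wise attention described in this abstract can be illustrated with a small sketch. It is not the authors' implementation: the power `p`, the L2 normalization, the squared-distance matching, and the `channel_weight` mixing parameter are all assumptions made for illustration, and feature maps are plain nested lists shaped `[channel][row][col]`.

```python
from math import sqrt


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to (approximately) unit L2 norm."""
    norm = sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def spatial_attention(fmap, p=2):
    """Collapse channels: one attention value per spatial location."""
    height, width = len(fmap[0]), len(fmap[0][0])
    flat = [
        sum(abs(channel[i][j]) ** p for channel in fmap)
        for i in range(height)
        for j in range(width)
    ]
    return l2_normalize(flat)


def channel_attention(fmap, p=2):
    """Collapse spatial locations: one attention value per channel."""
    flat = [sum(abs(v) ** p for row in channel for v in row) for channel in fmap]
    return l2_normalize(flat)


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mixed_attention_loss(real, synth, channel_weight=0.5):
    """Hypothetical mixing rule: weighted sum of the two matching terms."""
    spatial_term = squared_distance(spatial_attention(real), spatial_attention(synth))
    channel_term = squared_distance(channel_attention(real), channel_attention(synth))
    return (1 - channel_weight) * spatial_term + channel_weight * channel_term


# Two feature maps with identical per-channel energy but different locations.
real = [[[3.0, 0.0]], [[4.0, 0.0]]]
synth = [[[0.0, 3.0]], [[0.0, 4.0]]]
print(mixed_attention_loss(real, synth))
```

In this toy case the channel term is zero because both maps carry the same energy per channel, so only the spatial term contributes. That is the kind of complementary signal the abstract attributes to the two attention types.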

ProbMCL: Simple Probabilistic Contrastive Learning for Multi-label Visual Classification

Jan 02, 2024

Abstract:Multi-label image classification presents a challenging task in many domains, including computer vision and medical imaging. Recent advancements have introduced graph-based and transformer-based methods to improve performance and capture label dependencies. However, these methods often include complex modules that entail heavy computation and lack interpretability. In this paper, we propose Probabilistic Multi-label Contrastive Learning (ProbMCL), a novel framework to address these challenges in multi-label image classification tasks. Our simple yet effective approach employs supervised contrastive learning, in which samples that share enough labels with an anchor image based on a decision threshold are introduced as a positive set. This structure captures label dependencies by pulling positive pair embeddings together and pushing away negative samples that fall below the threshold. We enhance representation learning by incorporating a mixture density network into contrastive learning and generating Gaussian mixture distributions to explore the epistemic uncertainty of the feature encoder. We validate the effectiveness of our framework through experimentation with datasets from the computer vision and medical imaging domains. Our method outperforms the existing state-of-the-art methods while achieving a low computational footprint on both datasets. Visualization analyses also demonstrate that ProbMCL-learned classifiers maintain a meaningful semantic topology.
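The threshold-based positive set described in this abstract can be sketched as follows. The abstract does not say how label sharing is measured, so the Jaccard overlap used here, the default threshold of 0.5, and the function names are assumptions for illustration only.

```python
def label_overlap(labels_a, labels_b):
    """Jaccard overlap between two label sets (an assumed similarity measure)."""
    a, b = set(labels_a), set(labels_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def split_positives(anchor_labels, batch_labels, threshold=0.5):
    """Partition batch indices into positives and negatives for one anchor.

    A sample is a positive when its overlap with the anchor reaches the
    decision threshold; all other samples are treated as negatives.
    """
    positives, negatives = [], []
    for index, labels in enumerate(batch_labels):
        if label_overlap(anchor_labels, labels) >= threshold:
            positives.append(index)
        else:
            negatives.append(index)
    return positives, negatives


anchor = {"cat", "dog"}
batch = [{"cat", "dog"}, {"cat"}, {"car"}, {"cat", "dog", "car"}]
print(split_positives(anchor, batch))
```

A contrastive loss would then pull the anchor embedding toward the positive indices and push it away from the negative ones. That loss and the mixture density network are outside the scope of this sketch.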

DataDAM: Efficient Dataset Distillation with Attention Matching

Sep 29, 2023

Abstract:Researchers have long tried to minimize training costs in deep learning while maintaining strong generalization across diverse datasets. Emerging research on dataset distillation aims to reduce training costs by creating a small synthetic set that contains the information of a larger real dataset and ultimately achieves test accuracy equivalent to a model trained on the whole dataset. Unfortunately, the synthetic data generated by previous methods are not guaranteed to distribute and discriminate as well as the original training data, and they incur significant computational costs. Despite promising results, there still exists a significant performance gap between models trained on condensed synthetic sets and those trained on the whole dataset. In this paper, we address these challenges using efficient Dataset Distillation with Attention Matching (DataDAM), achieving state-of-the-art performance while reducing training costs. Specifically, we learn synthetic images by matching the spatial attention maps of real and synthetic data generated by different layers within a family of randomly initialized neural networks. Our method outperforms the prior methods on several datasets, including CIFAR10/100, TinyImageNet, ImageNet-1K, and subsets of ImageNet-1K across most of the settings, and achieves improvements of up to 6.5% and 4.1% on CIFAR100 and ImageNet-1K, respectively. We also show that our high-quality distilled images have practical benefits for downstream applications, such as continual learning and neural architecture search.

* Accepted at the International Conference on Computer Vision (ICCV) 2023

End-to-End Supervised Multilabel Contrastive Learning

Jul 08, 2023

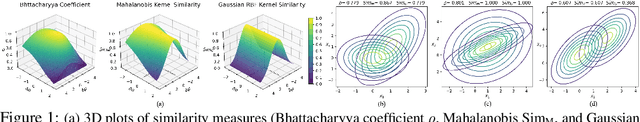

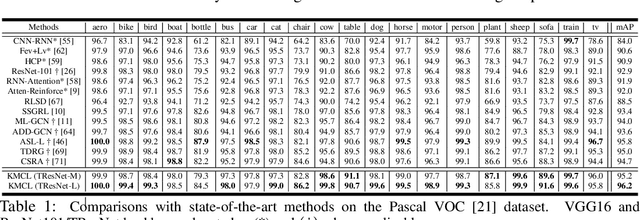

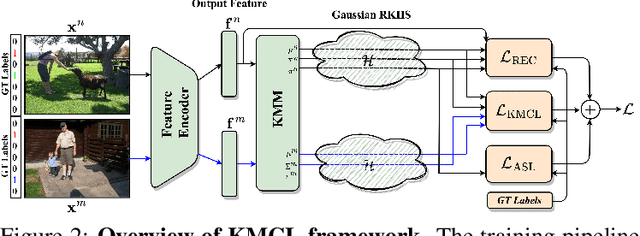

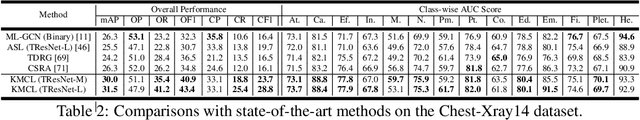

Abstract:Multilabel representation learning is recognized as a challenging problem that can be associated with either label dependencies between object categories or data-related issues such as the inherent imbalance of positive/negative samples. Recent advances address these challenges from model- and data-centric viewpoints. In model-centric approaches, the label correlation is obtained by external model designs (e.g., graph CNN) that incorporate an inductive bias for training. However, they fail to design an end-to-end training framework, leading to high computational complexity. In contrast, data-centric approaches consider the realistic nature of the dataset to improve classification while ignoring the label dependencies. In this paper, we propose a new end-to-end training framework, dubbed KMCL (Kernel-based Multilabel Contrastive Learning), to address the shortcomings of both model- and data-centric designs. KMCL first transforms the embedded features into a mixture of exponential kernels in Gaussian RKHS. It then encodes an objective loss comprising (a) a reconstruction loss to reconstruct the kernel representation, (b) an asymmetric classification loss to address the inherent imbalance problem, and (c) a contrastive loss to capture label correlation. KMCL models the uncertainty of the feature encoder while maintaining a low computational footprint. Extensive experiments are conducted on image classification tasks to showcase the consistent improvements of KMCL over the SOTA methods. A PyTorch implementation is provided at https://github.com/mahdihosseini/KMCL.

A New Probabilistic Distance Metric With Application In Gaussian Mixture Reduction

Jun 12, 2023

Abstract:This paper presents a new distance metric to compare two continuous probability density functions. The main advantage of this metric is that, unlike other statistical measurements, it can provide an analytic, closed-form expression for a mixture of Gaussian distributions while satisfying all metric properties. These characteristics enable fast, stable, and efficient calculations, which are highly desirable in real-world signal processing applications. The application in mind is Gaussian Mixture Reduction (GMR), which is widely used in density estimation, recursive tracking, and belief propagation. To address this problem, we developed a novel algorithm dubbed the Optimization-based Greedy GMR (OGGMR), which employs our metric as a criterion to approximate a high-order Gaussian mixture with a lower order. Experimental results show that the OGGMR algorithm is significantly faster and more efficient than state-of-the-art GMR algorithms while retaining the geometric shape of the original mixture.
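To make the Gaussian Mixture Reduction task concrete, the sketch below reduces a one-dimensional mixture by greedy pairwise merging. It is a generic illustration, not the OGGMR algorithm: the moment-preserving merge is a standard technique, while `placeholder_cost` is a hypothetical stand-in for the paper's distance metric, which the abstract does not define.

```python
from itertools import combinations


def merge(a, b):
    """Moment-preserving merge of two components given as (weight, mean, var)."""
    (w1, m1, v1), (w2, m2, v2) = a, b
    weight = w1 + w2
    mean = (w1 * m1 + w2 * m2) / weight
    second_moment = (w1 * (v1 + m1 ** 2) + w2 * (v2 + m2 ** 2)) / weight
    return weight, mean, second_moment - mean ** 2


def placeholder_cost(a, b):
    """Hypothetical merge cost; the paper would use its own metric here."""
    return a[0] * b[0] / (a[0] + b[0]) * (a[1] - b[1]) ** 2


def greedy_reduce(components, target_order, cost=placeholder_cost):
    """Repeatedly merge the cheapest pair until target_order components remain."""
    comps = list(components)
    while len(comps) > max(target_order, 1):
        i, j = min(
            combinations(range(len(comps)), 2),
            key=lambda pair: cost(comps[pair[0]], comps[pair[1]]),
        )
        merged = merge(comps[i], comps[j])
        comps = [c for k, c in enumerate(comps) if k not in (i, j)] + [merged]
    return comps


mixture = [(0.5, 0.0, 1.0), (0.25, 0.1, 1.0), (0.25, 5.0, 1.0)]
print(greedy_reduce(mixture, target_order=2))
```

The two nearby components around zero are merged first, and the total weight and overall mean of the mixture are preserved by construction.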

Subclass Knowledge Distillation with Known Subclass Labels

Jul 17, 2022

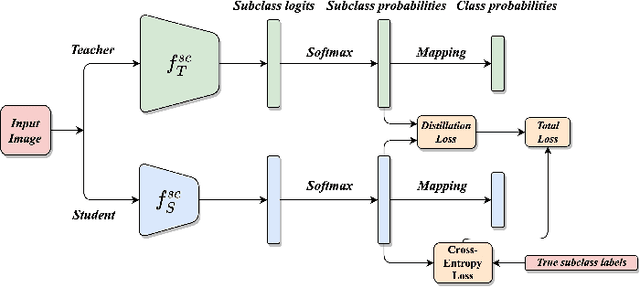

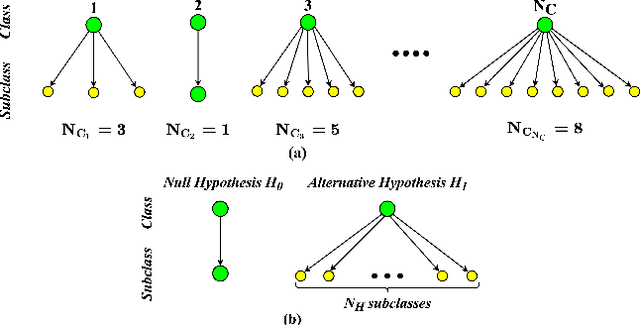

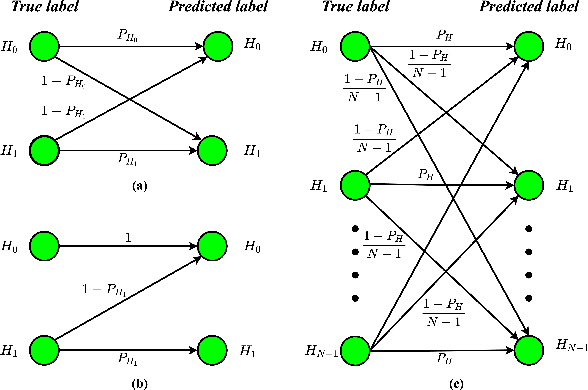

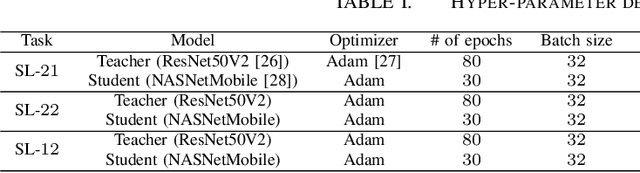

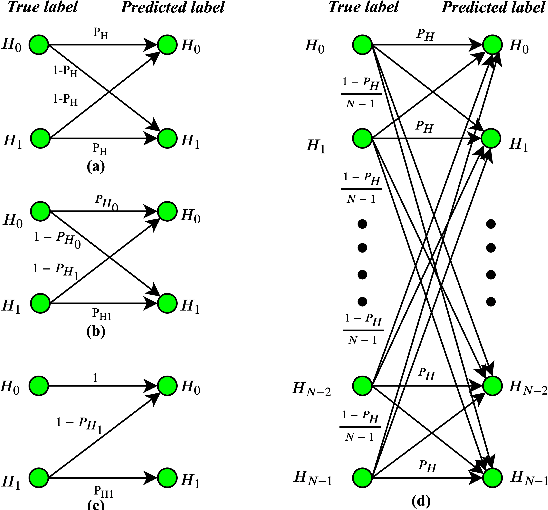

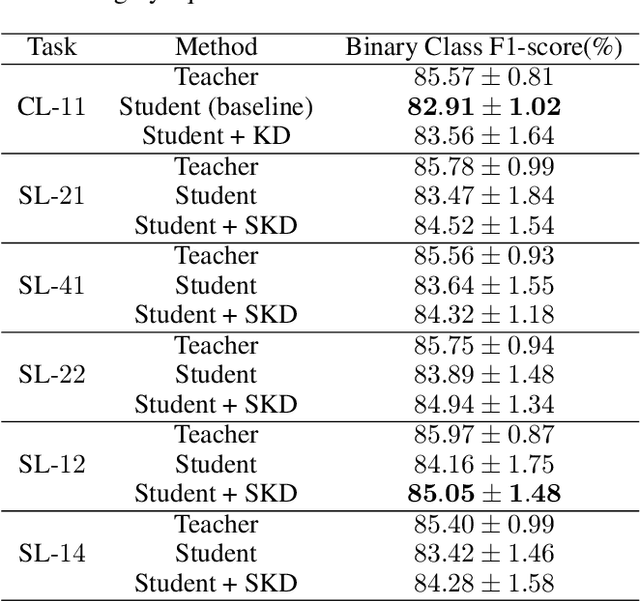

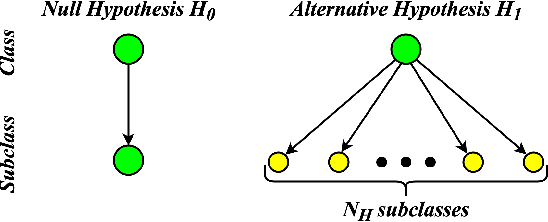

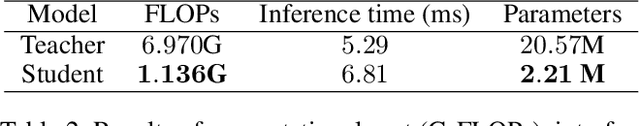

Abstract:This work introduces a novel knowledge distillation framework for classification tasks where information on existing subclasses is available and taken into consideration. In classification tasks with a small number of classes or binary detection, the amount of information transferred from the teacher to the student is restricted, thus limiting the utility of knowledge distillation. Performance can be improved by leveraging information about possible subclasses within the classes. To that end, we propose the so-called Subclass Knowledge Distillation (SKD), a process of transferring the knowledge of predicted subclasses from a teacher to a smaller student. Meaningful information that is not in the teacher's class logits but exists in subclass logits (e.g., similarities within classes) will be conveyed to the student through the SKD, which will then boost the student's performance. Analytically, we measure how much extra information the teacher can provide the student via the SKD to demonstrate the efficacy of our work. The framework developed is evaluated in a clinical application, namely colorectal polyp binary classification. It is a practical problem with two classes and a number of subclasses per class. In this application, clinician-provided annotations are used to define subclasses based on the annotation label's variability in a curriculum style of learning. A lightweight, low-complexity student trained with the SKD framework achieves an F1-score of 85.05%, gains of 1.47% and 2.10% over students trained with and without conventional knowledge distillation, respectively. The 2.10% F1-score gap between students trained with and without the SKD can be explained by the extra subclass knowledge, i.e., the extra 0.4656 label bits per sample that the teacher can transfer in our experiment.
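The idea that subclass logits carry information absent from class logits can be sketched as below. This is a minimal illustration under stated assumptions, not the paper's loss: it uses the conventional temperature-softened cross-entropy from standard knowledge distillation, applied at the subclass level, and the `subclass_to_class` mapping, the temperature of 4.0, and the example logits are hypothetical.

```python
from math import exp, log


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def class_probs(subclass_probs, subclass_to_class, num_classes):
    """Sum subclass probabilities into their parent classes."""
    out = [0.0] * num_classes
    for prob, cls in zip(subclass_probs, subclass_to_class):
        out[cls] += prob
    return out


def subclass_distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """Cross-entropy between softened teacher and student subclass distributions."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return -sum(t * log(s) for t, s in zip(teacher, student))


# Binary task with two hypothetical subclasses per class.
subclass_to_class = [0, 0, 1, 1]
teacher_logits = [2.0, 1.5, -1.0, -3.0]
student_logits = [1.0, 1.0, 0.0, -1.0]

print(class_probs(softmax(teacher_logits), subclass_to_class, num_classes=2))
print(subclass_distillation_loss(teacher_logits, student_logits))
```

The class-level output collapses the two subclasses of each class into a single probability. The subclass-level loss still sees how the teacher splits probability mass within a class, which is the extra signal the abstract refers to.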

On the Efficiency of Subclass Knowledge Distillation in Classification Tasks

Sep 12, 2021

Abstract:This work introduces a novel knowledge distillation framework for classification tasks where information on existing subclasses is available and taken into consideration. In classification tasks with a small number of classes or binary detection (two classes), the amount of information transferred from the teacher to the student network is restricted, thus limiting the utility of knowledge distillation. Performance can be improved by leveraging information about possible subclasses within the available classes in the classification task. To that end, we propose the so-called Subclass Knowledge Distillation (SKD) framework, which is the process of transferring the subclasses' prediction knowledge from a large teacher model into a smaller student one. Through SKD, additional meaningful information which is not in the teacher's class logits but exists in subclasses (e.g., similarities inside classes) will be conveyed to the student and boost its performance. Mathematically, we measure how many extra information bits the teacher can provide for the student via the SKD framework. The framework developed is evaluated in a clinical application, namely colorectal polyp binary classification. In this application, clinician-provided annotations are used to define subclasses based on the annotation label's variability in a curriculum style of learning. A lightweight, low-complexity student trained with the proposed framework achieves an F1-score of 85.05%, gains of 2.14% and 1.49% over students trained without and with conventional knowledge distillation, respectively. These results show that the extra subclasses' knowledge (i.e., 0.4656 label bits per training sample in our experiment) can provide more information about the teacher's generalization, and therefore SKD can benefit from using more information to increase the student's performance.
